Learning PyTorch (Part 1): Linear Model, Gradient Descent, Backpropagation

Original source: http://bit.ly/PyTorchZeroAll

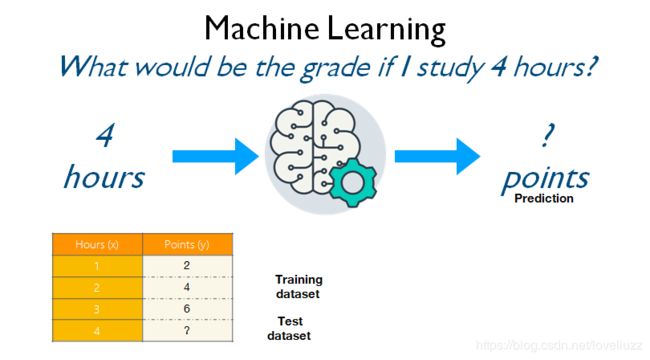

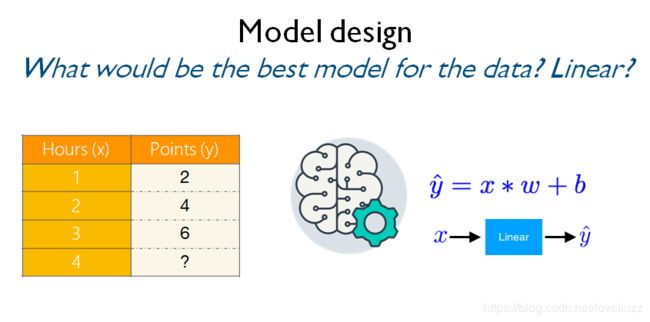

I. Linear Model

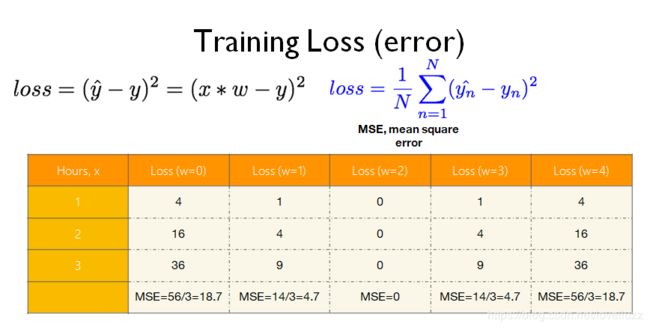

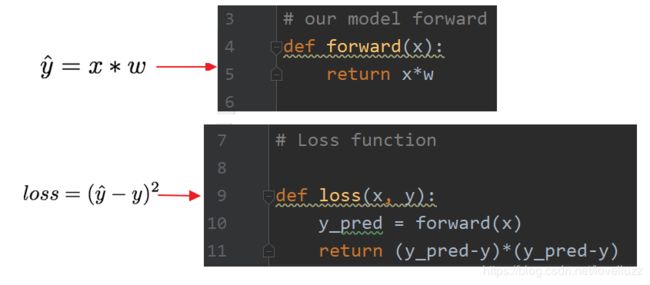

1. Building the model and the loss function

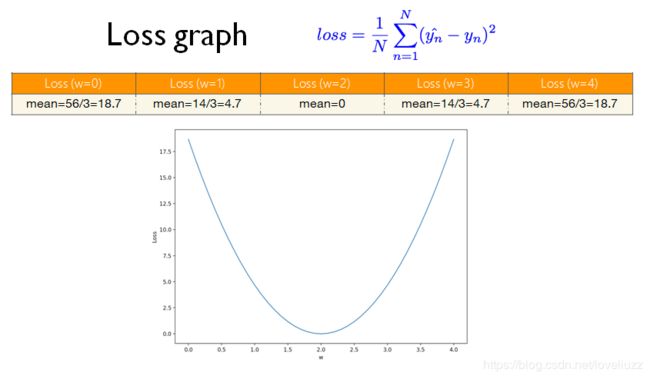

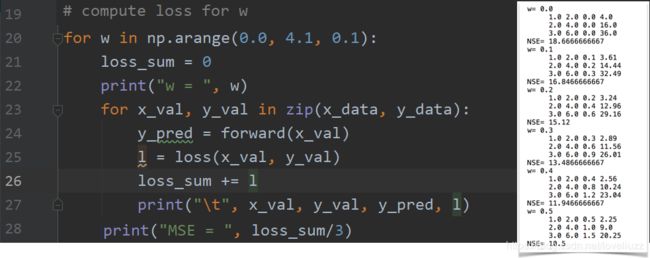
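Written out as formulas, the model and loss built in this step are as follows. The symbols ŷ (prediction) and N (number of training samples) are notation introduced here for illustration; the per-sample loss matches the `loss` function in the code below, and the MSE is what the code averages over the samples.

$$
\hat{y} = x \cdot w, \qquad
\text{loss}(\hat{y}, y) = (\hat{y} - y)^2, \qquad
\text{MSE}(w) = \frac{1}{N}\sum_{n=1}^{N} (x_n w - y_n)^2
$$

With the three training samples used throughout this post (x = 1, 2, 3 and y = 2, 4, 6), N = 3, and since every y_n equals 2x_n, MSE(w) reaches its minimum of 0 at w = 2.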

2. Computing the loss for each weight w

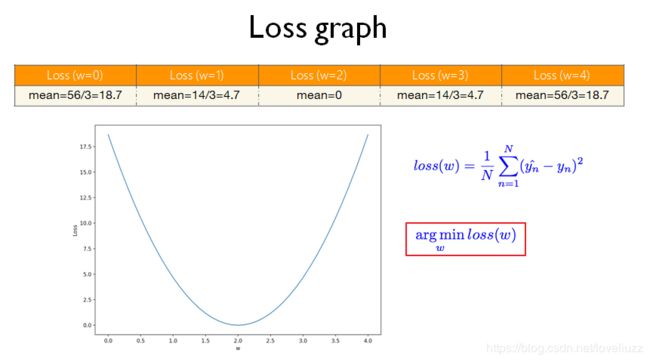
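As a worked check of this step, the MSE for a single candidate w can also be computed in one vectorized expression instead of a Python loop over the samples. This is a minimal sketch assuming NumPy arrays; the helper name `mse` is hypothetical and not part of the original code.

```python
import numpy as np

# Same training data as in the complete code below
x_data = np.array([1.0, 2.0, 3.0])
y_data = np.array([2.0, 4.0, 6.0])

def mse(w, x, y):
    """Mean squared error of the linear model y_pred = x * w (hypothetical helper)."""
    y_pred = x * w                     # forward pass for all samples at once
    return np.mean((y_pred - y) ** 2)  # average of the per-sample squared errors

for w in (1.0, 2.0, 3.0):
    print("w =", w, "MSE =", mse(w, x_data, y_data))
```

For w = 1.0 the per-sample losses are 1, 4 and 9, so the MSE is 14/3 (about 4.67); w = 3.0 gives the same value because the errors are mirrored, and w = 2.0 gives exactly 0.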

3. Plotting MSE against w
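The curve is simply the MSE evaluated on a grid of w values. The sketch below, assuming NumPy, computes the whole curve at once with broadcasting instead of the nested loops used in the complete code, and locates its lowest point; the two resulting arrays could be passed to `plt.plot` in the same way as `w_list` and `mse_list`.

```python
import numpy as np

x_data = np.array([1.0, 2.0, 3.0])
y_data = np.array([2.0, 4.0, 6.0])

# Same grid as the complete code: w = 0.0, 0.1, ..., 4.0 (41 values)
w_grid = np.arange(0.0, 4.1, 0.1)

# Broadcasting: (41, 1) * (3,) -> (41, 3), one row of predictions per candidate w
y_pred = w_grid[:, None] * x_data
mse_curve = np.mean((y_pred - y_data) ** 2, axis=1)  # shape (41,)

best = np.argmin(mse_curve)
print("w with the lowest MSE:", round(w_grid[best], 2), "MSE:", mse_curve[best])
```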

4. Complete code

```python
import matplotlib.pyplot as plt
import numpy as np

x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

# our model forward (uses the global w set by the loop below)
def forward(x):
    return x * w

# Loss function: squared error for one sample
def loss(x, y):
    y_pred = forward(x)
    return (y_pred - y) * (y_pred - y)

# compute loss for w
w_list = []
mse_list = []

for w in np.arange(0.0, 4.1, 0.1):
    loss_sum = 0
    print("w = ", w)
    for x_val, y_val in zip(x_data, y_data):
        y_pred = forward(x_val)
        l = loss(x_val, y_val)
        loss_sum += l
        print("\t", x_val, y_val, y_pred, l)
    # MSE = average loss over all samples
    print("MSE = ", loss_sum / len(x_data))
    w_list.append(w)
    mse_list.append(loss_sum / len(x_data))

plt.plot(w_list, mse_list)
plt.xlabel("w")
plt.ylabel("loss")
plt.show()
```

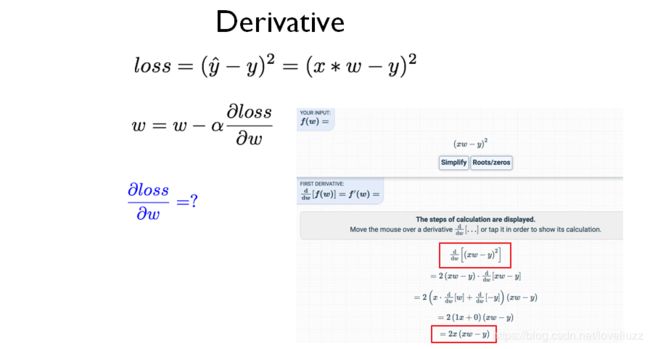

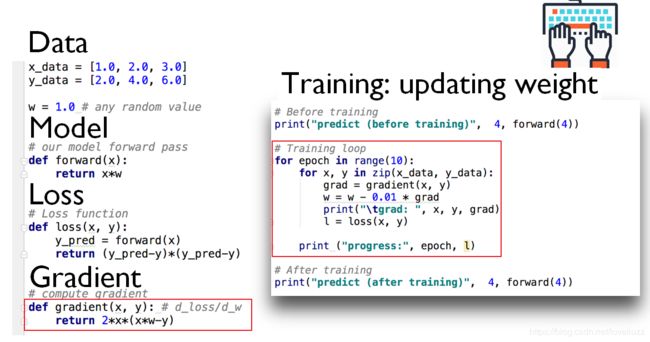
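The plot shows the minimum at w = 2. For this one-parameter model without a bias term, the minimum can also be found analytically: setting dMSE/dw = (2/N) Σ x_n(x_n w - y_n) to zero gives w* = Σ x_n y_n / Σ x_n². A small pure-Python check of that formula on the same data:

```python
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

# Closed-form least-squares solution for y_pred = x * w (no bias term):
# d(MSE)/dw = (2/N) * sum(x * (x*w - y)) = 0  =>  w* = sum(x*y) / sum(x*x)
sum_xy = sum(x * y for x, y in zip(x_data, y_data))  # 2 + 8 + 18 = 28
sum_xx = sum(x * x for x in x_data)                   # 1 + 4 + 9 = 14
w_star = sum_xy / sum_xx
print("w* =", w_star)  # prints "w* = 2.0"
```

Gradient descent, covered next, approaches the same value iteratively instead of solving for it directly.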

II. Gradient Descent

```python
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

w = 1.0  # any random value

# our model forward
def forward(x):
    return x * w

# Loss function
def loss(x, y):
    y_pred = forward(x)
    return (y_pred - y) * (y_pred - y)
```

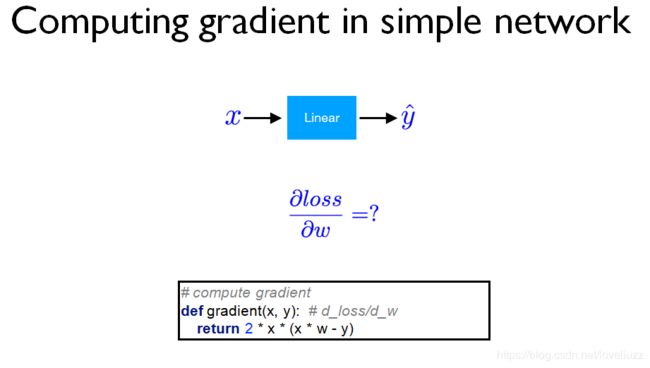
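The `gradient` function in the code follows from differentiating the per-sample loss with respect to w, and the training loop then applies the standard gradient-descent update with learning rate α = 0.01 (the constant 0.01 in the code):

$$
\frac{\partial\,\text{loss}}{\partial w}
= \frac{\partial}{\partial w}(x w - y)^2
= 2x\,(x w - y),
\qquad
w \leftarrow w - \alpha\,\frac{\partial\,\text{loss}}{\partial w}
$$

For the first sample (x = 1, y = 2) with the initial w = 1.0, this gives a gradient of 2 · 1 · (1 - 2) = -2, so w moves to 1 - 0.01 · (-2) = 1.02.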

# compute gradient

def gradient(x, y):

return 2*x*(x*w - y)

# before training

print("predict (before training )", 4, forward(4))

# traning loop

for epoch in range(10):

for x, y in zip(x_data, y_data):

grad = gradient(x, y)

w = w - 0.01 * grad

print("\tgrad: ", x, y, grad)

l = loss(x, y)

print("", epoch, l)

# After traning

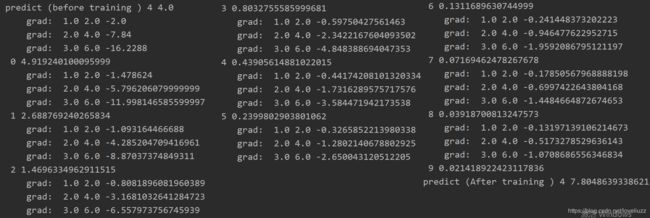

print("predict (After training )", 4, forward(4))运行结果:

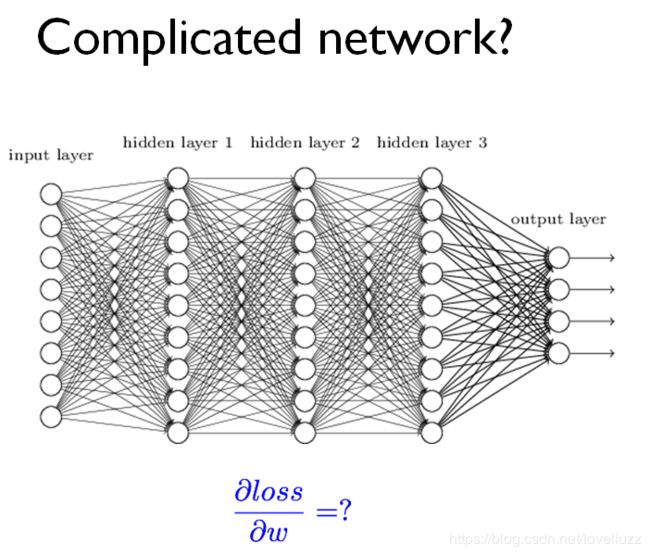

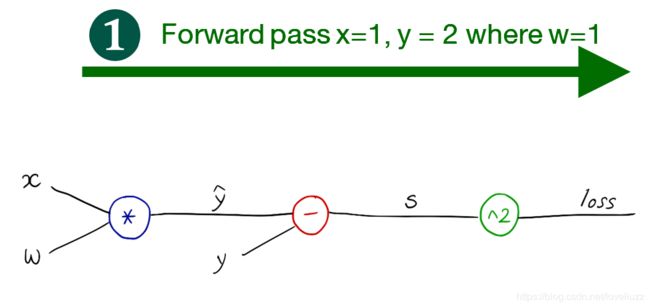
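The training loop above updates w after every individual sample, which makes it stochastic (per-sample) gradient descent. For comparison, here is a minimal full-batch variant. This is a different technique, not the original code: it averages the gradient over all three samples and makes one update per pass. The learning rate and the number of epochs are assumptions chosen for illustration, and the helper name `mse_gradient` is hypothetical.

```python
x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

w = 1.0     # same starting value as the per-sample loop
lr = 0.01   # assumed learning rate (same as the per-sample loop)

def mse_gradient(w):
    """d(MSE)/dw averaged over all samples: (1/N) * sum(2x(xw - y))."""
    grads = [2 * x * (x * w - y) for x, y in zip(x_data, y_data)]
    return sum(grads) / len(grads)

for epoch in range(100):
    w = w - lr * mse_gradient(w)   # one update per pass over the data

print("w after full-batch training:", w)
print("predict (after training)", 4, 4 * w)
```

On this data the per-sample loop shrinks the error w - 2 faster per epoch (three updates per pass instead of one), which is why the full-batch sketch is given more epochs.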

III. Backpropagation

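```python
import torch

x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]

# w is a trainable tensor; requires_grad=True tells autograd to track it.
# (torch.autograd.Variable is deprecated since PyTorch 0.4; a plain tensor does the same job.)
w = torch.tensor([1.0], requires_grad=True)

# our model forward
def forward(x):
    return x * w

# Loss function
def loss(x, y):
    y_pred = forward(x)
    return (y_pred - y) * (y_pred - y)
```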

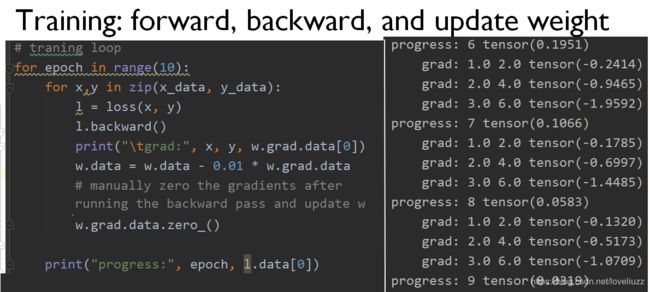
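Backpropagation applies the chain rule backwards through the computational graph of the loss. For one sample, that graph is y_pred = x * w, then s = y_pred - y, then loss = s * s. The sketch below, in plain Python with intermediate names (`s`, `dloss_ds`, and so on) introduced for illustration, runs the forward pass and then multiplies the local gradients from the loss back to w; the result matches the hand-derived 2x(xw - y) used in the gradient-descent section.

```python
def forward_backward(x, y, w):
    """Forward and backward pass for loss = (x*w - y)^2 on one sample."""
    # Forward pass: compute each intermediate node of the graph
    y_pred = x * w        # multiply node
    s = y_pred - y        # subtract node
    loss = s * s          # square node

    # Backward pass: apply the chain rule from the loss back to w
    dloss_ds = 2 * s              # d(s*s)/ds
    ds_dypred = 1.0               # d(y_pred - y)/dy_pred
    dypred_dw = x                 # d(x*w)/dw
    dloss_dw = dloss_ds * ds_dypred * dypred_dw
    return loss, dloss_dw

loss, grad = forward_backward(x=1.0, y=2.0, w=1.0)
print("loss:", loss, "grad:", grad)                         # loss: 1.0 grad: -2.0
print("analytic 2x(xw - y):", 2 * 1.0 * (1.0 * 1.0 - 2.0))  # -2.0
```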

```python
# training loop
for epoch in range(10):
    for x, y in zip(x_data, y_data):
        l = loss(x, y)
        l.backward()  # compute d(l)/dw and store it in w.grad
        print("\tgrad:", x, y, w.grad.item())

        # update w without recording this step in the autograd graph
        with torch.no_grad():
            w -= 0.01 * w.grad

        # manually zero the gradients after running the backward pass and updating w
        w.grad.zero_()

    print("progress:", epoch, l.item())
```
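Calling `backward()` fills `w.grad` automatically, and PyTorch adds each new gradient to whatever is already stored in `.grad`, which is why the loop zeroes it with `zero_()` after every update. The toy sketch below imitates that behavior in pure Python. The `Scalar` class and its methods are hypothetical, written only for this illustration, and far simpler than PyTorch's autograd: each operation records its inputs together with the local gradient, and `backward()` walks those records in reverse, accumulating with `+=`.

```python
class Scalar:
    """Toy reverse-mode autodiff value (hypothetical, for illustration only)."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        # parents: pairs of (input Scalar, local gradient d(self)/d(input))
        self.parents = parents

    def __mul__(self, other):
        other = other if isinstance(other, Scalar) else Scalar(other)
        return Scalar(self.value * other.value,
                      ((self, other.value), (other, self.value)))

    __rmul__ = __mul__  # lets "float * Scalar" work too

    def __sub__(self, other):
        other = other if isinstance(other, Scalar) else Scalar(other)
        return Scalar(self.value - other.value, ((self, 1.0), (other, -1.0)))

    def backward(self, upstream=1.0):
        # Accumulate (+=) like PyTorch, then push the gradient to the inputs
        self.grad += upstream
        for parent, local_grad in self.parents:
            parent.backward(upstream * local_grad)


x_data = [1.0, 2.0, 3.0]
y_data = [2.0, 4.0, 6.0]
w = Scalar(1.0)

def loss(x, y):
    y_pred = x * w
    return (y_pred - y) * (y_pred - y)

for epoch in range(10):
    for x, y in zip(x_data, y_data):
        l = loss(x, y)
        l.backward()                        # fills w.grad
        print("\tgrad:", x, y, w.grad)
        w.value = w.value - 0.01 * w.grad   # gradient-descent update
        w.grad = 0.0                        # reset, like zero_() in PyTorch
    print("progress:", epoch, l.value)
```

Calling `backward()` twice on the same loss without resetting `grad` doubles the stored gradient, which is exactly the accumulation that the `zero_()` call guards against in the PyTorch loop.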