Implementing the LeNet-5 Network in Keras

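Preface

I want to properly understand a range of models, and I recently learned Keras, so on a whim I tried to reproduce the classic LeNet model as a learning exercise.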

Paper: Gradient-Based Learning Applied to Document Recognition

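Model structure

First, the structure of the model has to be clear, including the input size, the kernel sizes, and the strides. Here all activation functions are tanh, except for the last layer, which uses softmax.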

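- The input is a 32x32 grayscale image, so input_shape=(32,32,1);

- The first layer has 6 convolution kernels of size 5x5, no padding;

- The second layer is a 2x2 MaxPooling layer with stride 2;

- The third layer is a convolution layer with 16 kernels of size 5x5, no padding;

- The fourth layer is a 2x2 MaxPooling layer with stride 2;

- The fifth layer is a Flatten followed by a Dense layer with 120 units;

- The sixth layer is a Dense layer with 84 units;

- The seventh layer is a Dense layer whose activation is softmax.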
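The feature-map sizes implied by the list above can be checked with a little arithmetic. The following is a minimal sketch, assuming every convolution and pooling layer is unpadded ("valid"); the helper names `conv_out` and `lenet_feature_sizes` are made up for this illustration.

```python
def conv_out(size, kernel, stride=1):
    """Output side length of an unpadded ('valid') convolution or pooling layer."""
    return (size - kernel) // stride + 1


def lenet_feature_sizes(input_size):
    """Side length of each feature map, plus the length of the flattened vector."""
    sizes = {"input": input_size}
    s = conv_out(input_size, 5)        # layer 1: 5x5 convolution, stride 1
    sizes["conv1"] = s
    s = conv_out(s, 2, stride=2)       # layer 2: 2x2 pooling, stride 2
    sizes["pool1"] = s
    s = conv_out(s, 5)                 # layer 3: 5x5 convolution, stride 1
    sizes["conv2"] = s
    s = conv_out(s, 2, stride=2)       # layer 4: 2x2 pooling, stride 2
    sizes["pool2"] = s
    sizes["flatten"] = s * s * 16      # 16 feature maps feed the Flatten layer
    return sizes


print(lenet_feature_sizes(32))
# {'input': 32, 'conv1': 28, 'pool1': 14, 'conv2': 10, 'pool2': 5, 'flatten': 400}
```

With a 28x28 input the same function gives 24, 12, 8, 4, and a flattened length of 256.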

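In the original paper, the second layer (pooling) and the third layer (convolution) are only partially connected. This article ignores that and connects them fully.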
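To get a feel for what this simplification costs, the parameter counts of the two variants can be compared. This is a rough sketch, assuming the connection scheme described in the paper (6 output maps each see 3 input maps, 9 see 4, and 1 sees all 6); `conv_params` is a hypothetical helper name.

```python
KERNEL_WEIGHTS = 5 * 5  # weights in one 5x5 kernel


def conv_params(inputs_per_map):
    """Trainable parameters: one kernel per connected input map, plus one bias per output map."""
    return sum(n * KERNEL_WEIGHTS + 1 for n in inputs_per_map)


# Partial connection as described in the paper (assumed here): 6 maps x 3 inputs,
# 9 maps x 4 inputs, 1 map x 6 inputs
partial = conv_params([3] * 6 + [4] * 9 + [6])

# Full connection, as in this article: each of the 16 maps sees all 6 inputs
full = conv_params([6] * 16)

print(partial, full)  # 1516 2416
```

The fully connected figure, 2416, is the number that `model.summary()` should report for a `Conv2D(16, (5,5))` layer applied to 6 input channels.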

You can also draw the network diagram on this site: http://alexlenail.me/NN-SVG/LeNet.html

Here is the diagram I drew:

Code

Since I use the MNIST dataset, whose images have shape (28,28,1), the model I actually built takes 28x28 inputs rather than 32x32.

Now for the code:
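This article feeds the 28x28 images in directly. One alternative, not used here, would be to zero-pad each image to 32x32 so that it matches the input size listed earlier. A minimal sketch with NumPy follows; `pad_to_32` is a hypothetical helper, and the batch is dummy data rather than real MNIST.

```python
import numpy as np


def pad_to_32(images):
    """Zero-pad a batch of 28x28 images to 32x32 (2 pixels on every side)."""
    # Pad widths per axis: batch axis untouched, height and width padded by 2 + 2
    return np.pad(images, ((0, 0), (2, 2), (2, 2)), mode="constant")


batch = np.ones((4, 28, 28), dtype=np.uint8)  # dummy stand-in for MNIST images
padded = pad_to_32(batch)
print(padded.shape)  # (4, 32, 32)
```

With padded inputs, the model would need input_shape=(32,32,1) and a matching reshape to (-1, 32, 32, 1).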

import tensorflow as tf

from tensorflow.keras.optimizers import SGD

# Use the MNIST dataset built into Keras

mnist = tf.keras.datasets.mnist

(training_images, training_labels), (test_images, test_labels) = mnist.load_data()

# Preprocess the data: add a channel axis and scale pixel values to [0, 1]

training_images = training_images.reshape(-1, 28, 28, 1)

test_images = test_images.reshape(-1, 28, 28, 1)

training_images, test_images = training_images / 255.0, test_images / 255.0

# The labels stay as integer class ids (not one-hot), which is what sparse_categorical_crossentropy expects

# Define the LeNet model

model = tf.keras.models.Sequential([

tf.keras.layers.Conv2D(6, (5,5), activation='tanh', input_shape=(28,28,1)),

tf.keras.layers.MaxPooling2D(2,2),

tf.keras.layers.Conv2D(16, (5,5), activation='tanh'),

tf.keras.layers.MaxPooling2D(2,2),

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(120, activation='tanh'),

tf.keras.layers.Dense(84, activation='tanh'),

tf.keras.layers.Dense(10, activation='softmax')

])

model.summary()

# SGD with Nesterov momentum. Recent Keras versions take learning_rate instead of lr
# and no longer accept the decay argument, so the original decay=1e-6 is dropped here.

model.compile(loss='sparse_categorical_crossentropy',

optimizer=SGD(learning_rate=0.05, momentum=0.9, nesterov=True),

metrics=['acc'])

# fit(x=None, y=None, batch_size=None, epochs=1, verbose=1, callbacks=None, validation_split=0.0, validation_data=None, shuffle=True, class_weight=None, sample_weight=None, initial_epoch=0, steps_per_epoch=None, validation_steps=None, validation_freq=1)

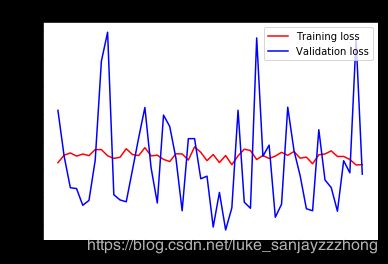

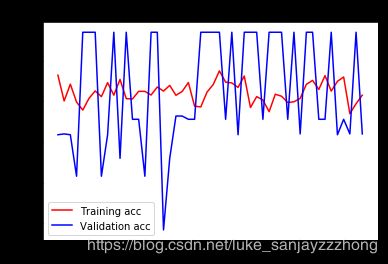

history = model.fit(training_images, training_labels, batch_size=32, epochs=50, verbose=1, shuffle=True, validation_data=(test_images, test_labels))

import matplotlib.pyplot as plt

loss = history.history['loss']

val_loss = history.history['val_loss']

acc = history.history['acc']

val_acc = history.history['val_acc']

epochs = range(len(acc))

plt.plot(epochs, loss, 'r', label="Training loss")

plt.plot(epochs, val_loss, 'b', label="Validation loss")

plt.title("Loss")

plt.legend()

plt.figure()

plt.plot(epochs, acc, 'r', label="Training acc")

plt.plot(epochs, val_acc, 'b', label="Validation acc")

plt.title("Acc")

plt.legend()

plt.show()
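Besides reading the curves by eye, it can help to pull the best epoch out of the history dictionary. The sketch below works on any dictionary shaped like `history.history`; `summarize_history` is a hypothetical helper, and the numbers are made up for illustration, not results from this article.

```python
def summarize_history(history_dict, metric="val_acc"):
    """Return (best_epoch, best_value) for a metric, counting epochs from 1."""
    values = history_dict[metric]
    # Index of the highest value; ties resolve to the earliest epoch
    best_index = max(range(len(values)), key=values.__getitem__)
    return best_index + 1, values[best_index]


# Made-up numbers for illustration only
example = {"acc": [0.90, 0.95, 0.97], "val_acc": [0.91, 0.96, 0.95]}
print(summarize_history(example))  # (2, 0.96)
```

After training, it could be called as `summarize_history(history.history)`.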

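Results

My guess is that the results come out this way because both the activation function and the optimizer are fairly ordinary choices.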