Summary

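This post describes how to quickly build a Kubernetes (k8s) test cluster with Vagrant, and the pitfalls I ran into along the way. It is adapted from the official blog post https://kubernetes.io/blog/2019/03/15/kubernetes-setup-using-ansible-and-vagrant/ with some changes; notably, the network add-on is flannel instead of calico. Basic familiarity with Vagrant, Kubernetes and Ansible is assumed, and the configuration files and scripts are not explained in detail.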

Install Vagrant and VirtualBox

https://www.vagrantup.com/downloads.html

https://www.virtualbox.org/wiki/Downloads

Download the installer for your operating system from these pages and install it.

Create the Vagrantfile

Create a directory anywhere to serve as the Vagrant working directory, and create a file named Vagrantfile in it:

```ruby
IMAGE_NAME = "bento/ubuntu-16.04"
N = 2  # number of worker (minion) nodes

Vagrant.configure("2") do |config|
  config.ssh.insert_key = false

  # Resources for every VM
  config.vm.provider "virtualbox" do |v|
    v.memory = 1024
    v.cpus = 2
  end

  # Master node on the private (host-only) network
  config.vm.define "k8s-master" do |master|
    master.vm.box = IMAGE_NAME
    master.vm.network "private_network", ip: "192.168.50.10"
    master.vm.hostname = "k8s-master"
    master.vm.provision "ansible" do |ansible|
      ansible.playbook = "setup/master-playbook.yml"
      ansible.extra_vars = {
        node_ip: "192.168.50.10",
      }
    end
  end

  # Worker nodes: node-1 -> 192.168.50.11, node-2 -> 192.168.50.12, ...
  (1..N).each do |i|
    config.vm.define "node-#{i}" do |node|
      node.vm.box = IMAGE_NAME
      node.vm.network "private_network", ip: "192.168.50.#{i + 10}"
      node.vm.hostname = "node-#{i}"
      node.vm.provision "ansible" do |ansible|
        ansible.playbook = "setup/node-playbook.yml"
        ansible.extra_vars = {
          node_ip: "192.168.50.#{i + 10}",
        }
      end
    end
  end
end
```
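The Vagrantfile derives every worker's hostname and private IP from its index. The same arithmetic as a plain Ruby function can be handy for checking which addresses a given `N` will use before running `vagrant up`. `cluster_layout` is a hypothetical helper for illustration, not something Vagrant provides:

```ruby
# Hypothetical helper, not part of Vagrant: mirrors the naming/IP scheme
# of the Vagrantfile (master on .10, workers on .11, .12, ...).
MASTER_IP = "192.168.50.10".freeze

def cluster_layout(n)
  # "192.168.50.#{i + 10}" must stay a valid host address (at most .254)
  raise ArgumentError, "N must be between 0 and 244" unless (0..244).cover?(n)

  layout = { "k8s-master" => MASTER_IP }
  (1..n).each { |i| layout["node-#{i}"] = "192.168.50.#{i + 10}" }
  layout
end

cluster_layout(2).each { |name, ip| puts "#{name}  #{ip}" }
```

With `N = 2` this prints k8s-master, node-1 and node-2 with 192.168.50.10, .11 and .12. Going beyond 244 workers would push the last octet past .254, which is why the sketch rejects it.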

Create the Ansible playbooks that set up Kubernetes

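This step needs unrestricted internet access (from mainland China you will need a proxy), since the packages are pulled from Docker's and Google's repositories.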

Create a setup directory inside the Vagrant working directory, then change into it.

Create the master node playbook: master-playbook.yml

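This playbook installs Docker, kubelet, kubeadm, kubectl and the other components, and writes the kubeadm join command to a join-command file in the Vagrant working directory. If a step fails, you can run it by hand by following the playbook.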

```yaml
---
- hosts: all
  become: true
  tasks:
    - name: Install packages that allow apt to be used over HTTPS
      apt:
        name: "{{ packages }}"
        state: present
        update_cache: yes
      vars:
        packages:
          - apt-transport-https
          - ca-certificates
          - curl
          - gnupg-agent
          - software-properties-common

    - name: Add an apt signing key for Docker
      apt_key:
        url: https://download.docker.com/linux/ubuntu/gpg
        state: present

    - name: Add apt repository for stable version
      apt_repository:
        repo: deb [arch=amd64] https://download.docker.com/linux/ubuntu xenial stable
        state: present

    - name: Install docker and its dependencies
      apt:
        name: "{{ packages }}"
        state: present
        update_cache: yes
      vars:
        packages:
          - docker-ce
          - docker-ce-cli
          - containerd.io
      notify:
        - docker status

    - name: Add vagrant user to docker group
      user:
        name: vagrant
        group: docker

    - name: Remove swapfile from /etc/fstab
      mount:
        name: "{{ item }}"
        fstype: swap
        state: absent
      with_items:
        - swap
        - none

    - name: Disable swap
      command: swapoff -a
      when: ansible_swaptotal_mb > 0

    - name: Add an apt signing key for Kubernetes
      apt_key:
        url: https://packages.cloud.google.com/apt/doc/apt-key.gpg
        state: present

    - name: Adding apt repository for Kubernetes
      apt_repository:
        repo: deb https://apt.kubernetes.io/ kubernetes-xenial main
        state: present
        filename: kubernetes.list

    - name: Install Kubernetes binaries
      apt:
        name: "{{ packages }}"
        state: present
        update_cache: yes
      vars:
        packages:
          - kubelet
          - kubeadm
          - kubectl

    - name: touch kubelet config file
      file:
        path: /etc/default/kubelet
        state: touch

    - name: Configure node ip
      lineinfile:
        path: /etc/default/kubelet
        line: KUBELET_EXTRA_ARGS=--node-ip={{ node_ip }}

    - name: Restart kubelet
      service:
        name: kubelet
        daemon_reload: yes
        state: restarted

    - name: Initialize the Kubernetes cluster using kubeadm
      command: kubeadm init --apiserver-advertise-address="192.168.50.10" --apiserver-cert-extra-sans="192.168.50.10" --node-name k8s-master --pod-network-cidr=10.244.0.0/16

    - name: Setup kubeconfig for vagrant user
      command: "{{ item }}"
      with_items:
        - mkdir -p /home/vagrant/.kube
        - cp -i /etc/kubernetes/admin.conf /home/vagrant/.kube/config
        - chown vagrant:vagrant /home/vagrant/.kube/config

    # kube-flannel.yml uses 10.244.0.0/16 as its Pod network by default,
    # which is why kubeadm init above passes --pod-network-cidr=10.244.0.0/16
    - name: Install flannel pod network
      become: false
      command: kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

    - name: Generate join command
      command: kubeadm token create --print-join-command
      register: join_command

    - name: Copy join command to local file
      local_action: copy content="{{ join_command.stdout_lines[0] }}" dest="./join-command"

  handlers:
    - name: docker status
      service: name=docker state=started
```
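The master playbook hands the join command to the workers through the join-command file, and the node playbook runs it without checking it. A small Ruby pre-flight check can catch an empty or truncated file before the workers are provisioned. It assumes the usual output format of `kubeadm token create --print-join-command` (`kubeadm join <host:port> --token <token> --discovery-token-ca-cert-hash sha256:<hex>`), and `parse_join_command` is a hypothetical helper, not part of kubeadm or Ansible:

```ruby
require "shellwords"

# Hypothetical pre-flight check (not part of kubeadm/Ansible). Assumes the
# file holds one line in the form printed by
#   kubeadm token create --print-join-command
TOKEN_RE   = /\A[a-z0-9]{6}\.[a-z0-9]{16}\z/ # bootstrap token: 6 chars "." 16 chars
CA_HASH_RE = /\Asha256:[0-9a-f]{64}\z/       # hash of the cluster CA public key

# Value following a flag such as "--token", or nil if the flag is absent.
def option_value(words, flag)
  i = words.index(flag)
  i && words[i + 1]
end

def parse_join_command(line)
  words = Shellwords.split(line.strip)
  raise ArgumentError, "not a 'kubeadm join' command" unless words[0, 2] == %w[kubeadm join]

  endpoint = words[2]
  token    = option_value(words, "--token")
  ca_hash  = option_value(words, "--discovery-token-ca-cert-hash")

  raise ArgumentError, "missing API server endpoint" unless endpoint&.include?(":")
  raise ArgumentError, "bad token: #{token.inspect}" unless token&.match?(TOKEN_RE)
  raise ArgumentError, "bad CA hash: #{ca_hash.inspect}" unless ca_hash&.match?(CA_HASH_RE)

  { endpoint: endpoint, token: token, ca_hash: ca_hash }
end

# Only runs when executed directly and the file exists in the current directory.
if __FILE__ == $PROGRAM_NAME && File.exist?("join-command")
  p parse_join_command(File.read("join-command"))
end
```

Run it from the Vagrant working directory after the master has been provisioned (for example as `ruby check_join.rb`, a hypothetical file name). It raises `ArgumentError` instead of letting a broken file reach `sh /tmp/join-command.sh` on the workers.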

Create the playbook for the minion nodes: node-playbook.yml (the name the Vagrantfile refers to)

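Likewise, this playbook installs Docker and the Kubernetes components on each minion node, then runs the join command generated on the master to add the node to the cluster. If a step goes wrong, fix it by hand following the playbook.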

```yaml
---
- hosts: all
  become: true
  tasks:
    - name: Install packages that allow apt to be used over HTTPS
      apt:
        name: "{{ packages }}"
        state: present
        update_cache: yes
      vars:
        packages:
          - apt-transport-https
          - ca-certificates
          - curl
          - gnupg-agent
          - software-properties-common

    - name: Add an apt signing key for Docker
      apt_key:
        url: https://download.docker.com/linux/ubuntu/gpg
        state: present

    - name: Add apt repository for stable version
      apt_repository:
        repo: deb [arch=amd64] https://download.docker.com/linux/ubuntu xenial stable
        state: present

    - name: Install docker and its dependencies
      apt:
        name: "{{ packages }}"
        state: present
        update_cache: yes
      vars:
        packages:
          - docker-ce
          - docker-ce-cli
          - containerd.io
      notify:
        - docker status

    - name: Add vagrant user to docker group
      user:
        name: vagrant
        group: docker

    - name: Remove swapfile from /etc/fstab
      mount:
        name: "{{ item }}"
        fstype: swap
        state: absent
      with_items:
        - swap
        - none

    - name: Disable swap
      command: swapoff -a
      when: ansible_swaptotal_mb > 0

    - name: Add an apt signing key for Kubernetes
      apt_key:
        url: https://packages.cloud.google.com/apt/doc/apt-key.gpg
        state: present

    - name: Adding apt repository for Kubernetes
      apt_repository:
        repo: deb https://apt.kubernetes.io/ kubernetes-xenial main
        state: present
        filename: kubernetes.list

    - name: Install Kubernetes binaries
      apt:
        name: "{{ packages }}"
        state: present
        update_cache: yes
      vars:
        packages:
          - kubelet
          - kubeadm
          - kubectl

    - name: touch kubelet config file
      file:
        path: /etc/default/kubelet
        state: touch

    - name: Configure node ip
      lineinfile:
        path: /etc/default/kubelet
        line: KUBELET_EXTRA_ARGS=--node-ip={{ node_ip }}

    - name: Restart kubelet
      service:
        name: kubelet
        daemon_reload: yes
        state: restarted

    # join-command was written by master-playbook.yml
    - name: Copy the join command to server location
      copy: src=join-command dest=/tmp/join-command.sh mode=0777

    - name: Join the node to cluster
      command: sh /tmp/join-command.sh

  handlers:
    - name: docker status
      service: name=docker state=started
```

Start the cluster

```shell
vagrant up --provider=virtualbox
```

As defined in the Vagrantfile, this creates a cluster with one master and two minions, all running Ubuntu 16.04.

If the box downloads slowly, you can try downloading it manually from the official catalog:

https://app.vagrantup.com/boxes/search

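or download a .box file from a mirror in China. The mirrors carry incomplete version information, so finding a suitable box (it also needs Python installed) takes some patient trial and error. Mirror: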

https://mirrors.tuna.tsinghua.edu.cn/ubuntu-cloud-images/

Once downloaded, add the box locally with vagrant box add, then run vagrant up again:

```shell
vagrant box add ~/Download/virtualbox.box --name bento/ubuntu-16.04
```

If everything went well, you now have a k8s cluster you can use for testing.

Verify the cluster

```
vagrant ssh k8s-master
vagrant@k8s-master:~$ kubectl get nodes -o wide
NAME         STATUS   ROLES    AGE     VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION      CONTAINER-RUNTIME
k8s-master   Ready    master   2d23h   v1.15.2   192.168.50.10   <none>        Ubuntu 16.04.6 LTS   4.4.0-150-generic   docker://19.3.1
node-1       Ready    <none>   2d21h   v1.15.2   192.168.50.11   <none>        Ubuntu 16.04.6 LTS   4.4.0-150-generic   docker://19.3.1
node-2       Ready    <none>   2d21h   v1.15.2   10.0.2.15       <none>        Ubuntu 16.04.6 LTS   4.4.0-150-generic   docker://19.3.1
```

The minion nodes have joined the cluster.
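The listing also shows node-2 reporting 10.0.2.15, its NAT address, as INTERNAL-IP instead of 192.168.50.12. A quick Ruby check against the JSON form of the node list (`kubectl get nodes -o json`) can flag any node whose InternalIP is outside the host-only network. This is a sketch: `nodes_off_cluster_net` and the file names below are hypothetical, and the network is assumed to be the 192.168.50.0/24 from the Vagrantfile:

```ruby
require "json"
require "ipaddr"

# Host-only network from the Vagrantfile (assumption: 192.168.50.0/24).
CLUSTER_NET = IPAddr.new("192.168.50.0/24")

# Returns [[name, ip], ...] for nodes whose InternalIP is outside CLUSTER_NET.
# Input: the JSON printed by `kubectl get nodes -o json`.
# Nodes without an InternalIP entry are skipped.
def nodes_off_cluster_net(nodes_json)
  JSON.parse(nodes_json).fetch("items").map do |node|
    name = node.dig("metadata", "name")
    addresses = node.dig("status", "addresses") || []
    internal = addresses.find { |a| a["type"] == "InternalIP" }
    next if internal.nil?

    ip = internal["address"]
    [name, ip] unless CLUSTER_NET.include?(IPAddr.new(ip))
  end.compact
end

if __FILE__ == $PROGRAM_NAME && ARGV[0]
  nodes_off_cluster_net(File.read(ARGV[0])).each do |name, ip|
    puts "#{name}: InternalIP #{ip} is not on #{CLUSTER_NET}/24"
  end
end
```

Usage: `kubectl get nodes -o json > nodes.json`, then `ruby check_nodes.rb nodes.json`. For the cluster above it should report only node-2.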

```
vagrant@k8s-master:~$ alias ksys="kubectl -n kube-system"
vagrant@k8s-master:~$ ksys get pods -o wide
NAME                                 READY   STATUS    RESTARTS   AGE     IP              NODE         NOMINATED NODE   READINESS GATES
coredns-5c98db65d4-565hf             1/1     Running   0          3d1h    10.244.0.3      k8s-master   <none>           <none>
coredns-5c98db65d4-gtflf             1/1     Running   0          3d1h    10.244.0.2      k8s-master   <none>           <none>
etcd-k8s-master                      1/1     Running   0          3d1h    192.168.50.10   k8s-master   <none>           <none>
kube-apiserver-k8s-master            1/1     Running   0          3d1h    192.168.50.10   k8s-master   <none>           <none>
kube-controller-manager-k8s-master   1/1     Running   0          3d1h    192.168.50.10   k8s-master   <none>           <none>
kube-flannel-ds-amd64-fbpqj          1/1     Running   0          2d23h   192.168.50.10   k8s-master   <none>           <none>
kube-flannel-ds-amd64-jbtt6          1/1     Running   0          2d23h   192.168.50.11   node-1       <none>           <none>
kube-flannel-ds-amd64-rjvqg          1/1     Running   0          2d23h   10.0.2.15       node-2       <none>           <none>
kube-proxy-hw977                     1/1     Running   0          3d1h    192.168.50.10   k8s-master   <none>           <none>
kube-proxy-p9z84                     1/1     Running   0          2d23h   10.0.2.15       node-2       <none>           <none>
kube-proxy-vg6ms                     1/1     Running   0          2d23h   192.168.50.11   node-1       <none>           <none>
```

flannel and the other components are up and running.
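flannel (`kube-flannel-ds-amd64`) and `kube-proxy` run as DaemonSets, so a healthy cluster has exactly one Pod of each on every node, which is what the listing shows. The same check can be scripted in Ruby by reading `kubectl -n kube-system get pods -o json` and grouping Pods by their owning DaemonSet and `spec.nodeName`. `missing_daemonset_pods` is a hypothetical helper, and the node list is assumed to be the three VMs from the Vagrantfile:

```ruby
require "json"

# Count Pods per [DaemonSet name, node name], using each Pod's ownerReferences.
def daemonset_pod_counts(pods_json)
  counts = Hash.new(0)
  JSON.parse(pods_json).fetch("items").each do |pod|
    owners = pod.dig("metadata", "ownerReferences") || []
    ds = owners.find { |o| o["kind"] == "DaemonSet" }
    next if ds.nil? # e.g. coredns is owned by a ReplicaSet

    counts[[ds["name"], pod.dig("spec", "nodeName")]] += 1
  end
  counts
end

# [daemonset, node] pairs that do not have exactly one Pod.
# Only DaemonSets with at least one Pod in the input are considered.
def missing_daemonset_pods(pods_json, node_names)
  counts = daemonset_pod_counts(pods_json)
  daemonsets = counts.keys.map(&:first).uniq
  daemonsets.product(node_names).reject { |pair| counts[pair] == 1 }
end

if __FILE__ == $PROGRAM_NAME && ARGV[0]
  nodes = %w[k8s-master node-1 node-2] # assumption: the VMs from the Vagrantfile
  missing_daemonset_pods(File.read(ARGV[0]), nodes).each do |ds, node|
    puts "#{ds}: expected exactly one Pod on #{node}"
  end
end
```

A DaemonSet that deliberately does not tolerate the master taint would be reported for k8s-master too; flannel and kube-proxy do run there, as the listing shows.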

Verify Pod networking

The master playbook above sets the Pod network CIDR to 10.244.0.0/16.

Start three Pods:

```shell
kubectl run -i -t busybox --image=busybox
kubectl run -i -t busybox2 --image=busybox
kubectl run -i -t busybox3 --image=busybox
```

List the Pods:

```
vagrant@k8s-master:~$ kubectl get po -o wide
NAME                        READY   STATUS    RESTARTS   AGE     IP           NODE     NOMINATED NODE   READINESS GATES
busybox-767bf65c49-mzm9v    1/1     Running   1          2d23h   10.244.1.2   node-1   <none>           <none>
busybox2-868b98bf99-bf6sz   1/1     Running   0          2d23h   10.244.2.2   node-2   <none>           <none>
busybox3-7f44486c85-jk7kh   1/1     Running   1          2d23h   10.244.1.3   node-1   <none>           <none>
```

All three Pods are running and have been assigned IPs from the expected Pod network.
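The `--pod-network-cidr=10.244.0.0/16` range is split into one /24 per node, and each Pod should get an address from its own node's slice: 10.244.1.0/24 for node-1 and 10.244.2.0/24 for node-2, matching the cni0 addresses in the interface table further down. A Ruby sketch of that check using the standard `IPAddr` class, applied to the three Pods above. The subnet map is hard-coded here as an assumption (on a live cluster it is each node's `spec.podCIDR`), and `pod_ip_problems` is a hypothetical helper:

```ruby
require "ipaddr"

POD_NETWORK = IPAddr.new("10.244.0.0/16") # --pod-network-cidr in master-playbook.yml

# Per-node Pod subnets (assumption: copied from this cluster's cni0 addresses;
# on a live cluster read them from each node's spec.podCIDR).
NODE_SUBNETS = {
  "k8s-master" => IPAddr.new("10.244.0.0/24"),
  "node-1"     => IPAddr.new("10.244.1.0/24"),
  "node-2"     => IPAddr.new("10.244.2.0/24")
}.freeze

# pods: [[pod_name, pod_ip, node_name], ...] -> list of human-readable problems
def pod_ip_problems(pods)
  pods.each_with_object([]) do |(name, ip, node), problems|
    addr = IPAddr.new(ip)
    subnet = NODE_SUBNETS[node]
    if !POD_NETWORK.include?(addr)
      problems << "#{name}: #{ip} is outside the Pod network"
    elsif subnet.nil?
      problems << "#{name}: no known Pod subnet for node #{node}"
    elsif !subnet.include?(addr)
      problems << "#{name}: #{ip} is not in #{node}'s Pod subnet"
    end
  end
end

# The three busybox Pods from `kubectl get po -o wide` above.
pods = [
  ["busybox-767bf65c49-mzm9v", "10.244.1.2", "node-1"],
  ["busybox2-868b98bf99-bf6sz", "10.244.2.2", "node-2"],
  ["busybox3-7f44486c85-jk7kh", "10.244.1.3", "node-1"]
]
problems = pod_ip_problems(pods)
puts problems.empty? ? "all Pod IPs are in their node's subnet" : problems
```

For the Pods above every address falls inside its node's /24, so IP allocation is not where the cross-node problem comes from.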

Pod-to-Pod communication on the same node

```
vagrant@k8s-master:~$ kubectl exec -it busybox-767bf65c49-mzm9v /bin/sh
/ # ping 10.244.1.3
PING 10.244.1.3 (10.244.1.3): 56 data bytes
64 bytes from 10.244.1.3: seq=0 ttl=64 time=0.275 ms
64 bytes from 10.244.1.3: seq=1 ttl=64 time=0.312 ms
64 bytes from 10.244.1.3: seq=2 ttl=64 time=0.094 ms
```

Pod-to-Pod communication across nodes

```
vagrant@k8s-master:~$ kubectl exec -it busybox-767bf65c49-mzm9v /bin/sh
/ # ping 10.244.2.2
PING 10.244.2.2 (10.244.2.2): 56 data bytes
^C
--- 10.244.2.2 ping statistics ---
6 packets transmitted, 0 packets received, 100% packet loss
```

Here is the problem: Pods on different nodes cannot reach each other.

Check the interfaces and routes on each node

Interface addresses on each node:

| interface | k8s-master | node-1 | node-2 |
|---|---|---|---|
| cni0 | 10.244.0.1 | 10.244.1.1 | 10.244.2.1 |
| docker0 | 172.17.0.1 | 172.17.0.1 | 172.17.0.1 |
| eth0 | 10.0.2.15 | 10.0.2.15 | 10.0.2.15 |
| eth1 | 192.168.50.10 | 192.168.50.11 | 192.168.50.12 |
| flannel.1 | 10.244.0.0 | 10.244.1.0 | 10.244.2.0 |

Routes on node-1 (the other nodes look similar, so they are not listed):

```
vagrant@node-1:~$ route -n
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         10.0.2.2        0.0.0.0         UG    0      0        0 eth0
10.244.0.0      10.244.0.0      255.255.255.0   UG    0      0        0 flannel.1
10.244.1.0      0.0.0.0         255.255.255.0   UG    0      0        0 cni0
10.244.2.0      10.244.2.0      255.255.255.0   U     0      0        0 flannel.1
172.17.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker0
192.168.50.0    0.0.0.0         255.255.255.0   U     0      0        0 eth1
```

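Every node already has routes to the other nodes' Pod subnets via flannel.1. Following https://blog.csdn.net/u011394397/article/details/54584819, I tried adding a route for the whole 10.244.0.0/16 Pod network:

```shell
sudo route add -net 10.244.0.0/16 dev flannel.1
```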

Still no connectivity.
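Why the extra /16 route could not help: Linux picks the most specific (longest-prefix) matching route, and node-1 already has a /24 for 10.244.2.0 pointing at flannel.1, so packets to node-2's Pods were reaching flannel.1 all along. A simplified Ruby model of that lookup over node-1's table above; it ignores metrics, gateways and policy routing, and `Route`/`lookup` are hypothetical names:

```ruby
require "ipaddr"

# Simplified route lookup: among routes whose network contains the
# destination, pick the one with the longest prefix.
Route = Struct.new(:dest, :genmask, :iface) do
  def prefix_len
    IPAddr.new(genmask).to_i.to_s(2).count("1") # 255.255.255.0 -> 24
  end

  def network
    IPAddr.new("#{dest}/#{prefix_len}")
  end
end

def lookup(routes, dst)
  ip = IPAddr.new(dst)
  routes.select { |r| r.network.include?(ip) }.max_by(&:prefix_len)
end

# node-1's table from `route -n` above (Destination, Genmask, Iface).
NODE1_ROUTES = [
  Route.new("0.0.0.0",      "0.0.0.0",       "eth0"),
  Route.new("10.244.0.0",   "255.255.255.0", "flannel.1"),
  Route.new("10.244.1.0",   "255.255.255.0", "cni0"),
  Route.new("10.244.2.0",   "255.255.255.0", "flannel.1"),
  Route.new("172.17.0.0",   "255.255.0.0",   "docker0"),
  Route.new("192.168.50.0", "255.255.255.0", "eth1")
].freeze

# The same table plus the extra 10.244.0.0/16 route.
WITH_16 = NODE1_ROUTES + [Route.new("10.244.0.0", "255.255.0.0", "flannel.1")]

[NODE1_ROUTES, WITH_16].each do |table|
  r = lookup(table, "10.244.2.2")
  puts "10.244.2.2 -> #{r.dest}/#{r.prefix_len} via #{r.iface}"
end
```

Both iterations select the same 10.244.2.0/24 route via flannel.1: the routing decision is unchanged, so the packets must be getting lost after flannel.1.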

A long search turned up nothing that solved it, so I took a closer look at the network model.

Investigating cross-node Pod network failures in a Vagrant-deployed k8s cluster

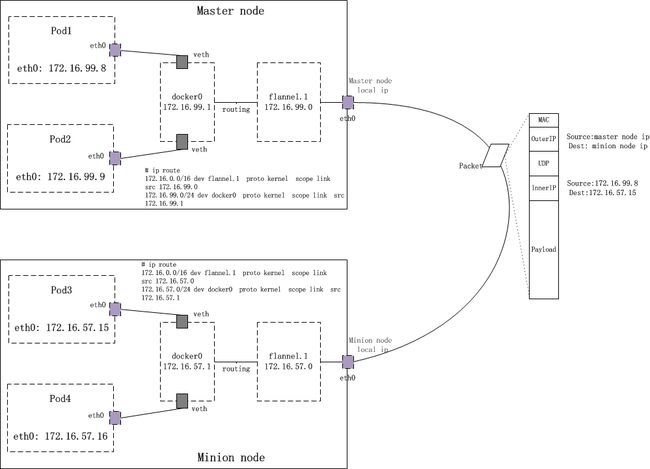

网上对于 flannel 网络模型差不多都是用的下图:

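However, that diagram is dated and no longer matches the current network: it has no cni0 bridge. Tracing the ping packets with tcpdump also showed that they never pass through docker0. Same-node pings only go through cni0, and cross-node pings end at flannel.1 without going through docker0 and never reach the other node.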

After searching in many places, I found this diagram of the current network architecture:

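This diagram finally matches the current k8s CNI network.

- From what I found, Kubernetes stopped using the docker0 bridge long ago; Pod networking is managed through its own cni0 bridge, so docker0 can be ignored.
- A packet sent from a Pod is processed and routed by CNI and flannel, and finally leaves through the host's eth0 interface onto the cluster network.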
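The step that makes eth0 matter: in flannel's VXLAN mode (the flannel.1 device), each node advertises one host address as its tunnel endpoint, and peers send encapsulated packets to that address. When flannel is not given `--iface`, it uses the interface that carries the default route, which on these VMs is eth0. A toy Ruby model with the addresses from the interface table above shows the consequence. The data layout and the `vtep_address`/`duplicate_vteps` helpers are hypothetical, and the model assumes flannel's default interface selection:

```ruby
# Toy model of flannel's endpoint selection (assumption: no --iface flag, so
# flannel uses the interface of the default route, eth0 on every VM here).
NODES = {
  "k8s-master" => { default_iface: "eth0", addrs: { "eth0" => "10.0.2.15", "eth1" => "192.168.50.10" } },
  "node-1"     => { default_iface: "eth0", addrs: { "eth0" => "10.0.2.15", "eth1" => "192.168.50.11" } },
  "node-2"     => { default_iface: "eth0", addrs: { "eth0" => "10.0.2.15", "eth1" => "192.168.50.12" } }
}.freeze

# Address a node would advertise as its VXLAN endpoint.
def vtep_address(node, iface = nil)
  node[:addrs].fetch(iface || node[:default_iface])
end

# Endpoint addresses claimed by more than one node => { ip => [node names] }.
def duplicate_vteps(nodes, iface = nil)
  nodes.group_by { |_name, node| vtep_address(node, iface) }
       .select { |_ip, members| members.size > 1 }
       .transform_values { |members| members.map(&:first) }
end

p duplicate_vteps(NODES)          # default-route interface (eth0)
p duplicate_vteps(NODES, "eth1")  # host-only interface
```

With eth0 all three nodes claim 10.0.2.15, and since that is each VM's own address, an encapsulated packet addressed to it never leaves the sending VM. Any setup that gives each node a distinct, mutually reachable address on the chosen interface avoids the collision; flannel's `--iface` option exists to pick that interface explicitly.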

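But the interface list above shows that eth0 is 10.0.2.15 on all three nodes... even though the VMs were created with addresses in 192.168.50.0/24. So, what if the default route is moved to eth1?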

```shell
sudo route delete default
sudo route add default dev eth1
```

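Then verify again. The result... the nodes still cannot reach each other, and now they cannot reach the internet either. What is going on?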

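Step back and think: where does eth0's 10.0.2.0/24 network come from? Opening VirtualBox shows that Vagrant automatically attached a NAT adapter to each VM. A NAT adapter behaves as follows:

- the VM can ping the host
- the host cannot ping the VM
- VMs cannot communicate with each other
- if the host can reach the internet, so can the VM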

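The IPs specified in the Vagrantfile live on a host-only network, i.e. each VM's eth1. This network behaves as follows:

- VMs can reach each other
- VMs and the host can reach each other (each VM's eth1 is attached to the host's vboxnet0 virtual adapter)
- VMs cannot reach the internet through it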

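That settles it: the cross-node Pod failure is entirely down to Vagrant's NAT network. The three nodes' eth0 interfaces were never connected to each other in the first place, so the fix is to switch eth0 to bridged mode.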