以下内容摘自https://medium.com/jim-fleming/running-tensorflow-on-kubernetes-ca00d0e67539

This guide assumes that the proper GPU drivers and CUDA version have been installed.

(假定合适的GPU驱动和CUDA对应版本已经安装好)

Working without nvidia-docker

A common way to run containerized GPU applications is to usenvidia-docker. Here is an example of running TensorFlow with full GPU support inside a container.

(通常运行容器化的GPU应用是通过nvidia-docker来运行,下面例子是支持所有GPU)

nvidia-docker run -it tensorflow/tensorflow:latest-gpu python -c 'import tensorflow'

Unfortunately it’s not current possible to use nvidia-docker directly from Kubernetes. Additionally, Kubernetes does not support thenvidia-docker-pluginsince Kubernetes does not use Docker’s volume mechanism.

(不幸的是,当前不能从kubernetes里直接使用nvidia-docker,此外kubernetes并不支持nvidia-docker-plugin)

The goal is to manually replicate the functionality provided by nvidia-docker (and it’s plugin). For demonstration, query the nvidia-docker-plugin REST API to query the command line arguments:

(通过REST API可以查询nvidia-docker-plugin的命令行参数)

# curl -s localhost:3476/docker/cli

--volume-driver=nvidia-docker

--volume=nvidia_driver_375.26:/usr/local/nvidia:ro

--device=/dev/nvidiactl

--device=/dev/nvidia-uvm

--device=/dev/nvidia-uvm-tools

--device=/dev/nvidia0

Which will feed into docker, running the same python command:

docker run -it`curl -s`localhost:3476/docker/cli` tensorflow/tensorflow:latest-gpu python -c ‘import tensorflow'

Enabling GPU devices

With the knowledge of what Docker needs to be able to run a GPU-enabled container it is straightforward to add this to Kubernetes. The first step is to enable an experiment flag on all of the GPU nodes. In the Kubelet options (found in /etc/default/kubelet if you use upstart for services), add--experimental-nvidia-gpus=1. This does two things… First, it allows GPU resources on the node for use by the scheduler. Second, when a GPU resource is requested, it will add the appropriate device flags to the docker command. This post describes a little more about what and why this flag exists:

http://blog.clarifai.com/how-to-scale-your-gpu-cloud-infrastructure-with-kubernetes

The full GPU proposal, including the existing flag and future steps can be found here:

https://github.com/kubernetes/community/blob/master/contributors/design-proposals/gpu-support.md

Pod Spec

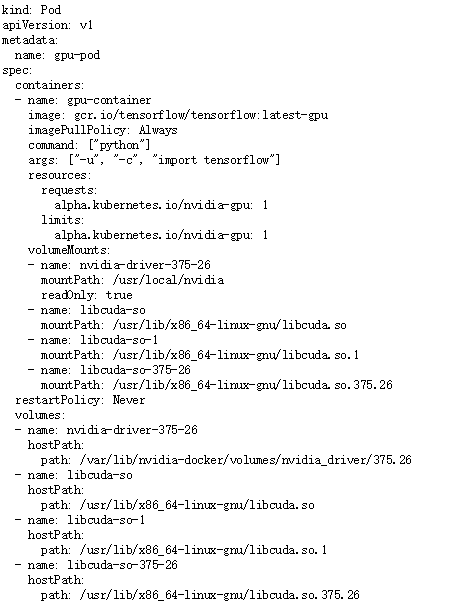

With the device flags added by the experimental GPU flag the final step requires adding the necessary volumes to the pod spec. A sample pod spec is provided below:

kind: Pod

apiVersion: v1

metadata:

name: gpu-pod

spec:

containers:

- name: gpu-container

image: gcr.io/tensorflow/tensorflow:latest-gpu

imagePullPolicy: Always

command: ["python"]

args: ["-u", "-c", "import tensorflow"]

resources:

requests:

alpha.kubernetes.io/nvidia-gpu: 1

limits:

alpha.kubernetes.io/nvidia-gpu: 1

volumeMounts:

- name: nvidia-driver-375-26

mountPath: /usr/local/nvidia

readOnly: true

- name: libcuda-so

mountPath: /usr/lib/x86_64-linux-gnu/libcuda.so

- name: libcuda-so-1

mountPath: /usr/lib/x86_64-linux-gnu/libcuda.so.1

- name: libcuda-so-375-26

mountPath: /usr/lib/x86_64-linux-gnu/libcuda.so.375.26

restartPolicy: Never

volumes:

- name: nvidia-driver-375-26

hostPath:

path: /var/lib/nvidia-docker/volumes/nvidia_driver/375.26

- name: libcuda-so

hostPath:

path: /usr/lib/x86_64-linux-gnu/libcuda.so

- name: libcuda-so-1

hostPath:

path: /usr/lib/x86_64-linux-gnu/libcuda.so.1

- name: libcuda-so-375-26

hostPath:

path: /usr/lib/x86_64-linux-gnu/libcuda.so.375.26