Recording Audio on Android and Driving a Simple Animation from the Volume


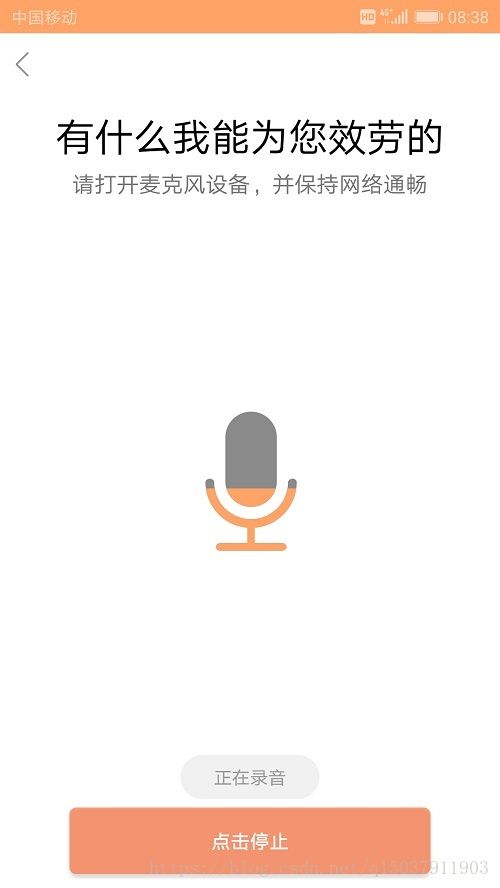

最终效果:

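Recording uses the AudioRecord class provided by Android, which lets us measure the volume while recording. The animation uses the setLevel method of a ClipDrawable. The process is as follows: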

ClipDrawable

Create a new drawable resource file. Its attributes are easy to understand: it essentially clips part of the foreground and draws it over the background. The contents are as follows:

```xml
<layer-list xmlns:android="http://schemas.android.com/apk/res/android">
    <item
        android:id="@android:id/background"
        android:drawable="@drawable/voice_gray" />
    <item android:id="@android:id/progress">
        <clip
            android:clipOrientation="vertical"
            android:drawable="@drawable/voice_accent"
            android:gravity="bottom" />
    </item>
</layer-list>
```

Next, in the layout file, set the `src` of the ImageView to the new drawable, and get a reference to it in the activity:

```xml
<RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    tools:context=".MainActivity">

    <ImageView
        android:id="@+id/img"
        android:layout_width="wrap_content"
        android:layout_height="wrap_content"
        android:layout_centerInParent="true"
        android:src="@drawable/clip_test" />

    <Button
        android:id="@+id/button"
        android:layout_width="wrap_content"
        android:layout_height="wrap_content"
        android:layout_alignParentBottom="true"
        android:layout_centerHorizontal="true"
        android:layout_marginBottom="20dp"
        android:text="Hold to talk" />
</RelativeLayout>
```

```java
ImageView imageView = findViewById(R.id.img);
drawable = imageView.getDrawable();
```
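To see what a particular level means in pixels, here is a minimal pure-Java sketch of the proportional rule a vertical ClipDrawable applies. It is reconstructed, as an assumption, from reading the AOSP `ClipDrawable.draw` source: the visible height is the full height minus the share corresponding to `10000 - level`, in integer arithmetic. `ClipLevelDemo` and `visibleHeight` are hypothetical names, and nothing here calls the Android framework.

```java
/** Hypothetical helper mirroring how a vertical ClipDrawable sizes its visible part. */
final class ClipLevelDemo {
    // Drawable levels run from 0 to 10000 (ClipDrawable's maximum level)
    static final int MAX_LEVEL = 10000;

    /** Height in pixels of the foreground left visible for a given level. */
    static int visibleHeight(int fullHeight, int level) {
        // clamp to the valid level range first
        int l = Math.max(0, Math.min(MAX_LEVEL, level));
        // same shape as the AOSP rule: h -= h * (MAX_LEVEL - level) / MAX_LEVEL
        return fullHeight - fullHeight * (MAX_LEVEL - l) / MAX_LEVEL;
    }

    public static void main(String[] args) {
        // with gravity="bottom", these rows are revealed from the bottom edge upward
        for (int level : new int[] {0, 2500, 5000, 10000}) {
            System.out.println("level " + level + " -> " + visibleHeight(200, level) + " px");
        }
    }
}
```

With `android:gravity="bottom"` the visible rows stay anchored to the bottom edge, so raising the level makes the accent image fill upward over the gray background.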

Using AudioRecord

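Using the AudioRecord class roughly goes like this (ignoring exceptional cases, such as the recorder being unavailable, for now): construct an AudioRecord object -> call startRecording on a new thread -> call read to fetch the recorded audio -> stop recording and release resources.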
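The four steps above can be sketched in plain Java as follows. `PcmSource` is a hypothetical interface standing in for `AudioRecord` (its method names mirror `startRecording`, `read`, `stop` and `release`, but it is not the Android API), and `FakeSource` is a hypothetical test double. The point is the ordering, and that `try`/`finally` guarantees the stop/release step runs even if reading fails.

```java
import java.util.concurrent.atomic.AtomicBoolean;

/** Hypothetical stand-in for the parts of AudioRecord used in this article. */
interface PcmSource {
    void startRecording();
    int read(short[] buffer, int offset, int size);
    void stop();
    void release();
}

/** Hypothetical fake that returns a constant signal and logs lifecycle calls. */
final class FakeSource implements PcmSource {
    final StringBuilder log = new StringBuilder();
    public void startRecording() { log.append("start,"); }
    public int read(short[] buffer, int offset, int size) {
        for (int i = offset; i < offset + size; i++) buffer[i] = 100;
        log.append("read,");
        return size;
    }
    public void stop() { log.append("stop,"); }
    public void release() { log.append("release"); }
}

final class RecordLoop {
    /** Runs start -> read... -> stop/release until `running` is cleared or maxReads is reached. */
    static int run(PcmSource source, AtomicBoolean running, int bufferSize, int maxReads) {
        short[] buffer = new short[bufferSize];
        int reads = 0;
        source.startRecording();
        try {
            while (running.get() && reads < maxReads) {
                int n = source.read(buffer, 0, bufferSize);
                if (n <= 0) break; // nothing read, or an error code (AudioRecord.read returns negatives on error)
                reads++;
            }
        } finally {
            // always undo startRecording and free the underlying resources
            source.stop();
            source.release();
        }
        return reads;
    }
}
```

In the article's design, a call like `RecordLoop.run(...)` would sit inside the background thread's `run()` method, with the flag cleared from the UI thread to end the loop.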

The AudioRecord object keeps placing the audio it records into a buffer, and the read method retrieves the buffer's contents. The steps in detail:

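Construct the AudioRecord object:

```java
record = new AudioRecord(MediaRecorder.AudioSource.MIC, 8000,
        AudioFormat.CHANNEL_IN_DEFAULT, AudioFormat.ENCODING_PCM_16BIT, bufferSize);
```

The parameters are, in order: the audio source, the sample rate in Hz, the channel configuration, the audio format, and the buffer size in bytes. The buffer size bufferSize is obtained as follows:

```java
bufferSize = AudioRecord.getMinBufferSize(sampleRateInHz, channelConfig, audioFormat);
```

If the parameter combination is not supported, getMinBufferSize returns a negative error code (AudioRecord.ERROR_BAD_VALUE or AudioRecord.ERROR) instead of a size. The other parameters involve audio-specific background knowledge, which you can look up yourself.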
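`getMinBufferSize` returns a size in bytes, and the actual value is device-dependent, so the 640 bytes used below is purely illustrative. With 16-bit PCM each sample occupies two bytes, so a byte size converts to a sample count (the length of a `short[]`) and then to a duration. `BufferMath` is a hypothetical helper and assumes mono input, as with `CHANNEL_IN_DEFAULT` in this article.

```java
/** Hypothetical helper: relates AudioRecord buffer sizes (bytes) to samples and time. */
final class BufferMath {
    static final int BYTES_PER_PCM16_SAMPLE = 2; // ENCODING_PCM_16BIT

    /** Number of shorts needed to hold `bytes` of mono 16-bit PCM. */
    static int samplesFor(int bytes) {
        return bytes / BYTES_PER_PCM16_SAMPLE;
    }

    /** Duration in milliseconds covered by `samples` mono samples at `sampleRateHz`. */
    static double millisFor(int samples, int sampleRateHz) {
        return samples * 1000.0 / sampleRateHz;
    }

    public static void main(String[] args) {
        int sampleRate = 8000; // as in the article
        int minBytes = 640;    // illustrative only; the real value comes from getMinBufferSize
        int samples = samplesFor(minBytes);
        System.out.println(samples + " samples = " + millisFor(samples, sampleRate) + " ms");
    }
}
```

Note that the demo later allocates `new short[bufferSize]` and asks `read` for `bufferSize` shorts, i.e. twice as many bytes as the minimum per call; in blocking mode that simply means each read covers a longer stretch of audio.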

Check the state of the record object, then start recording:

```java
if (record.getState() == AudioRecord.STATE_UNINITIALIZED) {
    Log.e(TAG, "start: record state uninitialized");
    return;
}
record.startRecording();
```

As for the possible state values, the source of getState shows there are two, STATE_INITIALIZED and STATE_UNINITIALIZED:

```java
/**
 * Returns the state of the AudioRecord instance. This is useful after the
 * AudioRecord instance has been created to check if it was initialized
 * properly. This ensures that the appropriate hardware resources have been
 * acquired.
 * @see AudioRecord#STATE_INITIALIZED
 * @see AudioRecord#STATE_UNINITIALIZED
 */
public int getState() {
    return mState;
}
```

Read the data by calling the record object's read method. Note that this method has many overloads; the main difference is whether the buffer is a byte[] or a short[]. The short[] form is generally used for computing the volume in decibels, while the byte[] form is mostly used for writing the data out. Android also requires that, if you use a short[] buffer, audioFormat be set to AudioFormat.ENCODING_PCM_16BIT when constructing the record object.
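The `byte[]` and `short[]` overloads deliver the same 16-bit PCM stream in two shapes. As a sketch, a `byte[]` buffer can be decoded into samples like this; the byte order is left as a parameter because it is an assumption here (Android devices are little-endian in practice, and `ByteOrder.nativeOrder()` is the safer choice on-device). `PcmConvert` is a hypothetical name.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

/** Hypothetical helper: converts 16-bit PCM bytes into samples. */
final class PcmConvert {
    /** Decodes the first `length` bytes (an even count) of 16-bit PCM in the given byte order. */
    static short[] toShorts(byte[] data, int length, ByteOrder order) {
        short[] out = new short[length / 2];
        // view the bytes as 16-bit values in the requested byte order, then copy them out
        ByteBuffer.wrap(data, 0, length).order(order).asShortBuffer().get(out);
        return out;
    }
}
```

On a device, `length` would be the byte count returned by `read(byte[], int, int)`, so only the bytes actually filled are decoded.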

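Once the data has been read, we can compute the volume and from it the decibel value vol, then use ClipDrawable's setLevel method to set the clipped height. setLevel takes values from 0 to 10000; the source code helps explain this.

```java
short[] buffer = new short[bufferSize];
int sz = record.read(buffer, 0, bufferSize);
if (sz > 0) {
    long v = 0;
    // sum of squares over the samples actually read
    for (int i = 0; i < sz; i++)
        v += buffer[i] * buffer[i];
    // mean power in dB; -Infinity when the block is pure silence
    double vol = 10 * Math.log10(v / (double) sz);
    Message message = new Message();
    message.what = VOICE_VOLUME;
    // map to the 0..10000 level range and clamp (silence would otherwise cast to Integer.MIN_VALUE)
    message.arg1 = (int) Math.max(0, Math.min(10000, vol * 50 + 3000));
    handler.sendMessage(message);
}
try {
    Thread.sleep(100);
} catch (InterruptedException e) {
    e.printStackTrace();
}
```

Er, this involves a volume calculation. I followed the method in this post: https://blog.csdn.net/greatpresident/article/details/38402147 . As for the exact theory... I'm not too sure myself. A Handler is used because this code runs on a background thread. Finally, stop recording and release resources: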

```java
record.stop();
record.release();
record = null;
```
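For the record, the volume formula is the mean power of the block in decibels relative to a sample value of 1: vol = 10·log10((1/n)·Σxᵢ²), which equals 20·log10 of the RMS amplitude. A 16-bit sample never exceeds 32768 in magnitude, so vol tops out around 90 dB; the mapping vol * 50 + 3000 therefore peaks at roughly 7500, while a completely silent block yields -Infinity. The sketch below, a hypothetical `VolumeMeter` helper, pulls the computation out of the read loop so it can be tested off-device; the clamping in `toLevel` is an addition of this sketch, not part of the referenced post.

```java
/** Hypothetical helper: the article's volume formula, factored out of the read loop. */
final class VolumeMeter {
    /** Mean power of the first `count` samples in dB (relative to a sample value of 1). */
    static double decibels(short[] buffer, int count) {
        if (count <= 0) return Double.NEGATIVE_INFINITY; // nothing read, or an error code
        long sumSquares = 0;
        for (int i = 0; i < count; i++) {
            sumSquares += (long) buffer[i] * buffer[i];
        }
        // log10(0) is -Infinity, which is what pure silence yields
        return 10 * Math.log10(sumSquares / (double) count);
    }

    /** The article's mapping vol * 50 + 3000, clamped to the 0..10000 level range. */
    static int toLevel(double vol) {
        double raw = vol * 50 + 3000;
        return (int) Math.max(0, Math.min(10000, raw));
    }
}
```

For example, a block where every sample is 100 has a mean square of 10000, i.e. 40 dB, which maps to level 5000.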

Below is a simple demo I put together (package and import statements removed to keep it short... not that it got much shorter):

The wrapped-up AudioRecorder class:

```java
public class AudioRecorder {

    public final static int VOICE_VOLUME = 1;
    public final static int RECORD_STOP = 2;
    private static final String TAG = "AudioRecorder";

    private static int sampleRateInHz = 8000;
    private static int channelConfig = AudioFormat.CHANNEL_IN_DEFAULT;
    private static int audioFormat = AudioFormat.ENCODING_PCM_16BIT;

    private static AudioRecorder recorder;

    private int bufferSize;
    private AudioRecord record;
    // volatile: written by the UI thread in stop(), read by the recording thread
    private volatile boolean on;

    private AudioRecorder() {
        on = false;
        record = null;
        bufferSize = AudioRecord.getMinBufferSize(sampleRateInHz, channelConfig, audioFormat);
    }

    /**
     * singleton
     * @return an AudioRecorder object
     */
    public static AudioRecorder getInstance() {
        if (recorder == null)
            recorder = new AudioRecorder();
        return recorder;
    }

    /**
     * start recorder
     * @param handler a handler to send back message for ui changing
     */
    public void start(final Handler handler) {
        if (record != null || on)
            return;
        record = new AudioRecord(MediaRecorder.AudioSource.MIC,
                sampleRateInHz,
                channelConfig,
                audioFormat,
                bufferSize);
        if (record.getState() == AudioRecord.STATE_UNINITIALIZED) {
            Log.e(TAG, "start: record state uninitialized");
            // release the failed instance so that a later start() can try again
            record.release();
            record = null;
            return;
        }
        // set the flag before the thread starts so that an early stop() is not lost
        on = true;
        new Thread(new Runnable() {
            @Override
            public void run() {
                record.startRecording();
                short[] buffer = new short[bufferSize];
                while (on) {
                    int sz = record.read(buffer, 0, bufferSize);
                    if (sz > 0) {
                        long v = 0;
                        // sum of squares over the samples actually read
                        for (int i = 0; i < sz; i++)
                            v += buffer[i] * buffer[i];
                        // mean power in dB; -Infinity for pure silence
                        double vol = 10 * Math.log10(v / (double) sz);
                        Message message = new Message();
                        message.what = VOICE_VOLUME;
                        // map to ClipDrawable's 0..10000 range and clamp
                        message.arg1 = (int) Math.max(0, Math.min(10000, vol * 50 + 3000));
                        handler.sendMessage(message);
                    }
                    try {
                        Thread.sleep(100);
                    } catch (InterruptedException e) {
                        e.printStackTrace();
                    }
                }
                record.stop();
                record.release();
                record = null;
                Message message = new Message();
                message.what = RECORD_STOP;
                handler.sendMessage(message);
            }
        }).start();
    }

    /**
     * stop recording
     */
    public void stop() {
        on = false;
    }
}
```
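In the AudioRecorder class, stop() only clears a flag that the recording thread polls, so that flag has to be visible across threads (hence `volatile`), and it should be set before the thread starts. The pure-Java sketch below isolates that start/stop pattern. `PollingWorker`, `cleanedUp` and `ticks` are hypothetical names, and waiting with `Thread.join` is a swapped-in technique the original does not use (it posts `RECORD_STOP` to the Handler instead).

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical worker showing the start/stop-flag pattern used by AudioRecorder. */
final class PollingWorker {
    private volatile boolean on;           // written by the caller, read by the worker thread
    private Thread thread;
    final AtomicInteger ticks = new AtomicInteger();
    volatile boolean cleanedUp;

    synchronized void start() {
        if (on) return;
        on = true;                         // set before the thread starts, so an early stop() is not lost
        cleanedUp = false;
        thread = new Thread(() -> {
            while (on) {
                ticks.incrementAndGet();   // stands in for read + volume computation
                try {
                    Thread.sleep(10);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    break;
                }
            }
            cleanedUp = true;              // stands in for stop()/release()
        });
        thread.start();
    }

    /** Clears the flag and waits for the worker to finish its cleanup. */
    synchronized void stop() throws InterruptedException {
        on = false;
        if (thread != null) {
            thread.join();
            thread = null;
        }
    }
}
```

On Android, calling `join()` from the UI thread would block it for up to one loop iteration (a read plus the 100 ms sleep), which is why the original's message-based notification is a reasonable alternative.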

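The activity logic (the RECORD_AUDIO permission must be declared in the manifest with `<uses-permission android:name="android.permission.RECORD_AUDIO" />` and also requested at runtime):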

```java
public class MainActivity extends AppCompatActivity {

    private static final String TAG = "MainActivity";

    private Drawable drawable;
    private boolean recording = false;
    private Button button;

    // handles messages from the recording thread on the main thread
    // (Handler(Callback) without an explicit Looper is deprecated since API 30)
    private Handler handler = new Handler(Looper.getMainLooper(), new Handler.Callback() {
        @Override
        public boolean handleMessage(Message msg) {
            switch (msg.what) {
                case AudioRecorder.VOICE_VOLUME:
                    // the layer-list passes the level on to its ClipDrawable
                    drawable.setLevel(msg.arg1);
                    break;
                case AudioRecorder.RECORD_STOP:
                    drawable.setLevel(0);
                    break;
                default:
                    break;
            }
            return true;
        }
    });

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);
        button = findViewById(R.id.button);
        ImageView imageView = findViewById(R.id.img);
        drawable = imageView.getDrawable();
        button.setOnLongClickListener(new View.OnLongClickListener() {
            @Override
            public boolean onLongClick(View v) {
                Log.e(TAG, "onLongClick: long clicking");
                return false;
            }
        });
        button.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View v) {
                if (ContextCompat.checkSelfPermission(MainActivity.this, Manifest.permission.RECORD_AUDIO) != PackageManager.PERMISSION_GRANTED) {
                    ActivityCompat.requestPermissions(MainActivity.this, new String[]{Manifest.permission.RECORD_AUDIO}, 1);
                    return;
                }
                if (!recording)
                    startRecorder();
                else
                    stopRecorder();
            }
        });
    }

    @Override
    public void onRequestPermissionsResult(int requestCode, @NonNull String[] permissions, @NonNull int[] grantResults) {
        super.onRequestPermissionsResult(requestCode, permissions, grantResults);
        if (requestCode == 1) {
            if (grantResults.length <= 0 || grantResults[0] == PackageManager.PERMISSION_DENIED) {
                Toast.makeText(this, "Can't record? Then what's the point?!!!", Toast.LENGTH_SHORT).show();
                if (!ActivityCompat.shouldShowRequestPermissionRationale(this, Manifest.permission.RECORD_AUDIO))
                    Toast.makeText(this, "You're such a pig!", Toast.LENGTH_SHORT).show();
            } else if (grantResults[0] == PackageManager.PERMISSION_GRANTED) {
                startRecorder();
            }
        }
    }

    private void startRecorder() {
        Toast.makeText(MainActivity.this, "Recording", Toast.LENGTH_SHORT).show();
        button.setText("Stop recording");
        AudioRecorder.getInstance().start(handler);
        recording = !recording;
    }

    private void stopRecorder() {
        Toast.makeText(MainActivity.this, "Recording stopped", Toast.LENGTH_SHORT).show();
        button.setText("Start recording");
        AudioRecorder.getInstance().stop();
        recording = !recording;
    }
}
```

The drawable file (referenced as `@drawable/clip_test` in the layout):

```xml
<layer-list xmlns:android="http://schemas.android.com/apk/res/android">
    <item
        android:id="@android:id/background"
        android:drawable="@drawable/voice_gray" />
    <item android:id="@android:id/progress">
        <clip
            android:clipOrientation="vertical"
            android:drawable="@drawable/voice_accent"
            android:gravity="bottom" />
    </item>
</layer-list>
```

The MainActivity layout file:

```xml
<RelativeLayout xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:app="http://schemas.android.com/apk/res-auto"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    tools:context=".MainActivity">

    <ImageView
        android:id="@+id/img"
        android:layout_width="wrap_content"
        android:layout_height="wrap_content"
        android:layout_centerInParent="true"
        android:contentDescription="@string/app_name"
        android:src="@drawable/clip_test" />

    <Button
        android:id="@+id/button"
        android:layout_width="wrap_content"
        android:layout_height="wrap_content"
        android:layout_alignParentBottom="true"
        android:layout_centerHorizontal="true"
        android:layout_marginBottom="20dp"
        android:text="Hold to talk" />
</RelativeLayout>
```

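I consulted a number of posts on CSDN and Jianshu, so I won't list them all here.

Google's official documentation is also quite useful: https://developer.android.google.cn/reference/android/media/AudioRecord

If you spot any problems, please point them out; comments and discussion are welcome.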