CUDA学习笔记(6) 共享内存与全局内存

共享内存(Shared memory)是位于每个流处理器组(SM)中的高速内存空间,主要作用是存放一个线程块(Block)中所有线程都会频繁访问的数据。流处理器(SP)访问它的速度仅比寄存器(Register)慢,它的速度远比全局显存快。但是他也是相当宝贵的资源,一般只有几十KByte,

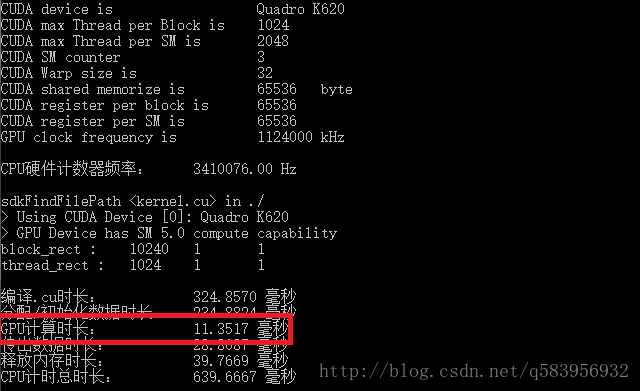

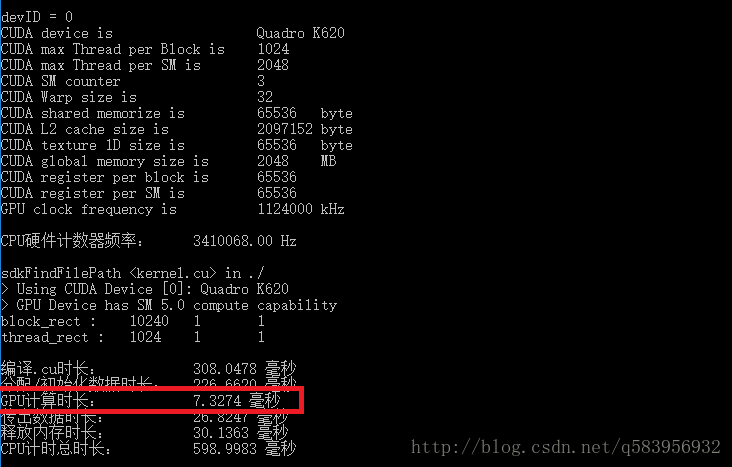

这里以我电脑上的“Quadro K620”为例:

| 硬件资源 | 参数 |

|---|---|

| 流处理器(SP) | 128 * 3 = 384 |

| 流处理器组(SM/SMM) | 3 |

| 全局显存(Global memory) | 2048MByte |

| 每个SM中的共享内存(Shared memory) | 64KByte |

| 每个SM中的寄存器(Register) | 65536个32bit寄存器 |

可以看到全局显存(Global memory)的大小比共享显存(Shared memory)大了几个数量级。当然,共享显存的访问速度也一定比全局显存快。再从硬件上看:

每个流处理器组(SM)都拥有属于自己的共享显存(Shared memory)且大小只有64KByte,而这些流处理器组(SM)都位于同一块芯片上(on the same chip),这块芯片又通过PCB电路连接内存芯片(DRAM)。

- 流处理器(SP)对共享显存(Shared memory)上数据的访问属于片上访问,可以立刻取得数据。

- 流处理器(SP)对内存芯片(DRAM)的访问要通过请求内存控制器等一系列操作,然后才能得到数据。

为了能够明显的看到共享显存(Shared memory)的速度优势,我们就需要把那些被频繁访问的数据直接放到共享显存(Shared memory)中,这样我们的程序在执行时就可以避免频繁访问全局显存(Global memory),导致时间浪费在数据的传输和访问上。

举个例子,我们需要统计一张超高清图片或者20Mbyte大小的一维数组中每个数值的个数,也就是做直方图统计,结合前面的原子操作(Atomic functions)可以将计算时间至少减半,当然不同架构的GPU会有不同程度的优化。

*注4:直方图统计用于记录一组数据中的数值分布情况,即每个数值出现了多少次,统计数值的分布。

方案一:

- 统计数值分布的数组直接放在全局显存(Global memory)中,每个线程依次读取全局显存中的待统计数据。

- 然后使用原子操作对统计数组(Global)中与源数据中数值所对应的元素执行“++”。

方案二:

- 统计数值分布的数组放在全局显存(Global memory)中,每个线程块拥有一个自己的统计数组(Shared)放在共享显存(Shared memory)中。

- 线程块中的每个线程依次读取全局显存中的待统计数据,然后使用原子操作对统计数组(Shared)中与源数据中数值所对应的元素执行“++”。

- 对线程块中的线程同步,以保证该线程块中的所有线程都已经完成原子操作。

- 将当前线程块中的统计数组(Shared)加到统计数组(Global)中。

这样,方案二就通过使用共享显存(Shared memory)避免了对全局显存(Global memory)的频繁访问,以达到提高程序运行速度的效果。

方案一核函数的实现:

// This is kernel function file !

#define hist_MAX_VALUE 256

extern "C" __global__ void kernel_func(unsigned int * thread_index_array, unsigned char *source_data_array, \

unsigned int * histogram_array, unsigned int *clock_counter_array)

{

// 计算线程号

unsigned int block_index = blockIdx.x + blockIdx.y * gridDim.x + blockIdx.z * gridDim.x * gridDim.y;

unsigned int thread_index = block_index * blockDim.x * blockDim.y * blockDim.z + \

threadIdx.x + threadIdx.y * blockDim.x + threadIdx.z * blockDim.x * blockDim.y;

// 总线程数

unsigned int thread_num = gridDim.x * gridDim.y * gridDim.z * blockDim.x * blockDim.y * blockDim.z;

// 记录线程号

thread_index_array[thread_index] = thread_index;

unsigned int counter_1, counter_2;

counter_1 = clock();

unsigned int value = source_data_array[thread_index];

atomicAdd(&histogram_array[value], 1);

counter_2 = clock();

clock_counter_array[thread_index] = counter_1;

clock_counter_array[thread_index + thread_num] = counter_2;

}结果是:方案一统计10MByte大小数据的直方图使用了11.35毫秒。

方案二核函数的实现:

// This is kernel function file !

#define hist_MAX_VALUE 256

extern "C" __global__ void kernel_func(unsigned int * thread_index_array, unsigned char *source_data_array, \

unsigned int * histogram_array, unsigned int *clock_counter_array)

{

// 计算线程号

unsigned int block_index = blockIdx.x + blockIdx.y * gridDim.x + blockIdx.z * gridDim.x * gridDim.y;

unsigned int thread_index = block_index * blockDim.x * blockDim.y * blockDim.z + \

threadIdx.x + threadIdx.y * blockDim.x + threadIdx.z * blockDim.x * blockDim.y;

// 总线程数

unsigned int thread_num = gridDim.x * gridDim.y * gridDim.z * blockDim.x * blockDim.y * blockDim.z;

// 记录线程号

thread_index_array[thread_index] = thread_index;

unsigned int counter_1, counter_2;

counter_1 = clock();

// 初始化共享内存统计数组

__shared__ unsigned int histogram_shared[hist_MAX_VALUE];

histogram_shared[thread_index % hist_MAX_VALUE] = 0;

__syncthreads();

// 对每个Block进行统计

unsigned int value = source_data_array[thread_index];

atomicAdd(&histogram_shared[value], 1);

__syncthreads();

// 将每个Block统计的共享显存数据 加到 全局显存变量中

atomicAdd(&histogram_array[thread_index % hist_MAX_VALUE], histogram_shared[thread_index % hist_MAX_VALUE]);

counter_2 = clock();

clock_counter_array[thread_index] = counter_1;

clock_counter_array[thread_index + thread_num] = counter_2;

}结果是:方案而统计10MByte大小数据的直方图使用了7.33毫秒。

这里我使用了两个寄存器“counter_1, counter_2”来保证记录时间的准确性,通过实验,流处理器(SP)访问寄存器的时间只有6个时钟周期(大约6纳秒),而单个线程耗时越3000个时钟周期,有较高的可信度。

就结果而言,加速比达到了 160% ,其实在其他显卡上还可能达到 200%∼400% 甚至更多。

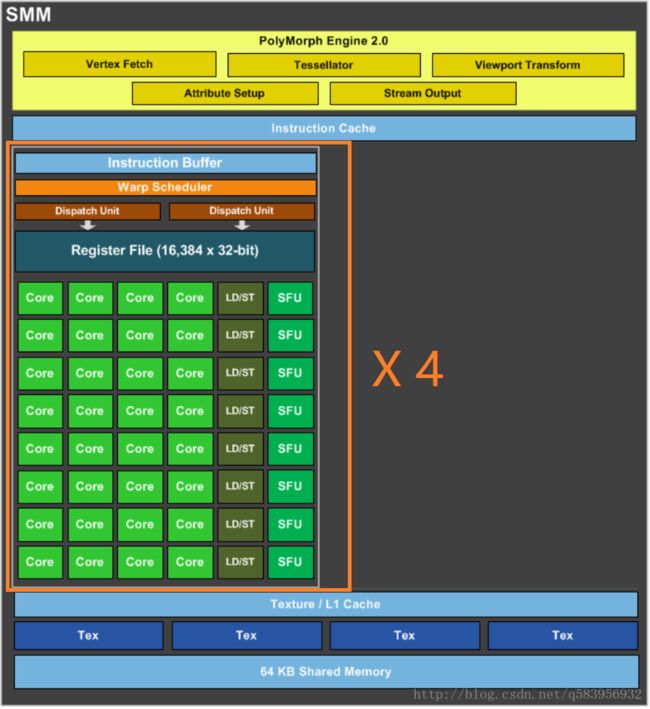

下面补充一个SMM的结构,SMM是SM的改良变种,与SM的功能完全相同。区别在于它的内部将流处理器(SP)以及寄存器(Register)等区域分成了4块,主要目的是获取更大的带宽。

我认为只要原因是我的“Quadro K620”显卡专门对此做过硬件改良,使用SMM的结构代替原来传统的SM结构,大大增加了带宽。同时也拥有2MByte之大的二级缓存,大大增加了访问全局显存(Global memory)的速度,因此使用共享显存(Shared memory)加速比不如其他游戏型显卡高。这些可以在 参考文献5 中查证。

我的“main_code.cpp”代码:

// CUDA Shared memory

// C/C++ IO

#include 我的“kernel.cu”代码:

// This is kernel function file !

#define hist_MAX_VALUE 256

extern "C" __global__ void kernel_func(unsigned int * thread_index_array, unsigned char *source_data_array, \

unsigned int * histogram_array, unsigned int *clock_counter_array)

{

// 计算线程号

unsigned int block_index = blockIdx.x + blockIdx.y * gridDim.x + blockIdx.z * gridDim.x * gridDim.y;

unsigned int thread_index = block_index * blockDim.x * blockDim.y * blockDim.z + \

threadIdx.x + threadIdx.y * blockDim.x + threadIdx.z * blockDim.x * blockDim.y;

// 总线程数

unsigned int thread_num = gridDim.x * gridDim.y * gridDim.z * blockDim.x * blockDim.y * blockDim.z;

// 记录线程号

thread_index_array[thread_index] = thread_index;

unsigned int counter_1, counter_2;

counter_1 = clock();

#if(1)

// 初始化共享内存统计数组

__shared__ unsigned int histogram_shared[hist_MAX_VALUE];

histogram_shared[thread_index % hist_MAX_VALUE] = 0;

__syncthreads();

// 对每个Block进行统计

unsigned int value = source_data_array[thread_index];

atomicAdd(&histogram_shared[value], 1);

__syncthreads();

// 将每个Block统计的共享显存数据 加到 全局显存变量中

atomicAdd(&histogram_array[thread_index % hist_MAX_VALUE], histogram_shared[thread_index % hist_MAX_VALUE]);

#else

unsigned int value = source_data_array[thread_index];

atomicAdd(&histogram_array[value], 1);

#endif

counter_2 = clock();

clock_counter_array[thread_index] = counter_1;

clock_counter_array[thread_index + thread_num] = counter_2;

}

参考:

1.《CUDA并行程序设计》机械工业出版社

2.NVIDIA官方原子操作说明文档

3.CUDA Toolkit Documation

4.CUDA Toolkit Documation Shared memory篇

5.GM107芯片白皮书 NVIDIA GeForce GTX 750 Ti Whitepaper