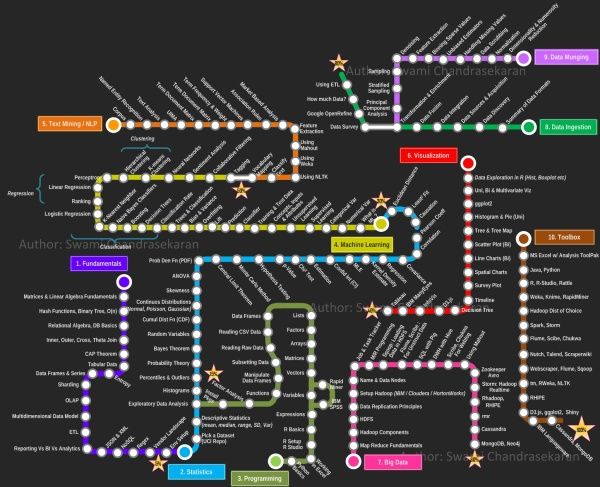

Data Mining Learning Roadmap

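Learning a technology should be tied to an industry; technology without industry context is a castle in the air. Technology, especially in computing, is broad and turns over quickly (ten years ago, doing web design alone was enough to start a company), and most people lack the time and energy to master every technical detail. Once combined with an industry, however, a technical skill can stand on its own: it helps you identify user pain points and must-have needs, and it lets you accumulate industry experience, so that applying Internet thinking across industry boundaries makes success more likely. Do not try to cover everything when learning technology, or you will lose your core competitiveness.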

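I. Data mining practitioners in China currently work in roughly three areas.

- 1) Data analyst: works in industries that hold their own data, such as e-commerce, finance, telecom, and consulting, doing business consulting and business intelligence and producing analysis reports.

- 2) Data mining engineer: implements and analyzes machine learning algorithms in big-data industries such as multimedia, e-commerce, search, and social networking.

- 3) Scientific research: studies new algorithms, efficiency improvements, and future applications at universities, research institutes, corporate research labs, and similar high-end research organizations.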

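II. The skills each area requires.

(1). Data analyst

- Needs a solid foundation in mathematical statistics; programming ability is not required.

- Needs to be fluent in mainstream data mining (or statistical analysis) tools such as SAS, SPSS, and Excel.

- Needs a deep understanding of all core data related to the industry, plus a cultivated sensitivity to data.

- Recommended classics: Probability and Mathematical Statistics, Statistics (the David Freedman edition is recommended), Business Modeling and Data Mining, Introduction to Data Mining, SAS Programming and Data Mining Business Cases, Clementine Data Mining Methods and Applications, Excel 2007 VBA Programmer's Reference, IBM SPSS Statistics 19 Statistical Procedures Companion, and others.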

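(2). Data mining engineer

- Needs to understand the principles and applications of mainstream machine learning algorithms.

- Needs to be familiar with at least one programming language (Python, C, C++, Java, Delphi, etc.).

- Needs to understand database principles and be fluent with at least one database (MySQL, SQL, DB2, Oracle, etc.); understanding how MapReduce works and being proficient with the Hadoop family of tools is even better.

- Recommended classics: Data Mining: Concepts and Techniques, Machine Learning in Action, Artificial Intelligence and Its Applications, An Introduction to Database Systems, Introduction to Algorithms, Web Data Mining, The Python Standard Library, Thinking in Java, Thinking in C++, Data Structures, and others.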
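
The MapReduce idea mentioned in this list can be sketched in a few lines of plain Python. This is only an illustration of the map, shuffle, and reduce phases on a single machine, not how Hadoop is actually invoked; the function names and the toy documents are assumptions made for the example.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine all values of one key into a single result."""
    return key, sum(values)


def word_count(documents):
    """Run map, shuffle, and reduce in sequence over all documents."""
    pairs = [pair for doc in documents for pair in map_phase(doc)]
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


# Hypothetical toy input, for illustration only.
docs = ["data mining", "mining data from data"]
counts = word_count(docs)
print(counts)
```

In a real cluster the map and reduce calls run on different machines and the shuffle moves data over the network; the structure of the computation is the same.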

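(3). Scientific research

- Needs to study the theoretical foundations of data mining in depth, including association rule mining (Apriori and FP-Tree), classification algorithms (C4.5, KNN, Logistic Regression, SVM, etc.), and clustering algorithms (K-means, Spectral Clustering). A good first goal is to thoroughly understand the use cases, strengths, and weaknesses of each of the top 10 data mining algorithms.

- Compared with SAS and SPSS, R (The R Project for Statistical Computing) suits researchers better: it is completely free, and its open community provides many add-on packages, which makes it a better fit for statistical computing and analysis research. It is not yet widely used in China, but it is strongly recommended.

- Can try to improve mainstream algorithms to make them faster and more efficient, for example by implementing an SVM cloud invocation platform on Hadoop in which a web project calls the Hadoop cluster.

- Needs to read papers from the world's leading conferences widely and deeply to track hot topics, such as KDD, ICML, IJCAI, AAAI (Association for the Advancement of Artificial Intelligence), and ICDM, as well as journals in data mining and related fields: ACM Transactions on Knowledge Discovery from Data, IEEE Transactions on Knowledge and Data Engineering, Journal of Machine Learning Research, IEEE Transactions on Pattern Analysis and Machine Intelligence, and others.

- Can try entering data mining competitions to build an all-round ability to solve real problems, such as those run by SIGKDD and Kaggle.

- Can try contributing code to open source projects such as Apache Mahout and myrrix (more interesting projects can be found on SourceForge or GitHub).

- Recommended classics: Machine Learning, Pattern Classification, The Nature of Statistical Learning Theory, Statistical Learning Methods, Data Mining: Practical Machine Learning Tools and Techniques, R in Action. English proficiency is a must for researchers: Machine Learning: A Probabilistic Perspective, Scaling up Machine Learning: Parallel and Distributed Approaches, Data Mining Using SAS Enterprise Miner: A Case Study Approach, Python for Data Analysis, and others.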
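
To make the association rule item above more concrete, here is a minimal sketch of the frequent-itemset part of Apriori in plain Python. It is a teaching sketch under stated assumptions, not a tuned implementation: the basket data is invented, and `min_support` is an absolute transaction count rather than a fraction.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {itemset: support_count} for every frequent itemset."""
    transactions = [frozenset(t) for t in transactions]
    # Level 1 candidates: every single item that occurs anywhere.
    candidates = {frozenset([item]) for t in transactions for item in t}
    frequent = {}
    k = 1
    while candidates:
        # Count how many transactions contain each candidate.
        counts = {c: sum(1 for t in transactions if c <= t) for c in candidates}
        level = {c: n for c, n in counts.items() if n >= min_support}
        frequent.update(level)
        # Join step: merge frequent k-itemsets into (k+1)-item candidates.
        k += 1
        joined = {a | b for a in level for b in level if len(a | b) == k}
        # Prune step: keep a candidate only if all its (k-1)-subsets are frequent.
        candidates = {
            c for c in joined
            if all(frozenset(s) in level for s in combinations(c, k - 1))
        }
    return frequent


# Hypothetical shopping baskets, for illustration only.
baskets = [
    {"milk", "bread"},
    {"milk", "diapers", "beer"},
    {"bread", "diapers", "beer"},
    {"milk", "bread", "diapers"},
    {"milk", "bread", "beer"},
]
result = apriori(baskets, min_support=3)
for itemset, support in sorted(result.items(), key=lambda kv: -kv[1]):
    print(sorted(itemset), support)
```

The prune step is what distinguishes Apriori from brute-force counting: an itemset can only be frequent if every one of its subsets is frequent, so most candidates are discarded before the data is scanned again.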

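III. Below are the reflections of a data mining engineer working in the telecom industry.

From the standpoint of real data mining project practice, communication skills and an interest in mining matter most. Interest makes you willing to dig deep; good communication skills let you understand the business problem correctly, translate it correctly into a mining problem, and express your intentions and ideas clearly to people from different specialties so that you win their understanding and support. I therefore consider communication skills and interest to be an individual's core competitiveness in data mining, and they are hard to learn; the other specialized knowledge can be learned by anyone and does not count as core competitiveness for personal development.

At this point many data warehouse experts, programmers, statisticians, and others may want to throw bricks at me. Sorry, I mean no offense: your specialties all matter to data mining, and everyone is part of one whole. But an individual has limited energy and time and cannot master all of these fields. In that situation, the core to choose, I think, should be data mining skills plus the relevant business ability. Consider an extreme example: a mini mining project, which one person who understands marketing and data mining should be able to handle. He does not know data warehousing, but plain Excel is enough to process up to 60,000 samples. He does not know professional presentation techniques, but as long as he can read the results himself, no presentation is needed. As said earlier, statistical skills should be mastered, and they matter a lot in a one-person mini project. He does not know programming, but professional mining tools and mining skills give him enough to work with. So in a mini project, one person with mining skills and marketing business ability can finish the job well, and can even mine endless different project ideas from a single data source according to business needs. Yet a pure data warehouse expert, a pure programmer, a pure presentation specialist, or even a pure mining-technology expert could not handle even this mini project alone. This also shows from another angle why communication skills matter: can such completely different specialties be integrated effectively and organically in a data mining project without good communication?

Data mining ability can only be improved and refined in the crucible of project practice, so learning mining by following a project is the most effective shortcut. People abroad who study mining start out by doing projects with their supervisor; it does not matter if you understand little at first, because the less you understand, the more clearly you know what to learn, and the faster and more effectively you learn. I do not know how data mining students in China study, but judging from some online forums, much of it is armchair theorizing, which wastes time and is inefficient.

In addition, the concept of data mining is currently quite muddled in China. Many BI offerings are limited to report display and simple statistical analysis, yet also call themselves data mining. Meanwhile, the industries in China that truly implement data mining at scale can be counted on one hand (banking, insurance, mobile telecom); applications elsewhere are small in scale. Many universities, for instance, have mining research topics and projects, but they are scattered and still exploratory. Still, I believe data mining has a bright future in China, because this is the inevitable course of development.

As for practical cases in mobile telecom: if you come from the mobile industry, you surely know a Chinese company called 华院分析 (Huayuan). (Disclaimer: I have no connection with this company; as a data miner I have looked at most of the self-proclaimed data mining service companies in China and found Huayuan quite good, more practical than many big companies with undeserved reputations.) Its business now covers analysis and mining projects at the vast majority of China's provincial mobile companies, and you should be able to find details with a web search. What impressed me most is that the company started from nothing in 2002: not knowing the field was no obstacle, as it taught itself while it began winning customers, and today it has spread across China's mobile telecom market, which I truly admire. At the beginning they processed data entirely in Excel and compared and selected among models by eye; you can imagine how hard that was.

As for concrete data mining applications in mobile telecom, there are too many to list: designing tariff plans, customer churn models, cross-selling models across services, analysis of customers' elasticity to discounts, customer segmentation models, customer life-cycle models, channel selection models, and fraud early-warning models. Remember to start from customer needs and from problems met in practice, and you will find plenty of mining projects in mobile telecom. Finally, a secret: once your data mining ability reaches a certain level, you will find that in any industry most data mining applications overlap and resemble one another, which makes things feel much easier.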

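IV. A skill map for becoming a data scientist. (Original: Data Science: How do I become a data scientist?)

If another succeeds with one effort, I will make ten; if another succeeds with ten, I will make a thousand. Whoever truly follows this path, though dull, will surely become bright; though weak, will surely become strong.

The following is excerpted from Quora.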

Before you begin, you need Multivariable Calculus, Linear Algebra, and Python.

If your math background is up to multivariable calculus and linear algebra, you'll have enough background to understand almost all of the probability / statistics / machine learning for the job.

Multivariate Calculus: https://www.quora.com/What-are-the-best-resources-for-mastering-multivariable-calculus

Numerical Linear Algebra / Computational Linear Algebra / Matrix Algebra: Linear Algebra

Multivariate calculus is useful for some parts of machine learning and a lot of probability. Linear / Matrix algebra is absolutely necessary for a lot of concepts in machine learning.

You also need some programming background to begin, preferably in Python. Most other things on this guide can be learned on the job (like random forests, pandas, A/B testing), but you can't get away without knowing how to program!

Python is the most important language for a data scientist to learn. Check out:

- Why is Python a language of choice for data scientists?

- Is Python the most important programming language to learn for aspiring data scientists & data miners?

To learn Python, check out How can I learn to program in Python?

Plug Yourself Into the Community

Check out Meetup to find data science groups that interest you! Attend an interesting talk, learn about data science live, and meet data scientists and other aspiring data scientists!

Start reading data science blogs and following influential data scientists!

- What are the best blogs about data?

- What is your source of machine learning and data science news? Why?

- Data Science: what are some best users/agencies to follow on Twitter, Facebook, G+, and LinkedIn?

- What are the best Twitter accounts about data?

Set up your tools

- Install Python, IPython, and related libraries (guide)

- Install R and RStudio (I would say that R is the second most important language. It's good to know both Python and R)

- Install Sublime Text

Learn to use your tools

- Learn R with swirl

- What's the best way to learn to use Sublime Text?

- What is the best way to learn SQL? (I don't think there's too much of a need to install it on your computer, but just learning the syntax will be helpful for the job)
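
One way to practice SQL syntax without installing a database server is Python's built-in `sqlite3` module. The sketch below is only a suggestion along those lines; the `calls` table, its columns, and its rows are hypothetical and exist purely for the exercise.

```python
import sqlite3

# An in-memory database disappears when the connection closes.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE calls (customer TEXT, plan TEXT, minutes INTEGER)")

# Hypothetical rows, for practice only.
conn.executemany(
    "INSERT INTO calls VALUES (?, ?, ?)",
    [
        ("a", "basic", 120),
        ("b", "basic", 80),
        ("c", "premium", 300),
        ("d", "premium", 500),
    ],
)

# Aggregate per plan: number of customers and average minutes.
rows = conn.execute(
    "SELECT plan, COUNT(*), AVG(minutes) FROM calls GROUP BY plan ORDER BY plan"
).fetchall()
conn.close()

for plan, n_customers, avg_minutes in rows:
    print(plan, n_customers, avg_minutes)
```

SQLite's dialect differs in places from MySQL, Oracle, and others, but `SELECT`, `WHERE`, `GROUP BY`, and `JOIN` carry over almost unchanged.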

Learn Probability and Statistics

Be sure to go through a course that involves heavy application in R or Python.

- Python Application: Think Stats (free pdf) (Python focus)

- R Applications: An Introduction to Statistical Learning (free pdf)(MOOC) (R focus)

- Print out a copy of The Only Probability Cheatsheet You'll Ever Need

Complete Harvard's Data Science Course

This course is developed in part by a fellow Quora user, Professor Joe Blitzstein.

Intro to the class

- What is it like to design a data science class?

- What is it like to take CS 109/Statistics 121 (Data Science) at Harvard?

Lectures and Slides

- (2013) Lecture Videos

- (2013) Slides

- (2014) Lecture Videos

- (2014) Slides

2013 Assignments

- Intro to Python, Numpy, Matplotlib (Homework 0) (solutions)

- Poll aggregation, web scraping, plotting, model evaluation, and forecasting (Homework 1) (solutions)

- Data prediction, manipulation, and evaluation (Homework 2) (solutions)

- Predictive modeling, model calibration, sentiment analysis(Homework 3) (solutions)

- Recommendation engines, Using mapreduce (Homework 4) (solutions)

- Network visualization and analysis (Homework 5) (solutions)

2014 Assignments

- Data manipulation, modeling, plotting (Homework 1)(solutions)

2013 Labs

- Lab 2: Web Scraping

- Lab 3: EDA, Pandas, Matplotlib

- Lab 4: Scikit-Learn, Regression, PCA

- Lab 5: Bias, Variance, Cross-Validation

- Lab 6: Bayes, Linear Regression, and Metropolis Sampling

- Lab 7: Gibbs Sampling

- Lab 8: MapReduce

- Lab 9: Networks

- Lab 10: Support Vector Machines

Do most of Kaggle's Getting Started and Playground Competitions

I would NOT recommend doing any of the prize-money competitions. They usually have datasets that are too large, complicated, or annoying, and are not good for learning (Kaggle.com).

Start by learning scikit-learn, playing around, reading through tutorials and forums at Data Science London + Scikit-learn for a simple, synthetic, binary classification task.

Next, play around some more and check out the tutorials for Titanic: Machine Learning from Disaster with a slightly more complicated binary classification task (with categorical variables, missing values, etc.)
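
The two data issues named here, missing values and categorical variables, can be illustrated without any library. The following is a minimal sketch assuming median imputation and one-hot encoding as the chosen treatments (other choices are equally valid); the rows are invented and are not real Titanic data.

```python
from statistics import median

# Hypothetical passenger rows; None marks a missing age.
rows = [
    {"age": 22.0, "embarked": "S"},
    {"age": None, "embarked": "C"},
    {"age": 38.0, "embarked": "Q"},
    {"age": 30.0, "embarked": "S"},
]


def impute_median(rows, column):
    """Replace missing values in `column` with the median of the known ones."""
    known = [r[column] for r in rows if r[column] is not None]
    fill = median(known)
    return [
        dict(r, **{column: fill if r[column] is None else r[column]})
        for r in rows
    ]


def one_hot(rows, column):
    """Replace a categorical column with one 0/1 column per category."""
    categories = sorted({r[column] for r in rows})
    encoded = []
    for r in rows:
        new = {k: v for k, v in r.items() if k != column}
        for c in categories:
            new[f"{column}_{c}"] = 1 if r[column] == c else 0
        encoded.append(new)
    return encoded


features = one_hot(impute_median(rows, "age"), "embarked")
for row in features:
    print(row)
```

In a real competition the fill value and the category list should be computed on the training set only and then reused on the test set, so that no information leaks from test data into the model.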

Afterwards, try some multi-class classification with Forest Cover Type Prediction.

Now, try a regression task Bike Sharing Demand that involves incorporating timestamps.
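
Incorporating timestamps usually means turning each one into numeric features a regression model can use. A small sketch follows, assuming the timestamps arrive as strings in `YYYY-MM-DD HH:MM:SS` form; that format and the chosen features are assumptions for illustration, not a statement about the competition's data.

```python
from datetime import datetime


def timestamp_features(ts):
    """Split one timestamp string into simple numeric features."""
    dt = datetime.strptime(ts, "%Y-%m-%d %H:%M:%S")
    return {
        "hour": dt.hour,
        "weekday": dt.weekday(),  # Monday is 0, Sunday is 6
        "month": dt.month,
        "is_weekend": int(dt.weekday() >= 5),
    }


# Hypothetical timestamp, for illustration only.
features = timestamp_features("2011-01-01 08:00:00")
print(features)
```

Hour of day and day of week tend to matter for demand-style targets because usage follows daily and weekly cycles; which features actually help is something to check by cross-validation.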

Try out some natural language processing with Sentiment Analysis on Movie Reviews

Finally, try out any of the other knowledge-based competitions that interest you!

Learn More

A/B Testing is just a rebranded version of what pharmaceutical companies have been doing for decades. Learn more about A/B testing here: The Ultimate Guide To A/B Testing - Smashing Magazine

Visualization - I would recommend picking up ggplot2 in R to make simple yet beautiful graphics, checking out The Visual Display of Quantitative Information ($), and just browsing DataIsBeautiful (/r/dataisbeautiful) and FlowingData for ideas and inspiration.

User Behavior - This set of blog posts looks useful and interesting - This Explains Everything » User Behavior

Do Side Projects

- What are some good "toy problems" in data science?

- How can I start building a recommendation engine?

- What are some ideas for a quick weekend Python project?

- What is a good measure of the influence of a Twitter user?

- Where can I find large datasets open to the public?

- What are some good algorithms for a prioritized inbox?

- What are some good data science projects?

Code in Public

Create public GitHub repositories, make a blog, and post your work, side projects, Kaggle solutions, insights, and thoughts! This helps you gain visibility, build a portfolio for your resume, and connect with other people working on the same tasks.

Check out more specific versions of this question:

- How do I become a data scientist as an undergrad?

- How do I become a data scientist, almost finishing school and without the necessary skills?

- How do I become a data scientist as a PhD student?

- How do I become a data scientist, while currently working in a different job?

- How can I apply for Data Scientist job without holding a PhD?

- How do I become a data scientist in India?

- How do I become a data scientist without going to college/having a degree?

Think like a Data Scientist

In addition to the concrete steps I listed above to develop the skillset of a data scientist, I include seven challenges below so you can learn to think like a data scientist and develop the right attitude to become one.

(1) Satiate your curiosity through data

As a data scientist you write your own questions and answers. Data scientists are naturally curious about the data that they're looking at, and are creative with ways to approach and solve whatever problem needs to be solved.

Much of data science is not the analysis itself, but discovering an interesting question and figuring out how to answer it.

Here are two great examples:

- Hilary: the most poisoned baby name in US history

- A Look at Fire Response Data

Challenge: Think of a problem or topic you're interested in and answer it with data!

(2) Read news with a skeptical eye

Much of the contribution of a data scientist (and why it's really hard to replace a data scientist with a machine) is that a data scientist will tell you what's important and what's spurious. This persistent skepticism is healthy in all sciences, and is especially necessary in a fast-paced environment where it's too easy to let a spurious result be misinterpreted.

You can adopt this mindset yourself by reading news with a critical eye. Many news articles have inherently flawed main premises. Try these two articles. Sample answers are available in the comments.

Easier: You Love Your iPhone. Literally.

Harder: Who predicted Russia’s military intervention?

Challenge: Do this every day when you encounter a news article. Comment on the article and point out the flaws.

(3) See data as a tool to improve consumer products

Visit a consumer internet product (preferably one that you know doesn't already do extensive A/B testing), and then think about its main funnel. Does it have a checkout funnel? A signup funnel? A virality mechanism? An engagement funnel?

Go through the funnel multiple times and hypothesize about different ways it could do better to increase a core metric (conversion rate, shares, signups, etc.). Design an experiment to verify if your suggested change can actually change the core metric.

Challenge: Share it with the feedback email for the consumer internet site!
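
Once such an experiment has run, one common way to judge whether the core metric really moved is a two-proportion z-test. The sketch below assumes that test is appropriate (large samples, independent users, a conversion-style metric); the visitor and conversion counts are made up.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF written with erf, then doubled for a two-sided test.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value


# Hypothetical experiment: control A versus changed funnel B.
z, p = two_proportion_z_test(conv_a=200, n_a=2000, conv_b=250, n_b=2000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value says the difference is unlikely under pure chance; it does not say the change is large enough to matter, so the effect size should be reported alongside it.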

(4) Think like a Bayesian

To think like a Bayesian, avoid the Base rate fallacy. This means to form new beliefs you must incorporate both newly observed information AND prior information formed through intuition and experience.

Checking your dashboard, user engagement numbers are significantly down today. Which of the following is most likely?

1. Users are suddenly less engaged

2. Feature of site broke

3. Logging feature broke

Even though explanation #1 completely explains the drop, #2 and #3 should be more likely because they have a much higher prior probability.
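
The reasoning above is Bayes' rule: the posterior is proportional to prior times likelihood. The sketch below works it through with invented numbers; both the priors and the likelihoods are assumptions chosen only to show the mechanics, not measurements.

```python
def posterior(priors, likelihoods):
    """Apply Bayes' rule over a set of mutually exclusive hypotheses."""
    unnormalized = {h: priors[h] * likelihoods[h] for h in priors}
    total = sum(unnormalized.values())
    return {h: value / total for h, value in unnormalized.items()}


# Hypothetical prior beliefs before looking at today's dashboard.
priors = {
    "users less engaged": 0.05,
    "site feature broke": 0.45,
    "logging broke": 0.50,
}
# Hypothetical chance that each cause would produce the observed drop.
likelihoods = {
    "users less engaged": 1.0,
    "site feature broke": 0.8,
    "logging broke": 0.9,
}

beliefs = posterior(priors, likelihoods)
for hypothesis, probability in sorted(beliefs.items(), key=lambda kv: -kv[1]):
    print(f"{hypothesis}: {probability:.2f}")
```

Even with a likelihood of 1.0, the first explanation stays the least probable because its prior is so low, which is exactly the base-rate point.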

You're in senior management at Tesla, and five of Tesla's Model S's have caught fire in the last five months. Which is more likely?

1. Manufacturing quality has decreased and Teslas should now be deemed unsafe.

2. Safety has not changed and fires in Tesla Model S's are still much rarer than their counterparts in gasoline cars.

While #1 is an easy explanation (and great for media coverage), your prior should be strong on #2 because of your regular quality testing. However, you should still be seeking information that can update your beliefs on #1 versus #2 (and still find ways to improve safety). Question for thought: what information should you seek?

Challenge: Identify the last time you committed the Base rate fallacy. Avoid committing the fallacy from now on.

(5) Know the limitations of your tools

“Knowledge is knowing that a tomato is a fruit, wisdom is not putting it in a fruit salad.” - Miles Kington

Knowledge is knowing how to perform an ordinary linear regression, wisdom is realizing how rarely it applies cleanly in practice.

Knowledge is knowing five different variations of K-means clustering, wisdom is realizing how rarely actual data can be cleanly clustered, and how poorly K-means clustering can work with too many features.

Knowledge is knowing a vast range of sophisticated techniques, but wisdom is being able to choose the one that will provide the most amount of impact for the company in a reasonable amount of time.

You may develop a vast range of tools while you go through your Coursera or EdX courses, but your toolbox is not useful until you know which tools to use.

Challenge: Apply several tools to a real dataset and discover the tradeoffs and limitations of each tool. Which tools worked best, and can you figure out why?
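
One reason distance-based tools such as K-means can struggle with many features is that distances between random points become nearly equal as dimensionality grows. The sketch below illustrates that effect on uniform random data; the point count, dimensions, and seed are arbitrary assumptions, and real datasets with structure may behave better.

```python
import random
from math import dist


def distance_contrast(dim, n_points=200, seed=0):
    """Relative gap between the farthest and nearest point from a random query."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    distances = [
        dist(query, [rng.random() for _ in range(dim)])
        for _ in range(n_points)
    ]
    nearest, farthest = min(distances), max(distances)
    return (farthest - nearest) / nearest


low = distance_contrast(dim=2)
high = distance_contrast(dim=1000)
print(f"contrast in 2 dimensions:    {low:.2f}")
print(f"contrast in 1000 dimensions: {high:.2f}")
```

When the contrast is close to zero, "nearest cluster center" carries little information, which is why dimensionality reduction or feature selection is often tried before clustering.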

(6) Teach a complicated concept

How does Richard Feynman distinguish which concepts he understands and which concepts he doesn't?

Feynman was a truly great teacher. He prided himself on being able to devise ways to explain even the most profound ideas to beginning students. Once, I said to him, "Dick, explain to me, so that I can understand it, why spin one-half particles obey Fermi-Dirac statistics." Sizing up his audience perfectly, Feynman said, "I'll prepare a freshman lecture on it." But he came back a few days later to say, "I couldn't do it. I couldn't reduce it to the freshman level. That means we don't really understand it." - David L. Goodstein, Feynman's Lost Lecture: The Motion of Planets Around the Sun

What distinguished Richard Feynman was his ability to distill complex concepts into comprehensible ideas. Similarly, what distinguishes top data scientists is their ability to cogently share their ideas and explain their analyses.

Check out Edwin Chen's answers to these questions for examples of cogently-explained technical concepts:

- Is there any summary of top models for the Netflix prize?

- What is a good explanation of Latent Dirichlet Allocation?

- What is Least Angle Regression and when should it be used?

Challenge: Teach a technical concept to a friend or on a public forum, like Quora or YouTube.

(7) Convince others about what's important

Perhaps even more important than a data scientist's ability to explain their analysis is their ability to communicate the value and potential impact of the actionable insights.

Certain tasks of data science will be commoditized as data science tools become better and better. New tools will make obsolete certain tasks such as writing dashboards, unnecessary data wrangling, and even specific kinds of predictive modeling.

However, the need for a data scientist to extract out and communicate what's important will never be made obsolete. With increasing amounts of data and potential insights, companies will always need data scientists (or people in data science-like roles), to triage all that can be done and prioritize tasks based on impact.

The data scientist's role in the company is to serve as the ambassador between the data and the company. The success of a data scientist is measured by how well he/she can tell a story and make an impact. Every other skill is amplified by this ability.

Challenge: Tell a story with statistics. Communicate the important findings in a dataset. Make a convincing presentation that your audience cares about.

Any feedback on this post is appreciated - in the comments, as a suggested edit, or in a private message.

If you liked this material, please consider following:

1) Me! William Chen

2) My personal blog, Storytelling with Statistics

3) Learn Data Science, where I am curating material on Quora that is relevant for anyone seeking to become a data scientist!

The best way to become a data scientist is to learn - and do - data science. There are many excellent courses and tools available online that can help you get there.

Here is an incredible list of resources compiled by Jonathan Dinu, Co-founder of Zipfian Academy, which trains data scientists and data engineers in San Francisco via immersive programs, fellowships, and workshops.

EDIT: I've had several requests for a permalink to this answer. See here: A Practical Intro to Data Science from Zipfian Academy

EDIT2: See also: "How to Become a Data Scientist" on SlideShare: http://www.slideshare.net/ryanor...

Environment

Python is a great programming language of choice for aspiring data scientists due to its general-purpose applicability, a gentle (or firm) learning curve, and, perhaps the most compelling reason, the rich ecosystem of resources and libraries actively used by the scientific community.

Development

When learning a new language in a new domain, it helps immensely to have an interactive environment to explore and to receive immediate feedback. IPython provides an interactive REPL which also allows you to integrate a wide variety of frameworks (including R) into your Python programs.

STATISTICS

Data scientists are better at software engineering than statisticians and better at statistics than any software engineer. As such, statistical inference underpins much of the theory behind data analysis and a solid foundation of statistical methods and probability serves as a stepping stone into the world of data science.

Courses

edX: Introduction to Statistics: Descriptive Statistics: A basic introductory statistics course.

Coursera Statistics, Making Sense of Data: An applied statistics course that teaches the complete pipeline of statistical analysis.

MIT: Statistical Thinking and Data Analysis: Introduction to probability, sampling, regression, common distributions, and inference.

While R is the de facto standard for performing statistical analysis, it has quite a steep learning curve and there are other areas of data science for which it is not well suited. To avoid learning a new language for a specific problem domain, we recommend trying to perform the exercises of these courses with Python and its numerous statistical libraries. You will find that much of the functionality of R can be replicated with NumPy, SciPy, Matplotlib, and pandas (the Python Data Analysis Library).

Books

Well-written books can be a great reference (and supplement) to these courses, and also provide a more independent learning experience. These may be useful if you already have some knowledge of the subject or just need to fill in some gaps in your understanding:

O'Reilly Think Stats: An Introduction to Probability and Statistics for Python programmers

Introduction to Probability: Textbook for Berkeley’s Stats 134 class, an introductory treatment of probability with complementary exercises.

Berkeley Lecture Notes, Introduction to Probability: Compiled lecture notes of above textbook, complete with exercises.

OpenIntro: Statistics: Introductory textbook with supplementary exercises and labs in an online portal.

Think Bayes: A simple introduction to Bayesian Statistics with Python code examples.

MACHINE LEARNING/ALGORITHMS

A solid base of Computer Science and algorithms is essential for an aspiring data scientist. Luckily there is a wealth of great resources online, and machine learning is one of the more lucrative (and advanced) skills of a data scientist.

Courses

Coursera Machine Learning: Stanford’s famous machine learning course taught by Andrew Ng.

Coursera: Computational Methods for Data Analysis: Statistical methods and data analysis applied to physical, engineering, and biological sciences.

MIT Data Mining: An introduction to the techniques of data mining and how to apply ML algorithms to garner insights.

edX: Introduction to Artificial Intelligence: The first half of Berkeley’s popular AI course, which teaches you to build autonomous agents that make decisions efficiently in stochastic and adversarial settings.

Introduction to Computer Science and Programming: MIT’s introductory course to the theory and application of Computer Science.

Books

UCI: A First Encounter with Machine Learning: An introduction to machine learning concepts focusing on the intuition and explanation behind why they work.

A Programmer's Guide to Data Mining: A web based book complete with code samples (in Python) and exercises.

Data Structures and Algorithms with Object-Oriented Design Patterns in Python: An introduction to computer science with code examples in Python — covers algorithm analysis, data structures, sorting algorithms, and object oriented design.

An Introduction to Data Mining: An interactive Decision Tree guide (with hyperlinked lectures) to learning data mining and ML.

Elements of Statistical Learning: One of the most comprehensive treatments of data mining and ML, often used as a university textbook.

Stanford: An Introduction to Information Retrieval: Textbook from a Stanford course on NLP and information retrieval with sections on text classification, clustering, indexing, and web crawling.

DATA INGESTION AND CLEANING

One of the most under-appreciated aspects of data science is the cleaning and munging of data that often represents the most significant time sink during analysis. While there is never a silver bullet for such a problem, knowing the right tools, techniques, and approaches can help minimize time spent wrangling data.
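As a small illustration of what this cleaning work tends to look like, the sketch below normalizes a messy CSV snippet: it trims stray whitespace, maps common missing-value markers to `None`, and drops blank and duplicate rows. The sample data, the `MISSING` marker set, and the `clean_rows` helper are all assumptions made for this example, not part of any tool listed here.

```python
import csv
import io

# Strings treated as "missing" (an assumption; adjust to your data source).
MISSING = {"", "na", "n/a", "null", "none"}


def clean_rows(raw_csv):
    """Parse CSV text, normalize cells, and drop blank and duplicate rows."""
    reader = csv.DictReader(io.StringIO(raw_csv))
    seen = set()
    cleaned = []
    for row in reader:
        record = {}
        for key, value in row.items():
            # Strip whitespace from header names and cell values.
            text = (value or "").strip()
            record[key.strip()] = None if text.lower() in MISSING else text
        if all(v is None for v in record.values()):
            continue  # skip rows with no usable data
        fingerprint = tuple(record.items())
        if fingerprint in seen:
            continue  # skip exact duplicates
        seen.add(fingerprint)
        cleaned.append(record)
    return cleaned


raw = """name, city ,age
 Alice ,Paris,30
Bob,N/A,
 Alice ,Paris,30
,,
"""

rows = clean_rows(raw)
for row in rows:
    print(row)
```

Real datasets need more than this (type conversion, encoding fixes, fuzzy duplicates), but even these few rules remove a surprising share of everyday noise.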

Courses

School of Data: A Gentle Introduction to Cleaning Data: A hands on approach to learning to clean data, with plenty of exercises and web resources.

Tutorials

Predictive Analytics: Data Preparation: An introduction to the concepts and techniques of sampling data, accounting for erroneous values, and manipulating the data to transform it into acceptable formats.

Tools

OpenRefine (formerly Google Refine): A powerful tool for working with messy data, cleaning, transforming, extending it with web services, and linking to databases. Think Excel on steroids.

Data Wrangler: Stanford research project that provides an interactive tool for data cleaning and transformation.

sed - an Introduction and Tutorial: “The ultimate stream editor,” used to process files with regular expressions often used for substitution.

awk - An Introduction and Tutorial: “Another cornerstone of UNIX shell programming” — used for processing rows and columns of information.
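For readers who have not used sed or awk, the sketch below does the two jobs they are best known for, using Python's standard library: a regular-expression substitution over each line (sed style) and a per-column aggregation over whitespace-separated fields (awk style). The log lines and their field layout are invented for illustration.

```python
import re

# Hypothetical log: date, user, amount.
log_lines = [
    "2014-01-03 alice 120",
    "2014-01-03 bob 80",
    "2014-01-04 alice 45",
]

# sed-style substitution: rewrite YYYY-MM-DD dates as DD/MM/YYYY.
date_pattern = re.compile(r"(\d{4})-(\d{2})-(\d{2})")
rewritten = [date_pattern.sub(r"\3/\2/\1", line) for line in log_lines]

# awk-style column processing: sum the third field for each value of the second.
totals = {}
for line in log_lines:
    fields = line.split()
    user, amount = fields[1], int(fields[2])
    totals[user] = totals.get(user, 0) + amount

print(rewritten)
print(totals)
```

The command-line tools remain handy for quick one-off edits. The Python version is easier to extend once the logic grows.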

VISUALIZATION

The most insightful data analysis is useless unless you can effectively communicate your results. The art of visualization has a long history, and although it is one of the most qualitative aspects of data science, its methods and tools are well documented.

Courses

UC Berkeley Visualization: Graduate class on the techniques and algorithms for creating effective visualizations.

Rice University Data Visualization: A treatment of data visualization and how to meaningfully present information from the perspective of Statistics.

Harvard University Introduction to Computing, Modeling, and Visualization: Connects the concepts of computing with data to the process of interactively visualizing results.

Books

Tufte: The Visual Display of Quantitative Information: Not freely available, but perhaps the most influential text for the subject of data visualization. A classic that defined the field.

Tutorials

School of Data: From Data to Diagrams: A gentle introduction to plotting and charting data, with exercises.

Predictive Analytics: Overview and Data Visualization: An introduction to the process of predictive modeling, and a treatment of the visualization of its results.

Tools

D3.js: Data-Driven Documents — Declarative manipulation of DOM elements with data dependent functions (with Python port).

Vega: A visualization grammar built on top of D3 for declarative visualizations in JSON. Released by the dream team at Trifacta, it provides a higher level abstraction than D3 for creating canvas or SVG based graphics.

Rickshaw: A charting library built on top of D3 with a focus on interactive time series graphs.

Modest Maps: A lightweight library with a simple interface for working with maps in the browser (with ports to multiple languages).

Chart.js: Very simple (only six charts) HTML5 canvas based plotting library with beautiful styling and animation.

COMPUTING AT SCALE

When you start operating with data at the scale of the web (or greater), the fundamental approach and process of analysis must change. To combat the ever increasing amount of data, Google developed the MapReduce paradigm. This programming model has become the de facto standard for large scale batch processing since the 2007 release of Apache Hadoop, the open-source MapReduce framework.
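The MapReduce model is easiest to grasp from the classic word-count example. The sketch below runs the three phases (map, shuffle, reduce) in a single Python process. It is a toy illustration of the programming model only, not of Hadoop's API, and all function names are chosen for this example.

```python
from collections import defaultdict


def map_phase(document):
    """Emit a (word, 1) pair for every word in one document."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs):
    """Group emitted values by key, as the framework does between phases."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Combine all values for one key into a single result."""
    return key, sum(values)


def word_count(documents):
    """Run map, shuffle, and reduce over a list of documents."""
    pairs = (pair for doc in documents for pair in map_phase(doc))
    grouped = shuffle(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


counts = word_count(["big data big ideas", "data at scale"])
print(counts)
```

In a real cluster the map and reduce calls run on many machines and the shuffle moves data across the network. The appeal of the model is that the user only writes the two small functions.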

Courses

UC Berkeley: Analyzing Big Data with Twitter: A course — taught in close collaboration with Twitter — that focuses on the tools and algorithms for data analysis as applied to Twitter microblog data (with project based curriculum).

Coursera: Web Intelligence and Big Data: An introduction to dealing with large quantities of data from the web; how the tools and techniques for acquiring, manipulating, querying, and analyzing data change at scale.

CMU: Machine Learning with Large Datasets: A course on scaling machine learning algorithms on Hadoop to handle massive datasets.

U of Chicago: Large Scale Learning: A treatment of handling large datasets through dimensionality reduction, classification, feature parametrization, and efficient data structures.

UC Berkeley: Scalable Machine Learning: A broad introduction to the systems, algorithms, models, and optimizations necessary at scale.

Books

Mining Massive Datasets: Stanford course resources on large scale machine learning and MapReduce with accompanying book.

Data-Intensive Text Processing with MapReduce: An introduction to algorithms for the indexing and processing of text that teaches you to “think in MapReduce.”

Hadoop: The Definitive Guide: The most thorough treatment of the Hadoop framework, a great tutorial and reference alike.

Programming Pig: An introduction to the Pig framework for programming data flows on Hadoop.

PUTTING IT ALL TOGETHER

Data Science is an inherently multidisciplinary field that requires a myriad of skills to be a proficient practitioner. The necessary curriculum has not fit into traditional course offerings, but as awareness of the need for individuals who have such abilities is growing, we are seeing universities and private companies creating custom classes.

Courses

UC Berkeley: Introduction to Data Science: A course taught by Jeff Hammerbacher and Mike Franklin that highlights each of the varied skills that a Data Scientist must be proficient with.

How to Process, Analyze, and Visualize Data: A lab oriented course that teaches you the entire pipeline of data science; from acquiring datasets and analyzing them at scale to effectively visualizing the results.

Coursera: Introduction to Data Science: A tour of the basic techniques for Data Science including SQL and NoSQL databases, MapReduce on Hadoop, ML algorithms, and data visualization.

Columbia: Introduction to Data Science: A very comprehensive course that covers all aspects of data science, with a humanistic treatment of the field.

Columbia: Applied Data Science (with book): Another Columbia course — teaches applied software development fundamentals using real data, targeted towards people with mathematical backgrounds.

Coursera: Data Analysis (with notes and lectures): An applied statistics course that covers algorithms and techniques for analyzing data and interpreting the results to communicate your findings.

Books

An Introduction to Data Science: The companion textbook to Syracuse University’s flagship course for their new Data Science program.

Tutorials

Kaggle: Getting Started With Python For Data Science: A guided tour of setting up a development environment, an introduction to making your first competition submission, and validating your results.

CONCLUSION

Data science is an infinitely complex field, and this is just the beginning.

If you want to get your hands dirty and gain experience working with these tools in a collaborative environment, check out our programs at http://zipfianacademy.com.

There's also a great SlideShare summarizing these skills: How to Become a Data Scientist

You're also invited to connect with us on Twitter @zipfianacademy and let us know if you want to learn more about any of these topics.

Here is an incredible list of resources compiled by Jonathan Dinu, Co-founder of Zipfian Academy, which trains data scientists and data engineers in San Francisco via immersive programs, fellowships, and workshops.

EDIT: I've had several requests for a permalink to this answer. See here: A Practical Intro to Data Science from Zipfian Academy

EDIT2: See also: "How to Become a Data Scientist" on SlideShare: http://www.slideshare.net/ryanor...

Environment

Python is a great language of choice for aspiring data scientists because of its general-purpose applicability, its gentle (or firm) learning curve, and, perhaps the most compelling reason, the rich ecosystem of resources and libraries actively used by the scientific community.
