Spark Streaming Real-Time Stream Processing Project 10: Developing a Log Generator and Outputting Logs with log4j

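Spark Streaming Real-Time Stream Processing Project 1: Learning Flume, a Distributed Log Collection Framework

Spark Streaming Real-Time Stream Processing Project 2: Learning Kafka, a Distributed Message Queue

Spark Streaming Real-Time Stream Processing Project 3: Integrating Flume and Kafka for Real-Time Data Collection

Spark Streaming Real-Time Stream Processing Project 4: Setting Up the Hands-On Environment

Spark Streaming Real-Time Stream Processing Project 5: Getting Started with Spark Streaming

Spark Streaming Real-Time Stream Processing Project 6: Spark Streaming in Practice, Part 1

Spark Streaming Real-Time Stream Processing Project 7: Spark Streaming in Practice, Part 2

Spark Streaming Real-Time Stream Processing Project 8: Integrating Spark Streaming with Flume

Spark Streaming Real-Time Stream Processing Project 9: Integrating Spark Streaming with Kafka in Practice

Spark Streaming Real-Time Stream Processing Project 10: Developing a Log Generator and Outputting Logs with log4j

Spark Streaming Real-Time Stream Processing Project 11: Comprehensive Hands-On Project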

Source code

Developing the log generator

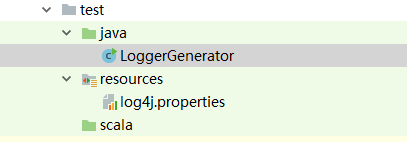

Create a new file:

LoggerGenerator.java:

import org.apache.log4j.Logger;

/**
 * @author YuZhansheng
 * @desc Simulates log generation
 * @create 2019-02-25 9:56
 */
public class LoggerGenerator {

    // log4j 1.x logger; where the output goes is decided by log4j.properties
    private static final Logger logger = Logger.getLogger(LoggerGenerator.class.getName());

    public static void main(String[] args) throws InterruptedException {
        int index = 0;
        // Emit one INFO line per second, forever
        while (true) {
            Thread.sleep(1000);
            logger.info("current value is: " + index++);
        }
    }
}
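To see what each line of console output looks like, the ConversionPattern in the log4j.properties shown next (%d{yyyy-MM-dd HH:mm:ss,SSS} [%t] [%c] [%p] - %m%n) can be imitated with plain JDK classes. This is a sketch, not log4j. PatternSketch and format are hypothetical names, and the code handles only the five fields this particular pattern uses.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

// Hypothetical helper: imitates the log4j 1.x pattern
// "%d{yyyy-MM-dd HH:mm:ss,SSS} [%t] [%c] [%p] - %m%n" with the JDK only (no log4j).
final class PatternSketch {
    // Same date layout as %d{yyyy-MM-dd HH:mm:ss,SSS}; the comma is a literal
    private static final DateTimeFormatter DATE =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss,SSS");

    // timestamp [thread] [logger name] [level] - message, then %n (line separator)
    static String format(LocalDateTime time, String thread, String logger,
                         String level, String message) {
        return DATE.format(time) + " [" + thread + "] [" + logger + "] ["
                + level + "] - " + message + System.lineSeparator();
    }

    public static void main(String[] args) {
        int index = 0;
        // Three lines instead of the generator's endless loop
        for (int i = 0; i < 3; i++) {
            System.out.print(format(LocalDateTime.now(), Thread.currentThread().getName(),
                    "LoggerGenerator", "INFO", "current value is: " + index++));
        }
    }
}
```

For example, format(LocalDateTime.of(2019, 2, 25, 10, 53, 49, 741_000_000), "main", "LoggerGenerator", "INFO", "current value is: 0") yields 2019-02-25 10:53:49,741 [main] [LoggerGenerator] [INFO] - current value is: 0 followed by a line separator.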

log4j.properties:

log4j.rootLogger=INFO, stdout
log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.target = System.out
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss,SSS} [%t] [%c] [%p] - %m%n

Collecting the log4j output with Flume

The key to using Flume is writing its configuration file.

streaming.conf

agent1.sources=avro-source
agent1.channels=logger-channel
agent1.sinks=log-sink

# Define the source: receive events over Avro on port 41414
agent1.sources.avro-source.type=avro
agent1.sources.avro-source.bind=0.0.0.0
agent1.sources.avro-source.port=41414

# Define the channel: buffer events in memory
agent1.channels.logger-channel.type=memory

# Define the sink: print events to the console
agent1.sinks.log-sink.type=logger

# Wire the components together
agent1.sources.avro-source.channels=logger-channel
agent1.sinks.log-sink.channel=logger-channel
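The configuration above describes a three-stage pipeline. As a rough mental model only (plain JDK, not Flume's API; AgentSketch, sourceReceive and sinkDrain are hypothetical names), the Avro source puts events into a memory channel, and the logger sink takes them out of the channel and prints them. A bounded queue stands in for the channel, because Flume's memory channel also holds a bounded number of events (its capacity defaults to 100).

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Toy model of agent1: avro-source -> logger-channel -> log-sink.
// Hypothetical class, not Flume's API; a bounded queue stands in for the memory channel.
final class AgentSketch {
    // Stand-in for logger-channel (type=memory): events are buffered in RAM only
    private final BlockingQueue<String> channel;

    AgentSketch(int capacity) {
        this.channel = new ArrayBlockingQueue<>(capacity);
    }

    // Stand-in for avro-source: returns false if the channel is full
    // and the event could not be accepted
    boolean sourceReceive(String event) {
        return channel.offer(event);
    }

    // Stand-in for log-sink (type=logger): takes everything buffered and prints it
    List<String> sinkDrain() {
        List<String> taken = new ArrayList<>();
        channel.drainTo(taken);
        for (String event : taken) {
            System.out.println("Event: " + event);
        }
        return taken;
    }

    public static void main(String[] args) {
        AgentSketch agent = new AgentSketch(100); // 100 mirrors the memory channel's default capacity
        agent.sourceReceive("value is: 0");
        agent.sourceReceive("value is: 1");
        agent.sinkDrain();
    }
}
```

The channel decouples the two sides. The source can keep accepting events while the sink is slow, up to the channel's capacity. Because the memory channel lives only in RAM, buffered events are lost if the agent dies.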

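Start the agent with the command below. Here -c points to Flume's configuration directory, -f to the file above, -n must match the agent name used as the property prefix (agent1), and -Dflume.root.logger=INFO,console sends Flume's own log, including the logger sink's output, to the console: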

flume-ng agent -c /soft/flume1.6/conf/ -f /soft/flume1.6/conf/streaming.conf -n agent1 -Dflume.root.logger=INFO,console

To connect log4j to Flume, add a Flume appender to log4j.properties and attach it to the root logger:

log4j.rootLogger=INFO, stdout, flume
log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.target = System.out
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss,SSS} [%t] [%c] [%p] - %m%n

# Flume appender: sends each log event to the Avro source of agent1
# (Hostname/Port must match the host and port of avro-source)
log4j.appender.flume = org.apache.flume.clients.log4jappender.Log4jAppender
log4j.appender.flume.Hostname = hadoop0
log4j.appender.flume.Port = 41414
log4j.appender.flume.UnsafeMode = true
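UnsafeMode = true matters when the Flume agent is down. According to the Flume user guide, the appender then does not throw exceptions when it fails to send events, so the application keeps running and those events are lost. The sketch below models only that decision. UnsafeModeSketch and Sender are hypothetical stand-ins, not the real Log4jAppender classes.

```java
import java.io.IOException;

// Hypothetical model of the UnsafeMode switch; not the real Log4jAppender.
final class UnsafeModeSketch {
    // Hypothetical transport to the Avro source (hadoop0:41414 in the config above)
    interface Sender {
        void send(String message) throws IOException;
    }

    private final Sender sender;
    private final boolean unsafeMode;

    UnsafeModeSketch(Sender sender, boolean unsafeMode) {
        this.sender = sender;
        this.unsafeMode = unsafeMode;
    }

    // Returns true if the event was handed over, false if it was dropped (unsafe mode only)
    boolean append(String message) {
        try {
            sender.send(message);
            return true;
        } catch (IOException e) {
            if (unsafeMode) {
                // Report and carry on: the application is not interrupted
                System.err.println("Flume unreachable, event dropped: " + e.getMessage());
                return false;
            }
            // Safe mode: the failure surfaces to the code that logged the message
            throw new IllegalStateException("Flume append failed", e);
        }
    }
}
```

The trade-off is availability versus completeness. UnsafeMode keeps LoggerGenerator alive even if it starts before the agent, at the cost of losing whatever it logs in the meantime.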

Verification:

After starting LoggerGenerator, the following error appears:

log4j:ERROR Could not instantiate class [org.apache.flume.clients.log4jappender.Log4jAppender].

java.lang.ClassNotFoundException: org.apache.flume.clients.log4jappender.Log4jAppender

The fix is to add one JAR, the Flume log4j appender, as a dependency:

groupId: org.apache.flume.flume-ng-clients

artifactId: flume-ng-log4jappender

version: 1.6.0

Run it again, and the Flume console shows output like this:

2019-02-25 10:53:49,741 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:94)] Event: { headers:{flume.client.log4j.timestamp=1551063229738, flume.client.log4j.logger.name=LoggerGenerator, flume.client.log4j.log.level=20000, flume.client.log4j.message.encoding=UTF8} body: 63 75 72 72 65 6E 74 20 76 61 6C 75 65 20 69 73 current value is }
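The headers in the event above are added by the log4j appender. The sketch below rebuilds the same map with plain JDK types. The header names are copied from that output, and the level value is log4j 1.x's numeric level (INFO is 20000, which is why log.level=20000 appears). Log4jHeadersSketch, levelToInt and headers are hypothetical names. The timestamp header is in epoch milliseconds: 1551063229738 is 2019-02-25T02:53:49.738Z, which is 10:53:49.738 at UTC+8, a few milliseconds before the sink logged the event.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch of the event headers seen in the logger sink output;
// the header names are copied from that output.
final class Log4jHeadersSketch {
    // Numeric values of log4j 1.x levels (what Level.toInt() returns)
    static int levelToInt(String level) {
        switch (level) {
            case "TRACE": return 5000;
            case "DEBUG": return 10000;
            case "INFO":  return 20000;
            case "WARN":  return 30000;
            case "ERROR": return 40000;
            case "FATAL": return 50000;
            default: throw new IllegalArgumentException("Unknown level: " + level);
        }
    }

    // Builds the four headers visible in the output above
    static Map<String, String> headers(long timestampMillis, String loggerName, String level) {
        Map<String, String> h = new LinkedHashMap<>();
        h.put("flume.client.log4j.timestamp", Long.toString(timestampMillis));
        h.put("flume.client.log4j.logger.name", loggerName);
        h.put("flume.client.log4j.log.level", Integer.toString(levelToInt(level)));
        h.put("flume.client.log4j.message.encoding", "UTF8");
        return h;
    }
}
```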

Another problem: the number after "current value is" does not appear!

Let's change the program to drop the word "current" (logger.info("value is: " + index++)), run it again, and look at the result.

2019-02-25 10:58:03,716 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.LoggerSink.process(LoggerSink.java:94)] Event: { headers:{flume.client.log4j.timestamp=1551063483244, flume.client.log4j.logger.name=LoggerGenerator, flume.client.log4j.log.level=20000, flume.client.log4j.message.encoding=UTF8} body: 76 61 6C 75 65 20 69 73 3A 20 30 value is: 0 }

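This time the number shows up. The data was never lost, though. The logger sink prints at most the first 16 bytes of each event body, and "current value is" is exactly 16 bytes, whereas "value is: 0" is only 11. Shortening the message therefore only works around the console display and does not change the pipeline. Later Flume releases let the logger sink raise this limit through a maxBytesToLog setting.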
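The truncation can be reproduced outside Flume. This sketch imitates the body display seen in the two outputs above (space-separated hex bytes, then the decoded text) with a 16-byte cap. BodyDumpSketch and dump are hypothetical names, and this is not Flume's own formatting code.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical sketch: show only the first maxBytes of an event body,
// as hex followed by the decoded text (imitating the output above, not Flume's code).
final class BodyDumpSketch {
    static String dump(byte[] body, int maxBytes) {
        int n = Math.min(body.length, maxBytes);
        StringBuilder hex = new StringBuilder();
        for (int i = 0; i < n; i++) {
            if (i > 0) hex.append(' ');
            hex.append(String.format("%02X", body[i] & 0xFF)); // two upper-case hex digits per byte
        }
        // Decode only the bytes that were kept
        String text = new String(body, 0, n, StandardCharsets.UTF_8);
        return hex + " " + text;
    }

    public static void main(String[] args) {
        byte[] before = "current value is: 0".getBytes(StandardCharsets.UTF_8);
        byte[] after = "value is: 0".getBytes(StandardCharsets.UTF_8);
        System.out.println(before.length + " bytes -> " + dump(before, 16));
        System.out.println(after.length + " bytes -> " + dump(after, 16));
    }
}
```

Running main prints the 19-byte message cut to its first 16 bytes (63 75 72 72 65 6E 74 20 76 61 6C 75 65 20 69 73 current value is) and the 11-byte message in full (76 61 6C 75 65 20 69 73 3A 20 30 value is: 0). These match the two Flume outputs above.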

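For now we only print the data to the console, which is of no practical use by itself. During development, though, this step matters. Writing a lot of code in one go makes debugging hard, so it is better to build the pipeline one step at a time rather than all at once. Next, we sink the data collected by Flume into Kafka, connecting Flume and Kafka.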

Kafka depends on ZooKeeper, so start ZooKeeper first.

Command to list all Kafka topics: ./kafka-topics.sh --list --zookeeper hadoop0:2181

Command to create the topic: ./kafka-topics.sh --create --zookeeper hadoop0:2181 --replication-factor 1 --partitions 1 --topic streamingtopic

Then change the Flume configuration so that the sink writes to Kafka instead of the console:

agent1.sources=avro-source
agent1.channels=logger-channel
agent1.sinks=kafka-sink

# Define the source
agent1.sources.avro-source.type=avro
agent1.sources.avro-source.bind=0.0.0.0
agent1.sources.avro-source.port=41414

# Define the channel
agent1.channels.logger-channel.type=memory

# Define the sink: write events to the Kafka topic streamingtopic
agent1.sinks.kafka-sink.type=org.apache.flume.sink.kafka.KafkaSink
agent1.sinks.kafka-sink.topic=streamingtopic
agent1.sinks.kafka-sink.brokerList=hadoop0:9092,hadoop1:9092,hadoop2:9092,hadoop3:9092
agent1.sinks.kafka-sink.requiredAcks=1
agent1.sinks.kafka-sink.batchSize=20

# Wire the components together
agent1.sources.avro-source.channels=logger-channel
agent1.sinks.kafka-sink.channel=logger-channel
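With batchSize=20, the Kafka sink takes up to 20 events from the channel per transaction and sends them to Kafka together. requiredAcks=1 means only the partition leader has to acknowledge a write. The toy model below covers only the batching part (plain JDK, hypothetical BatchSketch class, not Flume code). In this model a batch closes when it is full or when the channel is empty, so at LoggerGenerator's rate of one event per second most batches would hold a single event.

```java
import java.util.ArrayList;
import java.util.ArrayDeque;
import java.util.List;
import java.util.Queue;

// Hypothetical toy model of batchSize: drain a channel in batches of at most batchSize.
final class BatchSketch {
    static List<List<String>> drainInBatches(Queue<String> channel, int batchSize) {
        List<List<String>> batches = new ArrayList<>();
        while (!channel.isEmpty()) {
            List<String> batch = new ArrayList<>(batchSize);
            // Stop at batchSize or when the channel runs dry, whichever comes first
            while (batch.size() < batchSize && !channel.isEmpty()) {
                batch.add(channel.poll());
            }
            batches.add(batch); // one send to Kafka per batch in this model
        }
        return batches;
    }

    public static void main(String[] args) {
        Queue<String> channel = new ArrayDeque<>();
        for (int i = 0; i < 45; i++) {
            channel.add("value is: " + i);
        }
        for (List<String> batch : drainInBatches(channel, 20)) {
            System.out.println("batch of " + batch.size());
        }
    }
}
```

Larger batches mean fewer round trips to the brokers, at the cost of latency whenever a batch has to wait to fill up.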

Starting Flume then fails with:

2019-02-25 15:05:30,168 (lifecycleSupervisor-1-1) [ERROR - org.apache.flume.lifecycle.LifecycleSupervisor$MonitorRunnable.run(LifecycleSupervisor.java:253)] Unable to start SinkRunner: { policy:org.apache.flume.sink.DefaultSinkProcessor@713bf8d1 counterGroup:{ name:null counters:{} } } - Exception follows.

java.lang.NoClassDefFoundError: scala/collection/GenTraversableOnce$class

at kafka.utils.Pool.

at kafka.producer.ProducerStatsRegistry$.

at kafka.producer.ProducerStatsRegistry$.

at kafka.producer.async.DefaultEventHandler.

Cause:

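A JAR compatibility problem in Flume's lib directory. While working on the Spark Streaming part earlier, I had replaced scala-library-2.10.1.jar there with scala-library-2.11.8.jar. The Scala-based Kafka producer classes used by the Flume 1.6 Kafka sink (kafka.producer.* in the stack trace) are built for Scala 2.10, and Scala 2.10 and 2.11 are not binary compatible, so the error occurs.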

Fix: copy scala-library-2.10.3.jar into /soft/flume1.6/lib with cp scala-library-2.10.3.jar /soft/flume1.6/lib. I verified that it also works with scala-library-2.11.8.jar and scala-library-2.10.3.jar both present.
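When two scala-library JARs share a lib directory, it helps to check which one actually supplies a given class. This hypothetical diagnostic class (WhichJar, plain JDK) loads a class by name without initializing it and prints where it came from.

```java
import java.security.CodeSource;

// Hypothetical diagnostic helper: which JAR (if any) supplies a class?
final class WhichJar {
    static String locate(String className) {
        try {
            // initialize=false: load the class without running its static initializers
            Class<?> cls = Class.forName(className, false, WhichJar.class.getClassLoader());
            CodeSource source = cls.getProtectionDomain().getCodeSource();
            if (source == null || source.getLocation() == null) {
                return "JDK / bootstrap class path"; // core JDK classes report no code source
            }
            return source.getLocation().toString(); // e.g. the URL of the JAR file
        } catch (ClassNotFoundException | LinkageError e) {
            return "not found: " + e;
        }
    }

    public static void main(String[] args) {
        for (String name : args) {
            System.out.println(name + " -> " + locate(name));
        }
    }
}
```

For example, compile it and run java -cp "/soft/flume1.6/lib/*:." WhichJar 'scala.collection.GenTraversableOnce$class'. Two caveats apply. The JVM does not specify the order in which JARs matched by a lib/* wildcard are searched, so with two scala-library versions present the winner is not guaranteed. This command also builds its own class path, which may differ from the one the flume-ng script assembles.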

On another machine, start a consumer to read the data from Kafka: ./kafka-console-consumer.sh --zookeeper localhost:2181 --topic streamingtopic --from-beginning. The data appears in its output, so this integration step works.

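Printing the data from a Kafka consumer to the console is still not useful by itself. The next step is to connect Kafka to Spark Streaming and pass the data from Kafka to Spark Streaming for processing.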

Integrating Spark Streaming with Kafka using the Direct approach:

import org.apache.kafka.clients.consumer.ConsumerRecord
import org.apache.kafka.common.serialization.StringDeserializer
import org.apache.spark.SparkConf
import org.apache.spark.streaming.dstream.{DStream, InputDStream}
import org.apache.spark.streaming.kafka010.KafkaUtils
import org.apache.spark.streaming.{Seconds, StreamingContext}
import org.apache.spark.streaming.kafka010.LocationStrategies.PreferConsistent
import org.apache.spark.streaming.kafka010.ConsumerStrategies.Subscribe

/**
 * @author YuZhansheng
 * @desc Spark Streaming + Kafka, approach 2: Direct integration
 * @create 2019-02-23 11:28
 */
object KafkaDirectWordCount {

  def main(args: Array[String]): Unit = {
    // Local mode with two threads, 5-second batches
    val sparkConf = new SparkConf().setAppName("KafkaDirectWordCount").setMaster("local[2]")
    val ssc = new StreamingContext(sparkConf, Seconds(5))

    // Comma-separated topic list and the Kafka brokers
    val topics: String = "streamingtopic"
    val topicarr = topics.split(",")
    val brokers = "hadoop0:9092,hadoop1:9092,hadoop2:9092,hadoop3:9092"

    // Auto commit is off and nothing commits offsets in this demo
    val kafkaParams: Map[String, Object] = Map[String, Object](
      "bootstrap.servers" -> brokers,
      "key.deserializer" -> classOf[StringDeserializer],
      "value.deserializer" -> classOf[StringDeserializer],
      "group.id" -> "test",
      "auto.offset.reset" -> "latest",
      "enable.auto.commit" -> (false: java.lang.Boolean)
    )

    // Direct stream: no receiver, one Spark partition per Kafka partition
    val kafka_streamDStream: InputDStream[ConsumerRecord[String, String]] =
      KafkaUtils.createDirectStream[String, String](
        ssc, PreferConsistent, Subscribe[String, String](topicarr, kafkaParams))

    // (offset, partition, value) -> one (offset, partition, word) per word -> count per key
    val resDStream: DStream[((Long, Int, String), Int)] = kafka_streamDStream
      .map(record => (record.offset(), record.partition(), record.value()))
      .flatMap(t => t._3.split(" ").map(word => (t._1, t._2, word)))
      .map(k => ((k._1, k._2, k._3), 1))
      .reduceByKey(_ + _)

    resDStream.print()

    ssc.start()
    ssc.awaitTermination()
  }
}

Note: the above was tested locally. First start LoggerGenerator in IDEA so that it keeps producing logs, then start the Flume agent to collect them. Flume sinks the collected logs into Kafka, and finally Spark Streaming consumes the data from Kafka.
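To make the per-batch logic of KafkaDirectWordCount concrete, here is the same chain (map, flatMap, map, reduceByKey) applied to a plain Java list, with no Spark or Kafka involved. Rec is a hypothetical stand-in for ConsumerRecord that holds only offset, partition and value. Keys are rendered like the Scala tuples that resDStream.print() shows. Because the offset is part of the key, the counts are per message rather than per batch. Each log line has its own offset, so a word gets a count above 1 only if it repeats within one line.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Plain-Java model of one KafkaDirectWordCount batch (no Spark, no Kafka).
final class DirectWordCountSketch {
    // Hypothetical stand-in for ConsumerRecord with only the fields the Scala code reads
    static final class Rec {
        final long offset;
        final int partition;
        final String value;

        Rec(long offset, int partition, String value) {
            this.offset = offset;
            this.partition = partition;
            this.value = value;
        }
    }

    // Returns counts keyed like the Scala tuple key, e.g. "(0,0,value)"
    static Map<String, Integer> countBatch(List<Rec> batch) {
        return batch.stream()
                // flatMap: one (offset,partition,word) key per space-separated word
                .flatMap(r -> Arrays.stream(r.value.split(" "))
                        .map(word -> "(" + r.offset + "," + r.partition + "," + word + ")"))
                // map to (key, 1), then reduceByKey(_ + _): sum the 1s per key
                .collect(Collectors.toMap(key -> key, key -> 1, Integer::sum, TreeMap::new));
    }

    public static void main(String[] args) {
        List<Rec> batch = Arrays.asList(new Rec(0L, 0, "value is: 0"), new Rec(1L, 0, "value is: 1"));
        // Print in the ((offset,partition,word),count) shape that DStream.print() uses
        countBatch(batch).forEach((key, count) -> System.out.println("(" + key + "," + count + ")"));
    }
}
```

For word counts across a whole batch, the key would have to be just the word. Offset and partition are then useful mainly for tracing which message a word came from.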

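Production differs in a few ways. First, LoggerGenerator is packaged as a JAR and uploaded to a server to run, while Flume and Kafka are used the same way as in testing. Second, the Spark Streaming code is also packaged as a JAR, uploaded to the server, and run with spark-submit, in whichever mode fits the environment: local, yarn, standalone, or mesos. The overall production flow is roughly the same; the difference lies in the business logic. In this example we only print the data to the console, whereas real production logic is far more complex.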