GlusterFS Cluster Installation

Environment Preparation

System

[root@VM_0_9_centos ~]# uname -a

Linux VM_0_9_centos 3.10.0-957.el7.x86_64 #1 SMP Thu Nov 8 23:39:32 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

[root@VM_0_9_centos ~]# cat /etc/redhat-release

CentOS Linux release 7.6.1810 (Core)

Disk: one 50 GB data disk (/dev/vdb)

[root@slave-09 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sr0 11:0 1 37.7M 0 rom

vda 253:0 0 50G 0 disk

└─vda1 253:1 0 50G 0 part /

vdb 253:16 0 50G 0 disk

├─vdb1 253:17 0 45G 0 part

└─vdb2 253:18 0 5G 0 part

Node information

| IP | Hostname |
|---|---|
| 172.17.0.9 | slave-09 |
| 172.17.0.12 | master-12 |
| 172.17.0.8 | slave-08 |
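The commands later in these notes address the nodes by hostname, so every node has to be able to resolve those names. The notes do not say how that is done; assuming there is no DNS and /etc/hosts is used, a minimal sketch could look like this. The function name `add_cluster_hosts` is hypothetical, and the entries come from the table above.

```shell
# Hypothetical helper: append the three cluster nodes to a hosts file,
# skipping any hostname that is already listed there.
add_cluster_hosts() {
    hosts_file="${1:-/etc/hosts}"
    while read -r ip name; do
        # -w matches the hostname as a whole word, -F as a fixed string
        grep -qwF -- "$name" "$hosts_file" 2>/dev/null \
            || printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    done <<'EOF'
172.17.0.9 slave-09
172.17.0.12 master-12
172.17.0.8 slave-08
EOF
}
```

Running `add_cluster_hosts` as root on each node would then append whichever entries are missing; running it a second time changes nothing.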

Format the disk

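Use XFS as the storage filesystem. Note that -f forces mkfs.xfs to overwrite whatever is already on /dev/vdb, including the vdb1 and vdb2 partitions shown above.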

mkfs.xfs -f -i size=512 /dev/vdb

mkdir -p /data/gcluster

echo '/dev/vdb /data/gcluster xfs defaults 1 2' >> /etc/fstab

mount -a && mount

[root@master-12 ~]# mkfs.xfs -f -i size=512 /dev/vdb

meta-data=/dev/vdb isize=512 agcount=4, agsize=3276800 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=13107200, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=6400, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@slave-09 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sr0 11:0 1 37.7M 0 rom

vda 253:0 0 50G 0 disk

└─vda1 253:1 0 50G 0 part /

vdb 253:16 0 50G 0 disk /data/gcluster
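One caveat with the `echo ... >> /etc/fstab` step above: running it twice leaves a duplicate line in /etc/fstab. A small guard, sketched here with a hypothetical function name `ensure_fstab_entry`, appends the entry only when that exact line is not already present.

```shell
# Hypothetical helper: add the brick mount to fstab exactly once.
ensure_fstab_entry() {
    fstab="${1:-/etc/fstab}"
    entry='/dev/vdb /data/gcluster xfs defaults 1 2'
    # -x matches the whole line, -F treats the entry as a fixed string
    grep -qxF -- "$entry" "$fstab" 2>/dev/null || echo "$entry" >> "$fstab"
}
```

Calling `ensure_fstab_entry` followed by `mount -a` would then be safe to repeat on a node that was already set up.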

Install GlusterFS

yum install -y centos-release-gluster

yum install -y glusterfs glusterfs-server glusterfs-fuse glusterfs-rdma

[root@slave-09 ~]# systemctl start glusterd.service

[root@slave-09 ~]# systemctl status glusterd.service

Join the cluster

[root@master-12 ~]# gluster peer probe master-12

peer probe: success. Probe on localhost not needed

[root@master-12 ~]#

[root@master-12 ~]# gluster peer probe slave-09

peer probe: success.

[root@master-12 ~]# gluster peer probe slave-08

Check the cluster status

[root@master-12 ~]# gluster peer status

Number of Peers: 2

Hostname: slave-09

Uuid: 28815fe9-ce7f-4ed3-ae94-ef7d032b6854

State: Peer in Cluster (Connected)

Hostname: slave-08

Uuid: ef857dbe-7801-4f26-aa0e-95b8f98e1c64

State: Peer in Cluster (Connected)
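For a scripted check, the peer status output can be reduced to a single number. The sketch below assumes the output format shown above; the function name `count_connected_peers` is hypothetical. It reads the output on stdin and counts the peers whose state line ends in "(Connected)".

```shell
# Hypothetical helper: read `gluster peer status` output on stdin and
# print how many peers report "(Connected)" in their State line.
count_connected_peers() {
    grep -c '^State: .*(Connected)'
}
```

On this three-node cluster, `gluster peer status | count_connected_peers` run on any node should print 2 when both of the other nodes are reachable.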

Create the Gluster volume

Create the volume (brick) directory on every node

mkdir -p /data/gcluster/data

gluster volume create gv0 replica 3 master-12:/data/gcluster/data slave-09:/data/gcluster/data slave-08:/data/gcluster/data

[root@master-12 ~]# gluster volume create gv0 replica 3 master-12:/data/gcluster/data slave-09:/data/gcluster/data slave-08:/data/gcluster/data

volume create: gv0: success: please start the volume to access data

[root@master-12 ~]# gluster volume start gv0

volume start: gv0: success

[root@master-12 ~]# gluster volume info

Volume Name: gv0

Type: Replicate

Volume ID: e5dcd35f-94af-4a6d-a8ad-feb1b5a4278d

Status: Started

Snapshot Count: 0

Number of Bricks: 1 x 3 = 3

Transport-type: tcp

Bricks:

Brick1: master-12:/data/gcluster/data

Brick2: slave-09:/data/gcluster/data

Brick3: slave-08:/data/gcluster/data

Options Reconfigured:

transport.address-family: inet

nfs.disable: on

performance.client-io-threads: off

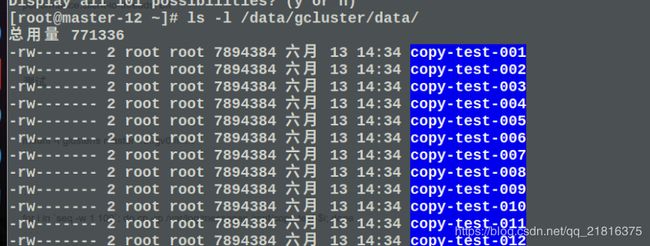
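The `gluster volume info` output is a list of "Key: Value" lines, so a single field can be pulled out for use in a script. This is only a sketch based on the output shown above; the function name `volume_info_field` is hypothetical.

```shell
# Hypothetical helper: read `gluster volume info` output on stdin and
# print the value of the first line whose key equals the argument.
volume_info_field() {
    awk -F': ' -v key="$1" '$1 == key { print $2; exit }'
}
```

For example, `gluster volume info gv0 | volume_info_field Status` should print `Started` once the volume has been started.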

Test

mount -t glusterfs master-12:/gv0 /mnt

for i in `seq -w 1 100`; do cp -rp /var/log/messages /mnt/copy-test-$i; done
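To confirm the result of the copy loop, count the test files on the client mount and then in the brick directory on each node. Since gv0 is a replica 3 volume, every brick should hold all 100 files. The function name `count_test_copies` below is hypothetical; it is a sketch, not part of the Gluster tooling.

```shell
# Hypothetical helper: count the copy-test-* files directly inside a
# directory (defaults to the client mount point /mnt).
count_test_copies() {
    dir="${1:-/mnt}"
    find "$dir" -maxdepth 1 -name 'copy-test-*' | wc -l
}
```

`count_test_copies /mnt` on the client and `count_test_copies /data/gcluster/data` on each of the three nodes should all report 100.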

References

https://docs.gluster.org/en/latest/Quick-Start-Guide/Quickstart/

http://mirror.centos.org/centos/7/storage/x86_64/gluster-3.12/

https://www.cnblogs.com/jicki/p/5801712.html