Word Segmentation and an Inverted Index with MapReduce (TF-IDF Algorithm)

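Introduction to TF-IDF

TF (term frequency): the number of times a word occurs in a document divided by the total number of words in that document.

IDF (inverse document frequency): log(total number of documents / number of documents that contain the word). A common word such as 的 or 了 appears in almost every document, so its ratio is close to 1 and its IDF is close to log(1) = 0, which keeps such words from scoring high. The logarithm also compresses the large ratios of rare words.

TF-IDF = TF × IDF measures how important a word is to a document within the index (the document collection). The code below calls these quantities IF and DF, as in IFWordCountJob, DFJob and IFDFJob.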
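To make the definitions concrete, here is a minimal Java sketch of the three quantities. It uses the natural logarithm (Math.log), as DFReducer does later in this article. The class and method names are hypothetical and only serve as an illustration.

```java
/**
 * Hypothetical helper that spells out the TF, IDF and TF-IDF formulas described above.
 */
final class TfIdfFormulas {

    private TfIdfFormulas() {
    }

    /** TF: occurrences of the word in the document divided by the number of words in the document. */
    static double tf(int occurrencesInDocument, int wordsInDocument) {
        return (double) occurrencesInDocument / wordsInDocument;
    }

    /** IDF: natural logarithm of (total documents / documents that contain the word). */
    static double idf(long totalDocuments, long documentsWithWord) {
        return Math.log((double) totalDocuments / documentsWithWord);
    }

    /** TF-IDF: the product of the two factors. */
    static double tfIdf(double tf, double idf) {
        return tf * idf;
    }

    public static void main(String[] args) {
        // A word that appears in every document: IDF = ln(1) = 0, so its score is 0 whatever its TF.
        System.out.println(tfIdf(tf(3, 10), idf(1000, 1000))); // 0.0
        // One occurrence in a 22-word document, combined with the IDF value from the MR3 sample output.
        System.out.println(tfIdf(tf(1, 22), 9.575816471867984));
    }
}
```

The second line should come out at about 0.43526. That matches the first row of the MR3 sample output, where TF is 1/22.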

Input file

Each line is one document: an id, a tab character, then the text (id \t text). For example:

3823891101582094 我爱中国

3823891201582094 北京天安门广场现在有升国旗

3823891301582094 武汉天气好热

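Approach

- The first MR segments every line of the input into words; each line is one document (comparable to a document in Elasticsearch). It counts how often each word occurs in each document and how many words each document contains. It also records the total number of documents and, for every word, the number of documents that contain it. Its outputs are:

  part-r-00000, part-r-00001 (id, word, occurrences of the word in the document, total words in the document):
  3823891101582094 我 1 4
  3823890210294392 爱 1 4

  part-r-00002 (word, number of documents containing the word):
  0 6
  0.03 1

  part-r-00003 (number of documents):
  123564

- The second MR takes the previous MR's output as input and computes the IDF. It reads part-r-00002 and uses part-r-00003 as a cache file.

  part-r-00002:
  0 6
  0.03 1

  part-r-00003 (cache file):
  123564

  Output (word, number of documents containing the word, IDF):
  0 66 274.0
  0.03 11 1645.0
  0.03元 11 1645.0

- Finally, building on MR1 and MR2, the third MR combines the TF from MR1 with the IDF from MR2 to compute each word's final score.

  Output (id, word, TF, IDF, TF-IDF):
  3823953501983192 0.88斤 0.045454545454545456 9.575816471867984 0.4352643850849084
  3823926323040930 0.9斤 0.05263157894736842 9.575816471867984 0.5039903406246308

Implementation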
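Before the Hadoop code, the three stages can be simulated in memory to check the arithmetic. This sketch rests on several simplifying assumptions:

- It splits text on whitespace instead of using IKAnalyzer.
- It runs in a single JVM.
- It assumes document ids are unique.
- The class and method names are hypothetical.

Stage 1 produces the per-document counts, the document frequency of each word and the document total. Stage 2 turns document frequencies into IDF. Stage 3 joins the two on the word and multiplies.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/**
 * Hypothetical in-memory simulation of the MR1 -> MR2 -> MR3 pipeline (no Hadoop involved).
 * Words are separated by whitespace here, standing in for IKAnalyzer segmentation.
 */
final class TfIdfPipelineSketch {

    /** One row of the final output: id, word, TF, IDF, TF-IDF. */
    static final class Score {
        final String id;
        final String word;
        final double tf;
        final double idf;
        final double tfIdf;

        Score(String id, String word, double tf, double idf) {
            this.id = id;
            this.word = word;
            this.tf = tf;
            this.idf = idf;
            this.tfIdf = tf * idf;
        }

        @Override
        public String toString() {
            return id + "\t" + word + "\t" + tf + "\t" + idf + "\t" + tfIdf;
        }
    }

    /** Each input line is "id \t text"; document ids are assumed to be unique. */
    static List<Score> run(List<String> lines) {
        // Stage 1 (like MR1): word counts per document, document lengths, document frequencies, document total.
        Map<String, Map<String, Integer>> countsPerDocument = new TreeMap<>();
        Map<String, Integer> wordsPerDocument = new HashMap<>();
        Map<String, Long> documentsWithWord = new HashMap<>();
        long totalDocuments = 0;
        for (String line : lines) {
            String[] parts = line.split("\t", 2);
            if (parts.length < 2 || parts[1].trim().isEmpty()) {
                continue; // skip malformed or empty documents
            }
            String[] words = parts[1].trim().split("\\s+");
            totalDocuments++;
            Map<String, Integer> counts = new TreeMap<>();
            for (String word : words) {
                counts.merge(word, 1, Integer::sum);
            }
            countsPerDocument.put(parts[0], counts);
            wordsPerDocument.put(parts[0], words.length);
            for (String word : new LinkedHashSet<>(Arrays.asList(words))) {
                documentsWithWord.merge(word, 1L, Long::sum); // once per document, not per occurrence
            }
        }

        // Stage 2 (like MR2): IDF = ln(total documents / documents containing the word).
        Map<String, Double> idf = new HashMap<>();
        for (Map.Entry<String, Long> e : documentsWithWord.entrySet()) {
            idf.put(e.getKey(), Math.log((double) totalDocuments / e.getValue()));
        }

        // Stage 3 (like MR3): join TF and IDF on the word and multiply.
        List<Score> scores = new ArrayList<>();
        for (Map.Entry<String, Map<String, Integer>> doc : countsPerDocument.entrySet()) {
            int length = wordsPerDocument.get(doc.getKey());
            for (Map.Entry<String, Integer> wc : doc.getValue().entrySet()) {
                double tf = (double) wc.getValue() / length;
                scores.add(new Score(doc.getKey(), wc.getKey(), tf, idf.get(wc.getKey())));
            }
        }
        return scores;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("1\ti love china", "2\ti love wuhan", "3\twuhan hot hot");
        for (Score score : run(lines)) {
            System.out.println(score);
        }
    }
}
```

In the sample, "hot" occurs twice in document 3 but in only one document. Its IDF is therefore ln(3), and its TF is 2/3.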

MR1

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**
 * MR1: segments every document into words and counts term occurrences,
 * document lengths, document frequencies and the number of documents.
 *
 * @author yzz
 * @time 2019/6/1 14:03
 * @since 0.0.1
 */
public class IFWordCountJob extends Base {

    public static void client() throws Exception {
        Configuration conf = new Configuration();
        conf.set("mapreduce.app-submission.cross-platform", "true");
        conf.set("mapreduce.framework.name", "local");
        Job job = Job.getInstance(conf);
        job.setJobName("IFWordCountJob");
        job.setJarByClass(IFWordCountJob.class);

        Path in = new Path(getConfig(conf, "search.in"));
        Path out = new Path(getConfig(conf, "search.out"));
        clean(out, conf);
        addInPath(job, conf, in);
        FileOutputFormat.setOutputPath(job, out);

        // map side: 4 reduce tasks (0-1: TF records, 2: document frequencies, 3: document count)
        job.setPartitionerClass(IFWordCountPartitioner.class);
        job.setNumReduceTasks(4);
        job.setMapperClass(IFWordCountMapper.class);
        job.setMapOutputKeyClass(IFWordCountVO.class);
        job.setMapOutputValueClass(NullWritable.class);
        // the reducer only sums counts, so it can also serve as the combiner
        job.setCombinerClass(IFWordCountReducer.class);

        // reduce side
        job.setReducerClass(IFWordCountReducer.class);
        job.setOutputKeyClass(IFWordCountVO.class);
        job.setOutputValueClass(NullWritable.class);

        // submit and wait
        boolean complete = job.waitForCompletion(true);

        Path documentCount = new Path(getConfig(conf, "df.document.count"));
        Path wordCountInAllFile = new Path(getConfig(conf, "df.temp.in"), "WordFileCountInIndex.txt");
        if (complete) {
            // add this batch's document count to the running total
            readAndWrite(new Path(out, "part-r-00003"), documentCount, conf);
            // keep this batch's TF records; the job id prefix stops batches from overwriting each other
            copyFile(new Path(out, "part-r-00000"), new Path(getConfig(conf, "search.if"), job.getJobID() + "_part-r-00000"), conf);
            copyFile(new Path(out, "part-r-00001"), new Path(getConfig(conf, "search.if"), job.getJobID() + "_part-r-00001"), conf);
            // hand this batch's document frequencies to MR2
            copyFile(new Path(out, "part-r-00002"), wordCountInAllFile, conf);
        }
    }
}

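import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;
import org.wltea.analyzer.core.IKSegmenter;
import org.wltea.analyzer.core.Lexeme;

/**
 * @author yzz
 * @time 2019/6/1 14:22
 * @since 0.0.1
 */
public class IFWordCountMapper extends Mapper<LongWritable, Text, IFWordCountVO, NullWritable> {

    // reused for every record; Hadoop serializes the key on each write
    private final IFWordCountVO wordCountVO = new IFWordCountVO();

    /**
     * Input line example: 3823890264861035 \t 我约了吃饭哦
     */
    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // split "id \t text"
        String[] split = value.toString().split("\t", 2);
        if (split.length < 2) {
            return; // skip malformed lines
        }
        // type 2: count this document
        documentStatics(context);
        String id = split[0];
        String content = split[1];

        // segment the text with IKAnalyzer in smart mode
        IKSegmenter ikSegmenter = new IKSegmenter(new StringReader(content), true);
        List<String> words = new ArrayList<>();
        Lexeme lexeme;
        while ((lexeme = ikSegmenter.next()) != null) {
            words.add(lexeme.getLexemeText());
        }

        // type 3: the word occurs in this document; emit once per distinct word so the sum is a document count
        for (String word : new LinkedHashSet<>(words)) {
            wordCountVO.setId("");
            wordCountVO.setWord(word);
            wordCountVO.setCount(1);
            wordCountVO.setWordCount(0);
            wordCountVO.setType(3);
            context.write(wordCountVO, NullWritable.get());
        }

        // type 1: one occurrence of the word in this document, plus the document's total word count
        for (String word : words) {
            wordCountVO.setId(id);
            wordCountVO.setWord(word);
            wordCountVO.setCount(1);
            wordCountVO.setWordCount(words.size());
            wordCountVO.setType(1);
            context.write(wordCountVO, NullWritable.get());
        }
    }

    /** Emits a type-2 record that counts one document. */
    public void documentStatics(Context context) throws IOException, InterruptedException {
        wordCountVO.setWord("");
        wordCountVO.setId("");
        wordCountVO.setCount(1);
        wordCountVO.setWordCount(0);
        wordCountVO.setType(2);
        context.write(wordCountVO, NullWritable.get());
    }
}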
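To see what the mapper sends to the shuffle, the following Hadoop-free sketch reproduces its emission pattern for one line. It makes these assumptions:

- Words are split on whitespace instead of by IKSegmenter.
- Each record is a plain string: the type, a colon, then the text IFWordCountVO.toString() would print.
- MapperEmissionSketch and emit are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashSet;
import java.util.List;

/**
 * Hypothetical, Hadoop-free sketch of the records IFWordCountMapper emits for one input line.
 */
final class MapperEmissionSketch {

    static List<String> emit(String line) {
        List<String> records = new ArrayList<>();
        String[] parts = line.split("\t", 2);
        if (parts.length < 2 || parts[1].trim().isEmpty()) {
            return records; // malformed or empty line: nothing is emitted in this sketch
        }
        String id = parts[0];
        List<String> words = Arrays.asList(parts[1].trim().split("\\s+")); // stand-in for IKSegmenter

        records.add("2:1"); // type 2: one more document
        for (String word : new LinkedHashSet<>(words)) {
            records.add("3:" + word + "\t1"); // type 3: word occurs in this document, once per distinct word
        }
        for (String word : words) {
            records.add("1:" + id + "\t" + word + "\t1\t" + words.size()); // type 1: one occurrence + document length
        }
        return records;
    }

    public static void main(String[] args) {
        emit("42\tweather hot hot").forEach(System.out::println);
    }
}
```

For "42\tweather hot hot" this gives six records:

- one document record;
- two document-frequency records, for "weather" and "hot";
- three TF records, one per occurrence.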

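import java.io.IOException;

import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Reducer;

/**
 * Sums the counts of identical keys. Also used as the combiner.
 *
 * @author yzz
 * @time 2019/6/1 14:23
 * @since 0.0.1
 */
public class IFWordCountReducer extends Reducer<IFWordCountVO, NullWritable, IFWordCountVO, NullWritable> {

    /**
     * Example input for one key:
     * 2222 q 1
     * 2222 q 1
     * 2222 q 1
     * Output: 2222 q 3
     */
    @Override
    protected void reduce(IFWordCountVO key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {
        int sum = 0;
        // Hadoop refills the key object as the iterator advances, so key.getCount() returns the
        // count of the current record (1 from the mapper, or a partial sum from the combiner)
        for (NullWritable ignored : values) {
            sum += key.getCount();
        }
        key.setCount(sum);
        context.write(key, NullWritable.get());
    }
}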

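import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.lib.partition.HashPartitioner;

/**
 * Routes type-2 records to reducer 3, type-3 records to reducer 2 and hashes
 * type-1 records by document id over the remaining reducers. Assumes 4 reduce tasks.
 *
 * @author yzz
 * @time 2019/6/1 14:13
 * @since 0.0.1
 */
public class IFWordCountPartitioner extends HashPartitioner<IFWordCountVO, NullWritable> {

    @Override
    public int getPartition(IFWordCountVO key, NullWritable value, int numReduceTasks) {
        if (key.getType() == 2) {
            return 3; // document count -> part-r-00003
        }
        if (key.getType() == 3) {
            return 2; // document frequency -> part-r-00002
        }
        // TF records -> part-r-00000 / part-r-00001
        return (key.getId().hashCode() & Integer.MAX_VALUE) % (numReduceTasks - 2);
    }
}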
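The partitioner is what determines the file layout described in the approach. With four reduce tasks:

- type-2 records go to partition 3 (part-r-00003);
- type-3 records go to partition 2 (part-r-00002);
- type-1 records are spread over partitions 0 and 1 (part-r-00000 and part-r-00001) by hashing the document id.

A plain-Java copy of the same routing rule (the class name is hypothetical):

```java
/**
 * Hypothetical stand-alone copy of the routing rule in IFWordCountPartitioner (assumes 4 reduce tasks).
 */
final class PartitionRoutingSketch {

    static int partitionFor(int type, String id, int numReduceTasks) {
        if (type == 2) {
            return 3; // document count -> part-r-00003
        }
        if (type == 3) {
            return 2; // document frequency -> part-r-00002
        }
        // type 1: non-negative hash of the document id over partitions 0 .. numReduceTasks - 3
        return (id.hashCode() & Integer.MAX_VALUE) % (numReduceTasks - 2);
    }

    public static void main(String[] args) {
        String[] ids = {"3823891101582094", "3823891201582094", "3823891301582094"};
        for (String id : ids) {
            System.out.println(id + " -> part-r-0000" + partitionFor(1, id, 4));
        }
    }
}
```

Partitions 2 and 3 are hard-coded, so the rule only works with exactly four reduce tasks, which setNumReduceTasks(4) in IFWordCountJob guarantees. With fewer tasks, Hadoop would reject the out-of-range partition number.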

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.WritableComparable;

/**
 * @author yzz
 * @time 2019/6/1 14:14
 * @since 0.0.1
 */
public class IFWordCountVO implements WritableComparable<IFWordCountVO> {

    private String id = "";
    private String word = "";
    private int count = 1;
    private int wordCount = 0;
    private int type = 1; // 1: word in a document (TF), 2: document count, 3: number of documents containing the word

    @Override
    public void write(DataOutput out) throws IOException {
        out.writeUTF(id);
        out.writeUTF(word);
        out.writeInt(count);
        out.writeInt(type);
        out.writeInt(wordCount);
    }

    @Override
    public void readFields(DataInput in) throws IOException {
        id = in.readUTF();
        word = in.readUTF();
        count = in.readInt();
        type = in.readInt();
        wordCount = in.readInt();
    }

    /** Sort and group by document id, then by word. */
    @Override
    public int compareTo(IFWordCountVO o) {
        int c = id.compareTo(o.getId());
        if (c == 0) {
            return word.compareTo(o.getWord());
        }
        return c;
    }

    public String getId() { return id; }
    public void setId(String id) { this.id = id; }
    public String getWord() { return word; }
    public void setWord(String word) { this.word = word; }
    public int getCount() { return count; }
    public void setCount(int count) { this.count = count; }
    public int getType() { return type; }
    public void setType(int type) { this.type = type; }
    public int getWordCount() { return wordCount; }
    public void setWordCount(int wordCount) { this.wordCount = wordCount; }

    /** The output line format depends on the record type. */
    @Override
    public String toString() {
        if (type == 2) {
            return String.valueOf(count); // part-r-00003: number of documents
        }
        if (type == 3) {
            return word + '\t' + count; // part-r-00002: word, documents containing it
        }
        return id + '\t' + word + '\t' + count + '\t' + wordCount; // id, word, occurrences, words in document
    }
}

MR2

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**
 * MR2: merges the document frequencies per word and computes the IDF.
 *
 * @author yzz
 * @time 2019/6/1 18:02
 * @since 0.0.1
 */
public class DFJob extends Base {

    /**
     * @throws Exception if the configuration cannot be read or the job fails
     */
    public static void client() throws Exception {
        Configuration conf = new Configuration(true);
        conf.set("mapreduce.app-submission.cross-platform", "true");
        conf.set("mapreduce.framework.name", "local");
        Job job = Job.getInstance(conf);
        job.setJobName("DFJob");
        job.setJarByClass(DFJob.class);
        // the total document count is shipped to the reducer as a cache file
        job.addCacheFile(new Path(getConfig(conf, "df.document.count")).toUri());

        Path in = new Path(getConfig(conf, "df.temp.in"));
        Path out = new Path(getConfig(conf, "df.temp.out"));
        clean(out, conf);
        addInPath(job, conf, in);
        FileOutputFormat.setOutputPath(job, out);

        // map
        job.setMapperClass(DFMapper.class);
        job.setMapOutputKeyClass(DFVO.class);
        job.setMapOutputValueClass(NullWritable.class);

        // reduce: a single reducer, so all IDF values end up in one file
        job.setReducerClass(DFReducer.class);
        job.setOutputKeyClass(DFVO.class);
        job.setOutputValueClass(NullWritable.class);
        job.setNumReduceTasks(1);

        if (!job.waitForCompletion(true)) {
            throw new IllegalStateException("DFJob failed");
        }
    }
}

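import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

/**
 * Input lines are "word \t count" or "word \t count \t idf"; only the first two columns are used.
 *
 * @author yzz
 * @time 2019/6/1 21:13
 * @since 0.0.1
 */
public class DFMapper extends Mapper<LongWritable, Text, DFVO, NullWritable> {

    private final DFVO dfvo = new DFVO();

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        String[] split = value.toString().split("\t");
        if (split.length < 2) {
            return; // skip malformed lines
        }
        dfvo.setWord(split[0]);
        dfvo.setDocumentCountInIndex(Long.parseLong(split[1].trim()));
        context.write(dfvo, NullWritable.get());
    }
}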

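import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.URI;
import java.nio.charset.StandardCharsets;

import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Reducer;

/**
 * @author yzz
 * @time 2019/6/1 21:16
 * @since 0.0.1
 */
public class DFReducer extends Reducer<DFVO, NullWritable, DFVO, NullWritable> {

    // total number of documents in the index, read from the cache file
    private long documentCount;

    @Override
    protected void setup(Context context) throws IOException, InterruptedException {
        URI[] cacheFiles = context.getCacheFiles();
        Path path = new Path(cacheFiles[0]);
        FileSystem fileSystem = path.getFileSystem(context.getConfiguration());
        // try-with-resources closes the stream even if parsing fails
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(fileSystem.open(path), StandardCharsets.UTF_8))) {
            documentCount = Long.parseLong(reader.readLine().trim());
        }
    }

    @Override
    protected void reduce(DFVO key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {
        long num = 0;
        // the key is refilled for every value, so this sums the counts of all records for the word
        for (NullWritable ignored : values) {
            num += key.getDocumentCountInIndex();
        }
        key.setDocumentCountInIndex(num);
        // IDF = ln(total documents / documents containing the word)
        key.setDf(Math.log((double) documentCount / num));
        context.write(key, NullWritable.get());
    }
}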

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.WritableComparable;

/**
 * @author yzz
 * @time 2019/6/1 19:40
 * @since 0.0.1
 */
public class DFVO implements WritableComparable<DFVO> {

    private String word = "";
    private long documentCountInIndex; // number of documents containing the word
    private double df;                 // IDF value of the word

    @Override
    public int compareTo(DFVO o) {
        return word.compareTo(o.getWord());
    }

    @Override
    public void write(DataOutput out) throws IOException {
        out.writeUTF(word);
        out.writeLong(documentCountInIndex);
        out.writeDouble(df);
    }

    @Override
    public void readFields(DataInput in) throws IOException {
        word = in.readUTF();
        documentCountInIndex = in.readLong();
        df = in.readDouble(); // must mirror writeDouble in write()
    }

    public String getWord() { return word; }
    public void setWord(String word) { this.word = word; }
    public long getDocumentCountInIndex() { return documentCountInIndex; }
    public void setDocumentCountInIndex(long documentCountInIndex) { this.documentCountInIndex = documentCountInIndex; }
    public double getDf() { return df; }
    public void setDf(double df) { this.df = df; }

    /** Output line: word, documents containing it, IDF. */
    @Override
    public String toString() {
        return word + '\t' + documentCountInIndex + '\t' + df;
    }
}
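IFDFJob calls reWriteConfig after each run, which swaps df.temp.in and df.temp.out. The next batch's DF input directory therefore contains two kinds of file:

- the previous DFJob output, with lines of word, document count, IDF;
- the new WordFileCountInIndex.txt copied from part-r-00002, with lines of word, document count.

DFMapper only reads the first two columns, so DFReducer adds the old and new document counts per word. Meanwhile readAndWrite keeps the total document count up to date in the same incremental way. Below is a minimal plain-Java sketch of that merge; the class name, the sample lines and the document total are made up for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/**
 * Hypothetical sketch of what DFMapper + DFReducer compute: per-word document counts are summed
 * across "word\tcount" lines (new batch) and "word\tcount\tidf" lines (previous DF output),
 * then turned into IDF = ln(total documents / documents containing the word).
 */
final class DfMergeSketch {

    static Map<String, Double> idf(List<String> lines, long totalDocuments) {
        Map<String, Long> documentsWithWord = new TreeMap<>();
        for (String line : lines) {
            String[] cols = line.split("\t");
            if (cols.length < 2) {
                continue; // skip malformed lines
            }
            // only the first two columns matter; an old IDF in the third column is ignored
            documentsWithWord.merge(cols[0], Long.parseLong(cols[1].trim()), Long::sum);
        }
        Map<String, Double> result = new TreeMap<>();
        documentsWithWord.forEach((word, df) -> result.put(word, Math.log((double) totalDocuments / df)));
        return result;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "hot\t2\t0.6931471805599453", // previous run: word, document count, old IDF
                "hot\t1",                     // new batch: word, document count
                "wuhan\t1");
        System.out.println(idf(lines, 6)); // 6 = hypothetical running document total
    }
}
```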

MR3

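import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

/**
 * MR3: joins the TF records with the IDF of each word and computes TF-IDF.
 *
 * @author yzz
 * @time 2019/6/1 14:03
 * @since 0.0.1
 */
public class IFDFJob extends Base {

    public static void client() throws Exception {
        Configuration conf = new Configuration();
        conf.set("mapreduce.app-submission.cross-platform", "true");
        conf.set("mapreduce.framework.name", "local");
        Job job = Job.getInstance(conf);
        job.setJobName("IFDFJob");
        job.setJarByClass(IFDFJob.class);

        Path tfIn = new Path(getConfig(conf, "search.if"));     // TF records of all batches
        Path idfIn = new Path(getConfig(conf, "df.temp.out"));  // IDF output of MR2
        Path out = new Path("/search/end");
        clean(out, conf);
        FileInputFormat.addInputPath(job, tfIn);
        FileInputFormat.addInputPath(job, idfIn);
        FileOutputFormat.setOutputPath(job, out);

        // map
        job.setMapperClass(IFDFMapper.class);
        job.setMapOutputKeyClass(IFDFVO.class);
        job.setMapOutputValueClass(NullWritable.class);

        // reduce: group by word only, so one reduce call sees the IDF record followed by all TF records of that word
        job.setGroupingComparatorClass(IFDFGroupingComparator.class);
        job.setReducerClass(IFDFReducer.class);
        job.setOutputKeyClass(IFDFVO.class);
        job.setOutputValueClass(NullWritable.class);

        // submit
        if (job.waitForCompletion(true)) {
            // swap df.temp.in and df.temp.out so the next batch merges with this run's IDF output
            reWriteConfig(conf, getConfigMap(conf));
        }
    }
}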

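import java.io.IOException;

import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

/**
 * @author yzz
 * @time 2019/6/1 21:13
 * @since 0.0.1
 */
public class IFDFMapper extends Mapper<LongWritable, Text, IFDFVO, NullWritable> {

    private final IFDFVO ifdfvo = new IFDFVO();

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        String[] split = value.toString().split("\t");
        if (split.length > 3) {
            // TF record from MR1: id, word, occurrences, words in document
            ifdfvo.setId(split[0]);
            ifdfvo.setWord(split[1]);
            int occurrences = Integer.parseInt(split[2]);
            int wordsInDocument = Integer.parseInt(split[3]);
            ifdfvo.setIfc((double) occurrences / wordsInDocument);
            ifdfvo.setDfc(0.0);
            ifdfvo.setType(1);
        } else if (split.length == 3) {
            // IDF record from MR2: word, documents containing it, IDF
            ifdfvo.setId("");
            ifdfvo.setWord(split[0]);
            ifdfvo.setIfc(0.0);
            ifdfvo.setDfc(Double.parseDouble(split[2]));
            ifdfvo.setType(2);
        } else {
            return; // skip malformed lines
        }
        ifdfvo.setIfdf(0.0);
        context.write(ifdfvo, NullWritable.get());
    }
}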

import java.io.IOException;

import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.mapreduce.Reducer;

/**
 * @author yzz
 * @time 2019/6/1 21:16
 * @since 0.0.1
 */
public class IFDFReducer extends Reducer<IFDFVO, NullWritable, IFDFVO, NullWritable> {

    @Override
    protected void reduce(IFDFVO key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException {
        // IFDFVO.compareTo sorts the IDF record (type 2) of a word before its TF records (type 1)
        double dfc = 0.0;
        for (NullWritable ignored : values) {
            // the key object is refilled with the current record on every iteration
            if (key.getType() == 1) {
                key.setDfc(dfc);
                key.setIfdf(key.getIfc() * dfc);
                context.write(key, NullWritable.get());
            } else {
                dfc = key.getDfc();
            }
        }
    }
}

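import org.apache.hadoop.io.WritableComparable;
import org.apache.hadoop.io.WritableComparator;

/**
 * Groups reduce input by word only, ignoring the type that is used for sorting.
 *
 * @author yzz
 * @time 2019/6/2 13:48
 * @since 0.0.1
 */
public class IFDFGroupingComparator extends WritableComparator {

    public IFDFGroupingComparator() {
        super(IFDFVO.class, true); // true: create key instances so compare() receives deserialized objects
    }

    @SuppressWarnings("rawtypes")
    @Override
    public int compare(WritableComparable a, WritableComparable b) {
        IFDFVO ifdfvo1 = (IFDFVO) a;
        IFDFVO ifdfvo2 = (IFDFVO) b;
        return ifdfvo1.getWord().compareTo(ifdfvo2.getWord());
    }
}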

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.WritableComparable;

/**
 * @author yzz
 * @time 2019/6/2 1:50
 * @since 0.0.1
 */
public class IFDFVO implements WritableComparable<IFDFVO> {

    private String id = "";
    private String word = "";
    private double ifc;  // TF
    private double dfc;  // IDF
    private double ifdf; // TF-IDF
    private int type;    // 1: TF record, 2: IDF record

    /** Sort by word, then by type descending so the IDF record (type 2) comes first. */
    @Override
    public int compareTo(IFDFVO o) {
        int c1 = word.compareTo(o.getWord());
        if (c1 == 0) {
            return Integer.compare(o.getType(), type);
        }
        return c1;
    }

    @Override
    public void write(DataOutput out) throws IOException {
        out.writeUTF(id);
        out.writeUTF(word);
        out.writeDouble(ifdf);
        out.writeInt(type);
        out.writeDouble(ifc);
        out.writeDouble(dfc);
    }

    @Override
    public void readFields(DataInput in) throws IOException {
        id = in.readUTF();
        word = in.readUTF();
        ifdf = in.readDouble();
        type = in.readInt();
        ifc = in.readDouble();
        dfc = in.readDouble();
    }

    public String getWord() { return word; }
    public void setWord(String word) { this.word = word; }
    public double getIfdf() { return ifdf; }
    public void setIfdf(double ifdf) { this.ifdf = ifdf; }
    public int getType() { return type; }
    public void setType(int type) { this.type = type; }
    public String getId() { return id; }
    public void setId(String id) { this.id = id; }
    public double getIfc() { return ifc; }
    public void setIfc(double ifc) { this.ifc = ifc; }
    public double getDfc() { return dfc; }
    public void setDfc(double dfc) { this.dfc = dfc; }

    /** Output line: id, word, TF, IDF, TF-IDF. */
    @Override
    public String toString() {
        return id + '\t' + word + '\t' + ifc + '\t' + dfc + '\t' + ifdf;
    }
}
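MR3 is a reduce-side join with a secondary sort. IFDFVO.compareTo orders keys by word and then by type in descending order, so a word's single IDF record (type 2) reaches the reducer before its TF records (type 1). IFDFGroupingComparator then puts all of them into one reduce call. The plain-Java sketch below imitates sort, grouping and reduce with collections. The Rec class is a hypothetical simplification of IFDFVO, and Java's stable sort stands in for Hadoop's shuffle.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

/**
 * Hypothetical, Hadoop-free sketch of MR3's sort, grouping and reduce steps.
 */
final class SecondarySortJoinSketch {

    /** Simplified stand-in for IFDFVO: type 1 carries a TF value, type 2 carries an IDF value. */
    static final class Rec {
        final String id;
        final String word;
        final int type;
        final double value;

        Rec(String id, String word, int type, double value) {
            this.id = id;
            this.word = word;
            this.type = type;
            this.value = value;
        }
    }

    static List<String> join(List<Rec> records) {
        // Sort like IFDFVO.compareTo: by word, then by type descending (the IDF record comes first).
        List<Rec> sorted = new ArrayList<>(records);
        sorted.sort(Comparator.comparing((Rec r) -> r.word)
                .thenComparing((a, b) -> Integer.compare(b.type, a.type)));

        List<String> output = new ArrayList<>();
        String currentWord = null;
        double idf = 0.0;
        for (Rec r : sorted) {
            // Group like IFDFGroupingComparator: a new word starts a new reduce call.
            if (!r.word.equals(currentWord)) {
                currentWord = r.word;
                idf = 0.0; // no IDF seen yet for this word
            }
            if (r.type == 2) {
                idf = r.value;
            } else {
                output.add(r.id + "\t" + r.word + "\t" + r.value + "\t" + idf + "\t" + (r.value * idf));
            }
        }
        return output;
    }

    public static void main(String[] args) {
        List<Rec> records = Arrays.asList(
                new Rec("1", "hot", 1, 0.5),
                new Rec("", "hot", 2, 2.0),
                new Rec("2", "hot", 1, 0.25),
                new Rec("2", "wuhan", 1, 0.25),
                new Rec("", "wuhan", 2, 1.0));
        join(records).forEach(System.out::println);
    }
}
```

A word with TF records but no IDF record would score 0. This mirrors the dfc = 0.0 default in IFDFReducer.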

Base

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.Map;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.FileUtil;
import org.apache.hadoop.fs.LocatedFileStatus;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.fs.RemoteIterator;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

/**
 * Shared helpers: the key=value config file and simple file operations. Every stream is closed with try-with-resources.
 *
 * @author yzz
 * @time 2019/6/1 18:53
 * @since 0.0.1
 */
public class Base {

    public static final String CONFIG_PATH = "/search/conf/config.txt";

    /** Deletes the output directory if it already exists. */
    protected static void clean(Path out, Configuration conf) throws IOException {
        FileSystem fileSystem = out.getFileSystem(conf);
        if (fileSystem.exists(out)) {
            fileSystem.delete(out, true);
        }
    }

    /** Reads the whole config file into a map. */
    protected static Map<String, String> getConfigMap(Configuration configuration) throws IOException {
        Path path = new Path(CONFIG_PATH);
        FileSystem fileSystem = path.getFileSystem(configuration);
        Map<String, String> map = new HashMap<>();
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(fileSystem.open(path), StandardCharsets.UTF_8))) {
            String str;
            while ((str = reader.readLine()) != null) {
                String[] split = str.split("=", 2);
                if (split.length == 2) {
                    map.put(split[0].trim(), split[1].trim());
                }
            }
        }
        return map;
    }

    /** Returns the value of one config key, or null if the key is missing. */
    protected static String getConfig(Configuration configuration, String key) throws IOException {
        return getConfigMap(configuration).get(key);
    }

    /**
     * Swaps df.temp.in and df.temp.out and rewrites the config file, e.g.
     * df.temp.in=/search/temp
     * df.temp.out=/search/temp0
     */
    protected static void reWriteConfig(Configuration configuration, Map<String, String> map) throws IOException {
        Path path = new Path(CONFIG_PATH);
        FileSystem fileSystem = path.getFileSystem(configuration);
        String in = map.get("df.temp.in");
        String out = map.get("df.temp.out");
        map.put("df.temp.in", out);
        map.put("df.temp.out", in);
        try (FSDataOutputStream outputStream = fileSystem.create(path, true)) {
            for (Map.Entry<String, String> en : map.entrySet()) {
                outputStream.write((en.getKey() + "=" + en.getValue() + "\n").getBytes(StandardCharsets.UTF_8));
            }
        }
    }

    /** Adds every non-empty file under the path (recursively) as job input. */
    public static void addInPath(Job job, Configuration conf, Path path) throws IOException {
        FileSystem fileSystem = path.getFileSystem(conf);
        RemoteIterator<LocatedFileStatus> files = fileSystem.listFiles(path, true);
        while (files.hasNext()) {
            LocatedFileStatus next = files.next();
            if (next.getLen() > 0) {
                FileInputFormat.addInputPath(job, next.getPath());
            }
        }
    }

    /** Reads the first line of a file as an int; a missing or empty file counts as 0. */
    public static int readInt(FileSystem fileSystem, Path path) throws IOException {
        if (!fileSystem.exists(path)) {
            return 0;
        }
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(fileSystem.open(path), StandardCharsets.UTF_8))) {
            String line = reader.readLine();
            return (line == null || line.trim().isEmpty()) ? 0 : Integer.parseInt(line.trim());
        }
    }

    /** Copies a file, overwriting the target if it exists. */
    public static void copyFile(Path source, Path target, Configuration conf) throws IOException {
        FileSystem fileSystem = source.getFileSystem(conf);
        FileUtil.copy(fileSystem, source, fileSystem, target, false, conf);
    }

    /** Adds the number stored in 'out' to the running total stored in 'target'. */
    public static void readAndWrite(Path out, Path target, Configuration conf) throws IOException {
        FileSystem fileSystem = out.getFileSystem(conf);
        int total = readInt(fileSystem, out) + readInt(fileSystem, target);
        try (FSDataOutputStream outputStream = fileSystem.create(target, true)) {
            outputStream.writeBytes(String.valueOf(total));
        }
    }
}
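Base keeps its settings in a plain key=value text file at /search/conf/config.txt. The article does not show that file. From the keys the jobs read, it must contain at least these keys:

- search.in
- search.out
- search.if
- df.document.count
- df.temp.in
- df.temp.out

The sketch below parses such text and applies the same df.temp.in/df.temp.out swap as reWriteConfig, entirely in memory. The class name is hypothetical. The two sample values are the ones quoted in the reWriteConfig Javadoc.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/**
 * Hypothetical in-memory sketch of the config handling in Base (no HDFS involved).
 */
final class ConfigSwapSketch {

    /** Parses "key=value" lines; blank lines are skipped and only the first '=' separates key and value. */
    static Map<String, String> parse(String content) {
        Map<String, String> map = new LinkedHashMap<>();
        for (String line : content.split("\n")) {
            if (line.trim().isEmpty()) {
                continue;
            }
            String[] kv = line.split("=", 2);
            if (kv.length == 2) {
                map.put(kv[0].trim(), kv[1].trim());
            }
        }
        return map;
    }

    /** Swaps df.temp.in and df.temp.out in place, as reWriteConfig does after MR3, and renders the file text. */
    static String swapAndRender(Map<String, String> map) {
        String in = map.get("df.temp.in");
        map.put("df.temp.in", map.get("df.temp.out"));
        map.put("df.temp.out", in);
        StringBuilder sb = new StringBuilder();
        map.forEach((k, v) -> sb.append(k).append('=').append(v).append('\n'));
        return sb.toString();
    }

    public static void main(String[] args) {
        Map<String, String> map = parse("df.temp.in=/search/temp\ndf.temp.out=/search/temp0\n");
        System.out.print(swapAndRender(map));
    }
}
```

Alternating between two directories lets each run read the previous IDF output from one directory while writing the new one to the other.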

client

/**
 * Runs the three jobs in order: MR1 (TF and counts), MR2 (IDF), MR3 (TF-IDF).
 *
 * @author yzz
 * @time 2019/6/1 15:39
 * @since 0.0.1
 */
public class client {

    public static void main(String[] args) {
        try {
            IFWordCountJob.client();
            DFJob.client();
            IFDFJob.client();
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}

pom

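<dependencies>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>${hadoop.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>${hadoop.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>${hadoop.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-mapreduce-client-core</artifactId>
        <version>${hadoop.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-mapreduce-client-jobclient</artifactId>
        <version>${hadoop.version}</version>
    </dependency>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>4.12</version>
    </dependency>
    <dependency>
        <groupId>com.janeluo</groupId>
        <artifactId>ikanalyzer</artifactId>
        <version>2012_u6</version>
        <scope>compile</scope>
    </dependency>
</dependencies>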

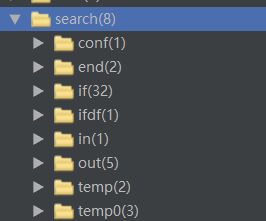

HDFS目录

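Summary

Every stream that is opened must be closed. HDFS protects files whose streams are still open; for example, a file open for writing stays under its writer's lease until the stream is closed or the lease expires. An unclosed stream therefore makes the program fail with errors. Once a batch of documents is uploaded to HDFS, it passes through the three MR jobs. After that, the word scores of all documents in the system are updated.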
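Java's try-with-resources is a simple way to guarantee that every stream is closed, even when an exception is thrown. The sketch below applies it to the read-add-write step that Base.readAndWrite performs on the document count. It uses java.nio.file on the local file system instead of the HDFS FileSystem API, so it runs without a cluster. The class and method names are hypothetical. FSDataInputStream and FSDataOutputStream are also Closeable, so the same pattern carries over to HDFS.

```java
import java.io.BufferedReader;
import java.io.BufferedWriter;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

/**
 * Hypothetical local-file sketch of Base.readAndWrite using try-with-resources.
 */
final class CountAccumulatorSketch {

    /** Reads the first line of a file as a number; a missing or empty file counts as 0. */
    static long readCount(Path file) throws IOException {
        if (!Files.exists(file)) {
            return 0;
        }
        try (BufferedReader reader = Files.newBufferedReader(file, StandardCharsets.UTF_8)) {
            String line = reader.readLine();
            return (line == null || line.trim().isEmpty()) ? 0 : Long.parseLong(line.trim());
        } // the reader is closed here, even if parseLong throws
    }

    /** Adds the batch count to the running total, rewrites the total file and returns the new total. */
    static long accumulate(Path batchCount, Path total) throws IOException {
        long sum = readCount(batchCount) + readCount(total);
        try (BufferedWriter writer = Files.newBufferedWriter(total, StandardCharsets.UTF_8)) {
            writer.write(Long.toString(sum));
        } // the writer is flushed and closed here
        return sum;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("tfidf");
        Path batch = dir.resolve("part-r-00003");
        Path total = dir.resolve("documentCount");
        Files.write(batch, "3".getBytes(StandardCharsets.UTF_8));
        System.out.println(accumulate(batch, total)); // 3
        System.out.println(accumulate(batch, total)); // 6
    }
}
```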