9. Kubernetes persistent storage with PV and PVC (static and dynamic)

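• PersistentVolume (PV): an abstraction over how storage is created and used, so that storage can be managed as a resource of the cluster

PVs are provisioned either statically or dynamically; with dynamic provisioning the PV is created automatically

• PersistentVolumeClaim (PVC): lets users consume storage without caring about the details of the underlying volume implementation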

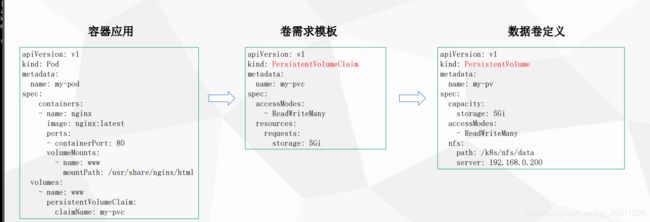

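The figure above shows how containers, PVs, and PVCs relate to one another.

In short, the PV is the provider and the PVC is the consumer; consumption happens through binding.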

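PersistentVolume: static binding

Based on the figure above, this mode can be divided into three parts:

Volume definition: the volumes entry in the Pod spec, which references the PVC

Volume claim template: the PVC

Container application: backed by the PV

1. Configure the volume and the volume claim template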

[root@master volume]# cat pvc-pod.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        # Mount the volume named wwwroot at nginx's html directory
        volumeMounts:
        - name: wwwroot
          mountPath: /usr/share/nginx/html
        ports:
        - containerPort: 80
      # Define a volume named wwwroot, backed by a PVC
      volumes:
      - name: wwwroot
        persistentVolumeClaim:
          claimName: my-pvc
---
# The PVC states the storage request; a PV is matched to it by capacity and access mode
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  # Must match claimName above
  name: my-pvc
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi

Create the Deployment and the PVC:

kubectl apply -f pvc-pod.yaml

2. Define the volume (PV)

We use NFS as the backend storage.

[root@master volume]# cat pv-pod.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: my-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  nfs:
    path: /opt/container_data
    server: 192.168.1.39

Create the PV:

kubectl apply -f pv-pod.yaml

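At this point the PVC, which stays Pending until a suitable PV exists, has been bound automatically to the PV we just created. The match is made on requested capacity, access modes, and storage class: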

[root@master volume]# kubectl get pvc,pv

NAME                           STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/my-pvc   Bound    my-pv    5Gi        RWX                           11m

NAME                     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM            STORAGECLASS   REASON   AGE
persistentvolume/my-pv   5Gi        RWX            Retain           Bound    default/my-pvc

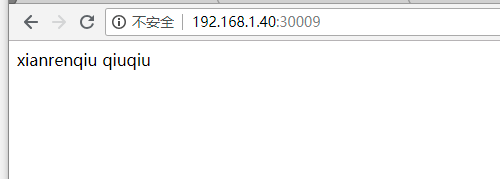
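
The binding here relies on the control plane picking any PV that satisfies the claim. Where one specific PV should go to one specific claim, or where the cluster has a default StorageClass that would otherwise provision a fresh volume for the claim, the claim can be pinned. A minimal sketch, assuming the same names my-pvc and my-pv; the storageClassName and volumeName fields are additions that are not in the original manifest:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-pvc
spec:
  storageClassName: ""   # assumption: empty string opts out of any default StorageClass
  volumeName: my-pv      # assumption: bind to this PV only
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
```

With volumeName set, the claim stays Pending until my-pv exists and is available, rather than binding to some other PV that happens to fit.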

Test access:

Look up the port exposed by the Service:

kubectl get svc
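
The Service itself is not shown in this post. A minimal sketch of a NodePort Service that could expose the nginx Deployment, assuming a hypothetical name nginx-service and taking node port 30009 from the address used in this test:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service   # hypothetical name, not from the original post
spec:
  type: NodePort
  selector:
    app: nginx          # matches the Pod labels of nginx-deployment
  ports:
  - port: 80
    targetPort: 80
    nodePort: 30009     # assumed from the 192.168.1.40:30009 address in this post
```

A NodePort Service listens on the same port on every node, so any node IP can be used in the URL.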

On the NFS backend, we put some content to display into index.html:

[root@master container_data]# cat index.html

xianrenqiu qiuqi

Then visit 192.168.1.40:30009:

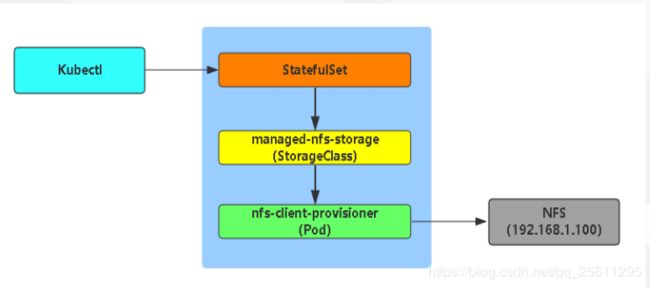

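PersistentVolumeClaim: dynamic PV provisioning

The core of the Dynamic Provisioning mechanism is the StorageClass API object.

A StorageClass names a storage plugin (the provisioner) that creates PVs automatically.

As workloads on the cluster grow, so does the number of PVCs, and creating a matching PV by hand for each one becomes a heavy burden. Storage needs to be provisioned and attached automatically.

That is the job of a StorageClass: it connects to the storage backend, and its provisioner creates a PV for each PVC and binds the two.

Storage plugins for which Kubernetes supports dynamic provisioning: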

https://kubernetes.io/docs/concepts/storage/storage-classes/

Kubernetes has no built-in provisioner for NFS, so we need an external provisioner plugin.

For deploying the NFS provisioner, see:

https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client/deploy

Deployment steps:

1. Define a StorageClass

[root@master storage]# cat storageclass-nfs.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs
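
This StorageClass leaves several fields at their defaults. A sketch that spells them out, assuming the same class name and provisioner; the annotation is an optional addition that is not in the original, and it marks the class as the cluster default so that PVCs without a storageClassName also use it:

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
  annotations:
    # Optional, not in the original: mark this class as the cluster default
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: fuseim.pri/ifs      # must equal PROVISIONER_NAME of the provisioner Deployment
reclaimPolicy: Delete            # the default when omitted
volumeBindingMode: Immediate     # the default when omitted
```

With reclaimPolicy set to Delete, deleting a PVC also removes the dynamically created PV, so Retain may be the safer choice for data that must outlive its claim.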

2. Set up authorization (RBAC)

The provisioner creates PVs through kube-apiserver, so it must be granted permission to do so.

[root@master storage]# cat rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
- apiGroups: [""]
  resources: ["persistentvolumes"]
  verbs: ["get", "list", "watch", "create", "delete"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims"]
  verbs: ["get", "list", "watch", "update"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["list", "watch", "create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
- kind: ServiceAccount
  name: nfs-client-provisioner
  namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io

3. Deploy a service that creates PVs automatically

Here the PVs are created automatically by nfs-client-provisioner:

[root@master storage]# cat deployment-nfs.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  # apps/v1 requires an explicit selector that matches the Pod template labels
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      # imagePullSecrets:
      # - name: registry-pull-secret
      serviceAccountName: nfs-client-provisioner
      containers:
      - name: nfs-client-provisioner
        #image: quay.io/external_storage/nfs-client-provisioner:latest
        image: lizhenliang/nfs-client-provisioner:v2.0.0
        volumeMounts:
        - name: nfs-client-root
          mountPath: /persistentvolumes
        env:
        - name: PROVISIONER_NAME
          # Must equal the provisioner field of the StorageClass
          value: fuseim.pri/ifs
        - name: NFS_SERVER
          value: 192.168.1.39
        - name: NFS_PATH
          value: /opt/container_data
      volumes:
      - name: nfs-client-root
        nfs:
          server: 192.168.1.39
          path: /opt/container_data

Create the resources:

kubectl apply -f storageclass-nfs.yaml

kubectl apply -f rbac.yaml

kubectl apply -f deployment-nfs.yaml

List the StorageClass that was created:

[root@master storage]# kubectl get sc

NAME                  PROVISIONER      AGE
managed-nfs-storage   fuseim.pri/ifs   11h

nfs-client-provisioner runs as a Pod in the cluster:

[root@master storage]# kubectl get pod

NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-855887f688-hrdwj   1/1     Running   0          10h
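
Before deploying a full workload, dynamic provisioning can be checked with a standalone claim. A minimal sketch, assuming a hypothetical claim name test-claim and the managed-nfs-storage class defined in step 1:

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim   # hypothetical name, not from the original post
spec:
  storageClassName: managed-nfs-storage   # the StorageClass defined in step 1
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
```

If the provisioner is working, kubectl get pvc should show the claim as Bound shortly after it is applied, with a PV that nobody created by hand.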

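4. Deploy a stateful service to test automatic PV creation

Reference manifest: https://kubernetes.io/docs/tutorials/stateful-application/basic-stateful-set/

We deploy an nginx service whose html directory gets a volume mounted automatically: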

[root@master ~]# cat nginx.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  ports:
  - port: 80
    name: web
  clusterIP: None
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: "nginx"
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
          name: web
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
  - metadata:
      name: www
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "managed-nfs-storage"
      resources:
        requests:
          storage: 1Gi

Create the resources:

kubectl apply -f nginx.yaml

Enter one of the containers and create a file:

kubectl exec -it web-0 -- sh

# cd /usr/share/nginx/html

# touch 1.txt

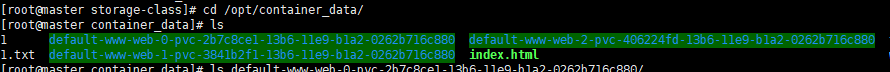

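On the NFS server, go to the data directory:

Three volume directories, one per replica, have been created automatically under the NFS export. Each is named <namespace>-<pvc-name>-<pv-name>.

Check whether the volume for web-0 contains the 1.txt file just created: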

[root@master container_data]# ls default-www-web-0-pvc-2b7c8ce1-13b6-11e9-b1a2-0262b716c880/

1.txt

Now delete the web-0 Pod to test whether the files in the volume disappear.

[root@master ~]# kubectl delete pod web-0

pod "web-0" deleted

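The test shows that once the Pod is deleted, the StatefulSet quickly brings up a new web-0 and no data is lost, because the new Pod reattaches the same PVC (www-web-0). This gives us dynamically provisioned persistent storage.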