OpenCV3.4.3DNN 模块中sample-colorization、Easy-textdetector、openpose

下载模型:

1、https://github.com/richzhang/colorization/tree/master/colorization/resources(#加载聚类中心)![]()

2、https://github.com/richzhang/colorization/blob/master/colorization/models/colorization_deploy_v2.prototxt(配置文件)

3、http://eecs.berkeley.edu/~rich.zhang/projects/2016_colorization/files/demo_v2/colorization_release_v2.caffemodel(caffe模型

着色模型(colorization model)

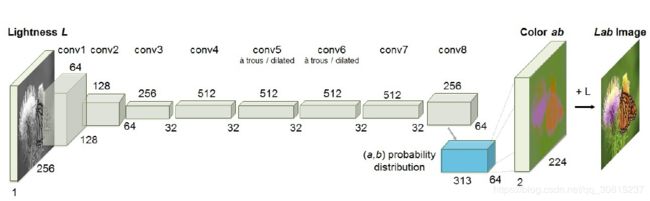

该模型与之前的基于CNN模型的不同之处在于,它是一个无监督的学习过程,不会把着色对象与训练生成看成是一个回归问题、而且是使用CIE Lab色彩空间,使用L分量作为输入,输出为颜色分量a,b,通过对颜色分量进行量化,把网络作为一个分类问题对待, 对得到输出结果,最终加上L分量之后,得到着色之后的图像,模型架构如下:

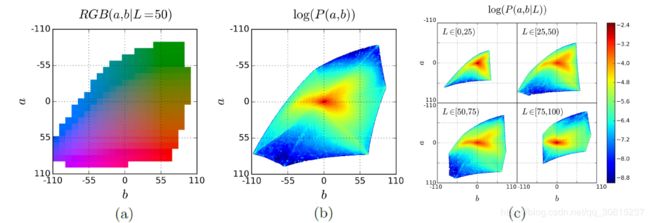

其中卷积层每一个block是有几个重复的conv卷积操作与ReLU + BN层构成!其中蓝色部分,是a,b颜色的313对 ab量化表示。最终学习到的就是WxHx313输出,进一步转换为Color ab的输出, 加上L分量之后就是完整的图像输出!313对ab色彩空间量化表示如下:

(a)量化的ab颜色空间,网格大小为10.总共313个ab对在色域。 (b)ab值的经验概率分布,以对数标度表示。

(c)以L为条件的ab值的经验概率分布以对数标度表示。

针对自然场景下,ab值较低导致生成图像的失真问题,通过分类再平衡技术依靠训练阶段,通过对损失函数调整像素权重,实现了比较好的效果。

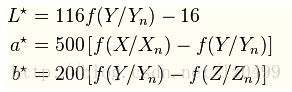

Lab 由三个通道组成,但不是R、G、B通道。它的一个通道是亮度,即L。另外两个是色彩通道,用A和B来表示。A通道包括的颜色是从深绿色(底亮度值)到灰色(中亮度值)再到亮粉红色(高亮度值);B通道则是从亮蓝色(底亮度值)到灰色(中亮度值)再到黄色(高亮度值)。Lab颜色空间中的L分量用于表示像素的亮度,取值范围是[0,100],表示从纯黑到纯白;a表示从红色到绿色的范围,取值范围是[127,-128];b表示从黄色到蓝色的范围,取值范围是[127,-128]。下图所示为Lab颜色空间的图示;

RGB转Lab颜色空间

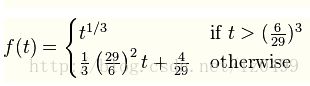

RGB颜色空间不能直接转换为Lab颜色空间,需要借助XYZ颜色空间,把RGB颜色空间转换到XYZ颜色空间,之后再把XYZ颜色空间转换到Lab颜色空间。RGB与XYZ颜色空间有如下关系:

其中 X = 0.412453 * R + 0.412453 *G+ 0.412453B ; 各系数相加之和为0.950456,非常接近于1,我们知道R/G/B的取值范围为[ 0,255 ],如果系数和等于1,则X的取值范围也必然在[ 0,255 ]之间,因此我们可以考虑等比修改各系数,使其之和等于1,这样就做到了XYZ和RGB在同等范围的映射。式中,Xn = 0.950456f; Yn = 1.0f; Zn = 1.088754f;

sample:

// D:\Program Files\OpenCV\opencv\sources\samples\dnn

// This file is part of OpenCV project.

// It is subject to the license terms in the LICENSE file found in the top-level directory

// of this distribution and at http://opencv.org/license.html

#include

#include

#include

#include

using namespace cv;

using namespace cv::dnn;

using namespace std;

// the 313 ab cluster centers from pts_in_hull.npy (already transposed)

static float hull_pts[] = {

-90., -90., -90., -90., -90., -80., -80., -80., -80., -80., -80., -80., -80., -70., -70., -70., -70., -70., -70., -70., -70.,

-70., -70., -60., -60., -60., -60., -60., -60., -60., -60., -60., -60., -60., -60., -50., -50., -50., -50., -50., -50., -50., -50.,

-50., -50., -50., -50., -50., -50., -40., -40., -40., -40., -40., -40., -40., -40., -40., -40., -40., -40., -40., -40., -40., -30.,

-30., -30., -30., -30., -30., -30., -30., -30., -30., -30., -30., -30., -30., -30., -30., -20., -20., -20., -20., -20., -20., -20.,

-20., -20., -20., -20., -20., -20., -20., -20., -20., -10., -10., -10., -10., -10., -10., -10., -10., -10., -10., -10., -10., -10.,

-10., -10., -10., -10., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 0., 10., 10., 10., 10., 10., 10., 10.,

10., 10., 10., 10., 10., 10., 10., 10., 10., 10., 10., 20., 20., 20., 20., 20., 20., 20., 20., 20., 20., 20., 20., 20., 20., 20.,

20., 20., 20., 30., 30., 30., 30., 30., 30., 30., 30., 30., 30., 30., 30., 30., 30., 30., 30., 30., 30., 30., 40., 40., 40., 40.,

40., 40., 40., 40., 40., 40., 40., 40., 40., 40., 40., 40., 40., 40., 40., 40., 50., 50., 50., 50., 50., 50., 50., 50., 50., 50.,

50., 50., 50., 50., 50., 50., 50., 50., 50., 60., 60., 60., 60., 60., 60., 60., 60., 60., 60., 60., 60., 60., 60., 60., 60., 60.,

60., 60., 60., 70., 70., 70., 70., 70., 70., 70., 70., 70., 70., 70., 70., 70., 70., 70., 70., 70., 70., 70., 70., 80., 80., 80.,

80., 80., 80., 80., 80., 80., 80., 80., 80., 80., 80., 80., 80., 80., 80., 80., 90., 90., 90., 90., 90., 90., 90., 90., 90., 90.,

90., 90., 90., 90., 90., 90., 90., 90., 90., 100., 100., 100., 100., 100., 100., 100., 100., 100., 100., 50., 60., 70., 80., 90.,

20., 30., 40., 50., 60., 70., 80., 90., 0., 10., 20., 30., 40., 50., 60., 70., 80., 90., -20., -10., 0., 10., 20., 30., 40., 50.,

60., 70., 80., 90., -30., -20., -10., 0., 10., 20., 30., 40., 50., 60., 70., 80., 90., 100., -40., -30., -20., -10., 0., 10., 20.,

30., 40., 50., 60., 70., 80., 90., 100., -50., -40., -30., -20., -10., 0., 10., 20., 30., 40., 50., 60., 70., 80., 90., 100., -50.,

-40., -30., -20., -10., 0., 10., 20., 30., 40., 50., 60., 70., 80., 90., 100., -60., -50., -40., -30., -20., -10., 0., 10., 20.,

30., 40., 50., 60., 70., 80., 90., 100., -70., -60., -50., -40., -30., -20., -10., 0., 10., 20., 30., 40., 50., 60., 70., 80., 90.,

100., -80., -70., -60., -50., -40., -30., -20., -10., 0., 10., 20., 30., 40., 50., 60., 70., 80., 90., -80., -70., -60., -50.,

-40., -30., -20., -10., 0., 10., 20., 30., 40., 50., 60., 70., 80., 90., -90., -80., -70., -60., -50., -40., -30., -20., -10.,

0., 10., 20., 30., 40., 50., 60., 70., 80., 90., -100., -90., -80., -70., -60., -50., -40., -30., -20., -10., 0., 10., 20., 30.,

40., 50., 60., 70., 80., 90., -100., -90., -80., -70., -60., -50., -40., -30., -20., -10., 0., 10., 20., 30., 40., 50., 60., 70.,

80., -110., -100., -90., -80., -70., -60., -50., -40., -30., -20., -10., 0., 10., 20., 30., 40., 50., 60., 70., 80., -110., -100.,

-90., -80., -70., -60., -50., -40., -30., -20., -10., 0., 10., 20., 30., 40., 50., 60., 70., 80., -110., -100., -90., -80., -70.,

-60., -50., -40., -30., -20., -10., 0., 10., 20., 30., 40., 50., 60., 70., -110., -100., -90., -80., -70., -60., -50., -40., -30.,

-20., -10., 0., 10., 20., 30., 40., 50., 60., 70., -90., -80., -70., -60., -50., -40., -30., -20., -10., 0.

};

int main(int argc, char **argv)

{

const string about =

"This sample demonstrates recoloring grayscale images with dnn.\n"

"This program is based on:\n"

" http://richzhang.github.io/colorization\n"

" https://github.com/richzhang/colorization\n"

"Download caffemodel and prototxt files:\n"

" http://eecs.berkeley.edu/~rich.zhang/projects/2016_colorization/files/demo_v2/colorization_release_v2.caffemodel\n"

" https://raw.githubusercontent.com/richzhang/colorization/master/colorization/models/colorization_deploy_v2.prototxt\n";

const string keys =

"{ h help | | print this help message }"

"{ proto | colorization_deploy_v2.prototxt | model configuration }"

"{ model | colorization_release_v2.caffemodel | model weights }"

"{ image | house.jpg | path to image file }"

"{ opencl | | enable OpenCL }";

CommandLineParser parser(argc, argv, keys);

parser.about(about);

if (parser.has("help"))

{

parser.printMessage();

return 0;

}

string modelTxt = parser.get("proto");

string modelBin = parser.get("model");

string imageFile = parser.get("image");

bool useOpenCL = parser.has("opencl");

if (!parser.check())

{

parser.printErrors();

return 1;

}

Mat img = imread(imageFile, 1);

if (img.empty())

{

cout << "Can't read image from file: " << imageFile << endl;

return 2;

}

// fixed input size for the pretrained network

const int W_in = 224;

const int H_in = 224;

Net net = dnn::readNetFromCaffe(modelTxt, modelBin);

if (useOpenCL)

net.setPreferableTarget(DNN_TARGET_OPENCL);//要求网络在特定目标设备上进行计算。

// setup additional layers:设置其他图层

int sz[] = { 2, 313, 1, 1 };

const Mat pts_in_hull(4, sz, CV_32F, hull_pts);//四维矩阵

Ptr class8_ab = net.getLayer("class8_ab");//取出class8_ab层,对该层进行修改

class8_ab->blobs.push_back(pts_in_hull);//作为blob存储的学习参数列表

Ptr conv8_313_rh = net.getLayer("conv8_313_rh");

conv8_313_rh->blobs.push_back(Mat(1, 313, CV_32F, Scalar(2.606)));

// extract L channel and subtract mean

Mat lab, L, input;

img.convertTo(img, CV_32F, 1.0 / 255);

cvtColor(img, lab, COLOR_BGR2Lab);

extractChannel(lab, L, 0);//0代表通道索引

resize(L, input, Size(W_in, H_in));//调整输入尺寸,与prototxt中输入层的参数一致

input -= 50;//减平均值

// run the L channel through the network

Mat inputBlob = blobFromImage(input);

net.setInput(inputBlob);

Mat result = net.forward();//输出{1,2,56,56}四维Mat

// retrieve the calculated a,b channels from the network output

Size sizz(result.size[0], result.size[1]);

Size siz(result.size[2], result.size[3]);

Mat a = Mat(siz, CV_32F, result.ptr(0, 0));//56*56

Mat b = Mat(siz, CV_32F, result.ptr(0, 1));

resize(a, a, img.size());

resize(b, b, img.size());

// merge, and convert back to BGR

Mat color, chn[] = { L, a, b };

merge(chn, 3, lab);

cvtColor(lab, color, COLOR_Lab2BGR);

imshow("color", color);

imshow("original", img);

waitKey();

return 0;

}

EAST深度学习文本检测器:

原文链接:https://www.colabug.com/4173878.html

NMSBoxes函数在给定框和相应分数下执行非最大抑制:

void cv::dnn::NMSBoxes ( const std::vector< Rect > & bboxes,

const std::vector< float > & scores,

const float score_threshold,

const float nms_threshold,

std::vector< int > & indices,

const float eta = 1.f,

const int top_k = 0

) bboxes:一组矩形框。

scores:一组相应的置信度。

score_threshold:用于按分数过滤矩形框的阈值。

nms_threshold:用于非最大抑制的阈值。

indices:NMS后保留的bbox指数。

eta:自适应阈值公式中的系数:nms_thresholdi + 1 =eta⋅nms_thresholdi。

opencv实例:

#include

#include

#include

using namespace cv;

using namespace cv::dnn;

const char* keys =

"{ help h | | Print help message. }"

"{ input i | 1.jpg| Path to input image or video file. Skip this argument to capture frames from a camera.}"

"{ model m |frozen_east_text_detection.pb | Path to a binary .pb file contains trained network.}"

"{ width | 320 | Preprocess input image by resizing to a specific width. It should be multiple by 32. }"

"{ height | 320 | Preprocess input image by resizing to a specific height. It should be multiple by 32. }"

"{ thr | 0.5 | Confidence threshold. }"

"{ nms | 0.4 | Non-maximum suppression threshold. }";

void decode(const Mat& scores, const Mat& geometry, float scoreThresh,

std::vector& detections, std::vector& confidences);

int main(int argc, char** argv)

{

// Parse command line arguments.

CommandLineParser parser(argc, argv, keys);

parser.about("Use this script to run TensorFlow implementation (https://github.com/argman/EAST) of "

"EAST: An Efficient and Accurate Scene Text Detector (https://arxiv.org/abs/1704.03155v2)");

if (argc == 1 || parser.has("help"))

{

parser.printMessage();

//return 0;

}

float confThreshold = parser.get("thr");//得分阈值

float nmsThreshold = parser.get("nms"); //非极大值抑制

int inpWidth = parser.get("width");

int inpHeight = parser.get("height");

String model = parser.get("model");

if (!parser.check())

{

parser.printErrors();

return 1;

}

CV_Assert(!model.empty());

// Load network.

Net net = readNet(model);

// Open a video file or an image file or a camera stream.

VideoCapture cap;

if (parser.has("input"))

cap.open(parser.get("input"));

else

cap.open(0);

static const std::string kWinName = "EAST: An Efficient and Accurate Scene Text Detector";

namedWindow(kWinName, WINDOW_NORMAL);

std::vector outs;

std::vector outNames(2);

outNames[0] = "feature_fusion/Conv_7/Sigmoid";

outNames[1] = "feature_fusion/concat_3";

Mat frame, blob;

while (waitKey(1) < 0)

{

cap >> frame;

if (frame.empty())

{

waitKey();

break;

}

blobFromImage(frame, blob, 1.0, Size(inpWidth, inpHeight), Scalar(123.68, 116.78, 103.94), true, false);

net.setInput(blob);

net.forward(outs, outNames);

Mat scores = outs[0];// 都是4维

Mat geometry = outs[1];

// Decode predicted bounding boxes.

std::vector boxes;

std::vector confidences;

decode(scores, geometry, confThreshold, boxes, confidences);//boxes是vector,confidences是得分

// Apply non-maximum suppression procedure.应用非最大抑制

std::vector indices;

NMSBoxes(boxes, confidences, confThreshold, nmsThreshold, indices);//indices存放保留下来的矩形框的索引

// Render detections.

Point2f ratio((float)frame.cols / inpWidth, (float)frame.rows / inpHeight);

for (size_t i = 0; i < indices.size(); ++i)

{

RotatedRect& box = boxes[indices[i]];

Point2f vertices[4];

box.points(vertices);

for (int j = 0; j < 4; ++j)

{

vertices[j].x *= ratio.x;

vertices[j].y *= ratio.y;

}

for (int j = 0; j < 4; ++j)//画出矩形框

line(frame, vertices[j], vertices[(j + 1) % 4], Scalar(0, 255, 0), 1);

}

// Put efficiency information.

std::vector layersTimes;

double freq = getTickFrequency() / 1000;

double t = net.getPerfProfile(layersTimes) / freq;

std::string label = format("Inference time: %.2f ms", t);

putText(frame, label, Point(0, 15), FONT_HERSHEY_SIMPLEX, 0.5, Scalar(0, 255, 0));

imshow(kWinName, frame);

}

system("pause");

return 0;

}

void decode(const Mat& scores, const Mat& geometry, float scoreThresh,

std::vector& detections, std::vector& confidences)

{

detections.clear();

CV_Assert(scores.dims == 4); CV_Assert(geometry.dims == 4); CV_Assert(scores.size[0] == 1);

CV_Assert(geometry.size[0] == 1); CV_Assert(scores.size[1] == 1); CV_Assert(geometry.size[1] == 5);

CV_Assert(scores.size[2] == geometry.size[2]); CV_Assert(scores.size[3] == geometry.size[3]);

const int height = scores.size[2];//1*1*80*80,存放每个像素的分数,与阈值比较

const int width = scores.size[3];

for (int y = 0; y < height; ++y)

{

const float* scoresData = scores.ptr(0, 0, y);//取一行

const float* x0_data = geometry.ptr(0, 0, y);//1*5*80*80存放的是矩形框吧

const float* x1_data = geometry.ptr(0, 1, y);

const float* x2_data = geometry.ptr(0, 2, y);

const float* x3_data = geometry.ptr(0, 3, y);

const float* anglesData = geometry.ptr(0, 4, y);

for (int x = 0; x < width; ++x)

{

float score = scoresData[x];

if (score < scoreThresh)

continue;

// Decode a prediction.

// Multiple by 4 because feature maps are 4 time less than input image.

float offsetX = x * 4.0f, offsetY = y * 4.0f;

float angle = anglesData[x];

float cosA = std::cos(angle);

float sinA = std::sin(angle);

float h = x0_data[x] + x2_data[x];

float w = x1_data[x] + x3_data[x];

Point2f offset(offsetX + cosA * x1_data[x] + sinA * x2_data[x],

offsetY - sinA * x1_data[x] + cosA * x2_data[x]);

Point2f p1 = Point2f(-sinA * h, -cosA * h) + offset;

Point2f p3 = Point2f(-cosA * w, sinA * w) + offset;

RotatedRect r(0.5f * (p1 + p3), Size2f(w, h), -angle * 180.0f / (float)CV_PI);//定义矩形框

detections.push_back(r);

confidences.push_back(score);

}

}

}

身体姿势检测

https://github.com/CMU-Perceptual-Computing-Lab/openpose/tree/master/models

https://www.cnblogs.com/caffeaoto/p/7793994.html

//

// this sample demonstrates the use of pretrained openpose networks with opencv's dnn module.

//

// it can be used for body pose detection, using either the COCO model(18 parts):

// http://posefs1.perception.cs.cmu.edu/OpenPose/models/pose/coco/pose_iter_440000.caffemodel

// https://raw.githubusercontent.com/opencv/opencv_extra/master/testdata/dnn/openpose_pose_coco.prototxt

//

// or the MPI model(16 parts):

// http://posefs1.perception.cs.cmu.edu/OpenPose/models/pose/mpi/pose_iter_160000.caffemodel

// https://raw.githubusercontent.com/opencv/opencv_extra/master/testdata/dnn/openpose_pose_mpi_faster_4_stages.prototxt

//

// (to simplify this sample, the body models are restricted to a single person.)

//

//

// you can also try the hand pose model:

// http://posefs1.perception.cs.cmu.edu/OpenPose/models/hand/pose_iter_102000.caffemodel

// https://raw.githubusercontent.com/CMU-Perceptual-Computing-Lab/openpose/master/models/hand/pose_deploy.prototxt

//

#include

#include

#include

using namespace cv;

using namespace cv::dnn;

#include

using namespace std;

// connection table, in the format [model_id][pair_id][from/to]

// please look at the nice explanation at the bottom of:

// https://github.com/CMU-Perceptual-Computing-Lab/openpose/blob/master/doc/output.md

//

const int POSE_PAIRS[3][20][2] = {

{ // COCO body

{ 1,2 },{ 1,5 },{ 2,3 },

{ 3,4 },{ 5,6 },{ 6,7 },

{ 1,8 },{ 8,9 },{ 9,10 },

{ 1,11 },{ 11,12 },{ 12,13 },

{ 1,0 },{ 0,14 },

{ 14,16 },{ 0,15 },{ 15,17 }

},

{ // MPI body

{ 0,1 },{ 1,2 },{ 2,3 },

{ 3,4 },{ 1,5 },{ 5,6 },

{ 6,7 },{ 1,14 },{ 14,8 },{ 8,9 },

{ 9,10 },{ 14,11 },{ 11,12 },{ 12,13 }

},

{ // hand

{ 0,1 },{ 1,2 },{ 2,3 },{ 3,4 }, // thumb

{ 0,5 },{ 5,6 },{ 6,7 },{ 7,8 }, // pinkie

{ 0,9 },{ 9,10 },{ 10,11 },{ 11,12 }, // middle

{ 0,13 },{ 13,14 },{ 14,15 },{ 15,16 }, // ring

{ 0,17 },{ 17,18 },{ 18,19 },{ 19,20 } // small

} };

int main(int argc, char **argv)

{

CommandLineParser parser(argc, argv,

"{ h help | false | print this help message }"

"{ p proto | pose_deploy.prototxt | (required) model configuration, e.g. hand/pose.prototxt }"

"{ m model | pose_iter_102000.caffemodel | (required) model weights, e.g. hand/pose_iter_102000.caffemodel }"

"{ i image | hand.jpg | (required) path to image file (containing a single person, or hand) }"

"{ width | 368 | Preprocess input image by resizing to a specific width. }"

"{ height | 368 | Preprocess input image by resizing to a specific height. }"

"{ t threshold | 0.1 | threshold or confidence value for the heatmap }"

);

String modelTxt = parser.get("proto");

String modelBin = parser.get("model");

String imageFile = parser.get("image");

int W_in = parser.get("width");

int H_in = parser.get("height");

float thresh = parser.get("threshold");

if (parser.get("help") || modelTxt.empty() || modelBin.empty() || imageFile.empty())

{

cout << "A sample app to demonstrate human or hand pose detection with a pretrained OpenPose dnn." << endl;

parser.printMessage();

return 0;

}

// read the network model

Net net = readNetFromCaffe(modelTxt, modelBin);

// and the image

Mat img = imread(imageFile);

if (img.empty())

{

std::cerr << "Can't read image from the file: " << imageFile << std::endl;

exit(-1);

}

// send it through the network

Mat inputBlob = blobFromImage(img, 1.0 / 255, Size(W_in, H_in), Scalar(0, 0, 0), false, false);

net.setInput(inputBlob);

Mat result = net.forward();

// the result is an array of "heatmaps", the probability of a body part being in location x,y

int midx, npairs;

int nparts = result.size[1];

int H = result.size[2];

int W = result.size[3];

// find out, which model we have

if (nparts == 19)

{ // COCO body

midx = 0;

npairs = 17;

nparts = 18; // skip background

}

else if (nparts == 16)

{ // MPI body

midx = 1;

npairs = 14;

}

else if (nparts == 22)

{ // hand

midx = 2;

npairs = 20;

}

else

{

cerr << "there should be 19 parts for the COCO model, 16 for MPI, or 22 for the hand one, but this model has " << nparts << " parts." << endl;

return (0);

}

// find the position of the body parts

vector points(22);

for (int n = 0; n thresh)

p = pm;

points[n] = p;

}

// connect body parts and draw it !

float SX = float(img.cols) / W;

float SY = float(img.rows) / H;

for (int n = 0; n