Installing hue-3.9-cdh-5.7.0

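This installation hit a major pitfall. I had previously installed MySQL from rpm packages and removed the mariadb that ships with CentOS 7, so installing mysql-devel with yum failed. I therefore installed Hue from rpm packages as well.

1. Download the packages

Download URL: http://archive.cloudera.com/cdh5/redhat/5/x86_64/cdh/5.7.0/RPMS/x86_64/

# There are 15 rpm packages in total. Install beeswax last; I learned this the hard way.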

hue-3.9.0+cdh5.15.1+8420-1.cdh5.15.1.p0.4.el7.x86_64.rpm

hue-beeswax-3.9.0+cdh5.15.1+8420-1.cdh5.15.1.p0.4.el7.x86_64.rpm

hue-common-3.9.0+cdh5.15.1+8420-1.cdh5.15.1.p0.4.el7.x86_64.rpm

hue-doc-3.9.0+cdh5.15.1+8420-1.cdh5.15.1.p0.4.el7.x86_64.rpm

hue-hbase-3.9.0+cdh5.15.1+8420-1.cdh5.15.1.p0.4.el7.x86_64.rpm

hue-impala-3.9.0+cdh5.15.1+8420-1.cdh5.15.1.p0.4.el7.x86_64.rpm

hue-pig-3.9.0+cdh5.15.1+8420-1.cdh5.15.1.p0.4.el7.x86_64.rpm

hue-plugins-3.9.0+cdh5.15.1+8420-1.cdh5.15.1.p0.4.el7.x86_64.rpm

hue-rdbms-3.9.0+cdh5.15.1+8420-1.cdh5.15.1.p0.4.el7.x86_64.rpm

hue-search-3.9.0+cdh5.15.1+8420-1.cdh5.15.1.p0.4.el7.x86_64.rpm

hue-security-3.9.0+cdh5.15.1+8420-1.cdh5.15.1.p0.4.el7.x86_64.rpm

hue-server-3.9.0+cdh5.15.1+8420-1.cdh5.15.1.p0.4.el7.x86_64.rpm

hue-spark-3.9.0+cdh5.15.1+8420-1.cdh5.15.1.p0.4.el7.x86_64.rpm

hue-sqoop-3.9.0+cdh5.15.1+8420-1.cdh5.15.1.p0.4.el7.x86_64.rpm

hue-zookeeper-3.9.0+cdh5.15.1+8420-1.cdh5.15.1.p0.4.el7.x86_64.rpm

Running rpm -ivh xxxxxx.rpm fails with an error containing "freely redistributed under the terms of the GNU GPL".

Solution:

rpm -ivh xxx.rpm --nodeps --force
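Since the order matters (beeswax last), here is a minimal sketch of a loop that installs every package with the flags above and moves hue-beeswax to the end. The function names and the RPM_CMD override are my own, added only so the loop can be dry-run first; note that --nodeps --force skips rpm's dependency checks, so missing libraries only show up later.

```shell
# Print the given rpm paths one per line, with any hue-beeswax package moved last.
order_hue_rpms() {
  for f in "$@"; do
    case "${f##*/}" in
      hue-beeswax-*) ;;                      # held back for the second pass
      *) printf '%s\n' "$f" ;;
    esac
  done
  for f in "$@"; do
    case "${f##*/}" in
      hue-beeswax-*) printf '%s\n' "$f" ;;
    esac
  done
}

# Install in that order. RPM_CMD is a hypothetical override: set
# RPM_CMD="echo rpm" to print the commands instead of running them.
install_hue_rpms() {
  order_hue_rpms "$@" | while IFS= read -r pkg; do
    ${RPM_CMD:-rpm} -ivh "$pkg" --nodeps --force
  done
}

# Usage (as root, in the directory holding the 15 packages):
#   RPM_CMD="echo rpm" install_hue_rpms ./hue-*.rpm   # dry run
#   install_hue_rpms ./hue-*.rpm                      # real install
```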

2. Build

# Once installation finishes, go to the hue directory and build

[hadoop@hadoop001 bin]$ cd /usr/lib/hue/

[hadoop@hadoop001 hue]$ make apps

If the build complains that libexslt.so.0 and libxslt.so.1 are missing (they belong in /usr/lib64/), copy them over from another machine or download them.
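To find out before running make whether those two files are present, a small check like the following can help. This is only a sketch: missing_libs is a helper name I made up, and it simply tests for the files in the directory you pass in. On CentOS these libraries normally come from the libxslt package, if yum is usable on your machine.

```shell
# Print each library name that is NOT present in the given directory.
missing_libs() {
  dir=$1
  shift
  for lib in "$@"; do
    [ -e "$dir/$lib" ] || printf '%s\n' "$lib"
  done
}

# Usage: prints nothing when both files exist.
#   missing_libs /usr/lib64 libexslt.so.0 libxslt.so.1
```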

3. Configuration for integrating with the big data environment

hdfs-site.xml configuration

<!-- Enable WebHDFS. -->

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

core-site.xml configuration

<!-- Hue configuration -->

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

</property>

yarn-site.xml configuration

<!-- Enable log aggregation to HDFS -->

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<!-- Maximum number of seconds to keep aggregated logs on HDFS: 3 days -->

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>259200</value>

</property>

</configuration>
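The retention value above is just 3 days expressed in seconds. If you want a different retention period, a one-line helper (days_to_seconds is my own name, not part of Hadoop) does the arithmetic:

```shell
# Convert a number of days to seconds for yarn.log-aggregation.retain-seconds.
days_to_seconds() {
  echo $(( $1 * 24 * 60 * 60 ))
}

days_to_seconds 3   # prints 259200, the value used above
```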

httpfs-site.xml configuration

<property>

<name>httpfs.proxyuser.hue.hosts</name>

<value>*</value>

</property>

<property>

<name>httpfs.proxyuser.hue.groups</name>

<value>*</value>

</property>

Integrating Hive

# Edit the /etc/hue/conf/hue.ini configuration file

[hadoop]

[[hdfs_clusters]]

[[[default]]]

fs_defaultfs=hdfs://hadoop001:8020

webhdfs_url=http://hadoop001:50070/webhdfs/v1

[[yarn_clusters]]

[[[default]]]

resourcemanager_host=hadoop001

resourcemanager_port=8032

submit_to=True

resourcemanager_api_url=http://hadoop001:8088

proxy_api_url=http://hadoop001:8088

history_server_api_url=http://hadoop001:19888

[beeswax]

hive_server_host=hadoop001

hive_server_port=10000
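Before starting Hue it can be worth checking that the webhdfs_url configured above actually answers. The sketch below only builds a WebHDFS GETFILESTATUS request URL; webhdfs_status_url is a helper name of my own, and the path /user/hadoop and user hadoop in the usage line are assumptions based on my setup.

```shell
# Build a WebHDFS GETFILESTATUS URL from a webhdfs_url base, an HDFS path and a user.
webhdfs_status_url() {
  base=${1%/}        # drop a trailing slash from the base URL, if any
  path=$2            # absolute HDFS path, e.g. /user/hadoop
  user=$3            # value for the user.name query parameter
  printf '%s%s?op=GETFILESTATUS&user.name=%s\n' "$base" "$path" "$user"
}

# Usage: a JSON FileStatus reply means WebHDFS is enabled and reachable.
#   curl -s "$(webhdfs_status_url http://hadoop001:50070/webhdfs/v1 /user/hadoop hadoop)"
```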

hive-site.xml configuration

<property>

<name>hive.metastore.uris</name>

<value>thrift://192.168.9.128:9083</value>

<description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>192.168.9.128</value>

<description>Bind host on which to run the HiveServer2 Thrift service.</description>

</property>

</configuration>

On a cluster, sync the configuration to the other machines as well.
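One way to do the sync is a simple scp loop over the Hadoop config files edited above. This is a sketch under assumptions: the config directory, the host names hadoop002 and hadoop003, and the COPY_CMD dry-run override are all hypothetical, and hive-site.xml usually lives in a separate Hive config directory, so it is not included here.

```shell
# Copy the four Hadoop config files to the same directory on each listed host.
# COPY_CMD is a hypothetical override: COPY_CMD="echo scp" prints the commands.
sync_conf() {
  conf_dir=$1
  shift
  for host in "$@"; do
    ${COPY_CMD:-scp} "$conf_dir/hdfs-site.xml" "$conf_dir/core-site.xml" \
      "$conf_dir/yarn-site.xml" "$conf_dir/httpfs-site.xml" "$host:$conf_dir/"
  done
}

# Usage (directory and hosts are placeholders for your own):
#   COPY_CMD="echo scp" sync_conf /etc/hadoop/conf hadoop002 hadoop003
```

Restart the affected Hadoop services afterwards so the new settings are picked up.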

4. Hue environment configuration

# Environment variables

export HUE_HOME=/usr/lib/hue

export PATH=$HUE_HOME/build/env/bin:$PATH

# Change the permissions on desktop

[hadoop@hadoop001 desktop]$ pwd

/usr/lib/hue/desktop

[hadoop@hadoop001 desktop]$ chmod 777 /usr/lib/hue/desktop/

[hadoop@hadoop001 desktop]$ chmod 766 /usr/lib/hue/desktop/desktop.db

[hadoop@hadoop001 desktop]$ ll

total 4

lrwxrwxrwx. 1 hadoop hadoop 13 Apr 17 09:10 conf -> /etc/hue/conf

drwxr-xr-x. 4 hadoop hadoop 172 Apr 17 09:17 core

lrwxrwxrwx. 1 hadoop hadoop 23 Apr 17 09:10 desktop.db -> /var/lib/hue/desktop.db

lrwxrwxrwx. 1 hadoop hadoop 23 Apr 17 09:10 desktop.db.rpmsave.20190417.091101OURCE -> /var/lib/hue/desktop.db

drwxr-xr-x. 15 hadoop hadoop 210 Apr 17 09:11 libs

lrwxrwxrwx. 1 hadoop hadoop 12 Apr 17 09:11 logs -> /var/log/hue

-rw-r--r--. 1 hadoop hadoop 3467 Mar 23 2016 Makefile

# Edit the hue.ini file

[hadoop@hadoop001 conf]$ pwd

/etc/hue/conf

# Webserver listens on this address and port

http_host=hadoop001

http_port=8888

# Switch Hue's database to MySQL

[[database]]

engine=mysql

host=localhost

port=3306

user=root

password=123456

name=hue

# mysql, oracle, or postgresql configuration.

[[[mysql]]]

nice_name="My SQL DB"

name=hue

engine=mysql

host=localhost

port=3306
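The settings above point Hue at a MySQL database named hue. The steps in this post do not show creating it, so if it does not exist yet on your server, something like the following may be needed first. This is an assumption on my part rather than a step from the original install; create_db_sql is a made-up helper and the utf8 character set is just a common choice.

```shell
# Print the SQL that creates the given database if it is missing.
create_db_sql() {
  printf 'CREATE DATABASE IF NOT EXISTS %s DEFAULT CHARACTER SET utf8;\n' "$1"
}

# Usage: pipe the statement into the mysql client (prompts for the password).
#   create_db_sql hue | mysql -uroot -p
```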

5. Initialize the Hue database

[hadoop@hadoop001 bin]$ hue syncdb

[hadoop@hadoop001 bin]$ hue migrate

6. Start Hue

# 1. Start the Hive metastore

[hadoop@hadoop001 bin]$ nohup hive --service metastore &

# 2. Start hiveserver2

[hadoop@hadoop001 bin]$ nohup hive --service hiveserver2 &

# 3. Start Hue

[hadoop@hadoop001 bin]$ nohup supervisor &

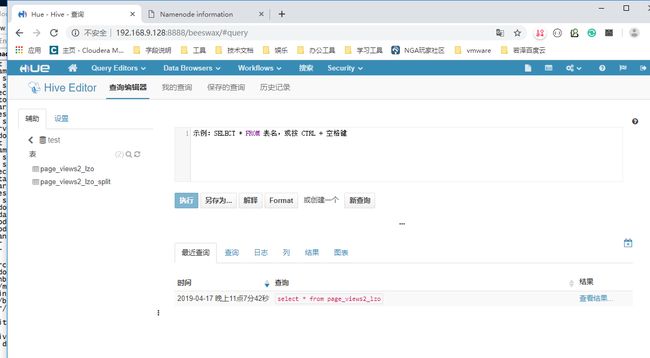

7. View the Hue web UI

8. Pitfalls

If the build fails with Error in sasl_client_start (-4) SASL(-4): no mechanism available: No worthy mechs found, install the following packages:

yum install cyrus-sasl-plain cyrus-sasl-devel cyrus-sasl-gssapi

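The error "hue unable to open database file" appears

This is a permissions problem on desktop and desktop.db; see the environment configuration above.

The Hue UI shows "hue database is locked". This happens when the database settings have not been switched to MySQL.

Change the database configuration in hue.ini to MySQL.

The Hue UI shows the error "Could not connect to hadoop001:10000"

Either hive --service metastore or hive --service hiveserver2 has not been started.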
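To confirm which service is down, you can probe the two ports from the Hue machine. The sketch below relies on bash's /dev/tcp redirection, so it assumes bash is installed; port_open is my own helper name. Port 10000 is the hive_server_port from hue.ini and 9083 is the metastore port from hive.metastore.uris.

```shell
# Return 0 if a TCP connection to host:port succeeds, non-zero otherwise.
# Uses bash's /dev/tcp feature via "bash -c", so the caller can be any POSIX shell.
port_open() {
  bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Usage:
#   port_open hadoop001 9083  || echo "metastore is not listening"
#   port_open hadoop001 10000 || echo "hiveserver2 is not listening"
```

hiveserver2 can take a while to open its port after starting, so a failed probe right after launch does not necessarily mean it crashed.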

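Browsing HDFS in Hue reports: Cannot access: /user/hadoop. Note: you are a Hue admin but not a HDFS superuser, “hdfs” or part of HDFS supergroup, “supergroup”.

This happens because the default superuser is hdfs, whereas my cluster was started by the hadoop user, so the hue.ini configuration has to be changed: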

# This should be the hadoop cluster admin

default_hdfs_superuser=hadoop