Pythno3爬虫

一、网页下载器

from urllib import request

import http.cookiejar

print('First Method') #网页下载器

response1 = request.urlopen(url)

# print(response1) #

print(response1.getcode()) #状态码 200

# print(response1.read()) #页面源码

print(len(response1.read())) 2、添加header

print('Second Method')

req = request.Request(url)

req.add_header('user-agent', 'Mozilla/5.0') #将爬虫程序伪装为一个浏览器

response2 = request.urlopen(req)

print(response2.getcode())

print(len(response2.read()))3、添加特殊情境处理器

HttpCookieProcessor ProxyHandler HttpsHandler HttpRedictHandler

如添加cookie处理

print('Third Method')

cj = http.cookiejar.CookieJar() #创建cookie容器

opener = request.build_opener(request.HTTPCookieProcessor(cj))

request.install_opener(opener)

response3 = request.urlopen(url)

print(response3.getcode())

print(cj)二、网页解析

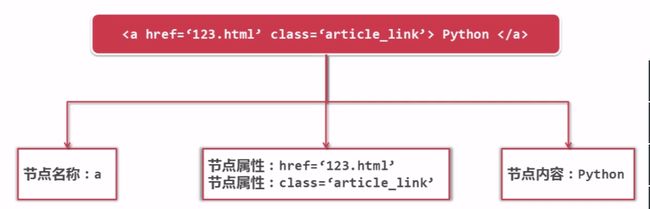

网页的组成

BeautifulSoup的使用

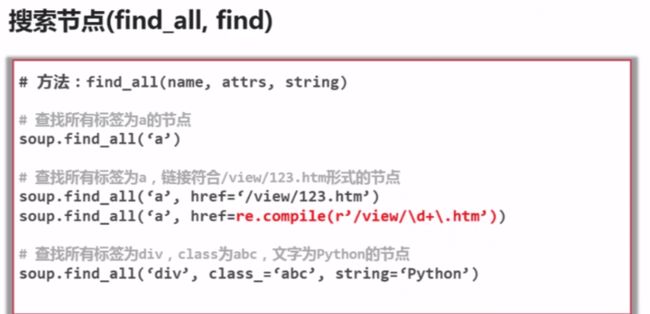

搜索节点

获取节点信息

正则表达式,html.parser,BeautifulSoup,LXML

from bs4 import BeautifulSoup

import re

html_doc = """

The Dormouse's story

The Dormouse's story

Once upon a time there were three little sisters; and their names were

哈哈,

嘿嘿 and

嘎嘎;

and they lived at the bottom of a well.

...

"""网页解析器

soup=BeautifulSoup(html_doc,'html.parser')

# soup=BeautifulSoup(html_doc,'html.parser',from_encoding='utf-8')links=soup.find_all('a')

for link in links:

print(link.name,link['href'],link.get_text())p_node=soup.find("p",class_="title") #加_ 来区别

print(p_node.name,p_node.get_text())link_node=soup.find("a",href="http://example.com/哈哈")

print(link_node.name,link_node['href'],link_node.get_text())

link_node=soup.find('a',href=re.compile(r"嘎")) #出现了\,只需要写一个 \

print(link_node.name,link_node["href"],link_node.get_text())附:urllib.parse.urljoin 解析

import urllib.parse

# http://www.baidu.com/a/1 域名之后的全部替换

print(urllib.parse.urljoin("http://www.baidu.com/1/s?a=1","/a/1"))

# http://www.baidu.com/1/a/1 只是最后/ 之后的替换

print(urllib.parse.urljoin("http://www.baidu.com/1/s?a=1","a/1"))

# http://www.baidu.com/1/2/a/1

print(urllib.parse.urljoin("http://www.baidu.com/1/2/s?a=1","a/1"))

# http://www.cwi.nl/FAQ.html

print(urllib.parse.urljoin('http://www.cwi.nl/%7Eguido/Python.html', '/FAQ.html'))

# http://www.cwi.nl/%7Eguido/FAQ.html

print(urllib.parse.urljoin('http://www.cwi.nl/%7Eguido/Python.html', 'FAQ.html'))

print(urllib.parse.urljoin("http://www.baidu.com/1/s?a=1","a/1"))

print(urllib.parse.urljoin("http://www.baidu.com/1/s?a=1","a/1"))