Problems encountered while learning Hadoop

The errors cover MapReduce, Hive, Flume, Azkaban, and Sqoop.

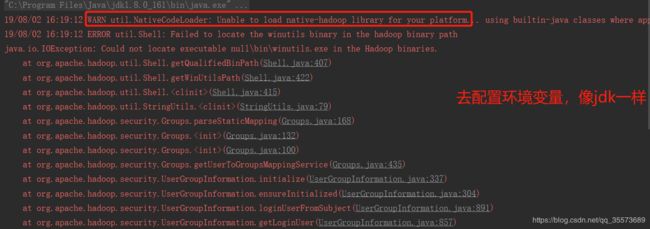

1 Error when starting the program:

19/08/02 16:19:12 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

19/08/02 16:19:12 ERROR util.Shell: Failed to locate the winutils binary in the hadoop binary path

java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.

at org.apache.hadoop.util.Shell.getQualifiedBinPath(Shell.java:407)

at org.apache.hadoop.util.Shell.getWinUtilsPath(Shell.java:422)

at org.apache.hadoop.util.Shell.(Shell.java:415)

at org.apache.hadoop.util.StringUtils.(StringUtils.java:79)

at org.apache.hadoop.security.Groups.parseStaticMapping(Groups.java:168)

at org.apache.hadoop.security.Groups.(Groups.java:132)

at org.apache.hadoop.security.Groups.(Groups.java:100)

at org.apache.hadoop.security.Groups.getUserToGroupsMappingService(Groups.java:435)

at org.apache.hadoop.security.UserGroupInformation.initialize(UserGroupInformation.java:337)

at org.apache.hadoop.security.UserGroupInformation.ensureInitialized(UserGroupInformation.java:304)

at org.apache.hadoop.security.UserGroupInformation.loginUserFromSubject(UserGroupInformation.java:891)

at org.apache.hadoop.security.UserGroupInformation.getLoginUser(UserGroupInformation.java:857)

at org.apache.hadoop.security.UserGroupInformation.getCurrentUser(UserGroupInformation.java:724)

at org.apache.hadoop.fs.viewfs.ViewFileSystem.(ViewFileSystem.java:136)

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at java.lang.Class.newInstance(Class.java:442)

at java.util.ServiceLoader$LazyIterator.nextService(ServiceLoader.java:380)

at java.util.ServiceLoader$LazyIterator.next(ServiceLoader.java:404)

at java.util.ServiceLoader$1.next(ServiceLoader.java:480)

at org.apache.hadoop.fs.FileSystem.loadFileSystems(FileSystem.java:2767)

at org.apache.hadoop.fs.FileSystem.getFileSystemClass(FileSystem.java:2786)

at org.apache.hadoop.fs.FsUrlStreamHandlerFactory.(FsUrlStreamHandlerFactory.java:62)

at org.apache.hadoop.fs.FsUrlStreamHandlerFactory.(FsUrlStreamHandlerFactory.java:55)

at com.wxj.hdfs.FdfsFileDemo.demo1(FdfsFileDemo.java:19)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.junit.runners.model.FrameworkMethod$1.runReflectiveCall(FrameworkMethod.java:47)

at org.junit.internal.runners.model.ReflectiveCallable.run(ReflectiveCallable.java:12)

at org.junit.runners.model.FrameworkMethod.invokeExplosively(FrameworkMethod.java:44)

at org.junit.internal.runners.statements.InvokeMethod.evaluate(InvokeMethod.java:17)

at org.junit.runners.ParentRunner.runLeaf(ParentRunner.java:271)

at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:70)

at org.junit.runners.BlockJUnit4ClassRunner.runChild(BlockJUnit4ClassRunner.java:50)

at org.junit.runners.ParentRunner$3.run(ParentRunner.java:238)

at org.junit.runners.ParentRunner$1.schedule(ParentRunner.java:63)

at org.junit.runners.ParentRunner.runChildren(ParentRunner.java:236)

at org.junit.runners.ParentRunner.access$000(ParentRunner.java:53)

at org.junit.runners.ParentRunner$2.evaluate(ParentRunner.java:229)

at org.junit.runners.ParentRunner.run(ParentRunner.java:309)

at org.junit.runner.JUnitCore.run(JUnitCore.java:160)

at com.intellij.junit4.JUnit4IdeaTestRunner.startRunnerWithArgs(JUnit4IdeaTestRunner.java:68)

at com.intellij.rt.execution.junit.IdeaTestRunner$Repeater.startRunnerWithArgs(IdeaTestRunner.java:47)

at com.intellij.rt.execution.junit.JUnitStarter.prepareStreamsAndStart(JUnitStarter.java:242)

at com.intellij.rt.execution.junit.JUnitStarter.main(JUnitStarter.java:70)

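This error does not stop the program from running. It appears because HADOOP_HOME is not set (note the null in null\bin\winutils.exe). To get rid of it, set the HADOOP_HOME environment variable to the Hadoop install directory, add its bin directory to Path, make sure winutils.exe is in that bin directory, and copy hadoop.dll from lib\native into the System32 directory. Then restart the system and try again; the error should no longer appear.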
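
As an alternative to editing the system environment, the Hadoop home can also be supplied from code. The sketch below is only an illustration: the class name HadoopHomeSetup is hypothetical, and the default path is assumed to be the install directory used later in this post. It sets the hadoop.home.dir system property, which Hadoop 2.x checks before falling back to HADOOP_HOME, and reports whether winutils.exe is really there.

```java
import java.io.File;

class HadoopHomeSetup {

    /** Builds the location where Hadoop expects winutils.exe under a given home directory. */
    static File winutilsUnder(String hadoopHome) {
        return new File(new File(hadoopHome, "bin"), "winutils.exe");
    }

    /** Sets hadoop.home.dir and reports whether winutils.exe is actually present there. */
    static boolean configure(String hadoopHome) {
        System.setProperty("hadoop.home.dir", hadoopHome);
        return winutilsUnder(hadoopHome).isFile();
    }

    public static void main(String[] args) {
        // Assumed install location; replace it with your own Hadoop directory.
        String home = args.length > 0 ? args[0] : "D:\\soft\\hadoop-2.6.0-cdh5.14.0";
        if (!configure(home)) {
            System.err.println("winutils.exe not found at " + winutilsUnder(home));
        }
        // Create the Hadoop Configuration / FileSystem only after this point.
    }
}
```

The property has to be set before the first Hadoop class is loaded, so in a JUnit test it would go in a static initializer or a @BeforeClass method.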

2 Error when running the WordCount program

2019-08-04 23:45:31,529 WARN [uber-SubtaskRunner] org.apache.hadoop.mapred.MapTask: Unable to initialize MapOutputCollector org.apache.hadoop.mapred.MapTask$MapOutputBuffer

java.lang.ClassCastException: class javafx.scene.text.Text

at java.lang.Class.asSubclass(Class.java:3404)

at org.apache.hadoop.mapred.JobConf.getOutputKeyComparator(JobConf.java:892)

at org.apache.hadoop.mapred.MapTask$MapOutputBuffer.init(MapTask.java:1011)

at org.apache.hadoop.mapred.MapTask.createSortingCollector(MapTask.java:402)

at org.apache.hadoop.mapred.MapTask.access$100(MapTask.java:81)

at org.apache.hadoop.mapred.MapTask$NewOutputCollector.(MapTask.java:704)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:776)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:341)

at org.apache.hadoop.mapred.LocalContainerLauncher$EventHandler.runSubtask(LocalContainerLauncher.java:388)

at org.apache.hadoop.mapred.LocalContainerLauncher$EventHandler.runTask(LocalContainerLauncher.java:302)

at org.apache.hadoop.mapred.LocalContainerLauncher$EventHandler.access$200(LocalContainerLauncher.java:187)

at org.apache.hadoop.mapred.LocalContainerLauncher$EventHandler$1.run(LocalContainerLauncher.java:230)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2019-08-04 23:45:31,536 WARN [uber-SubtaskRunner] org.apache.hadoop.mapred.LocalContainerLauncher: Exception running local (uberized) 'child' : java.io.IOException: Initialization of all the collectors failed. Error in last collector was:java.lang.ClassCastException: class javafx.scene.text.Text

at org.apache.hadoop.mapred.MapTask.createSortingCollector(MapTask.java:416)

at org.apache.hadoop.mapred.MapTask.access$100(MapTask.java:81)

at org.apache.hadoop.mapred.MapTask$NewOutputCollector.(MapTask.java:704)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:776)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:341)

at org.apache.hadoop.mapred.LocalContainerLauncher$EventHandler.runSubtask(LocalContainerLauncher.java:388)

at org.apache.hadoop.mapred.LocalContainerLauncher$EventHandler.runTask(LocalContainerLauncher.java:302)

at org.apache.hadoop.mapred.LocalContainerLauncher$EventHandler.access$200(LocalContainerLauncher.java:187)

at org.apache.hadoop.mapred.LocalContainerLauncher$EventHandler$1.run(LocalContainerLauncher.java:230)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.ClassCastException: class javafx.scene.text.Text

at java.lang.Class.asSubclass(Class.java:3404)

at org.apache.hadoop.mapred.JobConf.getOutputKeyComparator(JobConf.java:892)

at org.apache.hadoop.mapred.MapTask$MapOutputBuffer.init(MapTask.java:1011)

at org.apache.hadoop.mapred.MapTask.createSortingCollector(MapTask.java:402)

... 13 more

2019-08-04 23:45:31,537 INFO [uber-SubtaskRunner] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Progress of TaskAttempt attempt_1564461888774_0003_m_000000_0 is : 0.0

2019-08-04 23:45:31,614 INFO [uber-SubtaskRunner] org.apache.hadoop.mapred.Task: Runnning cleanup for the task

2019-08-04 23:45:31,671 WARN [uber-SubtaskRunner] org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter: Could not delete hdfs://node01:8020/wordcountout/_temporary/1/_temporary/attempt_1564461888774_0003_m_000000_0

2019-08-04 23:45:31,671 INFO [uber-SubtaskRunner] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Diagnostics report from attempt_1564461888774_0003_m_000000_0: java.io.IOException: Initialization of all the collectors failed. Error in last collector was:java.lang.ClassCastException: class javafx.scene.text.Text

at org.apache.hadoop.mapred.MapTask.createSortingCollector(MapTask.java:416)

at org.apache.hadoop.mapred.MapTask.access$100(MapTask.java:81)

at org.apache.hadoop.mapred.MapTask$NewOutputCollector.(MapTask.java:704)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:776)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:341)

at org.apache.hadoop.mapred.LocalContainerLauncher$EventHandler.runSubtask(LocalContainerLauncher.java:388)

at org.apache.hadoop.mapred.LocalContainerLauncher$EventHandler.runTask(LocalContainerLauncher.java:302)

at org.apache.hadoop.mapred.LocalContainerLauncher$EventHandler.access$200(LocalContainerLauncher.java:187)

at org.apache.hadoop.mapred.LocalContainerLauncher$EventHandler$1.run(LocalContainerLauncher.java:230)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.ClassCastException: class javafx.scene.text.Text

at java.lang.Class.asSubclass(Class.java:3404)

at org.apache.hadoop.mapred.JobConf.getOutputKeyComparator(JobConf.java:892)

at org.apache.hadoop.mapred.MapTask$MapOutputBuffer.init(MapTask.java:1011)

at org.apache.hadoop.mapred.MapTask.createSortingCollector(MapTask.java:402)

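... 13 more

The wrong Text class was imported: the IDE picked javafx.scene.text.Text, but the MapReduce key type must be the Hadoop one. The correct import is import org.apache.hadoop.io.Text;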

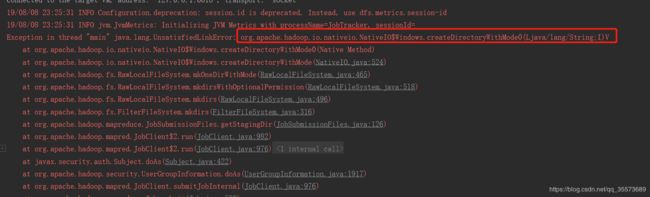
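
The stack trace shows the exception coming out of Class.asSubclass, called from JobConf.getOutputKeyComparator, which tries to narrow the map output key class to Hadoop's key interface. The sketch below reproduces that mechanism with plain JDK classes so it runs without Hadoop on the classpath. All names in it are hypothetical stand-ins: KeyContract plays the role of WritableComparable, GoodKey the role of org.apache.hadoop.io.Text, and BadKey the role of javafx.scene.text.Text.

```java
class AsSubclassDemo {

    // Hypothetical stand-ins for the Hadoop key interface and the two Text classes.
    interface KeyContract {}
    static class GoodKey implements KeyContract {}
    static class BadKey {}

    /** Returns true when keyClass can be narrowed to KeyContract, false when the cast is rejected. */
    static boolean isUsableKey(Class<?> keyClass) {
        try {
            keyClass.asSubclass(KeyContract.class);
            return true;
        } catch (ClassCastException e) {
            // The exception message is just the class's toString(), e.g. "class ...BadKey",
            // which is why the log above only says "class javafx.scene.text.Text".
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("GoodKey usable: " + isUsableKey(GoodKey.class)); // prints true
        System.out.println("BadKey usable: " + isUsableKey(BadKey.class));   // prints false
    }
}
```

Because the check only happens when the map task starts, the wrong import compiles cleanly and the job fails at runtime, so it is worth looking at the import lines first when this exception shows up.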

3 Error when testing the sort job in local file mode

19/08/08 23:25:31 INFO Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id

19/08/08 23:25:31 INFO jvm.JvmMetrics: Initializing JVM Metrics with processName=JobTracker, sessionId=

Exception in thread "main" java.lang.UnsatisfiedLinkError: org.apache.hadoop.io.nativeio.NativeIO$Windows.createDirectoryWithMode0(Ljava/lang/String;I)V

at org.apache.hadoop.io.nativeio.NativeIO$Windows.createDirectoryWithMode0(Native Method)

at org.apache.hadoop.io.nativeio.NativeIO$Windows.createDirectoryWithMode(NativeIO.java:524)

at org.apache.hadoop.fs.RawLocalFileSystem.mkOneDirWithMode(RawLocalFileSystem.java:465)

at org.apache.hadoop.fs.RawLocalFileSystem.mkdirsWithOptionalPermission(RawLocalFileSystem.java:518)

at org.apache.hadoop.fs.RawLocalFileSystem.mkdirs(RawLocalFileSystem.java:496)

at org.apache.hadoop.fs.FilterFileSystem.mkdirs(FilterFileSystem.java:316)

at org.apache.hadoop.mapreduce.JobSubmissionFiles.getStagingDir(JobSubmissionFiles.java:126)

at org.apache.hadoop.mapred.JobClient$2.run(JobClient.java:982)

at org.apache.hadoop.mapred.JobClient$2.run(JobClient.java:976)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1917)

at org.apache.hadoop.mapred.JobClient.submitJobInternal(JobClient.java:976)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:582)

at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:612)

at com.wxj.sort.SortMain.run(SortMain.java:47)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at com.wxj.sort.SortMain.main(SortMain.java:64)

Solution:

Add the following static block to the class that contains the main method to force hadoop.dll to be loaded:

static {

try {

System.load("D:\\soft\\hadoop-2.6.0-cdh5.14.0\\bin\\hadoop.dll");

} catch (UnsatisfiedLinkError e) {

System.err.println("Native code library failed to load.\n" + e);

System.exit(1);

}

}
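
The static block above hard-codes the path to hadoop.dll. A possible variation, sketched here under the assumption that HADOOP_HOME points at the install containing bin\hadoop.dll, resolves the path from the environment and returns a flag instead of exiting. The class name NativeLoader and its methods are hypothetical.

```java
import java.io.File;

class NativeLoader {

    /** Resolves bin/hadoop.dll under the given Hadoop home directory. */
    static File dllUnder(String hadoopHome) {
        return new File(new File(hadoopHome, "bin"), "hadoop.dll");
    }

    /** Loads hadoop.dll if it exists; returns false when it is missing or fails to load. */
    static boolean tryLoad(String hadoopHome) {
        if (hadoopHome == null) {
            return false;
        }
        File dll = dllUnder(hadoopHome);
        if (!dll.isFile()) {
            return false;
        }
        try {
            System.load(dll.getAbsolutePath()); // System.load needs an absolute path
            return true;
        } catch (UnsatisfiedLinkError e) {
            System.err.println("Native code library failed to load.\n" + e);
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumption: HADOOP_HOME is set to the Hadoop install directory.
        boolean loaded = tryLoad(System.getenv("HADOOP_HOME"));
        System.out.println("hadoop.dll loaded: " + loaded);
    }
}
```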

4 Flume startup error:

Failed to start agent because dependencies were not found in classpath. Error follows.

java.lang.NoClassDefFoundError: org/apache/hadoop/io/SequenceFile$CompressionType

at org.apache.flume.sink.hdfs.HDFSEventSink.configure(HDFSEventSink.java:235)

at org.apache.flume.conf.Configurables.configure(Configurables.java:41)

at org.apache.flume.node.AbstractConfigurationProvider.loadSinks(AbstractConfigurationProvider.java:411)

at org.apache.flume.node.AbstractConfigurationProvider.getConfiguration(AbstractConfigurationProvider.java:102)

at org.apache.flume.node.PollingPropertiesFileConfigurationProvider$FileWatcherRunnable.run(PollingPropertiesFileConfigurationProvider.java:141)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.io.SequenceFile$CompressionType

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:335)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 12 more

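Solution:

After some searching, this turned out to be a missing jar. First locate the Hadoop directory:

find / -name hadoop

Mine is /export/servers/hadoop-2.6.0-cdh5.14.0/share/hadoop

Find the jars under Hadoop's share directory and copy hadoop-common*.jar (it is in share/hadoop/common) into Flume's lib directory. After that the error was gone.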
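
When it is not obvious which jar holds a missing class, the jars themselves can be searched. The following is a minimal sketch using only the JDK; the class name JarClassFinder is hypothetical, and the default directory and class name in main are taken from the error above as assumptions about your layout.

```java
import java.io.File;
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import java.util.jar.JarFile;

class JarClassFinder {

    /** Converts a binary class name such as a.b.C$D into its jar entry name a/b/C$D.class. */
    static String entryName(String className) {
        return className.replace('.', '/') + ".class";
    }

    /** Recursively collects every .jar under root that contains the given class. */
    static List<File> find(File root, String className) throws IOException {
        List<File> hits = new ArrayList<>();
        collect(root, entryName(className), hits);
        return hits;
    }

    private static void collect(File file, String entry, List<File> hits) throws IOException {
        File[] children = file.listFiles(); // null when file is not a directory
        if (children != null) {
            for (File child : children) {
                collect(child, entry, hits);
            }
        } else if (file.isFile() && file.getName().endsWith(".jar")) {
            try (JarFile jar = new JarFile(file)) {
                if (jar.getJarEntry(entry) != null) {
                    hits.add(file);
                }
            }
        }
    }

    public static void main(String[] args) throws IOException {
        // Assumed defaults; pass your own directory and class name as arguments.
        String root = args.length > 0 ? args[0] : "/export/servers/hadoop-2.6.0-cdh5.14.0/share/hadoop";
        String cls = args.length > 1 ? args[1] : "org.apache.hadoop.io.SequenceFile$CompressionType";
        for (File jar : find(new File(root), cls)) {
            System.out.println(jar);
        }
    }
}
```

Each path it prints is a candidate jar to copy into Flume's lib directory.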

5 Flume error when collecting data into HDFS

2019-09-16 23:50:05,422 (SinkRunner-PollingRunner-DefaultSinkProcessor) [ERROR - org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:447)] process failed

java.lang.NullPointerException: Expected timestamp in the Flume event headers, but it was null

at com.google.common.base.Preconditions.checkNotNull(Preconditions.java:204)

at org.apache.flume.formatter.output.BucketPath.replaceShorthand(BucketPath.java:251)

at org.apache.flume.formatter.output.BucketPath.escapeString(BucketPath.java:460)

at org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:368)

at org.apache.flume.sink.DefaultSinkProcessor.process(DefaultSinkProcessor.java:67)

at org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:145)

at java.lang.Thread.run(Thread.java:748)

2019-09-16 23:50:05,423 (SinkRunner-PollingRunner-DefaultSinkProcessor) [ERROR - org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:158)] Unable to deliver event. Exception follows.

org.apache.flume.EventDeliveryException: java.lang.NullPointerException: Expected timestamp in the Flume event headers, but it was null

at org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:451)

at org.apache.flume.sink.DefaultSinkProcessor.process(DefaultSinkProcessor.java:67)

at org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:145)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.NullPointerException: Expected timestamp in the Flume event headers, but it was null

at com.google.common.base.Preconditions.checkNotNull(Preconditions.java:204)

at org.apache.flume.formatter.output.BucketPath.replaceShorthand(BucketPath.java:251)

at org.apache.flume.formatter.output.BucketPath.escapeString(BucketPath.java:460)

at org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:368)

... 3 more

2019-09-16 23:50:10,425 (SinkRunner-PollingRunner-DefaultSinkProcessor) [ERROR - org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:447)] process failed

java.lang.NullPointerException: Expected timestamp in the Flume event headers, but it was null

at com.google.common.base.Preconditions.checkNotNull(Preconditions.java:204)

at org.apache.flume.formatter.output.BucketPath.replaceShorthand(BucketPath.java:251)

at org.apache.flume.formatter.output.BucketPath.escapeString(BucketPath.java:460)

at org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:368)

at org.apache.flume.sink.DefaultSinkProcessor.process(DefaultSinkProcessor.java:67)

at org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:145)

at java.lang.Thread.run(Thread.java:748)

2019-09-16 23:50:10,428 (SinkRunner-PollingRunner-DefaultSinkProcessor) [ERROR - org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:158)] Unable to deliver event. Exception follows.

org.apache.flume.EventDeliveryException: java.lang.NullPointerException: Expected timestamp in the Flume event headers, but it was null

at org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:451)

at org.apache.flume.sink.DefaultSinkProcessor.process(DefaultSinkProcessor.java:67)

at org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:145)

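Fix: I ran into this while the jars had still not been copied into Flume's lib directory, and handled it the same way as above by copying the hadoop-common*.jar files over. Note, however, that the message itself means something more specific: the HDFS sink path contains time escapes (such as %y-%m-%d), and the event carries no timestamp header to resolve them. If the error remains after the jars are in place, set hdfs.useLocalTimeStamp = true on the sink, or add a timestamp interceptor to the source.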
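
To make the failure mode concrete, here is a simplified illustration of why the header is required. It is not Flume's code: the class TimestampHeaderDemo is hypothetical, and the directory pattern yy-MM-dd/HHmm is an assumption chosen to resemble a path like 19-09-18/1430. The sink reads the event's timestamp header (epoch milliseconds as a string) to fill in the date parts of the path, and fails when that header is absent.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.HashMap;
import java.util.Map;
import java.util.TimeZone;

class TimestampHeaderDemo {

    /** Resolves a dated directory from the "timestamp" header, failing when the header is absent. */
    static String bucketDir(Map<String, String> headers) {
        String ts = headers.get("timestamp");
        if (ts == null) {
            throw new NullPointerException("Expected timestamp in the Flume event headers, but it was null");
        }
        SimpleDateFormat fmt = new SimpleDateFormat("yy-MM-dd/HHmm");
        fmt.setTimeZone(TimeZone.getTimeZone("UTC")); // fixed zone so the result is reproducible
        return fmt.format(new Date(Long.parseLong(ts)));
    }

    public static void main(String[] args) {
        Map<String, String> headers = new HashMap<>();
        // What a timestamp interceptor effectively does: stamp the event with the current time.
        headers.put("timestamp", Long.toString(System.currentTimeMillis()));
        System.out.println(bucketDir(headers));
    }
}
```

Setting hdfs.useLocalTimeStamp = true has a similar effect from the sink side: the sink uses its own clock instead of looking for the header.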

6 Flume collection errors

2019-09-18 14:37:47,907 (pool-5-thread-1) [INFO - org.apache.flume.client.avro.ReliableSpoolingFileEventReader.rollCurrentFile(ReliableSpoolingFileEventReader.java:433)] Preparing to move file /export/servers/dirfile/mytest.txt to /export/servers/dirfile/mytest.txt.COMPLETED

2019-09-18 14:37:49,927 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.hdfs.HDFSDataStream.configure(HDFSDataStream.java:57)] Serializer = TEXT, UseRawLocalFileSystem = false

2019-09-18 14:37:50,494 (SinkRunner-PollingRunner-DefaultSinkProcessor) [INFO - org.apache.flume.sink.hdfs.BucketWriter.open(BucketWriter.java:251)] Creating hdfs://node01:8020/spooldir/files/19-09-18/1430//events-.1568788669928.tmp

2019-09-18 14:37:50,699 (SinkRunner-PollingRunner-DefaultSinkProcessor) [ERROR - org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:447)] process failed

java.lang.NoClassDefFoundError: org/apache/commons/configuration/Configuration

at org.apache.hadoop.metrics2.lib.DefaultMetricsSystem.(DefaultMetricsSystem.java:38)

at org.apache.hadoop.metrics2.lib.DefaultMetricsSystem.(DefaultMetricsSystem.java:36)

at org.apache.hadoop.security.UserGroupInformation$UgiMetrics.create(UserGroupInformation.java:139)

at org.apache.hadoop.security.UserGroupInformation.(UserGroupInformation.java:259)

at org.apache.hadoop.fs.FileSystem$Cache$Key.(FileSystem.java:2979)

at org.apache.hadoop.fs.FileSystem$Cache$Key.(FileSystem.java:2971)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2834)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:387)

at org.apache.hadoop.fs.Path.getFileSystem(Path.java:296)

at org.apache.flume.sink.hdfs.BucketWriter$1.call(BucketWriter.java:260)

at org.apache.flume.sink.hdfs.BucketWriter$1.call(BucketWriter.java:252)

at org.apache.flume.sink.hdfs.BucketWriter$9$1.run(BucketWriter.java:701)

at org.apache.flume.auth.SimpleAuthenticator.execute(SimpleAuthenticator.java:50)

at org.apache.flume.sink.hdfs.BucketWriter$9.call(BucketWriter.java:698)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.ClassNotFoundException: org.apache.commons.configuration.Configuration

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:335)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 18 more

Exception in thread "SinkRunner-PollingRunner-DefaultSinkProcessor" java.lang.NoClassDefFoundError: org/apache/commons/configuration/Configuration

at org.apache.hadoop.metrics2.lib.DefaultMetricsSystem.(DefaultMetricsSystem.java:38)

at org.apache.hadoop.metrics2.lib.DefaultMetricsSystem.(DefaultMetricsSystem.java:36)

at org.apache.hadoop.security.UserGroupInformation$UgiMetrics.create(UserGroupInformation.java:139)

at org.apache.hadoop.security.UserGroupInformation.(UserGroupInformation.java:259)

at org.apache.hadoop.fs.FileSystem$Cache$Key.(FileSystem.java:2979)

at org.apache.hadoop.fs.FileSystem$Cache$Key.(FileSystem.java:2971)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2834)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:387)

at org.apache.hadoop.fs.Path.getFileSystem(Path.java:296)

at org.apache.flume.sink.hdfs.BucketWriter$1.call(BucketWriter.java:260)

at org.apache.flume.sink.hdfs.BucketWriter$1.call(BucketWriter.java:252)

at org.apache.flume.sink.hdfs.BucketWriter$9$1.run(BucketWriter.java:701)

at org.apache.flume.auth.SimpleAuthenticator.execute(SimpleAuthenticator.java:50)

at org.apache.flume.sink.hdfs.BucketWriter$9.call(BucketWriter.java:698)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.ClassNotFoundException: org.apache.commons.configuration.Configuration

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:335)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 18 more

Fix: the missing dependency is in commons-configuration-1.6.jar, which is located under ${HADOOP_HOME}/share/hadoop/common/lib/. Copy it into Flume's lib directory.

2019-09-18 16:26:32,352 (SinkRunner-PollingRunner-DefaultSinkProcessor) [ERROR - org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:447)] process failed

java.lang.NoClassDefFoundError: org/apache/hadoop/util/PlatformName

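Fix: the hadoop-auth jar is missing (hadoop-auth-2.4.0.jar here; use the version that ships with your Hadoop). Copy it into Flume's lib directory as well.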

9-18 16:32:25,786 (SinkRunner-PollingRunner-DefaultSinkProcessor) [ERROR - org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:447)] process failed

java.lang.NoClassDefFoundError: org/apache/htrace/core/Tracer$Builder

Fix: htrace-core4-4.0.1-incubating.jar is missing.

cp htrace-core4-4.0.1-incubating.jar /export/servers/apache-flume-1.6.0-cdh5.14.0-bin/lib

2019-09-18 16:43:24,083 (SinkRunner-PollingRunner-DefaultSinkProcessor) [WARN - org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:443)] HDFS IO error

java.io.IOException: No FileSystem for scheme: hdfs

Fix: the hadoop-hdfs*.jar files are missing.

cp ${HADOOP_HOME}/share/hadoop/hdfs/hadoop-hdfs*.jar ${FLUME_HOME}/lib/
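
Since these errors surfaced one at a time, it can save restarts to check all the needed classes up front. The sketch below is one way to do that, assuming it is run with Flume's lib directory on the classpath. The class name ClasspathProbe is hypothetical. The first four class names come from the errors in this post; org.apache.hadoop.hdfs.DistributedFileSystem is added as the class behind the hdfs scheme.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class ClasspathProbe {

    /** Returns the subset of classNames that cannot be found on the current classpath. */
    static List<String> missing(List<String> classNames) {
        List<String> result = new ArrayList<>();
        ClassLoader loader = ClasspathProbe.class.getClassLoader();
        for (String name : classNames) {
            try {
                Class.forName(name, false, loader); // false: locate the class without initializing it
            } catch (ClassNotFoundException | LinkageError e) {
                result.add(name);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> needed = Arrays.asList(
                "org.apache.hadoop.io.SequenceFile$CompressionType",
                "org.apache.commons.configuration.Configuration",
                "org.apache.hadoop.util.PlatformName",
                "org.apache.htrace.core.Tracer$Builder",
                "org.apache.hadoop.hdfs.DistributedFileSystem");
        for (String name : missing(needed)) {
            System.out.println("missing: " + name);
        }
    }
}
```

An empty output means every listed class was found; any name it prints points at a jar that still needs to be copied.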