k8s PaaS deployment

| Server | Roles |
|---|---|
| 192.168.80.153 | master1, etcd1, docker, flannel, harbor |
| 192.168.80.145 | master2, etcd2, docker, flannel |
| 192.168.80.144 | master3, etcd3, docker, flannel |
| 192.168.80.154 | node1, docker, flannel, nginx, keepalived |
| 192.168.80.151 | node2, docker, flannel, nginx, keepalived |

1. Pre-installation preparation

1.1 Disable SELinux on CentOS 7

sudo vim /etc/selinux/config

~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

Change SELINUX=enforcing to SELINUX=disabled

Stop the firewall

# systemctl stop firewalld.service

A reboot is required before the SELinux change takes effect

1.2 Raise the maximum number of open file handles on Linux

sudo vim /etc/security/limits.conf

Add:

* soft nofile 65535

* hard nofile 65535

~]# sed -i -e '61a\* soft nofile 65535' -i -e'61a\* hard nofile 65535' /etc/security/limits.conf && cat /etc/security/limits.conf

After saving the change, log out the current user, log back in, and run ulimit -a to confirm the new limits.
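The sed command above inserts after a fixed line number (61), which only fits one particular limits.conf. A minimal sketch of an alternative that appends the two lines only when they are missing; the function name `ensure_nofile_limits` is hypothetical and the file path is passed in so it can be tried on a copy first:

```shell
# Append the two nofile limits to a limits file only if they are not already present.
# The path is a parameter so the function can be tested on a copy of limits.conf.
ensure_nofile_limits() {
    limits_file="$1"
    for kind in soft hard; do
        line="* ${kind} nofile 65535"
        # -F fixed string, -x whole-line match, -q quiet
        grep -Fxq -- "$line" "$limits_file" || printf '%s\n' "$line" >> "$limits_file"
    done
}
```

Running it twice on the same file leaves exactly one soft and one hard entry.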

1.3 Disable swap on Linux

To check:

fdisk -l

1. First turn off the swap partition

/sbin/swapoff /dev/sdb2

2. Remove the automatic mount entry

vi /etc/fstab

Delete this line:

/dev/sdb2 swap swap defaults 0 0

sudo swapoff -a
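Instead of deleting the fstab line by hand, the entry can be commented out. The following is a sketch only, assuming GNU sed (as shipped with CentOS 7); `disable_swap_entries` is a hypothetical helper name, and it is safest to run it against a copy of /etc/fstab first:

```shell
# Comment out every active swap entry in an fstab-style file (edits the file in place).
# A line is treated as a swap entry when its third field is "swap".
disable_swap_entries() {
    fstab_file="$1"
    sed -i -E 's/^([^#][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' "$fstab_file"
}
```

Commenting rather than deleting keeps the original entry available if swap ever needs to be restored.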

1.4 Upgrade the kernel on CentOS 7

sudo rpm --import RPM-GPG-KEY-elrepo.org

sudo rpm -ivh kernel-lt-4.4.103-1.el7.elrepo.x86_64.rpm

cat /etc/default/grub && echo '##############################' && sed -i 's/GRUB_DEFAULT=saved/GRUB_DEFAULT=0/g' /etc/default/grub && cat /etc/default/grub

sudo vim /etc/default/grub

Modify this line:

GRUB_DEFAULT=0 // must be changed

sudo grub2-mkconfig -o /boot/grub2/grub.cfg

sudo reboot

uname -r

2 Deploy the etcd cluster

2.1 Install with yum first

#yum -y install etcd3

2.2 修改配置文件

# mv /etc/etcd/etcd.conf /etc/etcd/etcd.conf-bak

# vi /etc/etcd/etcd.conf

ETCD_NAME=etcd1

ETCD_DATA_DIR="/var/lib/etcd/etcd1.etcd"

ETCD_LISTEN_PEER_URLS="http://192.168.56.11:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.56.11:2379,http://127.0.0.1:2379"

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.56.11:2380"

ETCD_INITIAL_CLUSTER="etcd1=http://192.168.56.11:2380,etcd2=http://192.168.56.12:2380,etcd3=http://192.168.56.13:2380"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.56.11:2379"

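2.3 The installation is the same on all three etcd hosts; remember to change the member name and IPs in the configuration file on each one.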
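Since only the member name and IP differ between the three hosts, the file can be generated rather than edited by hand. A rough sketch, assuming the same 192.168.56.x example addresses used in the configuration above; `render_etcd_conf` and the `CLUSTER` variable are hypothetical names introduced for illustration:

```shell
# ETCD_INITIAL_CLUSTER string shared by all members (example addresses from the config above).
CLUSTER="etcd1=http://192.168.56.11:2380,etcd2=http://192.168.56.12:2380,etcd3=http://192.168.56.13:2380"

# Print an etcd.conf for one member. Arguments: member name, member IP.
render_etcd_conf() {
    name="$1"; ip="$2"
    printf 'ETCD_NAME=%s\n' "$name"
    printf 'ETCD_DATA_DIR="/var/lib/etcd/%s.etcd"\n' "$name"
    printf 'ETCD_LISTEN_PEER_URLS="http://%s:2380"\n' "$ip"
    printf 'ETCD_LISTEN_CLIENT_URLS="http://%s:2379,http://127.0.0.1:2379"\n' "$ip"
    printf 'ETCD_INITIAL_ADVERTISE_PEER_URLS="http://%s:2380"\n' "$ip"
    printf 'ETCD_INITIAL_CLUSTER="%s"\n' "$CLUSTER"
    printf 'ETCD_INITIAL_CLUSTER_STATE="new"\n'
    printf 'ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"\n'
    printf 'ETCD_ADVERTISE_CLIENT_URLS="http://%s:2379"\n' "$ip"
}
```

On the second host this would be used as `render_etcd_conf etcd2 192.168.56.12 > /etc/etcd/etcd.conf`.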

2.4 Start etcd

Start the etcd service on every master node:

# systemctl daemon-reload

# systemctl enable etcd

# systemctl restart etcd

# systemctl status etcd

Check the etcd cluster health:

# etcdctl cluster-health

# "cluster is healthy" in the output means success

List the etcd cluster members:

# etcdctl member list
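For scripted checks, the health output can be tested for the success line mentioned above. A small sketch; `etcd_cluster_healthy` is a hypothetical helper that reads the command output on stdin, so it does not itself need a running etcd:

```shell
# Read `etcdctl cluster-health` output on stdin and succeed only if
# the summary line "cluster is healthy" is present.
etcd_cluster_healthy() {
    grep -Fxq 'cluster is healthy'
}

# Example (commented out; needs a running cluster):
# etcdctl cluster-health | etcd_cluster_healthy && echo "etcd OK"
```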

3 Install flannel

3.1 Deploy flannel

tar -zxf flannel-v0.9.1-linux-amd64.tar.gz

mv flanneld /usr/local/bin/

vi /etc/systemd/system/flanneld.service

[Unit]

Description=flanneld

Before=docker.service

After=network.target

[Service]

User=root

Type=notify

ExecStart=/usr/local/bin/flanneld \

--etcd-endpoints=http://etcd1:2379,http://etcd2:2379,http://etcd3:2379 \

--etcd-prefix=/flannel/network

ExecStop=/bin/pkill flanneld

Restart=on-failure

[Install]

WantedBy=multi-user.target
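flanneld reads its network definition from the key `config` under the `--etcd-prefix` given above, so that key has to exist in etcd before the service starts. The steps here do not show it being written; the sketch below is an assumption-laden example that uses 10.233.0.0/16 (the `--cluster-cidr` configured for kube-controller-manager later in this document) and a `vxlan` backend, which is a guess rather than something the source specifies. `flannel_network_config` is a hypothetical helper name.

```shell
# Build the JSON document flanneld expects under <etcd-prefix>/config.
# Arguments: pod network CIDR, backend type (vxlan is an assumption).
flannel_network_config() {
    printf '{"Network":"%s","Backend":{"Type":"%s"}}\n' "$1" "$2"
}

# Example (commented out; needs a reachable etcd v2 API):
# etcdctl --endpoints=http://etcd1:2379 set /flannel/network/config \
#     "$(flannel_network_config 10.233.0.0/16 vxlan)"
```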

3.2 Verify that flanneld was deployed successfully

# systemctl daemon-reload

# systemctl start flanneld

# systemctl enable flanneld

# systemctl status flanneld

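4 Install docker

4.1 Install docker-ce

# yum install docker-ce

# mkdir /etc/docker

The following configuration points docker at the Harbor registry so that images can be pulled automatically later. The IP in the file is the IP of the Harbor host; remember to change it!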

# vi /etc/docker/daemon.json

{

"log-driver": "journald",

"data-root": "/apps/container_storage",

"insecure-registries": [

"hub.paas",

"10.145.131.252",

"hub.paas:80",

"10.145.131.252:80"

]

}

Variant using the overlay2 storage driver:

{

"storage-driver": "overlay2",

"storage-opts": ["overlay2.override_kernel_check=true"],

"log-driver": "journald",

"data-root": "/apps/container_storage",

"insecure-registries": [

"hub.paas",

"10.145.131.252",

"hub.paas:80",

"10.145.131.252:80"

]

}

4.2 Handling docker warnings after deployment

Warning about the filesystem format parameter

WARNING: overlay2: the backing xfs filesystem is formatted without d_type support, which leads to incorrect behavior.

Reformat the filesystem with ftype=1 to enable d_type support.

Running without d_type support will not be supported in future releases.

The filesystem must be reformatted (this erases the data on the volume):

mkfs.xfs -n ftype=1 /dev/mapper/vg02-lv_data

4.3 Connect docker to flannel

# vi /usr/lib/systemd/system/docker.service

(Note: only two places in docker.service change: one line is added and one line is extended)

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

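# Added line: load the variables written by flanneld
EnvironmentFile=/run/flannel/subnet.env

# Extended line: two flannel variables appended to the dockerd command line
ExecStart=/usr/bin/dockerd --bip=${FLANNEL_SUBNET} --mtu=${FLANNEL_MTU}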

...
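To see what docker will receive from flannel, the two variables can be read from the environment file and printed as dockerd options. A minimal sketch; `docker_opts_from_flannel` is a hypothetical helper, and the file path is a parameter so it can be pointed at /run/flannel/subnet.env or at a test copy:

```shell
# Read a flannel subnet.env file and print the dockerd options derived from it.
# Pass an absolute path; the file is sourced in a subshell so nothing leaks to the caller.
docker_opts_from_flannel() {
    env_file="$1"
    (
        . "$env_file"
        printf '%s\n' "--bip=${FLANNEL_SUBNET} --mtu=${FLANNEL_MTU}"
    )
}
```

If the printed subnet is empty, flanneld has not written the file yet and docker should not be started.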

4.4 Start the service

# systemctl daemon-reload

# systemctl start docker

# systemctl enable docker

# systemctl status docker

5 Install tengine

5.1 Install dependencies

# yum -y install gcc gcc-c++ openssl-devel pcre-devel zlib-devel bzip2

# cd /usr/local/src

# Download and install jemalloc

wget https://github.com/jemalloc/jemalloc/releases/download/4.4.0/jemalloc-4.4.0.tar.bz2

# tar jxvf jemalloc-4.4.0.tar.bz2

# cd jemalloc-4.4.0

# ./configure && make && make install

# echo '/usr/local/lib' > /etc/ld.so.conf.d/local.conf

# ldconfig

# Download and unpack OpenSSL

wget https://www.openssl.org/source/openssl-1.0.2j.tar.gz

tar zxvf openssl-1.0.2j.tar.gz

# Download and unpack pcre

wget ftp://ftp.csx.cam.ac.uk/pub/software/programming/pcre/pcre-8.40.tar.gz

tar zxvf pcre-8.40.tar.gz

# Download and unpack zlib

wget https://ncu.dl.sourceforge.net/project/libpng/zlib/1.2.11/zlib-1.2.11.tar.gz

tar zxvf zlib-1.2.11.tar.gz

# Create the www user and group, and the directory used by the www virtual host

# groupadd www

# useradd -g www www -s /sbin/nologin

# mkdir -p /data/www

# chmod +w /data/www

# chown -R www:www /data/www

5.2 Build and install tengine

# cd /usr/local/src

# wget http://tengine.taobao.org/download/tengine-2.2.0.tar.gz

# tar -zxvf tengine-2.2.0.tar.gz

# cd tengine-2.2.0

# ./configure --prefix=/usr/local/nginx \

--user=tengine --group=tengine \

--conf-path=/usr/local/nginx/conf/nginx.conf \

--error-log-path=/var/log/nginx/error.log \

--http-log-path=/var/log/nginx/access.log \

--pid-path=/var/run/nginx.pid \

--lock-path=/var/run/nginx.lock \

--with-http_ssl_module \

--with-http_flv_module \

--with-http_concat_module \

--with-http_realip_module \

--with-http_addition_module \

--with-http_gzip_static_module \

--with-http_random_index_module \

--with-http_stub_status_module \

--with-http_sub_module \

--with-http_dav_module \

--http-client-body-temp-path=/var/tmp/nginx/client/ \

--http-proxy-temp-path=/var/tmp/nginx/proxy/ \

--http-fastcgi-temp-path=/var/tmp/nginx/fcgi/ \

--http-uwsgi-temp-path=/var/tmp/nginx/uwsgi \

--http-scgi-temp-path=/var/tmp/nginx/scgi \

--with-jemalloc --with-openssl=/usr/local/src/openssl-1.0.2j \

--with-zlib=/usr/local/src/zlib-1.2.11 \

--with-pcre=/usr/local/src/pcre-8.40

Build output omitted...

# make && make install

5.3 Create the /etc/init.d/nginx file

vim /etc/init.d/nginx

#!/bin/bash

#

# chkconfig: - 85 15

# description: nginx is a World Wide Web server. It is used to serve

# Source function library.

. /etc/rc.d/init.d/functions

# Source networking configuration.

. /etc/sysconfig/network

# Check that networking is up.

[ "$NETWORKING" = "no" ] && exit 0

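nginx="/usr/local/nginx/sbin/nginx" # matches --prefix above; change to your own install directory

prog=$(basename $nginx)

NGINX_CONF_FILE="/usr/local/nginx/conf/nginx.conf" # matches --conf-path above; change to your own install directory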

#[ -f /etc/sysconfig/nginx ] && . /etc/sysconfig/nginx

lockfile=/var/lock/subsys/nginx

#make_dirs() {

# # make required directories

# user=`nginx -V 2>&1 | grep "configure arguments:" | sed 's/[^*]*--user=\([^ ]*\).*/\1/g' -`

# options=`$nginx -V 2>&1 | grep 'configure arguments:'`

# for opt in $options; do

# if [ `echo $opt | grep '.*-temp-path'` ]; then

# value=`echo $opt | cut -d "=" -f 2`

# if [ ! -d "$value" ]; then

# # echo "creating" $value

# mkdir -p $value && chown -R $user $value

# fi

# fi

# done

#}

start() {

[ -x $nginx ] || exit 5

[ -f $NGINX_CONF_FILE ] || exit 6

# make_dirs

echo -n $"Starting $prog: "

daemon $nginx -c $NGINX_CONF_FILE

retval=$?

echo

[ $retval -eq 0 ] && touch $lockfile

return $retval

}

stop() {

echo -n $"Stopping $prog: "

killproc $prog -QUIT

retval=$?

echo

[ $retval -eq 0 ] && rm -f $lockfile

return $retval

}

restart() {

configtest || return $?

stop

sleep 1

start

}

reload() {

configtest || return $?

echo -n $"Reloading $prog: "

# -HUP makes nginx reload its configuration gracefully

killproc $nginx -HUP

RETVAL=$?

echo

}

force_reload() {

restart

}

configtest() {

$nginx -t -c $NGINX_CONF_FILE

}

rh_status() {

status $prog

}

rh_status_q() {

rh_status >/dev/null 2>&1

}

case "$1" in

start)

rh_status_q && exit 0

$1

;;

stop)

rh_status_q || exit 0

$1

;;

restart|configtest)

$1

;;

reload)

rh_status_q || exit 7

$1

;;

force-reload)

force_reload

;;

status)

rh_status

;;

condrestart|try-restart)

rh_status_q || exit 0

;;

*)

echo $"Usage: $0 {start|stop|status|restart|condrestart|try-restart|reload|force-reload|configtest}"

exit 2

esac

5.4 Make the nginx script executable

# chmod 755 /etc/init.d/nginx

5.5 Enable start on boot

# systemctl enable nginx

5.6 Start nginx

# service nginx start

If you see: Starting nginx: nginx: [emerg] mkdir() "/var/tmp/nginx/client/" failed (2: No such file or directory) [FAILED]

create the directory:

# mkdir -p /var/tmp/nginx/client

Starting nginx: [ OK ] means it started normally

# chkconfig --add nginx

# chkconfig --level 35 nginx on

# Check that it started (nginx listens on port 80 by default)

netstat -anpt | grep 80

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 1525/nginx: master

5.7 Open the port in the firewall

firewall-cmd --permanent --add-service=http

5.8 Configuration file contents

user tengine;

worker_processes 8;

worker_rlimit_nofile 65535;

events {

use epoll;

worker_connections 65535;

}

http {

send_timeout 300;

proxy_connect_timeout 300;

proxy_read_timeout 300;

proxy_next_upstream error timeout invalid_header http_500 http_503 http_404 http_502;

upstream k8s_insecure {

server 192.168.80.153:8080;

server 192.168.80.145:8080;

server 192.168.80.144:8080;

}

server {

listen 8088;

listen 6443;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection "Upgrade";

proxy_buffering off;

location / {

proxy_pass http://k8s_insecure;

}

# location / {

# # root /usr/local/nginx/html;

# # index index.html;

# # }

}

}

6 Install keepalived (master and backup)

# yum install openssl-devel

# mkdir /data/ka

# ./configure --prefix=/data/ka

# make

# make install

# cd /data/ka

# cp -r etc/keepalived/ /etc/

# cp sbin/keepalived /sbin/

6.1 Edit the keepalived configuration file

# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

# router_id identifies this keepalived instance (the backup uses the commented value below)

router_id paas_inside_master

# router_id paas_inside_backup

}

# Script that checks whether nginx is running

vrrp_script check_nginx {

script "/etc/keepalived/scripts/nginx_check.sh"

interval 3

weight 5

}

vrrp_instance VI_1 {

state MASTER

# state BACKUP

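# Change to your NIC name

interface eno16777736

# virtual_router_id must be the same on master and backup, and unique on the network

virtual_router_id 51

# mcast_src_ip is this host's own IP

mcast_src_ip 192.168.80.154

# The master's priority must be higher than the backup's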

priority 100

# priority 99

advert_int 1

authentication {

auth_type PASS

auth_pass ideal123

}

virtual_ipaddress {

192.168.80.66

}

# Use the nginx check script

track_script {

check_nginx

}

}

6.2 Create the keepalived service unit file

# vi /etc/systemd/system/keepalived.service

[Unit]

Description=Keepalived

[Service]

PIDFile=/run/keepalived.pid

ExecStart=/sbin/keepalived -D

ExecReload=/bin/kill -s HUP $MAINPID

ExecStop=/bin/kill -s QUIT $MAINPID

[Install]

WantedBy=multi-user.target

6.3 Start the service

systemctl daemon-reload

systemctl enable keepalived

systemctl start keepalived

systemctl status keepalived

6.4 Deploy the backup keepalived

# yum install openssl-devel

# mkdir /data/ka

# ./configure --prefix=/data/ka

# make

# make install

# cd /data/ka

# cp -r etc/keepalived/ /etc/

# cp sbin/keepalived /sbin/

6.5 Edit the configuration file

# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

[email protected]

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

# router_id paas_inside_master

router_id paas_inside_backup

}

vrrp_instance VI_1 {

# state MASTER

state BACKUP

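# Change the interface to your own NIC; use `ip a` to list them

interface eno16777736

virtual_router_id 51

# mcast_src_ip is this host's own IP

mcast_src_ip 192.168.80.151

# virtual_router_id is shared by one master/backup pair but must be unique on the network; use different values for different pairs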

# priority 100

priority 99

advert_int 1

authentication {

auth_type PASS

auth_pass ideal123

}

virtual_ipaddress {

192.168.80.66

}

}

6.5 Create the service unit file

# vi /etc/systemd/system/keepalived.service

[Unit]

Description=Keepalived

[Service]

PIDFile=/run/keepalived.pid

ExecStart=/sbin/keepalived -D

ExecReload=/bin/kill -s HUP $MAINPID

ExecStop=/bin/kill -s QUIT $MAINPID

[Install]

WantedBy=multi-user.target

systemctl daemon-reload

systemctl enable keepalived

systemctl start keepalived

systemctl status keepalived

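6.6 Reference scripts

nginx_check.sh: this script is referenced by the keepalived configuration file. When nginx is not running or has exited abnormally, it stops the keepalived process so that the VIP moves to the other host.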

mkdir /etc/keepalived/scripts/

#!/bin/sh

fuser /usr/local/nginx/sbin/nginx

if [[ $? -eq 0 ]];then

exit 0

else

systemctl stop keepalived

pkill keepalived

exit 1

fi

keepalived_check.sh: this script is run from cron. It checks the state of nginx and keepalived and tries to recover them.

#!/bin/sh

# exit 1: nginx is not healthy

# exit 2: keepalived is not healthy

check_nginx(){

fuser /usr/local/nginx/sbin/nginx

}

start_nginx(){

systemctl start nginx

}

check_keepalived(){

ip addr |grep 192.168.80.154

}

start_keepalived(){

systemctl start keepalived

}

check_keepalived

if [[ $? -eq 0 ]];then

check_nginx

if [[ $? -eq 0 ]];then

exit 0

else

start_nginx

check_nginx && exit 0 || exit 1

fi

else

check_nginx

if [[ $? -eq 0 ]];then

start_keepalived

check_keepalived && exit 0 || exit 2

else

start_nginx

if [[ $? -eq 0 ]];then

start_keepalived

check_keepalived && exit 0 || exit 2

else

exit 1

fi

fi

fi

6.7 Add the cron job

crontab -u root -e

* * * * * /usr/bin/sh /etc/keepalived/scripts/keepalived_check.sh

6.8 Start the keepalived service

systemctl daemon-reload

systemctl enable keepalived

systemctl start keepalived

systemctl status keepalived

7 Install harbor

7.1 Download docker-compose:

curl -L https://github.com/docker/compose/releases/download/1.9.0/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose

7.2 Download harbor:

wget https://github.com/vmware/harbor/releases/download/0.5.0/harbor-offline-installer-0.5.0.tgz

# This is the offline package, which makes the later deployment and installation faster; it is about 300 MB in total.

7.3 Unpack and configure harbor:

tar zxvf harbor-offline-installer-0.5.0.tgz

cd harbor/

#vim harbor.cfg

hostname = 192.168.80.153

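# This is only a simple test, so only this line is edited and everything else keeps its default; adjust other settings to suit your environment.

7.4 Configure docker:

# docker connects over https by default while harbor serves http by default, so docker must be told to treat the harbor host as an insecure registry.

# harbor can also be configured for https; that is not covered here.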

#vim /usr/lib/systemd/system/docker.service

ExecStart=/usr/bin/dockerd --insecure-registry=192.168.80.153

# Only the --insecure-registry option is added; its value is the harbor host set in harbor.cfg.

# Restart docker:

#systemctl daemon-reload

#systemctl restart docker.service

7.5 Run the install script:

# It loads several images and checks the environment:

# ./install.sh

Note: docker version: 1.12.5

Note: docker-compose version: 1.9.0

[Step 0]: checking installation environment ...

....

[Step 1]: loading Harbor images ...

....

[Step 2]: preparing environment ...

....

[Step 3]: checking existing instance of Harbor ...

....

[Step 4]: starting Harbor ...

....

✔ ----Harbor has been installed and started successfully.----

Now you should be able to visit the admin portal at http://192.168.80.153.

For more details, please visit https://github.com/vmware/harbor .

7.6 After installation, the following 6 containers are running:

# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

e6f0baa7ddb7 nginx:1.11.5 "nginx -g 'daemon off" 6 minutes ago Up 6 minutes 0.0.0.0:80->80/tcp, 0.0.0.0:443->443/tcp nginx

1b383261d0c7 vmware/harbor-jobservice:0.5.0 "/harbor/harbor_jobse" 6 minutes ago Up 6 minutes harbor-jobservice

86f1d905ec78 vmware/harbor-db:0.5.0 "docker-entrypoint.sh" 6 minutes ago Up 6 minutes 3306/tcp harbor-db

9cbab69f20b6 library/registry:2.5.0 "/entrypoint.sh serve" 6 minutes ago Up 6 minutes 5000/tcp registry

9c5693a53f4e vmware/harbor-ui:0.5.0 "/harbor/harbor_ui" 6 minutes ago Up 6 minutes harbor-ui

8bef4c4c47f0 vmware/harbor-log:0.5.0 "/bin/sh -c 'crond &&" 6 minutes ago Up 6 minutes 0.0.0.0:1514->514/tcp harbor-log

Stopping and starting the Harbor containers:

Go to the Harbor directory and run:

docker-compose stop/start

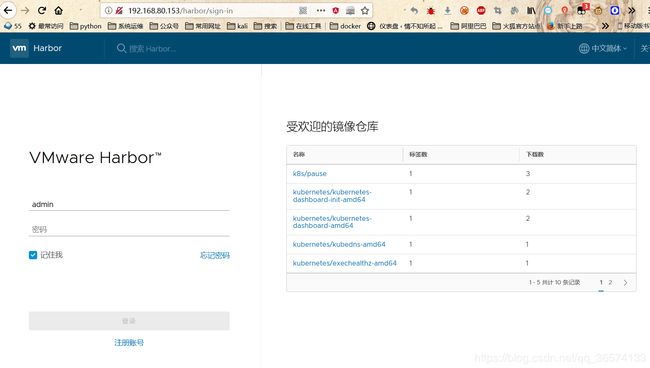

Installation is now complete; open a browser and log in:

The default user/password is: admin/Harbor12345

Supplement: another way to install harbor

Install docker-compose

yum -y install epel-release

yum install python-pip

pip install --upgrade pip

yum install -y docker-compose

pip install docker-compose

pip install --upgrade backports.ssl_match_hostname

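Unpack harbor.tar.gz

(data can be exported from the /data directory)

Edit the harbor configuration file and set hostname to the IP of the VM that hosts harbor

vim harbor.cfg

Run the install.sh script to install harbor (the installation needs network access)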

./install.sh --with-clair

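For a production deployment, MySQL should be an external shared instance and several harbor instances should be deployed

This requires:

1) Edit the file

./common/templates/adminserver/env

and change the following properties accordingly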

DATABASE_TYPE=mysql

MYSQL_HOST=mysql

MYSQL_PORT=3306

MYSQL_USR=root

MYSQL_PWD=$db_password

MYSQL_DATABASE=registry

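2) After starting several harbor instances, configure replication between them

Supplement 1: if --with-clair was used at install time, later start and stop commands must pass both yml files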

docker-compose -f docker-compose.yml -f docker-compose.clair.yml down

docker-compose -f docker-compose.yml -f docker-compose.clair.yml up -d

Supplement 2: after changing the container mount directory, adminserver keeps restarting

Cause:

vi harbor.cfg

…

#The path of secretkey storage

secretkey_path = /data

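Stop harbor

rm -rf DATA_MOUNT_DIR/secretkey

mv /data/secretkey DATA_MOUNT_DIR

(DATA_MOUNT_DIR stands for the directory mounted as data)

Start harbor

Supplement 3: database password when importing the VM image data

In the VM data archive data.tar.gz, the default database password is ideal123 and the admin password is Ideal123

When installing harbor:

vi harbor.cfg

db_password = ideal123

If this was not set at install time, log in to the database container and change it by hand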

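docker exec -it CONTAINER_ID bash

mysql -S /var/run/mysqld/mysqld.sock -uroot -p'ideal123'

grant all on *.* to 'root'@'%' identified by 'root123';

Supplement 4: when offline, the clair database cannot update and spawns a large number of git-remote-http processes

After importing the base data data.tar.gz, edit the configuration file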

vi harbor/common/config/clair/config.yaml

updater:

interval: 12000h

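8 Create and distribute certificates

Install the cfssl certificate tool

Unpack cfssl.tar.gz and put the cfssl binaries under /usr/local/bin

Create the CA certificate configuration

mkdir /etc/kubernetes/ssl

cd /etc/kubernetes/ssl

Create config.json

vim config.json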

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"paas": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

Create the csr.json file

vim csr.json

{

"CN": "paas",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "paas",

"OU": "System"

}

]

}

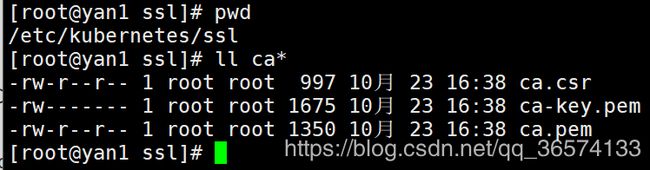

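Generate the CA certificate and private key

cd /etc/kubernetes/ssl

cfssl gencert -initca csr.json | cfssljson -bare ca

Check the generated files:

Distribute the certificates:

Create the certificate directories (do this on every host!)

mkdir -p /etc/kubernetes/ssl

mkdir -p /etc/docker/certs.d/hub.paas

From the certificate host, copy the certificates to every node (NODE_IP stands for each node in turn):

scp /etc/kubernetes/ssl/* root@NODE_IP:/etc/kubernetes/ssl/

scp /etc/kubernetes/ssl/* root@NODE_IP:/etc/docker/certs.d/hub.paas/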
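Copying to every node is a loop over the hosts in the server table. The sketch below only prints the scp commands (a dry run) so they can be reviewed first; the `NODES` list and the function name `print_cert_copy_commands` are assumptions made for illustration:

```shell
# Space-separated node list taken from the server table at the top of this document.
NODES="192.168.80.153 192.168.80.145 192.168.80.144 192.168.80.154 192.168.80.151"

# Print one scp command per node and destination directory; nothing is copied.
print_cert_copy_commands() {
    for node in $NODES; do
        for dest in /etc/kubernetes/ssl/ /etc/docker/certs.d/hub.paas/; do
            printf 'scp /etc/kubernetes/ssl/* root@%s:%s\n' "$node" "$dest"
        done
    done
}
```

Once the printed commands look right, they can be piped to `sh` to run them.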

9 Install the master side

cp kubernetes/client/bin/* /usr/local/bin/

chmod a+x /usr/local/bin/kube*

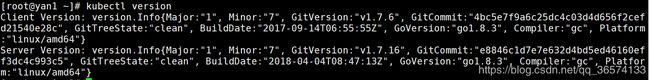

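Verify the installation

kubectl version

Create the admin certificate (Note: this step only needs to run on any one master node!)

kubectl talks to the secure port of kube-apiserver, so it needs a TLS certificate and key for secure communication.

cd /etc/kubernetes/ssl

Create the admin certificate configuration file

vi admin-csr.json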

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Shanghai",

"L": "Shanghai",

"O": "system:masters",

"OU": "System"

}

]

}

Generate the admin certificate and private key

cd /etc/kubernetes/ssl

# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \

-ca-key=/etc/kubernetes/ssl/ca-key.pem \

-config=/etc/kubernetes/ssl/config.json \

-profile=paas admin-csr.json | cfssljson -bare admin

Check the generated files

ls admin*

admin.csr admin-csr.json admin-key.pem admin.pem

Distribute the certificate

scp admin*.pem root@NODE_IP:/etc/kubernetes/ssl/

Create the apiserver certificate

cd /etc/kubernetes/ssl

Create the apiserver certificate configuration file

vi apiserver-csr.json

{

"CN": "system:kube-apiserver",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "system:masters",

"OU": "System"

}

]

}

Generate the apiserver certificate and private key

# cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \

-ca-key=/etc/kubernetes/ssl/ca-key.pem \

-config=/etc/kubernetes/ssl/config.json \

-profile=paas apiserver-csr.json | cfssljson -bare apiserver

Check the generated files

ls apiserver*

apiserver.csr apiserver-csr.json apiserver-key.pem apiserver.pem

Distribute the certificate

scp -r apiserver* root@NODE_IP:/etc/kubernetes/ssl

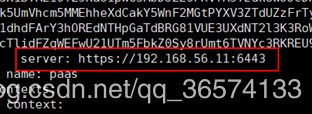

Configure the kubectl kubeconfig file

# Configure the kubernetes cluster (--server takes the master address)

kubectl config set-cluster paas \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=http://192.168.80.66:8088

Configure client authentication

kubectl config set-credentials admin \

--client-certificate=/etc/kubernetes/ssl/admin.pem \

--embed-certs=true \

--client-key=/etc/kubernetes/ssl/admin-key.pem

kubectl config set-context paas \

--cluster=paas \

--user=admin

kubectl config use-context paas

分发 kubectl config 文件

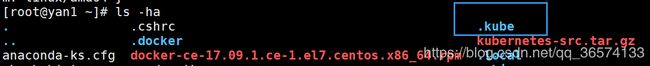

将上面配置的 kubeconfig 文件分发到其他机器,kubeconfig文件位于/root路径下,因为是隐藏文件,所以需要使用 ls -a指令来查看,如下图

其他master主机创建目录

mkdir /root/.kube

scp /root/.kube/config root@master2:/root/.kube/

scp /root/.kube/config root@master3:/root/.kube/

部署 kubernetes Master 节点

Master 需要部署 kube-apiserver , kube-scheduler , kube-controller-manager 这三个组件。

安装 组件

cd kubernetes

cp -r server/bin/{kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,kube-proxy,kubelet} /usr/local/bin/

Create the kubernetes certificate

cd /etc/kubernetes/ssl

vi kubernetes-csr.json

{

"CN": "paas",

"hosts": [

"127.0.0.1",

"192.168.56.11",

"10.254.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "k8s",

"OU": "System"

}

]

}

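## In the hosts field, the three IPs are: 127.0.0.1 for the local host, 192.168.56.11 for the Master's IP, and 10.254.0.1 for the kubernetes service IP, which is normally the first IP of the service network (here 10.254.0.1). After startup it can be seen with kubectl get svc.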
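The "first IP of the service network" rule can be written down as a one-line calculation. A sketch under stated assumptions: the CIDR is written with its network address (as in 10.254.0.0/16) and the last octet is below 255; `first_service_ip` is a hypothetical helper name.

```shell
# Given the service range in CIDR form (e.g. 10.254.0.0/16), print the first
# usable address, which is the one given to the "kubernetes" service.
first_service_ip() {
    net="${1%/*}"        # strip the /prefix length
    last="${net##*.}"    # last octet of the network address
    printf '%s.%s\n' "${net%.*}" "$((last + 1))"
}
```

For the range used in this document, `first_service_ip 10.254.0.0/16` gives the 10.254.0.1 entry listed in the hosts field.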

Generate the kubernetes certificate and private key

cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \

-ca-key=/etc/kubernetes/ssl/ca-key.pem \

-config=/etc/kubernetes/ssl/config.json \

-profile=paas kubernetes-csr.json |cfssljson -bare paas

Check the generated files

ls -lt paas*

-rw-r--r-- 1 root root 1245 Jul 4 11:25 paas.csr

-rw------- 1 root root 1679 Jul 4 11:25 paas-key.pem

-rw-r--r-- 1 root root 1619 Jul 4 11:25 paas.pem

ls -lt kubernetes*

-rw-r--r-- 1 root root 436 Jul 4 11:23 kubernetes-csr.json

Copy to the target directory

scp -r paas* root@NODE_IP:/etc/kubernetes/ssl/

scp -r kubernetes* root@NODE_IP:/etc/kubernetes/ssl/

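Configure kube-apiserver

On first start, kubelet sends a TLS bootstrapping request to kube-apiserver. kube-apiserver checks whether the token in the request matches the token it was configured with; if it does, a certificate and key are generated for kubelet automatically.

Generate the token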

[root@yan1 ssl]# head -c 16 /dev/urandom | od -An -t x | tr -d ' '

fad3822fc99d6340fa9532c2ebc4c36b

# Create the token.csv file (the first field is the token; use the one you generated)

cd /opt/ssl

vi token.csv

b89980a5a8088a771454080a91c879fb,kubelet-bootstrap,10001,"system:kubelet-bootstrap"

Copy it to every node:

scp token.csv root@NODE_IP:/etc/kubernetes/
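The token shown in the generate step and the one in token.csv differ in this document, so it helps to produce both from one value. A small sketch; `new_bootstrap_token` and `token_csv_line` are hypothetical helper names, and the user, uid and group fields are the ones from the token.csv line above:

```shell
# Generate a random token of 32 hexadecimal characters.
new_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

# Print the token.csv line for a given token.
token_csv_line() {
    printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1"
}

# Example (commented out; writes the file used by --token-auth-file):
# TOKEN=$(new_bootstrap_token); token_csv_line "$TOKEN" > token.csv
```

The same `TOKEN` value is what the kubelet bootstrap kubeconfig needs in its `--token` option.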

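With RBAC enabled

(Note: the trailing # remarks in the unit files in this section are annotations for the reader. Leave them out of the real file, because systemd does not continue a line when anything follows the backslash.)

vi /etc/systemd/system/kube-apiserver.service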

[Unit]

Description=kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

User=root

ExecStart=/usr/local/bin/kube-apiserver \

--admission-control=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--advertise-address=192.168.80.153 \ # change to this host's IP

--allow-privileged=true \

--apiserver-count=3 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/var/lib/audit.log \

--authorization-mode=RBAC \

--bind-address=192.168.80.153 \ # change to this host's IP

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--enable-swagger-ui=true \

--etcd-cafile=/etc/kubernetes/ssl/ca.pem \

--etcd-certfile=/etc/kubernetes/ssl/paas.pem \

--etcd-keyfile=/etc/kubernetes/ssl/paas-key.pem \

--etcd-servers=http://192.168.80.153:2379,http://192.168.80.145:2379,http://192.168.80.144:2379 \ # change to your etcd endpoints

--event-ttl=1h \

--kubelet-https=true \

--insecure-bind-address=192.168.80.153 \ # change to this host's IP

--runtime-config=rbac.authorization.k8s.io/v1alpha1 \

--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-cluster-ip-range=10.254.0.0/16 \

--service-node-port-range=30000-32000 \

--tls-cert-file=/etc/kubernetes/ssl/paas.pem \

--tls-private-key-file=/etc/kubernetes/ssl/paas-key.pem \

--experimental-bootstrap-token-auth \

--token-auth-file=/etc/kubernetes/token.csv \

--kubelet-client-certificate=/etc/kubernetes/ssl/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/ssl/apiserver-key.pem \

--v=2

Restart=on-failure

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

With RBAC disabled

vi /etc/systemd/system/kube-apiserver.service

[Unit]

Description=kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

User=root

ExecStart=/usr/local/bin/kube-apiserver \

--advertise-address=192.168.80.153 \ # change to this host's IP

--allow-privileged=true \

--apiserver-count=3 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/var/lib/audit.log \

--bind-address=192.168.80.153 \ # change to this host's IP

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--enable-swagger-ui=true \

--etcd-cafile=/etc/kubernetes/ssl/ca.pem \

--etcd-certfile=/etc/kubernetes/ssl/paas.pem \

--etcd-keyfile=/etc/kubernetes/ssl/paas-key.pem \

--etcd-servers=http://192.168.80.153:2379,http://192.168.80.145:2379,http://192.168.80.144:2379 \ # change to your etcd endpoints

--event-ttl=1h \

--kubelet-https=true \

--insecure-bind-address=192.168.80.153 \ # change to this host's IP

--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-cluster-ip-range=10.254.0.0/16 \

--service-node-port-range=30000-32000 \

--tls-cert-file=/etc/kubernetes/ssl/paas.pem \

--tls-private-key-file=/etc/kubernetes/ssl/paas-key.pem \

--experimental-bootstrap-token-auth \

--token-auth-file=/etc/kubernetes/token.csv \

--kubelet-client-certificate=/etc/kubernetes/ssl/apiserver.pem \

--kubelet-client-key=/etc/kubernetes/ssl/apiserver-key.pem \

--requestheader-client-ca-file=/etc/kubernetes/ssl/ca.pem \

--requestheader-allowed-names=aggregator \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-group-headers=X-Remote-Group \

--requestheader-username-headers=X-Remote-User \

--proxy-client-cert-file=/etc/kubernetes/ssl/paas.pem \

--proxy-client-key-file=/etc/kubernetes/ssl/paas-key.pem \

--runtime-config=api/all=true \

--enable-aggregator-routing=true \

--runtime-config=extensions/v1beta1/podsecuritypolicy=true \

--v=2

Restart=on-failure

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

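# Pay attention to --service-node-port-range=30000-32000

# This is the port range used when mapping services to external ports. Randomly assigned ports are taken from this range, and explicitly requested ports must also fall inside it.

# If several api-servers are running, the --etcd-quorum-read=true option is normally added to keep reads from the backing etcd consistent.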
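The node port rule above can be checked before a service manifest is written. A minimal sketch; `in_node_port_range` is a hypothetical helper that takes the port and the range in the same "low-high" form as the apiserver option:

```shell
# Succeed when a port lies inside the node port range (bounds inclusive).
# Arguments: port, range written as "low-high".
in_node_port_range() {
    port="$1"; low="${2%-*}"; high="${2#*-}"
    [ "$port" -ge "$low" ] && [ "$port" -le "$high" ]
}
```

For example, `in_node_port_range 30080 30000-32000` succeeds, while port 8080 is rejected for the same range.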

Start kube-apiserver

systemctl daemon-reload

systemctl enable kube-apiserver

systemctl start kube-apiserver

systemctl status kube-apiserver

Configure kube-controller-manager

# Create the kube-controller-manager.service file

vi /etc/systemd/system/kube-controller-manager.service

[Unit]

Description=kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \

--address=127.0.0.1 \

--master=http://192.168.80.66:8088 \ # this points at the VIP

--allocate-node-cidrs=true \

--service-cluster-ip-range=10.254.0.0/16 \

--cluster-cidr=10.233.0.0/16 \

--cluster-name=paas \

--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \

--root-ca-file=/etc/kubernetes/ssl/ca.pem \

--leader-elect=true \

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

The load balancing across the master endpoints is configured in tengine

The --cluster-cidr here must match the flannel base network

Start kube-controller-manager

systemctl daemon-reload

systemctl enable kube-controller-manager

systemctl start kube-controller-manager

systemctl status kube-controller-manager

Configure kube-scheduler

# Create the kube-scheduler.service file

vi /etc/systemd/system/kube-scheduler.service

[Unit]

Description=kubernetes Scheduler

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-scheduler \

--address=127.0.0.1 \

--master=http://192.168.80.66:8088 \ # the VIP address

--leader-elect=true \

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

Start kube-scheduler

systemctl daemon-reload

systemctl enable kube-scheduler

systemctl start kube-scheduler

systemctl status kube-scheduler

Verify the Master nodes

kubectl get componentstatuses

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health": "true"}

etcd-1 Healthy {"health": "true"}

etcd-2 Healthy {"health": "true"}

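Deploy the Node hosts

(Note: every node must be set up by hand; the files cannot simply be copied from another node)

Each Node needs these components: docker, flannel, kubectl, kubelet and kube-proxy.

Configure kubectl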

tar -xzvf kubernetes-client-linux-amd64.tar.gz

cp kubernetes/client/bin/* /usr/local/bin/

chmod a+x /usr/local/bin/kube*

# Verify the installation

kubectl version

Client Version: version.Info{Major:"1", Minor:"7", GitVersion:"v1.7.0", GitCommit:"d3ada0119e776222f11ec7945e6d860061339aad", GitTreeState:"clean", BuildDate:"2017-06-29T23:15:59Z", GoVersion:"go1.8.3", Compiler:"gc", Platform:"linux/amd64"}

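Configure kubelet

On start, kubelet sends a TLS bootstrapping request to kube-apiserver. The kubelet-bootstrap user from the bootstrap token file must first be bound to the system:node-bootstrapper role; only then is kubelet allowed to create certificate signing requests (certificatesigningrequests).

# Create the role binding first

# The user is the one configured in token.csv on the master

# This only needs to be created once for the whole cluster

Run the following command on any one master node: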

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

tar zxvf kubernetes-server-linux-amd64.tar.gz

cp -r kubernetes/server/bin/{kube-proxy,kubelet} /usr/local/bin/

Create the kubelet kubeconfig file

# Configure the cluster (from here on, everything runs on the node hosts)

(Note: --server takes a single master address)

kubectl config set-cluster paas \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=http://192.168.80.66:8088 \

--kubeconfig=bootstrap.kubeconfig

# Configure client authentication

(Note: replace the token with your own, the one in token.csv!)

kubectl config set-credentials kubelet-bootstrap \

--token=2f4d84afdd8c5459c9756c226b461168 \

--kubeconfig=bootstrap.kubeconfig

# Configure the context

kubectl config set-context default \

--cluster=paas \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

# Check that the server IP in bootstrap.kubeconfig is the address of your master

Move the generated bootstrap.kubeconfig file

mv bootstrap.kubeconfig /etc/kubernetes/

Create the kubelet.service file

mkdir -p /apps/var/lib/kubelet

vi /etc/systemd/system/kubelet.service

[Unit]

Description=kubernetes Kubelet

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/apps/var/lib/kubelet

ExecStart=/usr/local/bin/kubelet \

--root-dir=/apps/var/lib/kubelet \

--address=192.168.80.151 \ # this host's IP

--hostname-override=192.168.80.151 \ # this host's IP

--pod-infra-container-image=192.168.80.153/k8s/pause \ # pause image in the Harbor registry

--experimental-bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \

--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \

--require-kubeconfig \

--cert-dir=/etc/kubernetes/ssl \

--cluster_dns=10.254.0.2 \

--cluster_domain=cluster.local. \

--hairpin-mode promiscuous-bridge \

--allow-privileged=true \

--serialize-image-pulls=false \

--logtostderr=true \

--anonymous-auth=false \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--v=2

ExecStopPost=/sbin/iptables -A INPUT -s 10.0.0.0/8 -p tcp --dport 4194 -j ACCEPT

ExecStopPost=/sbin/iptables -A INPUT -s 172.16.0.0/12 -p tcp --dport 4194 -j ACCEPT

ExecStopPost=/sbin/iptables -A INPUT -s 192.168.0.0/16 -p tcp --dport 4194 -j ACCEPT

ExecStopPost=/sbin/iptables -A INPUT -p tcp --dport 4194 -j DROP

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

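# In the configuration above:

192.168.80.151 is this host's IP

10.254.0.2 is the pre-allocated DNS address

cluster.local. is the domain of the kubernetes cluster

192.168.80.153/k8s/pause is the pod base image, that is, gcr's gcr.io/google_containers/pause-amd64:3.0; pulling it once and pushing it to your own registry makes later pulls faster.

Start kubelet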

systemctl daemon-reload

systemctl enable kubelet

systemctl restart kubelet

systemctl status kubelet

Configure TLS authentication

# On a master node, look up the name of the csr

kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-EUE41uO5bofZZ-7GKD_V31oHXsENKFXCkLPy6Dj35Sc 1m kubelet-bootstrap Pending

# Approve the request

kubectl certificate approve node-csr-EUE41uO5bofZZ-7GKD_V31oHXsENKFXCkLPy6Dj35Sc

# Message shown on success

certificatesigningrequest "node-csr-EUE41uO5bofZZ-7GKD_V31oHXsENKFXCkLPy6Dj35Sc" approved
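With several nodes joining, copying each csr name by hand is error-prone. The sketch below extracts the names of pending requests from the `kubectl get csr` table so they can be approved in one pass; `pending_csr_names` is a hypothetical helper, it assumes CONDITION is the last column as in the output above, and `xargs -r` assumes GNU xargs:

```shell
# Read `kubectl get csr` output on stdin and print the names of Pending requests.
# The header row is skipped; the condition is taken from the last column.
pending_csr_names() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Example (commented out; needs a working kubectl):
# kubectl get csr | pending_csr_names | xargs -r kubectl certificate approve
```

Review the printed names before approving, since approval issues a client certificate to whoever sent the request.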

Verify the nodes

kubectl get nodes

NAME STATUS AGE VERSION

192.168.56.12 Ready 33s v1.7.0

# On success, the configuration file and keys are generated automatically

# Configuration file

ls /etc/kubernetes/kubelet.kubeconfig

/etc/kubernetes/kubelet.kubeconfig

# Key files

ls /etc/kubernetes/ssl/kubelet*

/etc/kubernetes/ssl/kubelet-client.crt /etc/kubernetes/ssl/kubelet.crt

/etc/kubernetes/ssl/kubelet-client.key /etc/kubernetes/ssl/kubelet.key

Configure kube-proxy

Create the kube-proxy certificate

# cfssl is not installed on the node hosts,

# so go back to a master host, create the certificate there, and copy it over

[root@k8s-master-1 ~]# cd /opt/ssl

vi kube-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai",

"O": "k8s",

"OU": "System"

}

]

}

Generate the kube-proxy certificate and private key

cfssl gencert -ca=/etc/kubernetes/ssl/ca.pem \

-ca-key=/etc/kubernetes/ssl/ca-key.pem \

-config=/etc/kubernetes/ssl/config.json \

-profile=paas kube-proxy-csr.json |cfssljson -bare kube-proxy

# Check the generated files

ls kube-proxy*

kube-proxy.csr kube-proxy-csr.json kube-proxy-key.pem kube-proxy.pem

# Copy to the target directory

scp kube-proxy*.pem root@NODE_IP:/etc/kubernetes/ssl/

Copy to the Node hosts

scp kube-proxy*.pem 192.168.56.14:/etc/kubernetes/ssl/

scp kube-proxy*.pem 192.168.56.15:/etc/kubernetes/ssl/

Create the kube-proxy kubeconfig file

# Configure the cluster

kubectl config set-cluster paas \

--certificate-authority=/etc/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=http://192.168.80.66:8088 \

--kubeconfig=kube-proxy.kubeconfig

# Configure client authentication

kubectl config set-credentials kube-proxy \

--client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \

--client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

# Configure the context

kubectl config set-context default \

--cluster=paas \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

# Set the default context

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

# Move to the target directory

mv kube-proxy.kubeconfig /etc/kubernetes/

Create the kube-proxy.service file

mkdir -p /var/lib/kube-proxy

vi /etc/systemd/system/kube-proxy.service

[Unit]

Description=kubernetes Kube-Proxy Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

WorkingDirectory=/var/lib/kube-proxy

ExecStart=/usr/local/bin/kube-proxy \

--bind-address=192.168.80.151 \ # this node's IP

--hostname-override=192.168.80.151 \ # this node's IP

--cluster-cidr=10.254.0.0/16 \

--kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig \

--logtostderr=true \

--v=2

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Start kube-proxy

systemctl daemon-reload

systemctl enable kube-proxy

systemctl start kube-proxy

systemctl status kube-proxy