Python: using Scrapy to crawl the Douban Top 250 movies, save them as CSV and JSON, store them in MySQL, and download the poster images

Target URL: https://movie.douban.com/top250

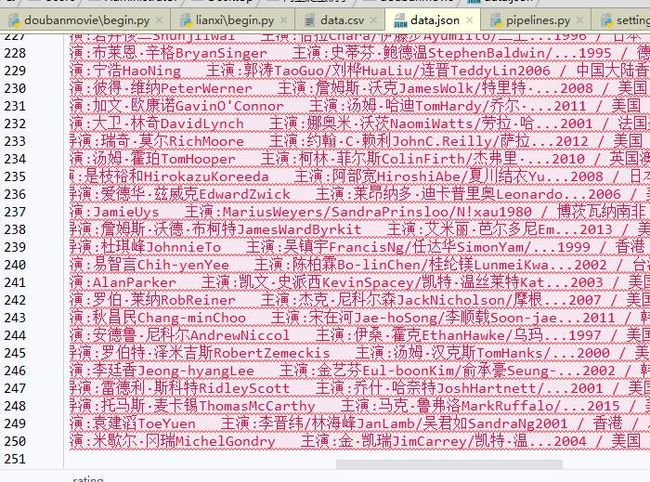

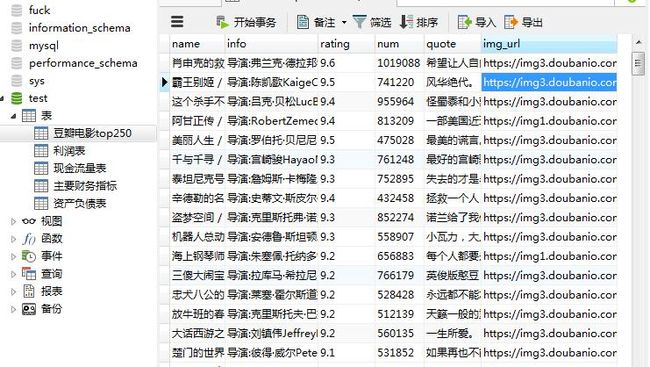

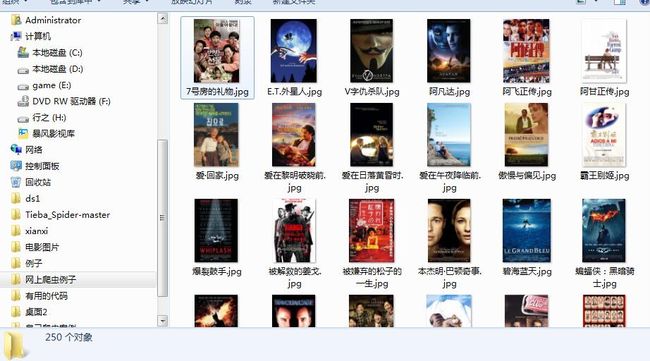

Final outputs: a CSV file, a JSON file, rows in MySQL, and the downloaded poster images.

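Key points:

1. `middlewares.py`: set the User-Agent (UA).
2. `pipelines.py`:
   - Save as a JSON file; pay attention to how `json.dumps()` is used (`ensure_ascii=False` keeps Chinese text readable).
   - Save as a CSV file; pay attention to how the file is opened (`newline=''` and so on), and use `os.path.getsize` to get the file size so the header row is written only once.
   - Insert the data into MySQL with lazystore; the table has to be created first in begin.py.
   - Download the poster images and rename the files after the movies. PS: this pipeline must run last, because after the renaming the items come out scrambled! A likely cause is reusing a single `DoubanmovieItem` object for every movie: while the image pipeline waits for downloads, the spider keeps overwriting the shared item. Creating a new item inside the loop avoids the mix-up.
3. `settings.py`: configure retries, the download delay, cookies, the UA, the pipeline execution order, and the image download settings.
4. `begin.py`: create the table with lazystore, start the spider, plus a shortcut for saving the CSV file directly.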
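The two file-writing points under item 2 can be tried out in plain Python. This is a minimal standalone sketch, not the pipeline code itself: it uses plain dicts instead of Scrapy items, and the function names `append_json_line` and `append_csv_row` are hypothetical.

```python
import csv
import json
import os

FIELDS = ('name', 'info', 'rating', 'num', 'quote', 'img_url')


def append_json_line(path, item):
    """Append one item as a single JSON line (one JSON object per line)."""
    with open(path, 'a', encoding='utf-8') as f:
        # ensure_ascii=False writes Chinese characters as-is instead of \uXXXX escapes
        f.write(json.dumps(item, ensure_ascii=False) + '\n')


def append_csv_row(path, item):
    """Append one item as a CSV row, writing the header only when the file is empty."""
    # newline='' lets the csv module control line endings (no blank rows on Windows)
    with open(path, 'a', encoding='utf-8', newline='') as f:
        writer = csv.writer(f)
        # Opening in 'a' mode creates the file, so getsize() is safe here;
        # a size of 0 means nothing has been written yet
        if os.path.getsize(path) == 0:
            writer.writerow(FIELDS)
        writer.writerow([item[k] for k in FIELDS])
```

Calling `append_csv_row` twice on a new file yields one header row followed by two data rows, which is the same check the CSV pipeline performs with `os.path.getsize`.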

The main spider, spider.py (the content is actually quite simple!):

```python
import scrapy
from doubanmovie.items import DoubanmovieItem


class XianxiSpider(scrapy.Spider):
    name = "doubanmovie"  # the name that `scrapy crawl` in begin.py refers to
    allowed_domains = ['movie.douban.com']
    start_urls = ['https://movie.douban.com/top250']
    # Top 250 = 10 pages of 25 movies each

    def parse(self, response):
        movies = response.xpath('//div[@class="item"]')
        for movie in movies:
            # Create a NEW item for every movie; reusing one object makes
            # items that are still in the pipelines change underneath them
            item = DoubanmovieItem()

            title = movie.xpath('.//div[@class="hd"]/a').xpath('string(.)').extract()
            name = "".join(title).strip()
            item['name'] = name.replace('\r\n', '').replace(' ', '').replace('\n', '')

            infos = movie.xpath('.//div[@class="bd"]/p').xpath('string(.)').extract()
            info = "".join(infos).strip()
            item['info'] = info.replace('\r\n', '').replace(' ', '').replace('\n', '')

            item['rating'] = movie.xpath('.//span[@class="rating_num"]/text()').extract()[0].strip()
            # The text looks like "123456人评价"; [:-3] drops the trailing "人评价" ("people rated")
            item['num'] = movie.xpath('.//div[@class="star"]/span[last()]/text()').extract()[0].strip()[:-3]

            quotes = movie.xpath('.//span[@class="inq"]/text()').extract()
            # '木有!' ("none!") is the placeholder for movies without a quote
            item['quote'] = quotes[0].strip() if quotes else '木有!'
            item['img_url'] = movie.xpath('.//img/@src').extract()[0]
            yield item

        # The last page has no "next" link, so check for it instead of catching an exception
        next_page = response.xpath('//span[@class="next"]/a/@href').extract_first()
        if next_page is None:
            self.logger.info('Reached the last page!')
        else:
            # next_page is a relative link such as "?start=25&filter="
            yield scrapy.Request(response.urljoin(next_page), callback=self.parse)
```
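Why a new item per movie matters can be shown without Scrapy. In this hypothetical sketch, plain dicts stand in for `DoubanmovieItem`, and `list()` plays the role of a consumer that only reads the items after the loop has moved on, similar to a pipeline that is still waiting on an image download.

```python
def parse_shared(titles):
    """Problematic pattern: one dict is created before the loop and mutated each time."""
    item = {}
    for title in titles:
        item['name'] = title
        yield item  # every yielded value is the SAME object


def parse_fresh(titles):
    """Fixed pattern: a new dict is created for every movie."""
    for title in titles:
        item = {'name': title}
        yield item


shared = list(parse_shared(['A', 'B', 'C']))
fresh = list(parse_fresh(['A', 'B', 'C']))
print([i['name'] for i in shared])  # ['C', 'C', 'C']
print([i['name'] for i in fresh])   # ['A', 'B', 'C']
```

Any consumer that reads an item late sees whatever the shared object was last set to, which matches the "scrambled items" symptom described in the key points.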

items.py (defines the item):

```python
import scrapy


class DoubanmovieItem(scrapy.Item):
    name = scrapy.Field()     # movie title
    info = scrapy.Field()     # movie info
    rating = scrapy.Field()   # rating score
    num = scrapy.Field()      # number of people who rated
    quote = scrapy.Field()    # one-line quote
    img_url = scrapy.Field()  # poster image URL
```

middlewares.py (sets the UA using the fake_useragent module):

```python
from fake_useragent import UserAgent
from scrapy import signals


class RandomUserAgent(object):
    def __init__(self):
        self.agent = UserAgent()  # header generator from fake_useragent

    def process_request(self, request, spider):
        # Pick a random User-Agent for each request (only if none is set yet)
        request.headers.setdefault('User-Agent', self.agent.random)

    @classmethod
    def from_crawler(cls, crawler):
        # Copied from the generated middlewares.py template; Scrapy uses it to build
        # the middleware (without it, Scrapy would simply call cls())
        s = cls()
        crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
        return s

    def spider_opened(self, spider):
        spider.logger.info('Spider opened: %s' % spider.name)
```
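If you would rather not depend on fake_useragent, the same idea can be sketched with a fixed list and `random.choice`. The class name `RandomUserAgentFromList` and the two UA strings are illustrative assumptions; Scrapy only needs a `process_request(request, spider)` method, and returning `None` lets the request continue through the remaining middlewares.

```python
import random


class RandomUserAgentFromList:
    """Hypothetical downloader middleware: picks a User-Agent from a fixed list."""

    # Illustrative UA strings; replace with your own list
    USER_AGENTS = [
        'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
        '(KHTML, like Gecko) Chrome/120.0 Safari/537.36',
        'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 '
        '(KHTML, like Gecko) Version/17.0 Safari/605.1.15',
    ]

    def process_request(self, request, spider):
        # setdefault only fills the header if no earlier component has set it
        request.headers.setdefault('User-Agent', random.choice(self.USER_AGENTS))
        return None  # continue processing the request normally
```

It would be registered in `DOWNLOADER_MIDDLEWARES` the same way as `RandomUserAgent`.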

pipelines.py (the key part!! four pipelines: CSV, JSON, MySQL, images):

```python
import csv
import json
import os
import re

import scrapy
from scrapy.pipelines.images import ImagesPipeline
from lazyspider.lazystore import LazyMysql

FIELDS = ('name', 'info', 'rating', 'num', 'quote', 'img_url')


class JsonPipeline(object):
    '''Save items to data.json, one JSON object per line'''
    def process_item(self, item, spider):
        file_path = os.path.join(os.getcwd(), 'data.json')
        with open(file_path, 'a', encoding='utf-8') as f:
            line = json.dumps(dict(item), ensure_ascii=False) + "\n"
            f.write(line)
        # This pipeline runs first; it must return the item, otherwise the next pipeline never gets it
        return item


class ImagePipeline(ImagesPipeline):
    '''Download posters and name each file after the movie'''
    def get_media_requests(self, item, info):
        # Works like a normal spider request; the name is passed along in meta
        yield scrapy.Request(item['img_url'], meta={'name': item['name']})

    def file_path(self, request, response=None, info=None, *, item=None):
        # The name must come through meta; the return value is the path under IMAGES_STORE
        newname = re.search(r'(\S+)', request.meta['name']).group(1)
        return 'full/%s.jpg' % newname


class CSVPipeline(object):
    '''Save items to data.csv; writerow() takes a tuple or a list'''
    def process_item(self, item, spider):
        file_path = os.path.join(os.getcwd(), 'data.csv')
        # newline='' is required, otherwise blank rows appear on Windows
        with open(file_path, 'a', encoding='utf-8', newline='') as f:
            writer = csv.writer(f, dialect="excel")
            if os.path.getsize(file_path) == 0:  # empty file: add the column names first
                writer.writerow(FIELDS)
            writer.writerow(tuple(item[k] for k in FIELDS))
        return item


class DBPipeline(object):
    '''Save items to MySQL'''
    TEST_DB = {
        'host': 'localhost',
        'user': 'your_username',      # replace with your MySQL user name
        'password': 'your_password',  # replace with your MySQL password
        'db': 'test',
    }

    def open_spider(self, spider):
        # Connect once per crawl instead of once per item
        self.store = LazyMysql(self.TEST_DB)

    def process_item(self, item, spider):
        # The table is created in begin.py; LazyMysql inserts the item directly
        self.store.save_one_data('豆瓣电影TOP250', dict(item))
        return item
```
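The renaming in `file_path` relies on `re.search(r'(\S+)', ...)` stopping at the first whitespace character. The helper below is a hypothetical standalone version of that step. It assumes the Chinese title and the other titles on the page are separated by non-breaking spaces (`\xa0`), which the spider's `.replace(' ', '')` does not remove; in Python 3, `\S` treats `\xa0` as whitespace, so only the Chinese title is kept (and any `/` between titles never ends up in the path). The `'unknown'` fallback is an added assumption.

```python
import re


def poster_filename(name):
    """Return the image path used for a movie: first whitespace-free token + '.jpg'."""
    # For str patterns, \S excludes Unicode whitespace, including the non-breaking space \xa0
    match = re.search(r'\S+', name)
    newname = match.group(0) if match else 'unknown'  # hypothetical fallback for empty names
    return 'full/%s.jpg' % newname


print(poster_filename('示例电影\xa0/\xa0SampleMovie'))  # full/示例电影.jpg
```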

settings.py (sets the pipeline order, the UA, the download delay, the image path, etc.):

```python
import os

BOT_NAME = 'doubanmovie'

SPIDER_MODULES = ['doubanmovie.spiders']
NEWSPIDER_MODULE = 'doubanmovie.spiders'

RETRY_ENABLED = False    # do not retry failed requests
DOWNLOAD_DELAY = 0.5     # seconds to wait between requests
COOKIES_ENABLED = False  # do not send cookies

DOWNLOADER_MIDDLEWARES = {
    'doubanmovie.middlewares.RandomUserAgent': 20,
    # disable Scrapy's built-in User-Agent middleware
    'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,
}

# Lower values run first: JSON -> CSV -> MySQL -> images
ITEM_PIPELINES = {
    'doubanmovie.pipelines.JsonPipeline': 1,
    'doubanmovie.pipelines.CSVPipeline': 2,
    'doubanmovie.pipelines.DBPipeline': 3,
    'doubanmovie.pipelines.ImagePipeline': 4,  # !!! the image download must run last
}

# Image download settings
IMAGES_STORE = os.getcwd()  # storage directory (images go into full/ under it)
IMAGES_EXPIRES = 90         # images fetched within the last 90 days are not downloaded again

ROBOTSTXT_OBEY = False
```
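Scrapy runs item pipelines in ascending order of the numbers in `ITEM_PIPELINES`, not in the order the dict lists them. The sketch below only mimics that ordering rule (it is not Scrapy's internal code) to show which pipeline runs last with the original values:

```python
ITEM_PIPELINES = {
    'doubanmovie.pipelines.JsonPipeline': 1,
    'doubanmovie.pipelines.ImagePipeline': 4,
    'doubanmovie.pipelines.DBPipeline': 3,
    'doubanmovie.pipelines.CSVPipeline': 2,
}

# Sort the pipeline paths by their priority value, lowest first
run_order = sorted(ITEM_PIPELINES, key=ITEM_PIPELINES.get)
for path in run_order:
    print(path.rsplit('.', 1)[-1])
# JsonPipeline
# CSVPipeline
# DBPipeline
# ImagePipeline
```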

begin.py (used to start Scrapy; it creates the MySQL table first. Put this file in the project's top-level directory):

```python
from scrapy import cmdline
from lazyspider.lazystore import LazyMysql

# Create the MySQL table first
TEST_DB = {
    'host': 'localhost',
    'user': 'your_username',      # replace with your MySQL user name
    'password': 'your_password',  # replace with your MySQL password
    'db': 'test',
}
store = LazyMysql(TEST_DB)
# char(30) is too short for info and img_url, so use wider columns
sql = ("CREATE TABLE IF NOT EXISTS 豆瓣电影TOP250 "
       "(name VARCHAR(255), info TEXT, rating VARCHAR(10), num VARCHAR(20), "
       "quote VARCHAR(255), img_url VARCHAR(255)) "
       "ENGINE=InnoDB DEFAULT CHARSET=utf8")
store.query(sql)

# Run scrapy
cmdline.execute("scrapy crawl doubanmovie".split())
```
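LazyMysql's internals are not shown here, so the "create the table, then insert each item as one row" pattern is sketched below with the standard-library `sqlite3` module purely so it runs without a MySQL server. The table name `movies` and the helpers `create_table` and `save_one` are hypothetical; MySQL drivers such as PyMySQL use `%s` placeholders instead of `?`.

```python
import sqlite3

FIELDS = ('name', 'info', 'rating', 'num', 'quote', 'img_url')


def create_table(conn):
    # TEXT columns avoid the truncation a fixed char(30) causes for long info/img_url values
    cols = ', '.join('%s TEXT' % f for f in FIELDS)
    conn.execute('CREATE TABLE IF NOT EXISTS movies (%s)' % cols)


def save_one(conn, item):
    """Insert one item dict as a row; values go through placeholders, not string formatting."""
    keys = [k for k in FIELDS if k in item]
    placeholders = ', '.join('?' * len(keys))  # e.g. '?, ?, ?'
    sql = 'INSERT INTO movies (%s) VALUES (%s)' % (', '.join(keys), placeholders)
    conn.execute(sql, [item[k] for k in keys])
    conn.commit()


conn = sqlite3.connect(':memory:')
create_table(conn)
create_table(conn)  # IF NOT EXISTS makes a second call harmless
```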

PS, a side note: the CSV file can actually be created directly in begin.py with Scrapy's `-o` option:

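```python
# Feed export writes every scraped item to info.csv; the format is inferred from the .csv extension
cmdline.execute("scrapy crawl doubanmovie -o info.csv".split())
```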
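In Scrapy 2.1 and later, the same export can also be configured once in settings.py through the `FEEDS` setting instead of on the command line. A minimal fragment, assuming the same `info.csv` file name:

```python
# settings.py (Scrapy 2.1+): export every scraped item to info.csv without a custom pipeline
FEEDS = {
    'info.csv': {
        'format': 'csv',
        'encoding': 'utf-8',
    },
}
```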

Results: