Implementing a Kafka producer and consumer in Java

1. Environment

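I use the single-node ZooKeeper and single-node Kafka I set up earlier on Tencent Cloud. The installation of ZooKeeper and of Kafka is covered in my previous two blog posts.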

ZooKeeper and Kafka run on the same machine: I don't have many servers, and for a simple Java demo there is no need to start with a cluster.

One problem I ran into:

```
Exception in thread "main" kafka.common.FailedToSendMessageException: Failed to send messages after 3 tries.
	at kafka.producer.async.DefaultEventHandler.handle(DefaultEventHandler.scala:90)
	at kafka.producer.Producer.send(Producer.scala:76)
	at kafka.javaapi.producer.Producer.send(Producer.scala:33)
	at com.tuan55.kafka.test.TestP.main(TestP.java:20)
```

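The exception is thrown by the producer code after it connects to the server. The Kafka server configuration contains zookeeper.connect=xx.xx.xx.xx:2181, so the broker registers itself with ZooKeeper, and the address it registers is the one clients are told to connect to.

If that address is a hostname the client machine cannot resolve (because there is no matching entry in its hosts file), every attempt fails, and after 3 tries (the default value of the producer's message.send.max.retries) the producer throws the error above.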
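To confirm this diagnosis from the client machine, a name-resolution check is enough. The sketch below is a hypothetical helper (BrokerHostCheck is not part of Kafka) that only asks the JDK's InetAddress.getByName whether a name resolves. Which name to pass is an assumption on your side: when neither host.name nor advertised.host.name is set, the broker advertises the machine's canonical hostname, which is likely the machine name seen in the shell prompt later in this post (VM_0_7_centos).

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

// Hypothetical diagnostic helper (not part of Kafka): checks whether this
// machine can resolve the host name a broker advertises to clients.
class BrokerHostCheck {

    // Returns true if the name (or IP literal) resolves to an address.
    static boolean resolves(String host) {
        try {
            InetAddress.getByName(host);
            return true;
        } catch (UnknownHostException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Pass the broker's advertised host name as the first argument.
        String host = args.length > 0 ? args[0] : "localhost";
        if (resolves(host)) {
            System.out.println(host + " resolves");
        } else {
            System.out.println(host + " does not resolve: add a hosts entry or set advertised.host.name");
        }
    }
}
```

For example, `java BrokerHostCheck VM_0_7_centos` on the development machine. If the name does not resolve, either add a hosts entry on the client or make the broker advertise an address the client can reach.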

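Kafka's server.properties file is where these two settings live (host.name and advertised.host.name, shown below). It also contains the ZooKeeper connection string (zookeeper.connect), which you can change as well. Because I run both on the same machine, the default localhost value works, so there is no need to change it.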
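The comments in the file below spell out the fallback order for the address a broker advertises: advertised.host.name, then host.name, then the canonical host name of the machine; the advertised port falls back to the port the broker binds to. The following is a minimal sketch of that order, written as a hypothetical helper (AdvertisedAddress is not a Kafka class, it assumes the canonical name of the local host is what the comment means, and, unlike the real broker, it simply fails if no port is configured). It is only meant for reasoning about which address clients will be told to use.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.Properties;

// Hypothetical helper (not Kafka code): mirrors the fallback order described
// in the comments of server.properties for the address a broker advertises.
class AdvertisedAddress {

    // Returns the trimmed value of key, or null if it is missing or blank.
    private static String get(Properties p, String key) {
        String v = p.getProperty(key);
        return (v == null || v.trim().isEmpty()) ? null : v.trim();
    }

    static String advertisedHost(Properties p) {
        String host = get(p, "advertised.host.name");
        if (host == null) {
            host = get(p, "host.name");
        }
        if (host == null) {
            try {
                // Last resort named in the file's comments
                host = InetAddress.getLocalHost().getCanonicalHostName();
            } catch (UnknownHostException e) {
                throw new IllegalStateException("cannot determine local host name", e);
            }
        }
        return host;
    }

    static int advertisedPort(Properties p) {
        String port = get(p, "advertised.port");
        if (port == null) {
            port = get(p, "port");
        }
        if (port == null) {
            // Simplification: the real broker falls back to its own default port
            throw new IllegalArgumentException("no port configured");
        }
        return Integer.parseInt(port);
    }

    static String advertisedEndpoint(Properties p) {
        return advertisedHost(p) + ":" + advertisedPort(p);
    }

    public static void main(String[] args) {
        Properties p = new Properties();
        p.setProperty("port", "9092");
        p.setProperty("host.name", "172.16.0.7");             // made-up private address
        p.setProperty("advertised.host.name", "203.0.113.10"); // made-up public address
        System.out.println(advertisedEndpoint(p)); // prints 203.0.113.10:9092
    }
}
```

This is why the setup below binds to the internal address but advertises the public one: clients outside the cloud network are handed an address they can actually reach.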

```properties
############################# Server Basics #############################

# The id of the broker. This must be set to a unique integer for each broker.
broker.id=0

############################# Socket Server Settings #############################

# The port the socket server listens on
port=9092

# Hostname the broker will bind to. If not set, the server will bind to all interfaces
#host.name=localhost
# Tencent Cloud internal (private) address
host.name=172.x.x.x

# Hostname the broker will advertise to producers and consumers. If not set, it uses the
# value for "host.name" if configured. Otherwise, it will use the value returned from
# java.net.InetAddress.getCanonicalHostName().
#advertised.host.name=
# Tencent Cloud external (public) IP
advertised.host.name=211.159.160.xxx

# The port to publish to ZooKeeper for clients to use. If this is not set,
# it will publish the same port that the broker binds to.
#advertised.port=

# The number of threads handling network requests
num.network.threads=3

# The number of threads doing disk I/O
num.io.threads=8

# The send buffer (SO_SNDBUF) used by the socket server
socket.send.buffer.bytes=102400

# The receive buffer (SO_RCVBUF) used by the socket server
```

P.S. On Alibaba Cloud you may also need to open the ZooKeeper and Kafka ports (2181 and 9092) in the management console.
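One detail about this file: Kafka loads server.properties with java.util.Properties, which treats a line as a comment only when its first non-blank character is `#` or `!`. A note appended after a value with `//` is therefore read as part of the value, so notes belong on their own `#` lines. The small demo below (TrailingCommentDemo is a made-up class and the address is invented) shows the parsing behavior with nothing but the JDK.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Demo (not Kafka code): how java.util.Properties treats a trailing "//" note.
class TrailingCommentDemo {

    // Parses properties text the same way Properties.load does for a file.
    static Properties parse(String text) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return p;
    }

    public static void main(String[] args) {
        Properties p = parse(
                "host.name=172.16.0.7 //internal address\n"
                + "# a real comment line\n"
                + "port=9092\n");
        // The "//" text stays in the value; only the "#" line is skipped.
        System.out.println("[" + p.getProperty("host.name") + "]"); // prints [172.16.0.7 //internal address]
        System.out.println(p.size());                               // prints 2
    }
}
```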

2. Project structure: a Java Maven project

3. pom.xml

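```xml
<dependencies>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>4.11</version>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka_2.9.2</artifactId>
        <version>0.8.1.1</version>
        <exclusions>
            <exclusion>
                <groupId>com.sun.jmx</groupId>
                <artifactId>jmxri</artifactId>
            </exclusion>
            <exclusion>
                <groupId>javax.jms</groupId>
                <artifactId>jms</artifactId>
            </exclusion>
            <exclusion>
                <groupId>com.sun.jdmk</groupId>
                <artifactId>jmxtools</artifactId>
            </exclusion>
        </exclusions>
    </dependency>
    <dependency>
        <groupId>org.apache.avro</groupId>
        <artifactId>avro</artifactId>
        <version>1.7.3</version>
    </dependency>
    <dependency>
        <groupId>org.apache.avro</groupId>
        <artifactId>avro-ipc</artifactId>
        <version>1.7.3</version>
    </dependency>
</dependencies>
```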

4. Producer

```java
import kafka.javaapi.producer.Producer;
import kafka.producer.KeyedMessage;
import kafka.producer.ProducerConfig;
import kafka.serializer.StringEncoder;

import java.util.Properties;

public class ProducerTest {

    public static void main(String[] args) throws Exception {
        Properties prop = new Properties();
        // Ignored by the 0.8 producer, which finds brokers via metadata.broker.list
        prop.put("zookeeper.connect", "211.159.160.228:2181");
        // Broker(s) used to bootstrap topic metadata
        prop.put("metadata.broker.list", "211.159.160.228:9092");
        // Encode messages as strings
        prop.put("serializer.class", StringEncoder.class.getName());
        // Uncomment to wait for the leader's acknowledgement (the 0.8 default, 0, does not wait)
        //prop.put("request.required.acks", "1");

        Producer<String, String> producer = new Producer<String, String>(new ProducerConfig(prop));

        int i = 0;
        // Send one message per second to topic "stevetao" until the process is stopped
        while (true) {
            producer.send(new KeyedMessage<String, String>("stevetao", "msg:" + i++));
            System.out.println("Message sent successfully!");
            Thread.sleep(1000);
        }
    }
}
```

5. Consumer

```java
import kafka.consumer.Consumer;
import kafka.consumer.ConsumerConfig;
import kafka.consumer.ConsumerIterator;
import kafka.consumer.KafkaStream;
import kafka.javaapi.consumer.ConsumerConnector;

import java.nio.charset.StandardCharsets;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Properties;

public class ConsumerTest {

    static final String topic = "stevetao";

    public static void main(String[] args) {
        Properties prop = new Properties();
        // The 0.8 high-level consumer finds brokers and stores offsets through ZooKeeper
        prop.put("zookeeper.connect", "211.159.160.228:2181");
        // Consumer group; committed offsets are tracked per group
        prop.put("group.id", "group1");
        // metadata.broker.list and serializer.class are producer settings, so they are not set here

        ConsumerConnector consumer = Consumer.createJavaConsumerConnector(new ConsumerConfig(prop));

        // Request one stream (one consuming thread) for the topic
        Map<String, Integer> topicCountMap = new HashMap<String, Integer>();
        topicCountMap.put(topic, 1);
        Map<String, List<KafkaStream<byte[], byte[]>>> messageStreams = consumer.createMessageStreams(topicCountMap);

        final KafkaStream<byte[], byte[]> kafkaStream = messageStreams.get(topic).get(0);
        ConsumerIterator<byte[], byte[]> iterator = kafkaStream.iterator();

        // hasNext() blocks until a message arrives (consumer.timeout.ms defaults to -1)
        while (iterator.hasNext()) {
            // The producer's StringEncoder writes UTF-8 by default
            String msg = new String(iterator.next().message(), StandardCharsets.UTF_8);
            System.out.println("Received message: " + msg);
        }
    }
}
```

6. Start ZooKeeper and Kafka (the commands are in my earlier posts, so I won't repeat them here)

```
[root@VM_0_7_centos kafka2.10]# ps -ef| grep kafka
root 13903 12991 1 11:20 pts/0 00:00:08 /usr/app/jdk1.8.0_161/bin/java -Xmx1G -Xms1G -server -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:+CMSClassUnloadingEnabled -XX:+CMSScavengeBeforeRemark -XX:+DisableExplicitGC -Djava.awt.headless=true -Xloggc:/usr/app/kafka2.10/bin/../logs/kafkaServer-gc.log -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -XX:+PrintGCTimeStamps -Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false -Dkafka.logs.dir=/usr/app/kafka2.10/bin/../logs -Dlog4j.configuration=file:./bin/../config/log4j.properties -cp .:/usr/app/jdk1.8.0_161/jre/lib/rt.jar:/usr/app/jdk1.8.0_161/jre/lib/dt.jar:/usr/app/jdk1.8.0_161/lib/dt.jar:/usr/app/jdk1.8.0_161/lib/tools.jar:/usr/app/kafka2.10/bin/../core/build/dependant-libs-2.10.4*/*.jar:/usr/app/kafka2.10/bin/../examples/build/libs//kafka-examples*.jar:/usr/app/kafka2.10/bin/../contrib/hadoop-consumer/build/libs//kafka-hadoop-consumer*.jar:/usr/app/kafka2.10/bin/../contrib/hadoop-producer/build/libs//kafka-hadoop-producer*.jar:/usr/app/kafka2.10/bin/../clients/build/libs/kafka-clients*.jar:/usr/app/kafka2.10/bin/../libs/jopt-simple-3.2.jar:/usr/app/kafka2.10/bin/../libs/kafka_2.10-0.8.2.0.jar:/usr/app/kafka2.10/bin/../libs/kafka_2.10-0.8.2.0-javadoc.jar:/usr/app/kafka2.10/bin/../libs/kafka_2.10-0.8.2.0-scaladoc.jar:/usr/app/kafka2.10/bin/../libs/kafka_2.10-0.8.2.0-sources.jar:/usr/app/kafka2.10/bin/../libs/kafka_2.10-0.8.2.0-test.jar:/usr/app/kafka2.10/bin/../libs/kafka-clients-0.8.2.0.jar:/usr/app/kafka2.10/bin/../libs/log4j-1.2.16.jar:/usr/app/kafka2.10/bin/../libs/lz4-1.2.0.jar:/usr/app/kafka2.10/bin/../libs/metrics-core-2.2.0.jar:/usr/app/kafka2.10/bin/../libs/scala-library-2.10.4.jar:/usr/app/kafka2.10/bin/../libs/slf4j-api-1.7.6.jar:/usr/app/kafka2.10/bin/../libs/slf4j-log4j12-1.6.1.jar:/usr/app/kafka2.10/bin/../libs/snappy-java-1.1.1.6.jar:/usr/app/kafka2.10/bin/../libs/zkclient-0.3.jar:/usr/app/kafka2.10/bin/../libs/zookeeper-3.4.6.jar:/usr/app/kafka2.10/bin/../core/build/libs/kafka_2.10*.jar kafka.Kafka config/server.properties
root 14409 12991 0 11:29 pts/0 00:00:00 grep --color=auto kafka
[root@VM_0_7_centos kafka2.10]# vim config/server.properties
[root@VM_0_7_centos kafka2.10]# jps
7029 QuorumPeerMain
16763 Bootstrap
17612 Jps
15165 Bootstrap
13903 Kafka
[root@VM_0_7_centos kafka2.10]#
```
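jps only shows that ZooKeeper (QuorumPeerMain) and Kafka are running on the server. Whether the development machine can actually reach ports 2181 and 9092 through the cloud firewall or security group is a separate question, and a plain TCP connect with a timeout answers it. This is a hypothetical helper (PortCheck is not part of Kafka or ZooKeeper); a successful connect only shows the port is open, not that the service behind it is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper (not Kafka/ZooKeeper code): plain TCP reachability check.
class PortCheck {

    // Returns true if a TCP connection to host:port succeeds within timeoutMs.
    static boolean isReachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Covers refused connections, timeouts and unresolvable host names
            return false;
        }
    }

    public static void main(String[] args) {
        String host = args.length > 0 ? args[0] : "localhost";
        int[] ports = {2181, 9092}; // ZooKeeper and Kafka ports used in this post
        for (int port : ports) {
            boolean ok = isReachable(host, port, 3000);
            System.out.println(host + ":" + port + (ok ? " reachable" : " NOT reachable"));
        }
    }
}
```

Run it from the development machine with the server's public IP as the argument; a "NOT reachable" result for either port points at the firewall or security group rather than at the Java code.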

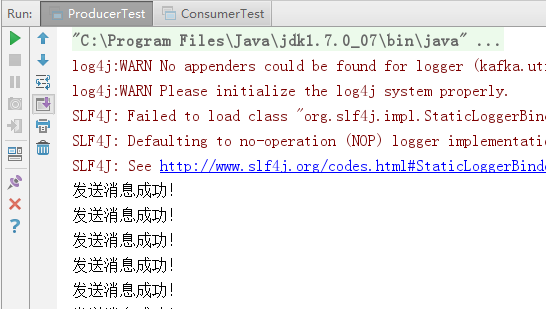

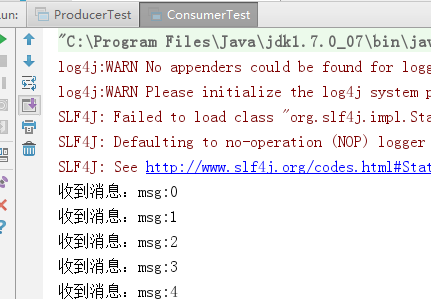

7. Run the Java code

Remember to start the consumer first, and when shutting down, stop the producer first.
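Why the consumer should start first: with the 0.8 high-level consumer, a group that has no committed offset yet (such as group1 on its first run) starts wherever auto.offset.reset says, and its default is largest, the end of the log. Messages sent before the consumer first connects are therefore skipped. The toy model below is plain Java, not Kafka code (OffsetResetModel and readAsNewGroup are made up for illustration), and it models a single partition only. It shows what a new group reads under each of the two policies.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Toy in-memory model (not Kafka code): where a consumer group with no committed
// offset starts reading one partition, for the two 0.8 auto.offset.reset values.
class OffsetResetModel {

    // existing: messages already in the log when the group first connects
    // later:    messages the producer sends after that
    static List<String> readAsNewGroup(List<String> existing, List<String> later, String autoOffsetReset) {
        int start;
        if ("smallest".equals(autoOffsetReset)) {
            start = 0;                 // oldest offset still in the log
        } else if ("largest".equals(autoOffsetReset)) {
            start = existing.size();   // end of the log at connect time
        } else {
            throw new IllegalArgumentException("unsupported policy: " + autoOffsetReset);
        }
        List<String> log = new ArrayList<>(existing);
        log.addAll(later);
        return new ArrayList<>(log.subList(start, log.size()));
    }

    public static void main(String[] args) {
        List<String> sentBeforeConsumer = Arrays.asList("msg:0", "msg:1");
        List<String> sentAfterConsumer = Arrays.asList("msg:2", "msg:3");
        System.out.println(readAsNewGroup(sentBeforeConsumer, sentAfterConsumer, "largest"));  // prints [msg:2, msg:3]
        System.out.println(readAsNewGroup(sentBeforeConsumer, sentAfterConsumer, "smallest")); // prints [msg:0, msg:1, msg:2, msg:3]
    }
}
```

If a brand-new group should read from the beginning instead, adding `prop.put("auto.offset.reset", "smallest");` to the consumer's properties is the configuration-level alternative to the start-order rule.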