Lab topology diagram:

Hostname configuration on node1

[root@localhost ~]# vim /etc/sysconfig/network

HOSTNAME=node1.a.com

[root@localhost ~]# hostname node1.a.com # log out and back in for the change to take effect

[root@localhost ~]# hostname # check the hostname

node1.a.com

Configure local name resolution

[root@node1 ~]# vim /etc/hosts

192.168.2.10 node1.a.com

192.168.2.11 node2.a.com
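Both nodes need the same two entries, so the edit can be scripted instead of made by hand in vim. The following is a minimal sketch and not part of the original procedure: the function name `add_host_entry` is hypothetical, and the file path is passed as an argument so it can be tried on a scratch copy before touching /etc/hosts.

```shell
# Append "IP NAME" to a hosts-style file unless NAME is already listed.
# add_host_entry is a hypothetical helper name, not a standard tool.
add_host_entry() {
    file=$1
    ip=$2
    name=$3
    # Look for NAME as a whole field (after the address) on non-comment lines.
    if awk -v n="$name" '
        /^[[:space:]]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit found ? 0 : 1 }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Running `add_host_entry /etc/hosts 192.168.2.10 node1.a.com` and then the same for 192.168.2.11 and node2.a.com on each node would leave the file unchanged if the names are already present.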

Synchronize the time (set the system clock from the hardware clock)

[root@node1 ~]# hwclock -s

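Hostname configuration on node2

[root@localhost ~]# vim /etc/sysconfig/network

HOSTNAME=node2.a.com

[root@localhost ~]# hostname node2.a.com # log out and back in for the change to take effect

[root@localhost ~]# hostname # check the hostname

node2.a.com

Configure local name resolution

[root@node2 ~]# vim /etc/hosts

192.168.2.10 node1.a.com

192.168.2.11 node2.a.com

Synchronize the time (set the system clock from the hardware clock)

[root@node2 ~]# hwclock -s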

Set up passwordless copying between the nodes (SSH key authentication)

Configuration on node1:

Generate a key pair

[root@node1 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

86:36:c5:c6:70:2b:b9:13:27:b2:c6:6e:f4:2a:ce:ab root@node1.a.com

Copy the public key to node2

[root@node1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@node2.a.com

15

The authenticity of host 'node2.a.com (192.168.2.11)' can't be established.

RSA key fingerprint is 3a:64:a0:6e:d0:21:14:6c:e9:1e:84:50:89:42:05:29.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node2.a.com,192.168.2.11' (RSA) to the list of known hosts.

root@node2.a.com's password:

Now try logging into the machine, with "ssh 'root@node2.a.com'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.
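If key-based login still asks for a password after this step, one common cause is that sshd (with its default StrictModes setting) ignores an authorized_keys file when the file or the .ssh directory is writable by group or others. The check below is a small sketch under that assumption; `ssh_path_is_private` is a hypothetical name and it relies on GNU `stat`.

```shell
# Return 0 if the given path is not writable by group or others.
# ssh_path_is_private is a hypothetical helper name; requires GNU stat.
ssh_path_is_private() {
    mode=$(stat -c %a "$1") || return 2   # prints the octal mode, e.g. 700
    # 022 masks the group-write and other-write bits.
    [ $(( 0$mode & 022 )) -eq 0 ]
}
```

On the target node it could be called as `ssh_path_is_private /root/.ssh && ssh_path_is_private /root/.ssh/authorized_keys`; a non-zero result suggests tightening the modes to 700 and 600.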

Do the same on node2

[root@node2 ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

05:fb:ac:d5:83:f0:b2:12:73:1d:87:38:38:8f:2d:04 root@node2.a.com

[root@node2 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@node1.a.com

15

The authenticity of host 'node1.a.com (192.168.2.10)' can't be established.

RSA key fingerprint is 3a:64:a0:6e:d0:21:14:6c:e9:1e:84:50:89:42:05:29.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'node1.a.com,192.168.2.10' (RSA) to the list of known hosts.

root@node1.a.com's password:

Now try logging into the machine, with "ssh 'root@node1.a.com'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

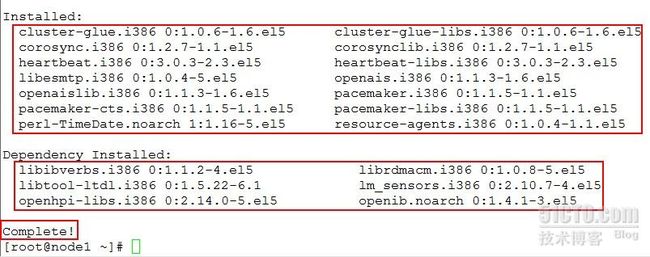

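Upload the packages needed for the lab, as shown in the figure:

Install the packages (a yum repository must be configured beforehand):

[root@node1 ~]# yum localinstall -y *.rpm --nogpgcheck

The installation succeeds, as shown below: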

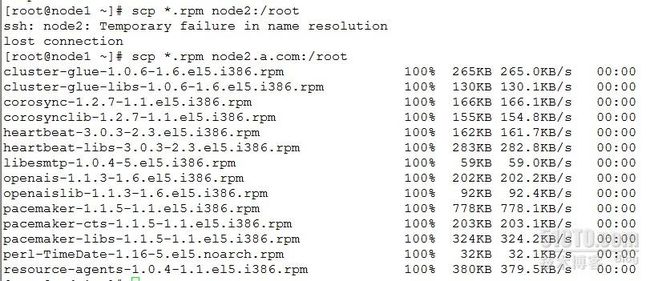

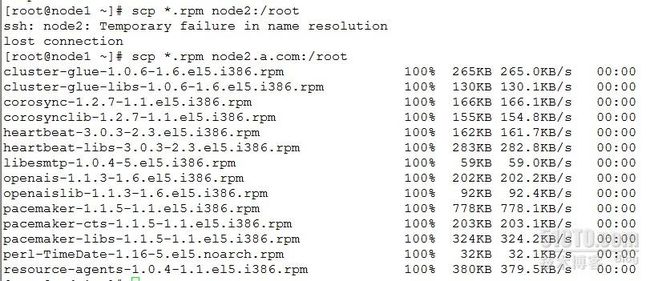

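Copy the packages to node2

[root@node1 ~]# scp *.rpm node2:/root

As shown below:

Install the packages (a yum repository must be configured beforehand):

[root@node2 ~]# yum localinstall -y *.rpm --nogpgcheck

The installation succeeds, as shown below: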

Edit the configuration file

[root@node1 ~]# cd /etc/corosync/

[root@node1 corosync]# ls

amf.conf.example corosync.conf.example service.d uidgid.d

[root@node1 corosync]# cp corosync.conf.example corosync.conf

[root@node1 corosync]# vim corosync.conf

bindnetaddr: 192.168.2.0

The settings so far cover only the underlying messaging layer. Because pacemaker will run on top of it, add the following section:

service {

ver: 0

name: pacemaker

}

Although openais itself is not used, some of its sub-options are still needed:

aisexec {

user: root

group: root

}
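The two sections above can also be appended from a script, which helps when the same file has to be prepared more than once. This is only a sketch: `add_pacemaker_sections` is a hypothetical helper name, and the function takes the file path as an argument so it can be tested on a copy of corosync.conf first.

```shell
# Append the pacemaker "service" and "aisexec" sections to a corosync.conf
# unless a "name: pacemaker" line is already present.
# add_pacemaker_sections is a hypothetical helper name.
add_pacemaker_sections() {
    conf=$1
    if grep -q '^[[:space:]]*name:[[:space:]]*pacemaker' "$conf" 2>/dev/null; then
        return 0
    fi
    cat >> "$conf" <<'EOF'

service {
        ver: 0
        name: pacemaker
}

aisexec {
        user: root
        group: root
}
EOF
}
```

Calling it twice on the same file adds the sections only once, because the second call finds the existing `name: pacemaker` line and returns.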

Create the log directory on node1 and node2

[root@node1 corosync]# mkdir /var/log/cluster

[root@node1 corosync]# ssh node2.a.com 'mkdir /var/log/cluster'

To keep other hosts from joining the cluster, members must authenticate; generate an authkey

[root@node1 corosync]# corosync-keygen

Corosync Cluster Engine Authentication key generator.

Gathering 1024 bits for key from /dev/random.

Press keys on your keyboard to generate entropy.

Writing corosync key to /etc/corosync/authkey.

Copy the authkey and the configuration file to node2

[root@node1 corosync]# scp -p authkey corosync.conf node2.a.com:/etc/corosync/

authkey 100% 128 0.1KB/s 00:00

corosync.conf

Start the service on node1 and node2

[root@node1 corosync]# service corosync start

Starting Corosync Cluster Engine (corosync): [ OK ]

[root@node1 corosync]# ssh node2.a.com 'service corosync start'

Starting Corosync Cluster Engine (corosync): [ OK ]

[root@node1 corosync]#

Verify that the corosync engine started correctly

[root@node1 corosync]# grep -i -e "corosync cluster engine" -e "configuration file" /var/log/messages

Sep 18 01:52:10 localhost smartd[3123]: Opened configuration file /etc/smartd.conf

Sep 18 01:52:10 localhost smartd[3123]: Configuration file /etc/smartd.conf was parsed, found DEVICESCAN, scanning devices

Sep 18 02:02:24 localhost smartd[2991]: Opened configuration file /etc/smartd.conf

Sep 18 02:02:24 localhost smartd[2991]: Configuration file /etc/smartd.conf was parsed, found DEVICESCAN, scanning devices

Oct 18 18:30:10 localhost smartd[2990]: Opened configuration file /etc/smartd.conf

Oct 18 18:30:10 localhost smartd[2990]: Configuration file /etc/smartd.conf was parsed, found DEVICESCAN, scanning devices

Oct 19 19:30:18 localhost smartd[2990]: Opened configuration file /etc/smartd.conf

Oct 19 19:30:18 localhost smartd[2990]: Configuration file /etc/smartd.conf was parsed, found DEVICESCAN, scanning devices

Oct 19 20:31:22 localhost corosync[3391]: [MAIN ] Corosync Cluster Engine ('1.2.7'): started and ready to provide service.

Oct 19 20:31:22 localhost corosync[3391]: [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

Oct 19 20:40:32 localhost corosync[3391]: [MAIN ] Corosync Cluster Engine exiting with status 0 at main.c:170.

Oct 19 20:40:32 localhost corosync[13918]: [MAIN ] Corosync Cluster Engine ('1.2.7'): started and ready to provide service.

Oct 19 20:40:32 localhost corosync[13918]: [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

Check that the initial membership notifications were sent

[root@node1 corosync]# grep -i totem /var/log/messages

Oct 19 20:40:33 localhost corosync[13918]: [TOTEM ] The network interface [192.168.2.10] is now up.

Oct 19 20:40:33 localhost corosync[13918]: [TOTEM ] Process pause detected for 748 ms, flushing membership messages.

Oct 19 20:40:33 localhost corosync[13918]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

Oct 19 20:41:11 localhost corosync[13918]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

Check whether any errors occurred during startup

[root@node1 corosync]# grep -i error: /var/log/messages

Check whether pacemaker has started

[root@node1 corosync]# grep -i pcmk_startup /var/log/messages

Oct 19 20:31:22 localhost corosync[3391]: [pcmk ] info: pcmk_startup: CRM: Initialized

Oct 19 20:31:22 localhost corosync[3391]: [pcmk ] Logging: Initialized pcmk_startup

Oct 19 20:31:22 localhost corosync[3391]: [pcmk ] info: pcmk_startup: Maximum core file size is: 4294967295

Oct 19 20:31:22 localhost corosync[3391]: [pcmk ] info: pcmk_startup: Service: 9

Oct 19 20:31:22 localhost corosync[3391]: [pcmk ] info: pcmk_startup: Local hostname: node1.a.com

Oct 19 20:40:33 localhost corosync[13918]: [pcmk ] info: pcmk_startup: CRM: Initialized

Oct 19 20:40:33 localhost corosync[13918]: [pcmk ] Logging: Initialized pcmk_startup

Oct 19 20:40:33 localhost corosync[13918]: [pcmk ] info: pcmk_startup: Maximum core file size is: 4294967295

Oct 19 20:40:33 localhost corosync[13918]: [pcmk ] info: pcmk_startup: Service: 9

Oct 19 20:40:33 localhost corosync[13918]: [pcmk ] info: pcmk_startup: Local hostname: node1.a.com

[root@node1 corosync]#
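The four grep checks above can be bundled into one function so they are easy to repeat on each node. This is a rough sketch: `check_corosync_log` is a hypothetical name, the patterns are taken from the log lines shown in this walkthrough, and because /var/log/messages also keeps entries from earlier starts, a match may come from an older run.

```shell
# Run the four log checks against a syslog file (default /var/log/messages).
# check_corosync_log is a hypothetical helper name.
check_corosync_log() {
    log=${1:-/var/log/messages}
    rc=0
    grep -q 'Corosync Cluster Engine.*started and ready' "$log" \
        || { echo "FAIL: engine start not logged"; rc=1; }
    grep -q 'TOTEM.*new membership was formed' "$log" \
        || { echo "FAIL: no membership formed"; rc=1; }
    grep -q 'pcmk_startup: CRM: Initialized' "$log" \
        || { echo "FAIL: pacemaker plugin not initialized"; rc=1; }
    if grep -qi 'error:' "$log"; then
        echo "FAIL: error lines present"
        rc=1
    fi
    [ "$rc" -eq 0 ] && echo "OK"
    return "$rc"
}
```

With passwordless SSH in place, the same function could be run remotely by sourcing it on node2, or simply by repeating the call there.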

Run the same verification steps on node2

[root@node2 corosync]# grep -i -e "corosync cluster engine" -e "configuration file" /var/log/messages

Sep 18 01:52:10 localhost smartd[3123]: Opened configuration file /etc/smartd.conf

Sep 18 01:52:10 localhost smartd[3123]: Configuration file /etc/smartd.conf was parsed, found DEVICESCAN, scanning devices

Sep 18 02:02:24 localhost smartd[2991]: Opened configuration file /etc/smartd.conf

Sep 18 02:02:24 localhost smartd[2991]: Configuration file /etc/smartd.conf was parsed, found DEVICESCAN, scanning devices

Oct 18 18:30:10 localhost smartd[2990]: Opened configuration file /etc/smartd.conf

Oct 18 18:30:10 localhost smartd[2990]: Configuration file /etc/smartd.conf was parsed, found DEVICESCAN, scanning devices

Oct 18 18:36:17 localhost smartd[3074]: Opened configuration file /etc/smartd.conf

Oct 18 18:36:18 localhost smartd[3074]: Configuration file /etc/smartd.conf was parsed, found DEVICESCAN, scanning devices

Oct 19 20:32:11 localhost corosync[17180]: [MAIN ] Corosync Cluster Engine ('1.2.7'): started and ready to provide service.

Oct 19 20:32:11 localhost corosync[17180]: [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

Oct 19 20:41:10 localhost corosync[17180]: [MAIN ] Corosync Cluster Engine exiting with status 0 at main.c:170.

Oct 19 20:41:10 localhost corosync[3045]: [MAIN ] Corosync Cluster Engine ('1.2.7'): started and ready to provide service.

Oct 19 20:41:10 localhost corosync[3045]: [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

[root@node2 corosync]# grep -i totem /var/log/messages

Oct 19 20:32:11 localhost corosync[17180]: [TOTEM ] Initializing transport (UDP/IP).

Oct 19 20:32:11 localhost corosync[17180]: [TOTEM ] Initializing transmit/receive security: libtomcrypt SOBER128/SHA1HMAC (mode 0).

Oct 19 20:32:11 localhost corosync[17180]: [TOTEM ] The network interface is down.

Oct 19 20:32:12 localhost corosync[17180]: [TOTEM ] Process pause detected for 1187 ms, flushing membership messages.

Oct 19 20:32:12 localhost corosync[17180]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

Oct 19 20:41:10 localhost corosync[3045]: [TOTEM ] Initializing transport (UDP/IP).

Oct 19 20:41:10 localhost corosync[3045]: [TOTEM ] Initializing transmit/receive security: libtomcrypt SOBER128/SHA1HMAC (mode 0).

Oct 19 20:41:11 localhost corosync[3045]: [TOTEM ] The network interface [192.168.2.11] is now up.

Oct 19 20:41:12 localhost corosync[3045]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

Oct 19 20:41:12 localhost corosync[3045]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

[root@node2 corosync]# grep -i error: /var/log/messages

[root@node2 corosync]# grep -i pcmk_startup /var/log/messages

Oct 19 20:32:11 localhost corosync[17180]: [pcmk ] info: pcmk_startup: CRM: Initialized

Oct 19 20:32:11 localhost corosync[17180]: [pcmk ] Logging: Initialized pcmk_startup

Oct 19 20:32:11 localhost corosync[17180]: [pcmk ] info: pcmk_startup: Maximum core file size is: 4294967295

Oct 19 20:32:11 localhost corosync[17180]: [pcmk ] info: pcmk_startup: Service: 9

Oct 19 20:32:11 localhost corosync[17180]: [pcmk ] info: pcmk_startup: Local hostname: node2.a.com

Oct 19 20:41:11 localhost corosync[3045]: [pcmk ] info: pcmk_startup: CRM: Initialized

Oct 19 20:41:11 localhost corosync[3045]: [pcmk ] Logging: Initialized pcmk_startup

Oct 19 20:41:11 localhost corosync[3045]: [pcmk ] info: pcmk_startup: Maximum core file size is: 4294967295

Oct 19 20:41:11 localhost corosync[3045]: [pcmk ] info: pcmk_startup: Service: 9

Oct 19 20:41:11 localhost corosync[3045]: [pcmk ] info: pcmk_startup: Local hostname: node2.a.com

[root@node2 corosync]#

Check the cluster membership status on any node

[root@node1 corosync]# crm status

As shown in the figure:

[root@node2 corosync]# crm status

As shown in the figure:

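Providing a highly available service

With corosync, services can be defined through two interfaces:

1. A graphical interface (hb_gui)

2. crm (a shell provided by pacemaker)

For example: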

[root@node1 corosync]# ssh node2.a.com 'date'

Fri Oct 19 21:01:16 CST 2012

[root@node1 corosync]#

[root@node2 corosync]# crm status

============

Last updated: Fri Oct 19 20:54:47 2012

Stack: openais

Current DC: node1.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

0 Resources configured.

============

Online: [ node1.a.com node2.a.com ]
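For scripted health checks it can be handy to extract the number of online nodes from this output. The sketch below assumes the `Online: [ ... ]` line format shown above; `count_online_nodes` is a hypothetical name, and it reads the status text from standard input.

```shell
# Print how many nodes appear on the "Online: [ ... ]" line of `crm status`
# output read from stdin. count_online_nodes is a hypothetical helper name.
count_online_nodes() {
    awk '/^Online:/ {
        n = 0
        for (i = 1; i <= NF; i++)
            if ($i != "Online:" && $i != "[" && $i != "]") n++
        print n
        found = 1
    }
    END { if (!found) print 0 }'
}
```

A typical call would be `crm status | count_online_nodes`, comparing the result with the expected node count of 2 for this cluster.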

[root@node2 corosync]# crm

crm(live)# configure

crm(live)configure# show

node node1.a.com

node node2.a.com

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2"

crm(live)configure# exit

bye

Check the configuration for syntax errors

[root@node1 corosync]# crm_verify -L

crm_verify[2532]: 2012/10/19_21:03:12 ERROR: unpack_resources: Resource start-up disabled since no STONITH resources have been defined

crm_verify[2532]: 2012/10/19_21:03:12 ERROR: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option

crm_verify[2532]: 2012/10/19_21:03:12 ERROR: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity

Errors found during check: config not valid

-V may provide more details

The output shows STONITH errors; in a high-availability environment this prevents any resource from being started.

STONITH can be disabled:

[root@node1 corosync]# crm

crm(live)# configure

crm(live)configure# property stonith-enabled=false

crm(live)configure# commit

crm(live)configure# show

node node1.a.com

node node2.a.com

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false"

crm(live)configure# exit

bye
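The same change can be made without entering the interactive shell, since `crm` accepts a command on its command line. This is a sketch under assumptions: `disable_stonith` is a hypothetical wrapper name, and it relies on one-shot `crm configure` calls committing on their own, which is how the crm shell normally behaves. As the crm_verify note above says, clusters with shared data need STONITH, so this is meant for a test lab only.

```shell
# Disable STONITH in one shot and re-validate the live configuration.
# Intended for a test lab only; disable_stonith is a hypothetical name.
disable_stonith() {
    if ! command -v crm >/dev/null 2>&1; then
        echo "crm shell not found" >&2
        return 127
    fi
    crm configure property stonith-enabled=false && crm_verify -L
}
```

The property is stored in the cluster-wide configuration, so running this on a single node should be enough; the other node picks up the same setting.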

[root@node2 corosync]# crm

crm(live)# configure

crm(live)configure# property stonith-enabled=false

crm(live)configure# commit

crm(live)configure# show

node node1.a.com

node node2.a.com

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false"

crm(live)configure# bye

bye

Check again

[root@node1 corosync]# crm_verify -L

No errors are reported this time.

List the STONITH device types that are supported

[root@node1 corosync]# stonith -L

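There are four types of cluster resources:

primitive: a basic local resource (runs on only one node at a time)

group: collects several resources into one group for easier management

clone: a resource that must be active on several nodes at once (for example ocfs2 or stonith; no master/slave distinction)

master: a resource with master and slave roles, such as drbd

List the resource agent classes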

[root@node1 corosync]# crm

crm(live)# configure

crm(live)configure# ra

crm(live)configure ra# list

usage: list <class> [<provider>]

crm(live)configure ra# classes

heartbeat

lsb

ocf / heartbeat pacemaker

stonith

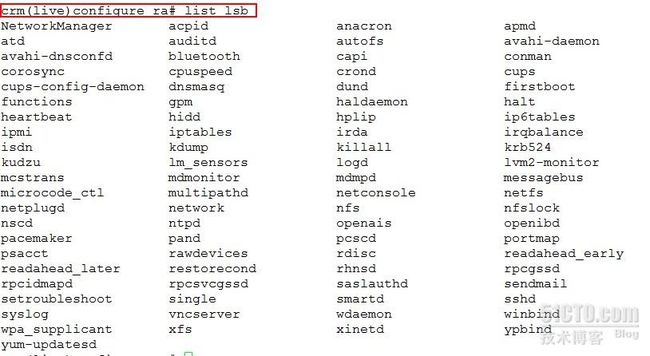

For example, list the lsb class

crm(live)configure ra# list lsb

As shown in the figure:

Example:

List the agents of the heartbeat provider in the ocf class

crm(live)configure ra# list ocf heartbeat

crm(live)configure ra# meta ocf:heartbeat:IPaddr

A resource is configured at the configure level of the crm shell

1. Start by giving the resource a name

[root@node1 corosync]# crm

crm(live)# configure

crm(live)configure# primitive webIP ocf:heartbeat:IPaddr params ip=192.168.2.20

crm(live)configure# show

node node1.a.com

node node2.a.com

primitive webIP ocf:heartbeat:IPaddr \

params ip="192.168.2.20"

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false"

crm(live)configure# commit
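The primitive above defines no monitor operation, and pacemaker only runs recurring health checks for operations that are configured. The sketch below writes the same resource with a monitor operation to a file; `write_webip_config` is a hypothetical name and the 30s interval is an assumed value, not one taken from this walkthrough.

```shell
# Write a crm configuration snippet for the virtual IP with a monitor
# operation. write_webip_config is a hypothetical helper name, and the
# 30s interval is an assumed value.
write_webip_config() {
    cat > "$1" <<'EOF'
primitive webIP ocf:heartbeat:IPaddr \
        params ip="192.168.2.20" \
        op monitor interval="30s"
EOF
}
```

After `write_webip_config /tmp/webip.crm`, the file could be applied with `crm configure load update /tmp/webip.crm`, assuming the installed crm shell supports the `load update` method.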

Install the Apache service

[root@node1 corosync]# yum install httpd -y

[root@node1 corosync]# echo "node1.a.com" >/var/www/html/index.html

[root@node2 corosync]# yum install httpd -y

[root@node2 corosync]# echo "node2.a.com" >/var/www/html/index.html

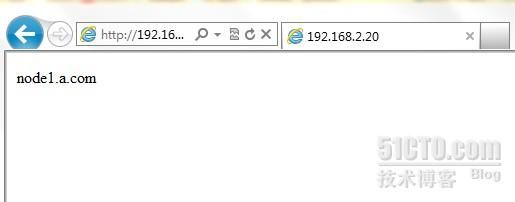

Check the status; the resource has started on node1

[root@node1 corosync]# crm

crm(live)# status

============

Last updated: Fri Oct 19 21:32:25 2012

Stack: openais

Current DC: node1.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node1.a.com node2.a.com ]

webIP (ocf::heartbeat:IPaddr): Started node1.a.com

crm(live)#

Check on node1 with ifconfig, as shown below:

Define the web service resource

[root@node1 corosync]# crm

crm(live)# ra

crm(live)ra# meta lsb:httpd

lsb:httpd

Apache is a World Wide Web server. It is used to serve \

HTML files and CGI.

Operations' defaults (advisory minimum):

start timeout=15

stop timeout=15

status timeout=15

restart timeout=15

force-reload timeout=15

monitor interval=15 timeout=15 start-delay=15

crm(live)ra# end

crm(live)# configure

crm(live)configure# primitive webserver lsb:httpd

crm(live)configure# commit

crm(live)configure# show

node node1.a.com

node node2.a.com

primitive webIP ocf:heartbeat:IPaddr \

params ip="192.168.2.20"

primitive webserver lsb:httpd

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false"

crm(live)configure#

crm(live)configure# end

crm(live)# status

============

Last updated: Fri Oct 19 21:38:00 2012

Stack: openais

Current DC: node1.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

2 Resources configured.

============

Online: [ node1.a.com node2.a.com ]

webIP (ocf::heartbeat:IPaddr): Started node1.a.com

webserver (lsb:httpd): Started node2.a.com

Check the httpd service status on node1 and node2

[root@node1 ~]# service httpd status

httpd is stopped

[root@node2 ~]# service httpd status

httpd (pid 3334) is running...

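The VIP is on node1, but the httpd service was started on node2, which is clearly wrong. Define a group so that both resources run on the same node: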

[root@node1 ~]# crm

crm(live)# configure

crm(live)configure# group web webIP webserver

crm(live)configure# show

node node1.a.com

node node2.a.com

primitive webIP ocf:heartbeat:IPaddr \

params ip="192.168.2.20"

primitive webserver lsb:httpd

group web webIP webserver

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false"

crm(live)configure# commit

crm(live)configure# end

Check the status

crm(live)# status

============

Last updated: Sat Oct 20 16:48:00 2012

Stack: openais

Current DC: node1.a.com - partition with quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node1.a.com node2.a.com ]

Resource Group: web

webIP (ocf::heartbeat:IPaddr): Started node1.a.com

webserver (lsb:httpd): Started node1.a.com

crm(live)#

This time the result is correct: both resources run on node1.
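
The group works because a Pacemaker group keeps its members on the same node and starts them in the listed order. As a rough sketch of the same idea, and assuming the crm shell syntax that ships with this Pacemaker 1.1.5 build, explicit colocation and order constraints could be used instead of the group (not in addition to it). The function name and the two constraint IDs below are made-up names for illustration.

```shell
#!/bin/sh
# Hypothetical alternative to "group web webIP webserver".
# The constraint IDs (webserver-with-webIP, webIP-before-webserver) are
# illustrative names only; the resource names come from the walkthrough.
pin_webserver_to_webIP() {
    # Run webserver only on the node that holds webIP.
    crm configure colocation webserver-with-webIP inf: webserver webIP &&
    # Bring up webIP first, then start webserver.
    crm configure order webIP-before-webserver inf: webIP webserver
}

# Usage on a cluster node, as root:
#   pin_webserver_to_webIP
```

For two resources the group is the shorter form; separate constraints mainly help when the placement and the start order need to be controlled independently.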

Test from a client:

As shown in the figure:
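
If no browser is available on the client, a command-line check against the virtual IP is one option. This is a minimal sketch that assumes curl is installed on the client; `check_vip` is a hypothetical helper name, and the 3-second timeout is an arbitrary choice.

```shell
#!/bin/sh
# check_vip URL: hypothetical helper that prints the HTTP status code
# returned by URL. The exit status is non-zero if the request fails or
# takes longer than 3 seconds.
check_vip() {
    curl -s -o /dev/null --max-time 3 -w '%{http_code}' "$1"
}

# Usage from a client machine:
#   check_vip http://192.168.2.20/
```

Because the client only talks to 192.168.2.20, the same command can be repeated after a failover without changing the address.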

Stop corosync on node1

[root@node1 ~]# service corosync stop

Signaling Corosync Cluster Engine (corosync) to terminate: [ OK ]

Waiting for corosync services to unload:........ [ OK ]

Check the status on node1 and node2

[root@node1 ~]# crm status

Connection to cluster failed: connection failed

[root@node2 ~]# crm status

============

Last updated: Sat Oct 20 16:52:52 2012

Stack: openais

Current DC: node2.a.com - partition WITHOUT quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node2.a.com ]

OFFLINE: [ node1.a.com ]

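As the output shows, node2's partition no longer has quorum ("partition WITHOUT quorum"), and no resources are listed as started.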
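
The arithmetic behind this can be sketched as follows, assuming quorum means a strict majority of the expected votes. With 2 expected votes the threshold is 2, so a single surviving node (1 vote) cannot reach it. The function names are hypothetical.

```shell
#!/bin/sh
# quorum_threshold EXPECTED: smallest vote count that is a strict
# majority of EXPECTED votes (assumption: quorum = more than half).
quorum_threshold() {
    echo $(( $1 / 2 + 1 ))
}

# has_quorum EXPECTED LIVE: succeeds if LIVE votes reach the threshold.
has_quorum() {
    [ "$2" -ge "$(quorum_threshold "$1")" ]
}

# The situation in this experiment: 2 expected votes, 1 node left.
if has_quorum 2 1; then
    echo "quorum"
else
    echo "no quorum"
fi
```

This is why a two-node cluster loses quorum as soon as either node goes down, whereas a three-node cluster (threshold 2) can lose one node and keep it.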

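Disable the quorum check

The no-quorum-policy property accepts the following values:

ignore (ignore the loss of quorum and keep managing resources)

freeze (resources that are already running keep running, but resources that are not running cannot be started)

stop (the default: stop all resources in the affected partition)

suicide (fence all nodes in the affected partition)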

Next, start the corosync service on node 1 again and change the quorum policy

[root@node1 ~]# service corosync start

Starting Corosync Cluster Engine (corosync): [ OK ]

[root@node1 ~]# crm

crm(live)# configure

crm(live)configure# property no-quorum-policy=ignore

crm(live)configure# commit

crm(live)configure# show

node node1.a.com

node node2.a.com

primitive webIP ocf:heartbeat:IPaddr \

params ip="192.168.2.20"

primitive webserver lsb:httpd

group web webIP webserver

property $id="cib-bootstrap-options" \

dc-version="1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"

crm(live)configure# end

crm(live)# bye

bye

[root@node1 ~]# service corosync stop

Signaling Corosync Cluster Engine (corosync) to terminate: [ OK ]

Waiting for corosync services to unload:...... [ OK ]

Check the status on node2

[root@node2 ~]# crm status

============

Last updated: Sat Oct 20 17:02:25 2012

Stack: openais

Current DC: node2.a.com - partition WITHOUT quorum

Version: 1.1.5-1.1.el5-01e86afaaa6d4a8c4836f68df80ababd6ca3902f

2 Nodes configured, 2 expected votes

1 Resources configured.

============

Online: [ node2.a.com ]

OFFLINE: [ node1.a.com ]

Resource Group: web

webIP (ocf::heartbeat:IPaddr): Started node2.a.com

webserver (lsb:httpd): Started node2.a.com

[root@node2 ~]#

This is the result we want: with node1 offline, both resources now run on node2.

Test:

As shown in the figure:

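The RPM packages required for this experiment and the compiled document are attached at the end of the article. Because of the upload size limit, they were uploaded in two parts.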