Hadoop Deployment

1. Installing Fully Distributed Hadoop

1.1 Preparation

1.1.1 Planning

This example uses six servers (CentOS 6.5, 64-bit), laid out as follows:

| IP address | Hostname | Processes / roles |
|---|---|---|
| 192.168.40.30 | master.dbq168.com | NameNode, JobTracker, Hive, HBase |
| 192.168.40.31 | snn.dbq168.com | SecondaryNameNode |
| 192.168.40.32 | datanode-1.dbq168.com | DataNode, TaskTracker, ZooKeeper, RegionServer |
| 192.168.40.33 | datanode-2.dbq168.com | DataNode, TaskTracker, ZooKeeper, RegionServer |
| 192.168.40.35 | datanode-3.dbq168.com | DataNode, TaskTracker, ZooKeeper, RegionServer |
| 192.168.40.34 | mysql.dbq168.com | MySQL |

1.1.2 Versions

Software used:

    CentOS release 6.5 (Final)
    kernel: 2.6.32-431.el6.x86_64
    JDK: jdk-7u45-linux-x64.gz
    Hadoop: hadoop-2.6.1.tar.gz
    Hive: apache-hive-1.2.1-bin.tar.gz
    HBase: hbase-1.1.2-bin.tar.gz
    ZooKeeper: zookeeper-3.4.6.tar.gz

1.1.3 The hosts file

Set the contents of /etc/hosts on every node in the cluster as follows:

    192.168.40.30 master master.dbq168.com
    192.168.40.31 snn snn.dbq168.com
    192.168.40.32 datanode-1 datanode-1.dbq168.com
    192.168.40.33 datanode-2 datanode-2.dbq168.com
    192.168.40.35 datanode-3 datanode-3.dbq168.com
    192.168.40.34 mysql mysql.dbq168.com
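Because the same entries have to be identical on every node, it can help to generate them from a single list instead of typing them by hand. The following is a minimal sketch, not part of the original procedure: render_hosts is a hypothetical helper name, and it assumes each input line is an "IP short-name" pair.

```shell
#!/bin/sh
# render_hosts DOMAIN: hypothetical helper. Reads "IP SHORTNAME" pairs on stdin
# and prints /etc/hosts lines in the "IP short-name FQDN" layout used above.
render_hosts() {
    domain=$1
    while read -r ip short; do
        # Skip blank lines and comment lines.
        case $ip in ''|'#'*) continue ;; esac
        printf '%s %s %s.%s\n' "$ip" "$short" "$short" "$domain"
    done
}

# Print the entries for this cluster; review them before adding to /etc/hosts.
render_hosts dbq168.com <<'EOF'
192.168.40.30 master
192.168.40.31 snn
192.168.40.32 datanode-1
192.168.40.33 datanode-2
192.168.40.35 datanode-3
192.168.40.34 mysql
EOF
```

The output can be redirected to a file and copied to each node, which keeps the six machines from drifting apart.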

1.1.4 Passwordless SSH login

This is mainly for ease of administration; alternatively, use an automation tool such as Ansible.

    [root@master ~]# ssh-keygen -t rsa -P ''
    [root@master ~]# ssh-copy-id -i .ssh/id_rsa.pub root@master
    [root@master ~]# ssh-copy-id -i .ssh/id_rsa.pub root@snn
    [root@master ~]# ssh-copy-id -i .ssh/id_rsa.pub root@datanode-1
    [root@master ~]# ssh-copy-id -i .ssh/id_rsa.pub root@datanode-2
    [root@master ~]# ssh-copy-id -i .ssh/id_rsa.pub root@datanode-3
    [root@master ~]# ssh-copy-id -i .ssh/id_rsa.pub root@mysql

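First, on every node in the cluster, create the hadoop user that will run the Hadoop processes and set its password. Either run the two commands on each node:

    # useradd hadoop
    # echo "hadoop" | passwd --stdin hadoop

or do it for all nodes from master in one loop:

    [root@master ~]# for i in 30 31 32 33 35; do ssh 192.168.40.$i "useradd hadoop && echo 'hadoop' | passwd --stdin hadoop"; done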
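The `for i in 30 31 32 33 35` loop recurs throughout this guide. As a rough sketch of how it could be wrapped once and previewed before touching any host, the helper below prints the ssh invocations in dry-run mode. The names on_nodes, NODES and DRY_RUN are assumptions introduced for this illustration only.

```shell
#!/bin/sh
# Hypothetical helper; NODES and DRY_RUN are names introduced for this sketch.
NODES=${NODES:-"192.168.40.30 192.168.40.31 192.168.40.32 192.168.40.33 192.168.40.35"}

# on_nodes COMMAND: run COMMAND over ssh on every host listed in NODES.
# When DRY_RUN is 1, the ssh invocations are printed instead of executed.
on_nodes() {
    for node in $NODES; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "ssh $node $1"
        else
            ssh "$node" "$1" || echo "command failed on $node" >&2
        fi
    done
}

# Preview what would run; set DRY_RUN=0 to execute for real.
DRY_RUN=1
on_nodes 'id hadoop'
```

Printing first makes it easier to spot a wrong host list before a command such as useradd runs five times.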

Next, configure the hadoop user on the master node so that it can log in to the other nodes with key-based authentication; this is needed to start processes and to perform monitoring and other administrative tasks. Run the following commands on the master node only.

    [root@master ~]# su - hadoop
    [hadoop@master ~]$ ssh-keygen -t rsa -P ''
    [hadoop@master ~]$ ssh-copy-id -i .ssh/id_rsa.pub hadoop@datanode-1
    [hadoop@master ~]$ ssh-copy-id -i .ssh/id_rsa.pub hadoop@datanode-2
    [hadoop@master ~]$ ssh-copy-id -i .ssh/id_rsa.pub hadoop@snn
    ......

Test by running a command remotely:

    [hadoop@master ~]$ ssh snn 'ls /home/hadoop/ -la'
    [hadoop@master ~]$ ssh datanode-1 'ls /home/hadoop/ -la'
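To confirm key-based login for every node in one pass, something like the following sketch could be used. check_ssh and SSH_BIN are hypothetical names introduced here; the ssh options BatchMode and ConnectTimeout are standard OpenSSH client options, and BatchMode=yes makes ssh fail instead of prompting for a password.

```shell
#!/bin/sh
# SSH_BIN is an assumption of this sketch: it lets the ssh client be swapped
# out (for example with a stub) without changing the function.
SSH_BIN=${SSH_BIN:-ssh}

# check_ssh HOST...: report which hosts accept non-interactive login.
# Returns non-zero if at least one host fails.
check_ssh() {
    failed=0
    for host in "$@"; do
        if "$SSH_BIN" -o BatchMode=yes -o ConnectTimeout=5 "$host" true 2>/dev/null; then
            echo "OK $host"
        else
            echo "FAIL $host"
            failed=1
        fi
    done
    return "$failed"
}

# Usage (as the hadoop user on master):
#   check_ssh snn datanode-1 datanode-2 datanode-3
```

A FAIL line usually means ssh-copy-id has not been run for that host yet, or that the host name does not resolve.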

1.2 Installing the JDK

The archive unpacks to /usr/local/jdk1.7.0_45, so the symbolic link must point to that directory.

    [root@master ~]# for i in 30 31 32 33 35; do scp jdk-7u45-linux-x64.gz 192.168.40.$i:/usr/local/; done
    [root@master ~]# for i in 30 31 32 33 35; do ssh 192.168.40.$i 'tar -xf /usr/local/jdk-7u45-linux-x64.gz -C /usr/local/'; done
    [root@master ~]# for i in 30 31 32 33 35; do ssh 192.168.40.$i 'ln -sv /usr/local/jdk1.7.0_45 /usr/local/java'; done

Edit /etc/profile.d/java.sh and add the following:

    JAVA_HOME=/usr/local/java/
    PATH=$JAVA_HOME/bin:$PATH
    export JAVA_HOME PATH

Copy the environment file to the other nodes:

    [root@master ~]# for i in 30 31 32 33 35; do scp /etc/profile.d/java.sh 192.168.40.$i:/etc/profile.d/; done

Switch to the hadoop user and run the following command to verify that the JDK environment is ready.

    # su - hadoop
    $ java -version
    java version "1.7.0_45"
    Java(TM) SE Runtime Environment (build 1.7.0_45-b18)
    Java HotSpot(TM) 64-Bit Server VM (build 24.45-b08, mixed mode)

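1.3 Installing Hadoop

Hadoop generally has three run modes: local (standalone) mode, pseudo-distributed mode, and fully distributed mode.

Local mode is Hadoop's default. Hadoop uses the local file system instead of the distributed file system and starts no Hadoop daemons; map and reduce run as different parts of the same process. Hadoop in local mode therefore runs only on the local machine, which makes it suitable for developing and debugging MapReduce applications without the complexity of a cluster.

Pseudo-distributed mode: Hadoop runs all its daemons on a single host, but it uses the distributed file system, and each job runs as a separate process managed by the JobTracker service. Because a pseudo-distributed cluster has only one node, HDFS block replication is limited to a single replica, and the SecondaryNameNode and slave daemons also run on the local host. Apart from not being truly distributed, program execution follows the same logic as in a distributed setup, so developers commonly use this mode to test their programs.

Fully distributed mode: this is where Hadoop shows its real power. Because ZooKeeper-based high availability relies on an odd-numbered quorum, a fully distributed environment needs at least three nodes.

This document installs Hadoop in fully distributed mode.

Hadoop must be installed on every node in the cluster so that each node can start the daemons its configuration calls for. The installation steps below are therefore carried out on every node.

    # tar xf hadoop-2.6.1.tar.gz -C /usr/local
    # for i in 30 31 32 33 35; do ssh 192.168.40.$i 'chown -R hadoop:hadoop /usr/local/hadoop-2.6.1'; done
    # for i in 30 31 32 33 35; do ssh 192.168.40.$i 'ln -sv /usr/local/hadoop-2.6.1 /usr/local/hadoop'; done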

On master:

Set the HADOOP_HOME environment variable to the Hadoop installation directory and make the setting permanent. Edit /etc/profile.d/hadoop.sh and add the following (the variable that is assigned, HADOOP_HOME, is also the one that must be exported):

    HADOOP_HOME=/usr/local/hadoop
    PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH
    export HADOOP_HOME PATH

Switch to the hadoop user and run the following command to verify that the Hadoop environment is ready.

    $ hadoop version
    Hadoop 2.6.1
    Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r b4d876d837b830405ccdb6af94742f99d49f9c04
    Compiled by jenkins on 2015-09-16T21:07Z
    Compiled with protoc 2.5.0
    From source with checksum ba9a9397365e3ec2f1b3691b52627f
    This command was run using /usr/local/hadoop-2.6.1/share/hadoop/common/hadoop-common-2.6.1.jar

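1.4 Configuring Hadoop

The Hadoop configuration is identical on every node in the cluster; at startup Hadoop uses the configuration files to determine the role of the current node and the daemons it has to run. The configuration changes below must therefore end up on every node (step 7 copies the edited files from master).

(1) Edit core-site.xml so that its <configuration> element contains the following

    [hadoop@master ~]$ cd /usr/local/hadoop/etc/hadoop/
    [hadoop@master hadoop]$ vim core-site.xml

    <property>
      <name>fs.default.name</name>
      <value>hdfs://master.dbq168.com:8020</value>
      <final>true</final>
      <description>The name of the default file system. A URI whose scheme and authority determine the FileSystem implementation.</description>
    </property>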

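(2) Edit mapred-site.xml so that its <configuration> element contains the following

    [hadoop@master ~]$ cd /usr/local/hadoop/etc/hadoop/
    [hadoop@master hadoop]$ cp mapred-site.xml.template mapred-site.xml

    <property>
      <name>mapred.job.tracker</name>
      <value>master.dbq168.com:8021</value>
      <final>true</final>
      <description>The host and port that the MapReduce JobTracker runs at.</description>
    </property>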

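(3) Edit hdfs-site.xml so that its <configuration> element contains the following

    [hadoop@master hadoop]$ cd /usr/local/hadoop/etc/hadoop

    <property>
      <name>dfs.replication</name>
      <value>3</value>
      <description>The actual number of replications can be specified when the file is created.</description>
    </property>
    <property>
      <name>dfs.data.dir</name>
      <value>/hadoop/data</value>
      <final>true</final>
      <description>The directories where the datanode stores blocks.</description>
    </property>
    <property>
      <name>dfs.name.dir</name>
      <value>/hadoop/name</value>
      <final>true</final>
      <description>The directories where the namenode stores its persistent metadata.</description>
    </property>
    <property>
      <name>fs.checkpoint.dir</name>
      <value>/hadoop/namesecondary</value>
      <final>true</final>
      <description>The directories where the secondarynamenode stores checkpoints.</description>
    </property>

Note: with this configuration, /hadoop/ must be created on every node beforehand and the hadoop user must have full permissions on it. Alternatively, omit the last three properties and let Hadoop use its default locations.

    [root@master ~]# for i in 30 31 32 33 35; do ssh 192.168.40.$i 'mkdir -pv /hadoop/{name,data,namesecondary}; chown -R hadoop:hadoop /hadoop'; done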
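Each setting in these files is a property element with a name, a value and an optional final flag. As an illustration of that structure only, the sketch below renders the hdfs-site.xml settings from step (3) with a small shell function. xml_property and render_hdfs_site are hypothetical helper names, not Hadoop tools, and the function does no XML escaping, so it is only suitable for simple values like the ones used here.

```shell
#!/bin/sh
# xml_property NAME VALUE [FINAL]: hypothetical helper that prints one
# Hadoop-style <property> element. Values are emitted verbatim (no escaping).
xml_property() {
    printf '  <property>\n'
    printf '    <name>%s</name>\n' "$1"
    printf '    <value>%s</value>\n' "$2"
    if [ -n "${3:-}" ]; then
        printf '    <final>%s</final>\n' "$3"
    fi
    printf '  </property>\n'
}

# Render the four hdfs-site.xml settings from this section to standard output.
render_hdfs_site() {
    printf '<?xml version="1.0"?>\n<configuration>\n'
    xml_property dfs.replication 3
    xml_property dfs.data.dir /hadoop/data true
    xml_property dfs.name.dir /hadoop/name true
    xml_property fs.checkpoint.dir /hadoop/namesecondary true
    printf '</configuration>\n'
}

render_hdfs_site
```

Generating the file this way is optional; editing it by hand as shown above gives the same result.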

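(4) Specify the hostname or IP address of the SecondaryNameNode.

Since Hadoop 2.2.0 there is no masters file any more; instead, add the following property to hdfs-site.xml (values as used in this example):

    <property>
      <name>dfs.secondary.http.address</name>
      <value>snn:50090</value>
      <description>The NameNode gets the newest fsimage via dfs.secondary.http.address.</description>
    </property>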

(5) Edit /usr/local/hadoop/etc/hadoop/slaves and list the hostname or IP address of each DataNode, one per line. This example has three DataNodes:

    datanode-1
    datanode-2
    datanode-3

(6) Initialize HDFS by formatting the NameNode; run the following command on master

    $ hadoop namenode -format

(7) Copy the configuration files to the other nodes:

    [hadoop@master hadoop]$ for i in 30 31 32 33 35; do scp mapred-site.xml core-site.xml slaves yarn-site.xml hdfs-site.xml 192.168.40.$i:/usr/local/hadoop/etc/hadoop/; done

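1.5 Starting Hadoop

Running Hadoop's start-all.sh script on the master node starts the whole cluster.

    [hadoop@master ~]$ start-all.sh

Its output looks like this:

    starting namenode, logging to /usr/local/hadoop/logs/hadoop-hadoop-namenode-master.dbq168.com.out
    datanode.dbq168.com: starting datanode, logging to /usr/local/hadoop/logs/hadoop-hadoop-datanode-datanode.dbq168.com.out
    snn.dbq168.com: starting secondarynamenode, logging to /usr/local/hadoop/logs/hadoop-hadoop-secondarynamenode-node3.dbq168.com.out
    starting jobtracker, logging to /usr/local/hadoop/logs/hadoop-hadoop-jobtracker-master.dbq168.com.out
    datanode.dbq168.com: starting tasktracker, logging to /usr/local/hadoop/logs/hadoop-hadoop-tasktracker-datanode.dbq168.com.out

To stop all Hadoop daemons, use the stop-all.sh script.

In a larger cluster, however, the NameNode has to keep the file and block metadata of the entire namespace in memory, so it needs a lot of memory; and in an environment running many MapReduce jobs, the JobTracker node uses a great deal of memory and CPU. In that scenario the NameNode and the JobTracker usually run on different physical hosts, which also means that the master and slave nodes of HDFS and those of MapReduce belong to different topologies. The HDFS daemons are then started with start-dfs.sh on the NameNode node, and the MapReduce daemons with start-mapred.sh on the JobTracker node. Both scripts in fact use hadoop-daemons.sh to start the processes. Note that in Hadoop 2.x, the version installed here, YARN's ResourceManager and NodeManager take over the roles of the JobTracker and TaskTracker (as the jps output in section 1.7 shows), and the corresponding script is start-yarn.sh rather than start-mapred.sh.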

1.6 Managing the JobHistory Server

Starting the JobHistory Server lets you view information about the jobs run on the cluster through a web console. Run the following command:

    [hadoop@master logs]$ /usr/local/hadoop/sbin/mr-jobhistory-daemon.sh start historyserver

It listens on port 19888 by default.

Visit http://master:19888/ to view the job execution history.

To stop the JobHistory Server, run:

    [hadoop@master logs]$ /usr/local/hadoop/sbin/mr-jobhistory-daemon.sh stop historyserver

1.7 Verification

Master:

    [hadoop@master hadoop]$ jps
    14846 NameNode
    15102 ResourceManager
    15345 Jps
    12678 JobHistoryServer

DataNode:

    [hadoop@datanode ~]$ jps
    12647 Jps
    12401 DataNode
    12523 NodeManager

SecondaryNameNode:

    [hadoop@snn ~]$ jps
    11980 SecondaryNameNode
    12031 Jps

List the ports the server is listening on:

    [hadoop@master ~]$ netstat -tunlp | grep java
    (Not all processes could be identified, non-owned process info will not be shown, you would have to be root to see it all.)
    tcp 0 0 192.168.40.30:8020 0.0.0.0:* LISTEN 1294/java
    tcp 0 0 0.0.0.0:50070 0.0.0.0:* LISTEN 1294/java
    tcp 0 0 ::ffff:192.168.40.30:8088 :::* LISTEN 1551/java
    tcp 0 0 ::ffff:192.168.40.30:8030 :::* LISTEN 1551/java
    tcp 0 0 ::ffff:192.168.40.30:8031 :::* LISTEN 1551/java
    tcp 0 0 ::ffff:192.168.40.30:16000 :::* LISTEN 2008/java
    tcp 0 0 ::ffff:192.168.40.30:8032 :::* LISTEN 1551/java
    tcp 0 0 ::ffff:192.168.40.30:8033 :::* LISTEN 1551/java
    tcp 0 0 :::16010 :::* LISTEN 2008/java
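Reading the port list by eye gets tedious once several services are running. As a rough sketch, the function below takes netstat output on standard input and reports any expected port that has no LISTEN entry. missing_ports is a hypothetical helper name, and the parsing assumes the Linux net-tools column layout shown above (local address in column 4, state in column 6).

```shell
#!/bin/sh
# missing_ports PORT...: hypothetical helper. Reads `netstat -tnl` style output
# on stdin and prints every expected port that is not in LISTEN state.
# Returns non-zero if at least one port is missing.
missing_ports() {
    # The port is the text after the last ":" of the local address column.
    listening=$(awk '$1 ~ /^tcp/ && $6 == "LISTEN" { n = split($4, p, ":"); print p[n] }')
    rc=0
    for port in "$@"; do
        if ! printf '%s\n' "$listening" | grep -qx "$port"; then
            echo "not listening: $port"
            rc=1
        fi
    done
    return "$rc"
}

# Usage on master, checking the NameNode and ResourceManager ports seen above:
#   netstat -tnl | missing_ports 8020 50070 8088
```

Splitting on the last colon handles both the IPv4 form (192.168.40.30:8020) and the IPv6-mapped form (::ffff:192.168.40.30:8088).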

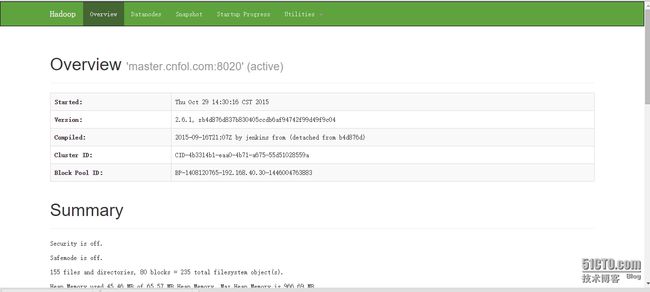

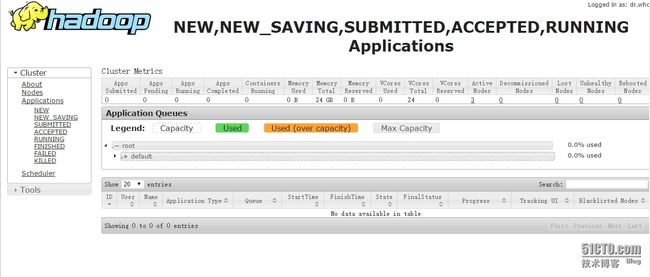

Open in a browser:

    http://master:8088     # view cluster information and configuration (YARN ResourceManager UI)
    http://master:50070    # NameNode web UI, a dashboard for HDFS

1.8 Testing Hadoop

Hadoop provides the MapReduce programming framework, whose parallel processing power is realized by writing Map and Reduce programs. To make system testing easier, Hadoop ships a sample word-count application. It is located under $HADOOP_HOME/share/hadoop/mapreduce/ in a file named like hadoop-mapreduce-examples-*.jar. Besides word count, this jar also contains distributed implementations of grep and other programs, which can be listed with the following command.

    [hadoop@master ~]$ hadoop jar /usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.1.jar
    An example program must be given as the first argument.
    Valid program names are:
      aggregatewordcount: An Aggregate based map/reduce program that counts the words in the input files.
      aggregatewordhist: An Aggregate based map/reduce program that computes the histogram of the words in the input files.
      bbp: A map/reduce program that uses Bailey-Borwein-Plouffe to compute exact digits of Pi.
      dbcount: An example job that count the pageview counts from a database.
      distbbp: A map/reduce program that uses a BBP-type formula to compute exact bits of Pi.
      grep: A map/reduce program that counts the matches of a regex in the input.
      join: A job that effects a join over sorted, equally partitioned datasets
      multifilewc: A job that counts words from several files.
      pentomino: A map/reduce tile laying program to find solutions to pentomino problems.
      pi: A map/reduce program that estimates Pi using a quasi-Monte Carlo method.
      randomtextwriter: A map/reduce program that writes 10GB of random textual data per node.
      randomwriter: A map/reduce program that writes 10GB of random data per node.
      secondarysort: An example defining a secondary sort to the reduce.
      sort: A map/reduce program that sorts the data written by the random writer.
      sudoku: A sudoku solver.
      teragen: Generate data for the terasort
      terasort: Run the terasort
      teravalidate: Checking results of terasort
      wordcount: A map/reduce program that counts the words in the input files.
      wordmean: A map/reduce program that counts the average length of the words in the input files.
      wordmedian: A map/reduce program that counts the median length of the words in the input files.
      wordstandarddeviation: A map/reduce program that counts the standard deviation of the length of the words in the input files.

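Next, use wordcount to count word occurrences:

Put two test files into the wc-in directory on HDFS, then run the wordcount program to count how often each word occurs in them. First create the wc-in directory and copy the files into HDFS (the -p option also creates the user's HDFS home directory if it does not exist yet).

    $ hadoop fs -mkdir -p wc-in
    $ hadoop fs -put /etc/rc.d/init.d/functions /etc/profile wc-in

Then start the distributed job. WC-OUT is the directory in which the reduce tasks write their result files; it must not exist beforehand, otherwise the job fails with an error.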

    [hadoop@master ~]$ hadoop jar /usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.1.jar wordcount wc-in WC-OUT
    15/11/05 15:18:59 INFO Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id
    15/11/05 15:18:59 INFO jvm.JvmMetrics: Initializing JVM Metrics with processName=JobTracker, sessionId=
    15/11/05 15:19:00 INFO input.FileInputFormat: Total input paths to process : 2
    15/11/05 15:19:00 INFO mapreduce.JobSubmitter: number of splits:2
    15/11/05 15:19:01 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_local244789678_0001
    15/11/05 15:19:02 INFO mapreduce.Job: The url to track the job: http://localhost:8080/
    15/11/05 15:19:02 INFO mapreduce.Job: Running job: job_local244789678_0001
    15/11/05 15:19:02 INFO mapred.LocalJobRunner: OutputCommitter set in config null
    15/11/05 15:19:02 INFO mapred.LocalJobRunner: OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter
    15/11/05 15:19:02 INFO mapred.LocalJobRunner: Waiting for map tasks
    15/11/05 15:19:02 INFO mapred.LocalJobRunner: Starting task: attempt_local244789678_0001_m_000000_0
    15/11/05 15:19:02 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]
    15/11/05 15:19:02 INFO mapred.MapTask: Processing split: hdfs://master.dbq168.com:8020/user/hadoop/wc-in/functions:0+18586
    15/11/05 15:19:03 INFO mapreduce.Job: Job job_local244789678_0001 running in uber mode : false
    15/11/05 15:19:03 INFO mapreduce.Job: map 0% reduce 0%
    15/11/05 15:19:03 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)
    15/11/05 15:19:03 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100
    15/11/05 15:19:03 INFO mapred.MapTask: soft limit at 83886080
    15/11/05 15:19:03 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600
    15/11/05 15:19:03 INFO mapred.MapTask: kvstart = 26214396; length = 6553600
    15/11/05 15:19:03 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer
    15/11/05 15:19:03 INFO mapred.LocalJobRunner:
    15/11/05 15:19:03 INFO mapred.MapTask: Starting flush of map output
    15/11/05 15:19:03 INFO mapred.MapTask: Spilling map output
    15/11/05 15:19:03 INFO mapred.MapTask: bufstart = 0; bufend = 27567; bufvoid = 104857600
    15/11/05 15:19:03 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 26203416(104813664); length = 10981/6553600
    15/11/05 15:19:04 INFO mapred.MapTask: Finished spill 0
    15/11/05 15:19:04 INFO mapred.Task: Task:attempt_local244789678_0001_m_000000_0 is done. And is in the process of committing
    15/11/05 15:19:04 INFO mapred.LocalJobRunner: map
    15/11/05 15:19:04 INFO mapred.Task: Task 'attempt_local244789678_0001_m_000000_0' done.
    15/11/05 15:19:04 INFO mapred.LocalJobRunner: Finishing task: attempt_local244789678_0001_m_000000_0
    15/11/05 15:19:04 INFO mapred.LocalJobRunner: Starting task: attempt_local244789678_0001_m_000001_0
    15/11/05 15:19:04 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]
    15/11/05 15:19:04 INFO mapred.MapTask: Processing split: hdfs://master.dbq168.com:8020/user/hadoop/wc-in/profile:0+1796
    15/11/05 15:19:04 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)
    15/11/05 15:19:04 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100
    15/11/05 15:19:04 INFO mapred.MapTask: soft limit at 83886080
    15/11/05 15:19:04 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600
    15/11/05 15:19:04 INFO mapred.MapTask: kvstart = 26214396; length = 6553600
    15/11/05 15:19:04 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer
    15/11/05 15:19:04 INFO mapred.LocalJobRunner:
    15/11/05 15:19:04 INFO mapred.MapTask: Starting flush of map output
    15/11/05 15:19:04 INFO mapred.MapTask: Spilling map output
    15/11/05 15:19:04 INFO mapred.MapTask: bufstart = 0; bufend = 2573; bufvoid = 104857600
    15/11/05 15:19:04 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 26213368(104853472); length = 1029/6553600
    15/11/05 15:19:04 INFO mapreduce.Job: map 50% reduce 0%
    15/11/05 15:19:04 INFO mapred.MapTask: Finished spill 0
    15/11/05 15:19:04 INFO mapred.Task: Task:attempt_local244789678_0001_m_000001_0 is done. And is in the process of committing
    15/11/05 15:19:04 INFO mapred.LocalJobRunner: map
    15/11/05 15:19:04 INFO mapred.Task: Task 'attempt_local244789678_0001_m_000001_0' done.
    15/11/05 15:19:04 INFO mapred.LocalJobRunner: Finishing task: attempt_local244789678_0001_m_000001_0
    15/11/05 15:19:04 INFO mapred.LocalJobRunner: map task executor complete.
    15/11/05 15:19:04 INFO mapred.LocalJobRunner: Waiting for reduce tasks
    15/11/05 15:19:04 INFO mapred.LocalJobRunner: Starting task: attempt_local244789678_0001_r_000000_0
    15/11/05 15:19:04 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]
    15/11/05 15:19:04 INFO mapred.ReduceTask: Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@775b8754
    15/11/05 15:19:04 INFO reduce.MergeManagerImpl: MergerManager: memoryLimit=363285696, maxSingleShuffleLimit=90821424, mergeThreshold=239768576, ioSortFactor=10, memToMemMergeOutputsThreshold=10
    15/11/05 15:19:04 INFO reduce.EventFetcher: attempt_local244789678_0001_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events
    15/11/05 15:19:04 INFO reduce.LocalFetcher: localfetcher#1 about to shuffle output of map attempt_local244789678_0001_m_000001_0 decomp: 2054 len: 2058 to MEMORY
    15/11/05 15:19:04 INFO reduce.InMemoryMapOutput: Read 2054 bytes from map-output for attempt_local244789678_0001_m_000001_0
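Because the job refuses to start when its output directory already exists, re-running the test means removing the old results first. A minimal sketch of a wrapper that does this is shown below. run_wordcount and EXAMPLES_JAR are hypothetical names introduced here; the hadoop fs -test -d and hadoop fs -rm -r subcommands are the standard file system shell commands. Note that the wrapper deletes the previous output without asking.

```shell
#!/bin/sh
# Path of the examples jar used in this section.
EXAMPLES_JAR=/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.1.jar

# run_wordcount INPUT OUTPUT: hypothetical wrapper that removes a stale output
# directory (if any) and then submits the wordcount example job.
run_wordcount() {
    input=$1
    output=$2
    # `hadoop fs -test -d` exits 0 when the path exists and is a directory.
    if hadoop fs -test -d "$output"; then
        hadoop fs -rm -r "$output"
    fi
    hadoop jar "$EXAMPLES_JAR" wordcount "$input" "$output"
}

# Usage:
#   run_wordcount wc-in WC-OUT
```

For anything other than test data, writing each run to a new output directory is the safer choice.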

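The results are stored in the WC-OUT directory, as specified on the command line. Note that the job_local prefix of the job ID and the LocalJobRunner entries in the log indicate that this run used MapReduce's local job runner on master rather than the cluster; submitting jobs to YARN requires, among other YARN settings, mapreduce.framework.name to be set to yarn in mapred-site.xml.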

    [hadoop@master ~]$ hadoop fs -ls WC-OUT
    Found 2 items
    -rw-r--r-- 2 hadoop supergroup 0 2015-11-05 15:19 WC-OUT/_SUCCESS
    -rw-r--r-- 2 hadoop supergroup 10748 2015-11-05 15:19 WC-OUT/part-r-00000

part-r-00000 is the output file holding the result; view it with the following command.

    [hadoop@master ~]$ hadoop fs -cat WC-OUT/part-r-00000
    ! 3
    != 15
    " 7
    "" 1
    "", 1
    "$#" 4
    "$-" 1
    "$1" 21
    "$1")" 2
    "$2" 5
    "$3" 1
    "$4" 1
    "$?" 2
    "$@" 2
    "$BOOTUP" 17
    "$CONSOLETYPE" 1
    "$EUID" 2
    "$HISTCONTROL" 1
    "$RC" 4
    "$STRING 1
    "$answer" 4
    "$base 1
    "$base" 1
    "$corelimit 2
    "$count" 1
    "$dst" 4
    "$dst: 1
    "$file" 3
    "$force" 1
    "$fs_spec" 1
    "$gotbase" 1
    "$have_random" 1
    "$i" 3
    "$key" 6
    "$key"; 3
    "$killlevel" 3
    "$line" 2
    "$makeswap" 2
    "$mdir" 4
    "$mke2fs" 1
    "$mode" 1
    "$mount_point" 1
    "$mount_point") 1
    ......
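For small inputs, the result can be cross-checked locally. The pipeline below is a sketch of the same counting logic in plain shell, not the Hadoop implementation: like the example job, it splits on whitespace only, which is why tokens such as "$1" and "$1")" are counted separately. local_wordcount is a hypothetical name introduced here.

```shell
#!/bin/sh
# local_wordcount: reads text on stdin and prints "word<TAB>count" lines,
# sorted by byte order. Splits on whitespace only; punctuation stays attached.
local_wordcount() {
    tr -s '[:space:]' '\n' |
        awk 'length($0) > 0 { count[$0]++ }
             END { for (w in count) printf "%s\t%d\n", w, count[w] }' |
        LC_ALL=C sort
}

# Usage: compare with the job output for the same two files.
#   cat /etc/rc.d/init.d/functions /etc/profile | local_wordcount | head
```

This only scales to what fits comfortably on one machine, which is exactly the limitation MapReduce removes.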

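2. Hive Deployment

2.1 Environment

    Hive: 1.2.1
    MySQL connector JAR: mysql-connector-java-5.1.37
    Hadoop: 2.6.1
    MySQL: 5.6.36 (64-bit)

2.1.1 About Hive

Hive is suited to data-warehouse applications, but it is not a full-fledged database, mainly because of limitations in the design of Hadoop itself. The biggest limitation is that Hive does not support row-level updates, inserts, or deletes. Second, Hadoop is a batch-oriented system and starting a MapReduce job carries a large overhead, so Hive queries have high latency: a query that finishes within seconds on a traditional database takes longer in Hive, even when the data set is very small. Third, Hive cannot provide the key features of OLTP (Online Transaction Processing); it is closer to OLAP (Online Analytic Processing), although its "online" capability still falls short of expectations. Hive is therefore best suited to data-warehouse scenarios: analyzing data through data mining, generating reports, and supporting intelligent decision-making.

Given these limitations, if you need OLTP-style features on large data sets, you should seriously consider a NoSQL database such as HBase, Cassandra, or DynamoDB.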

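2.2 Hive run modes

Like Hadoop, Hive has three run modes:

2.2.1 Embedded mode

The metadata is stored in a local embedded Derby database. This is the simplest way to use Hive, but it has an obvious drawback: an embedded Derby database allows only one connection to its data files at a time, which means it does not support multiple concurrent sessions.

2.2.2 Local mode

The metadata is stored in a separate database on the local host (usually MySQL), which makes multiple sessions and multiple users possible.

2.2.3 Remote mode

This mode is used when there are many Hive clients. The MySQL database is hosted separately and the metadata is stored in that remote MySQL service, which avoids the redundancy of installing MySQL on every client.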

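2.3 Installing Hive

Hive is a data warehouse built on top of the Hadoop file system, originally developed by Facebook. Hadoop must therefore be installed and working before Hive is installed.

2.3.1 Download and extract:

    [root@master src]# wget http://119.255.9.53/mirror.bit.edu.cn/apache/hive/stable/apache-hive-1.2.1-bin.tar.gz

    [root@master src]# tar xf apache-hive-1.2.1-bin.tar.gz -C /usr/local/

Change the owner and group; the hadoop user was already created when the Hadoop cluster was set up. The archive was extracted to /usr/local, so the paths below refer to that directory.

    [root@master src]# chown -R hadoop:hadoop /usr/local/apache-hive-1.2.1-bin/

Create a symbolic link:

    [root@master src]# ln -sv /usr/local/apache-hive-1.2.1-bin /usr/local/hive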

2.3.2 Configure the environment variables

    # vim /etc/profile.d/hive.sh
    HIVE_HOME=/usr/local/hive
    PATH=$PATH:$HIVE_HOME/bin
    export HIVE_HOME

Apply the change immediately:

    # . /etc/profile.d/hive.sh

2.3.3 Edit the Hive configuration script

    # vim /usr/local/hive/bin/hive-config.sh
    export JAVA_HOME=/usr/local/java
    export HIVE_HOME=/usr/local/hive
    export HADOOP_HOME=/usr/local/hadoop

2.3.4 Create the required directories

hive-site.xml refers to two important HDFS paths, /user/hive/warehouse and /tmp/hive. Switch to the hadoop user and check whether they exist in HDFS:

    [hadoop@master conf]$ hadoop fs -ls /
    Found 4 items
    drwxr-xr-x - hadoop supergroup 0 2015-11-04 06:38 /hbase
    drwxr-xr-x - hadoop supergroup 0 2015-11-05 09:38 /hive_data
    drwx-wx-wx - hadoop supergroup 0 2015-10-28 17:04 /tmp
    drwxr-xr-x - hadoop supergroup 0 2015-11-02 14:29 /user

The paths are not there, so create them and grant write (w) permission on them. The commands use hadoop fs, because hadoop dfs is deprecated in Hadoop 2.x, and -p so that missing parent directories are created as well.

    $ hadoop fs -mkdir -p /user/hive/warehouse
    $ hadoop fs -mkdir -p /tmp/hive
    $ hadoop fs -chmod 777 /user/hive/warehouse
    $ hadoop fs -chmod 777 /tmp/hive

Check the result with hadoop fs -ls / and hadoop fs -ls /user/hive/:

    [hadoop@master conf]$ hadoop fs -ls /user/hive/
    Found 1 items
    drwxrwxrwx - hadoop supergroup 0 2015-11-05 15:57 /user/hive/warehouse

2.4 Configuring the MySQL database for remote mode

2.4.1 Install MySQL:

This example uses the generic binary release 5.6.26, installed in /usr/local/mysql; the installation itself is omitted here.

2.4.2 Create the database and grant privileges:

    mysql> CREATE DATABASE `hive` /*!40100 DEFAULT CHARACTER SET latin1 */;
    mysql> GRANT ALL PRIVILEGES ON `hive`.* TO 'hive'@'192.168.40.%' IDENTIFIED BY 'hive';
    mysql> FLUSH PRIVILEGES;

2.4.3 Download the JDBC driver:

Download the MySQL JDBC driver package. This example uses mysql-connector-java-5.1.37-bin.jar; copy it to the $HIVE_HOME/lib directory:

Download location: http://dev.mysql.com/downloads/connector/j/

    $ tar xf mysql-connector-java-5.1.37.tar.gz && cd mysql-connector-java-5.1.37
    $ cp mysql-connector-java-5.1.37-bin.jar /usr/local/hive/lib/

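2.5 Edit the Hive configuration file

    [root@master conf]# su - hadoop
    [hadoop@master ~]$ cd /usr/local/hive/conf/
    [hadoop@master conf]$ cp hive-site.xml.template hive-site.xml
    [hadoop@master conf]$ vim hive-site.xml

Set the following properties:

    <property>
      <name>javax.jdo.option.ConnectionPassword</name>
      <value>hive</value>
      <description>password to use against metastore database</description>
    </property>
    <property>
      <name>javax.jdo.option.Multithreaded</name>
      <value>true</value>
      <description>Set this to true if multiple threads access metastore through JDO concurrently.</description>
    </property>
    <property>
      <name>javax.jdo.option.ConnectionURL</name>
      <value>jdbc:mysql://mysql:3306/hive</value>
      <description>JDBC connect string for a JDBC metastore</description>
    </property>
    <property>
      <name>javax.jdo.option.ConnectionDriverName</name>
      <value>com.mysql.jdbc.Driver</value>
      <description>Driver class name for a JDBC metastore</description>
    </property>
    <property>
      <name>javax.jdo.option.ConnectionUserName</name>
      <value>hive</value>
      <description>Username to use against metastore database</description>
    </property>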

2.6 Remove the conflicting file and start Hive:

The jline JAR shipped with Hive conflicts with the one in Hadoop at $HADOOP_HOME/share/hadoop/yarn/lib/jline-0.9.94.jar. Remove that file (here it is moved out of the way), otherwise Hive fails to start.

    [hadoop@master lib]$ mv /usr/local/hadoop/share/hadoop/yarn/lib/jline-0.9.94.jar ~/

2.6.2 Start Hive

    [hadoop@master conf]$ hive
    Logging initialized using configuration in jar:file:/usr/local/apache-hive-1.2.1-bin/lib/hive-common-1.2.1.jar!/hive-log4j.properties
    hive> show tables;
    OK
    gold_log
    gold_log_tj1
    person
    student
    Time taken: 2.485 seconds, Fetched: 4 row(s)

3. HBase Installation

3.0.1 Environment:

    HBase: 1.1.2
    ZooKeeper: 3.4.6
    http://hbase.apache.org/
    http://zookeeper.apache.org/

3.1 Extract the package and set permissions (run the following on master):

    # tar xf hbase-1.1.2-bin.tar.gz -C /usr/local/
    # cd /usr/local
    # chown -R hadoop:hadoop hbase-1.1.2/
    # ln -sv hbase-1.1.2 hbase

3.2 Add the environment variables:

    # vim /etc/profile.d/hbase.sh
    export HBASE_HOME=/usr/local/hbase
    export PATH=$PATH:$HBASE_HOME/bin

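3.3 Fully distributed configuration:

The main configuration files to modify, hbase-site.xml, regionservers, and hbase-env.sh, are in the conf directory.

    # cd /usr/local/hbase/conf

3.3.1 Edit hbase-site.xml

To run in fully distributed mode, first add the property hbase.cluster.distributed to hbase-site.xml and set it to true, then point hbase.rootdir at the HDFS NameNode. For example, if your NameNode runs on master.dbq168.com on port 8020 and you want to use the directory /hbase, use the following configuration:

    <property>
      <name>hbase.rootdir</name>
      <value>hdfs://master:8020/hbase</value>
      <description>The directory shared by RegionServers.</description>
    </property>
    <property>
      <name>hbase.cluster.distributed</name>
      <value>true</value>
      <description>The mode the cluster will be in. Possible values are
        false: standalone and pseudo-distributed setups with managed Zookeeper
        true: fully-distributed with unmanaged Zookeeper Quorum (see hbase-env.sh)
      </description>
    </property>

3.3.2 Edit regionservers

Fully distributed mode also requires editing conf/regionservers:

    $ vim regionservers
    datanode-1
    datanode-2
    datanode-3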

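3.4 ZooKeeper

A distributed HBase depends on a ZooKeeper cluster, and all nodes and clients must be able to reach it. By default HBase manages a ZooKeeper cluster for you and starts it along with HBase. You can also manage a ZooKeeper cluster yourself, but HBase then has to be configured accordingly: switch the behavior with HBASE_MANAGES_ZK in conf/hbase-env.sh. It defaults to true, which makes HBase start ZooKeeper whenever HBase starts.

When HBase manages ZooKeeper, you can configure ZooKeeper by editing zoo.cfg, but a simpler way is to set the ZooKeeper options in conf/hbase-site.xml. Each ZooKeeper option is written as a property in hbase-site.xml whose name starts with the prefix hbase.zookeeper.property. For example, clientPort appears in the XML as hbase.zookeeper.property.clientPort. All default values, including those for ZooKeeper, are determined by HBase (see "HBase default configuration"); search for the hbase.zookeeper.property prefix to find the ZooKeeper-related settings.

At a minimum, list the ZooKeeper ensemble servers in hbase-site.xml using the property hbase.zookeeper.quorum. Its default value is localhost, which is clearly unsuitable for a distributed deployment, because remote clients cannot connect.

Running a single ZooKeeper server works, but in production it is better to deploy 3, 5, or 7 nodes. More nodes give higher reliability. Use an odd number: an ensemble needs a majority of its servers to operate, so an even-sized ensemble tolerates no more failures than the odd size just below it. Give each ZooKeeper server about 1 GB of memory and, if possible, a dedicated disk, which helps keep ZooKeeper fast. If the cluster is heavily loaded, do not run ZooKeeper on the same machines as the RegionServers (or the DataNodes and TaskTrackers).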
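The advice about odd ensemble sizes follows from majority arithmetic, which the short sketch below works through. The function names quorum_size and tolerated_failures are introduced here for illustration; the only assumption is that an ensemble stays available while a strict majority of its servers is running.

```shell
#!/bin/sh
# quorum_size N: smallest strict majority of an N-server ensemble.
quorum_size() {
    echo $(( $1 / 2 + 1 ))
}

# tolerated_failures N: servers that may fail while a majority remains.
tolerated_failures() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 3 4 5 6 7; do
    echo "servers=$n quorum=$(quorum_size "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

Four servers tolerate one failure, the same as three, and six tolerate two, the same as five, so the extra even-numbered server adds cost without adding fault tolerance.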

For example, suppose HBase manages a ZooKeeper ensemble on the nodes rs{1,2,3,4,5}.dbq168.com, listening on port 2222 (the default is 2181). Make sure HBASE_MANAGES_ZK is set to true in conf/hbase-env.sh, then edit conf/hbase-site.xml and set hbase.zookeeper.property.clientPort and hbase.zookeeper.quorum. You can also set hbase.zookeeper.property.dataDir to change the directory in which ZooKeeper stores its data. The default is under /tmp, which the operating system may clear on reboot; here it is changed to /hadoop/zookeeper.

3.4.1 Configure ZooKeeper

    $ vim hbase-site.xml

    <property>
      <name>hbase.zookeeper.property.clientPort</name>
      <value>2222</value>
      <description>Property from ZooKeeper's config zoo.cfg. The port at which the clients will connect.</description>
    </property>
    <property>
      <name>hbase.zookeeper.quorum</name>
      <value>datanode-1,datanode-2,datanode-3</value>
      <description>Comma separated list of servers in the ZooKeeper Quorum. For example, "host1.mydomain.com,host2.mydomain.com,host3.mydomain.com". By default this is set to localhost for local and pseudo-distributed modes of operation. For a fully-distributed setup, this should be set to a full list of ZooKeeper quorum servers. If HBASE_MANAGES_ZK is set in hbase-env.sh this is the list of servers which we will start/stop ZooKeeper on.</description>
    </property>
    <property>
      <name>hbase.zookeeper.property.dataDir</name>
      <value>/hadoop/zookeeper</value>
      <description>Property from ZooKeeper's config zoo.cfg. The directory where the snapshot is stored.</description>
    </property>

3.4.2 Copy the packages to the other nodes (the SecondaryNameNode and datanode-1 through datanode-3):

    [root@master src]# for i in 31 32 33 35; do scp hbase-1.1.2-bin.tar.gz zookeeper-3.4.6.tar.gz 192.168.40.$i:/usr/local/src/; done

3.4.3 On the three datanode nodes:

    # cd /usr/local/src
    # tar xf hbase-1.1.2-bin.tar.gz -C /usr/local
    # tar xf zookeeper-3.4.6.tar.gz -C /usr/local
    # cd /usr/local
    # chown -R hadoop:hadoop hbase-1.1.2/ zookeeper-3.4.6/
    # ln -sv hbase-1.1.2 hbase
    # ln -sv zookeeper-3.4.6 zookeeper
    [root@datanode-1 ~]# cd /usr/local/zookeeper/conf/
    [root@datanode-1 ~]# cp zoo_sample.cfg zoo.cfg
    [root@datanode-1 ~]# vim zoo.cfg

    tickTime=2000
    initLimit=10
    syncLimit=5
    dataDir=/hadoop/zookeeper
    clientPort=2222

    [root@datanode-1 ~]# mkdir /hadoop/zookeeper
    [root@datanode-1 ~]# chown -R hadoop:hadoop /hadoop/zookeeper/

Perform the steps above on every datanode node; the other two nodes are not shown.

3.4.4 Copy the HBase configuration files to the datanode nodes:

    [hadoop@master conf]$ cd /usr/local/hbase/conf
    [hadoop@master conf]$ for i in 31 32 33 35; do scp -p hbase-env.sh hbase-site.xml regionservers 192.168.40.$i:/usr/local/hbase/conf/; done

3.5 Starting HBase:

    [hadoop@master conf]$ start-hbase.sh
    datanode-2: starting zookeeper, logging to /usr/local/hbase/bin/../logs/hbase-hadoop-zookeeper-datanode-2.cnfol.com.out
    datanode-3: starting zookeeper, logging to /usr/local/hbase/bin/../logs/hbase-hadoop-zookeeper-datanode-3.cnfol.com.out
    datanode-1: starting zookeeper, logging to /usr/local/hbase/bin/../logs/hbase-hadoop-zookeeper-datanode-1.cnfol.com.out
    starting master, logging to /usr/local/hbase/logs/hbase-hadoop-master-master.cnfol.com.out
    datanode-3: starting regionserver, logging to /usr/local/hbase/bin/../logs/hbase-hadoop-regionserver-datanode-3.cnfol.com.out
    datanode-2: starting regionserver, logging to /usr/local/hbase/bin/../logs/hbase-hadoop-regionserver-datanode-2.cnfol.com.out
    datanode-1: starting regionserver, logging to /usr/local/hbase/bin/../logs/hbase-hadoop-regionserver-datanode-1.cnfol.com.out

3.5.1 Verify that everything started:

    [hadoop@master conf]$ jps
    3750 Jps
    32515 NameNode
    301 ResourceManager
    3485 HMaster

    [hadoop@datanode-1 ~]$ jps
    3575 DataNode
    3676 NodeManager
    5324 Jps
    5059 HQuorumPeer
    5143 HRegionServer

    [hadoop@datanode-2 ~]$ jps
    4512 Jps
    3801 NodeManager
    4311 HRegionServer
    4242 HQuorumPeer
    3700 DataNode

    [hadoop@datanode-3 ~]$ jps
    2128 HRegionServer
    2054 HQuorumPeer
    1523 DataNode
    1622 NodeManager
    2289 Jps

3.5.2 Check the ports master is listening on:

    [hadoop@master conf]$ netstat -tunlp
    (Not all processes could be identified, non-owned process info will not be shown, you would have to be root to see it all.)
    Active Internet connections (only servers)
    Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
    tcp 0 0 192.168.40.30:8020 0.0.0.0:* LISTEN 32515/java
    tcp 0 0 0.0.0.0:50070 0.0.0.0:* LISTEN 32515/java
    tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN -
    tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN -
    tcp 0 0 :::22 :::* LISTEN -
    tcp 0 0 ::ffff:192.168.40.30:8088 :::* LISTEN 301/java
    tcp 0 0 ::1:25 :::* LISTEN -
    tcp 0 0 ::ffff:192.168.40.30:8030 :::* LISTEN 301/java
    tcp 0 0 ::ffff:192.168.40.30:8031 :::* LISTEN 301/java
    tcp 0 0 ::ffff:192.168.40.30:16000 :::* LISTEN 3485/java
    tcp 0 0 ::ffff:192.168.40.30:8032 :::* LISTEN 301/java
    tcp 0 0 ::ffff:192.168.40.30:8033 :::* LISTEN 301/java
    tcp 0 0 :::16010 :::* LISTEN 3485/java

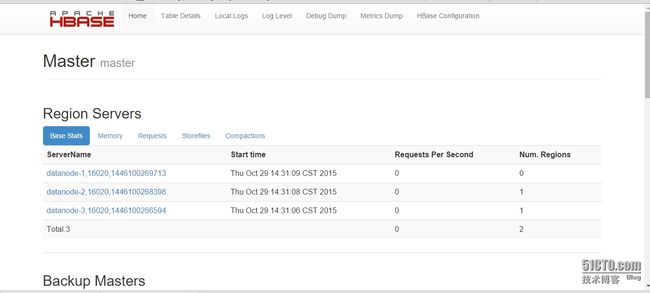

3.5.3 Open the HBase status page in a browser:

    http://192.168.40.30:16010/master-status

Finally, a few screenshots: