1. Ingress Concepts

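Kubernetes commonly exposes services through NodePort. NodePort binds a port on the host machine, then forwards requests to Pods and load-balances them. The drawback of this approach is: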

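There can be many Services. If each one binds its own port on the node, the host has to open a large number of ports to the outside for service calls. This is hard to manage and conflicts with the firewall rules that many companies require.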

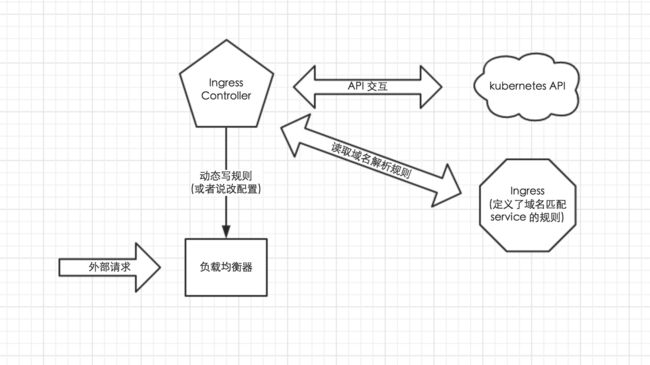

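The ideal approach is an external load balancer bound to a fixed port, such as 80, that forwards requests to the Service IPs behind it based on the domain name or service name. Nginx handles this well. The open question is how to modify and reload the Nginx configuration whenever a new service is added. Kubernetes answers this with Ingress, which consists of two main components: the Ingress Controller and the Ingress.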

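Ingress solves the problem of mapping domain names to services as new services are added. An Ingress is essentially an API object that is created and updated from YAML, and its changes are then loaded.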

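The Ingress Controller watches Ingress changes through the Kubernetes API and renders them into Nginx configuration. It writes that configuration into the Nginx Pod and then reloads Nginx. (Note: what gets written into nginx.conf is not the Service address but the addresses of the Pods backing the Service. This avoids adding another layer of load-balancing at the Service.)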

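The figure above shows the flow clearly:

1. An incoming request is still intercepted first by a load balancer such as nginx.
2. The Ingress Controller reads the Ingress objects to learn which service each domain maps to.
3. It queries the Kubernetes API to get the service addresses and related information.
4. It combines all of this into a configuration file, writes it to the load balancer in real time, and the load balancer reloads the rules.

The result is service discovery, that is, dynamic mapping.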
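To make the flow above concrete, here is a simplified Python sketch of what a controller does with Ingress rules and endpoints. It is an illustration, not the ingress-nginx implementation. The `rules` and `endpoints` structures are hypothetical stand-ins for what the controller reads from the Kubernetes API, and the output is a heavily trimmed nginx configuration. As noted above, the upstream lists Pod addresses rather than the Service's ClusterIP.

```python
from typing import Dict, List, Tuple

# Hypothetical, simplified inputs (not the controller's real data structures):
# Ingress rules: host -> list of (path, service name, service port)
Rules = Dict[str, List[Tuple[str, str, int]]]
# Endpoints: (service name, service port) -> list of "podIP:targetPort" strings
Endpoints = Dict[Tuple[str, int], List[str]]


def render_nginx_config(rules: Rules, endpoints: Endpoints) -> str:
    """Render a heavily trimmed nginx config from Ingress-like rules."""
    lines: List[str] = []
    backends = sorted({(svc, port) for paths in rules.values() for _, svc, port in paths})
    for svc, port in backends:
        addrs = endpoints.get((svc, port), [])
        if not addrs:
            continue  # nginx rejects an upstream with no servers, so skip it
        lines.append(f"upstream {svc}-{port} {{")
        for addr in addrs:
            # Pod addresses go straight into the upstream; the Service IP is bypassed
            lines.append(f"    server {addr};")
        lines.append("}")
    for host in sorted(rules):
        lines.append("server {")
        lines.append(f"    server_name {host};")
        lines.append("    listen 80;")
        for path, svc, port in rules[host]:
            lines.append(f"    location {path} {{")
            if endpoints.get((svc, port)):
                lines.append(f"        proxy_pass http://{svc}-{port};")
            else:
                # Mirrors the "No endpoints available" fallback ingress-nginx writes
                lines.append("        return 503;")
            lines.append("    }")
        lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical Pod IPs for a kibana Service exposed on port 5601
    rules = {"myk8s.com": [("/", "kibana", 5601)]}
    endpoints = {("kibana", 5601): ["10.244.1.12:5601", "10.244.2.7:5601"]}
    print(render_nginx_config(rules, endpoints))
```

The real controller output is far richer (headers, timeouts, Lua hooks). The point here is only the mapping from rules plus endpoints to server and upstream blocks.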

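This also explains why I prefer to deploy the load balancer as a DaemonSet. Every request hits the load balancer first. If one instance runs on every node and listens on port 80 via hostPort, you no longer have to work out which node the load balancer landed on, as you do with other deployment modes. Port 80 on any node then handles requests correctly. Putting another nginx in front adds one more layer of load balancing.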
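As a sketch of the DaemonSet plus hostPort layout described above, the Python below builds such a manifest as a plain dict and prints it as JSON (`kubectl apply -f` accepts JSON as well as YAML). The names, labels and image tag are assumptions borrowed from the files discussed later in this article. The controller arguments, probes and RBAC are omitted, so treat this as a starting point rather than a complete deployment.

```python
import json
from typing import Any, Dict


def ingress_daemonset(
    namespace: str = "ingress-nginx",
    image: str = "quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.20.0",
) -> Dict[str, Any]:
    """Build a DaemonSet running one controller Pod per node, bound to host ports 80/443."""
    labels = {"app": "ingress-nginx"}
    return {
        "apiVersion": "apps/v1",
        "kind": "DaemonSet",
        "metadata": {"name": "nginx-ingress-controller", "namespace": namespace},
        "spec": {
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "serviceAccountName": "nginx-ingress-serviceaccount",
                    "containers": [{
                        "name": "nginx-ingress-controller",
                        "image": image,
                        # hostPort exposes the container port directly on every node's IP
                        "ports": [
                            {"name": "http", "containerPort": 80, "hostPort": 80},
                            {"name": "https", "containerPort": 443, "hostPort": 443},
                        ],
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(ingress_daemonset(), indent=2))
```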

Using Ingress

2. Deploying and Configuring Ingress

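!!! The deployment method below is outdated. The new version has been updated to 0.9-beta1 and no longer requires deploying default-backend. For the current deployment instructions, see: https://kubernetes.github.io/ingress-nginx/deploy/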

2.1 Deployment Files: Overview and Preparation

Step 1: Locate the configuration files

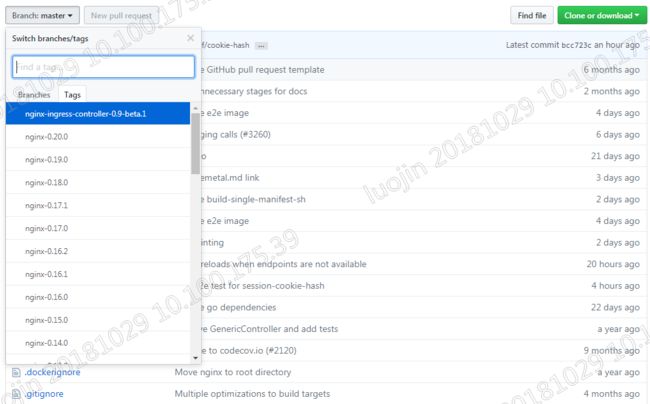

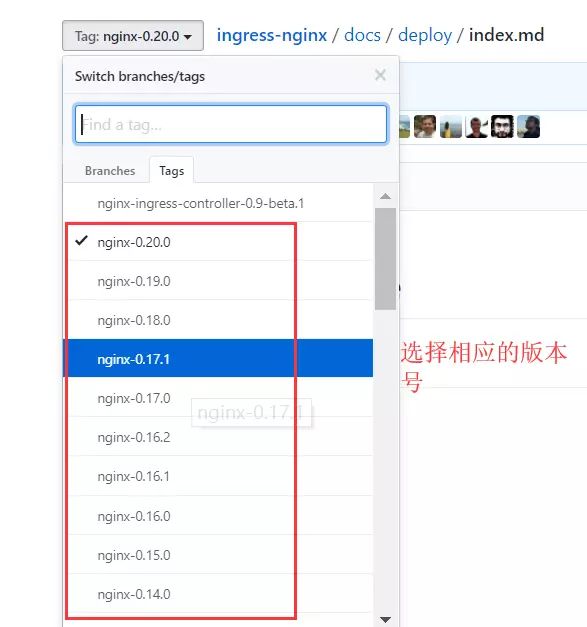

https://github.com/kubernetes/ingress-nginx/tree/nginx-0.20.0/deploy

Step 2: Download the deployment file

There are two options:

- Download the latest YAML (default)
- Download the YAML for a specific version

To download the latest YAML:

```shell
wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/mandatory.yaml
```

To download the YAML for a specific version, for example ingress-nginx 0.17.0:

```shell
wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.17.0/deploy/mandatory.yaml
```
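The two download URLs differ only in the Git ref: `master` versus an `nginx-<version>` tag. Below is a small Python helper that builds either one. It assumes that naming pattern holds for the version you want. Later releases reorganized the `deploy` directory, so check that the path exists before relying on it.

```python
from typing import Optional

BASE = "https://raw.githubusercontent.com/kubernetes/ingress-nginx"


def mandatory_yaml_url(version: Optional[str] = None) -> str:
    """Return the raw URL of deploy/mandatory.yaml for a release tag, or master if version is None."""
    ref = "master" if version is None else f"nginx-{version}"
    return f"{BASE}/{ref}/deploy/mandatory.yaml"


if __name__ == "__main__":
    # Same URLs as the wget commands above
    print(mandatory_yaml_url())
    print(mandatory_yaml_url("0.17.0"))
```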

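Overview of the deployment files:

- namespace.yaml

  Creates a dedicated namespace, ingress-nginx.

- configmap.yaml

  A ConfigMap stores general configuration variables, much like a configuration file. It lets you manage the environment variables used by different modules of a distributed system in a single object. Unlike a configuration file, it lives in the cluster's "environment" and supports all the usual Kubernetes operations.

  From a data perspective, a ConfigMap is simply a set of key-value pairs holding information read by Pods or other resource objects (such as ReplicationControllers). Its design resembles that of a Secret. The main difference is that a ConfigMap is normally not used for sensitive data and holds only plain text.

  A ConfigMap can hold individual environment-variable properties as well as whole configuration files.

  When a Pod is created it can be bound to a ConfigMap, and the application inside the Pod can then reference the ConfigMap's settings directly. In effect, the ConfigMap packages the configuration for the application and its runtime environment.

  Pods typically use a ConfigMap to set environment variable values, set command-line arguments, or create configuration files.

- default-backend.yaml

  If the requested domain does not exist, the request is forwarded by default to the default-http-backend Service, which simply returns 404.

- rbac.yaml

  Handles RBAC authorization for Ingress. It creates the ServiceAccount, ClusterRole, Role, RoleBinding and ClusterRoleBinding that Ingress uses.

- with-rbac.yaml

  The core of Ingress, used to create the ingress-controller. As mentioned earlier, the ingress-controller converts newly added Ingress objects into Nginx configuration.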
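To make the three ConfigMap usage patterns concrete (environment variables, command-line arguments, configuration files), here is a Python sketch that builds a Pod manifest consuming one ConfigMap in all three ways. The ConfigMap name `app-config`, its keys `log_level` and `app.conf`, and the `busybox` image are hypothetical. The field names used (`envFrom`/`configMapRef`, `valueFrom.configMapKeyRef`, `volumes[].configMap`) are standard Pod spec fields.

```python
import json
from typing import Any, Dict


def pod_using_configmap(cm_name: str = "app-config") -> Dict[str, Any]:
    """Pod manifest (as a dict) that reads a hypothetical ConfigMap in three ways."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "configmap-demo"},
        "spec": {
            "containers": [{
                "name": "app",
                "image": "busybox",
                # Command-line argument: Kubernetes expands $(LOG_LEVEL) from the env below
                "command": ["sh", "-c", "echo level=$(LOG_LEVEL); cat /etc/app/app.conf; sleep 3600"],
                # Environment variables: every key of the ConfigMap becomes a variable
                "envFrom": [{"configMapRef": {"name": cm_name}}],
                "env": [{
                    "name": "LOG_LEVEL",
                    "valueFrom": {"configMapKeyRef": {"name": cm_name, "key": "log_level"}},
                }],
                # Configuration files: each key is mounted as a file under /etc/app
                "volumeMounts": [{"name": "config", "mountPath": "/etc/app"}],
            }],
            "volumes": [{"name": "config", "configMap": {"name": cm_name}}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(pod_using_configmap(), indent=2))
```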

Below is a brief look at some of the important files.

default-backend.yaml

default-backend handles requests for domains that do not exist: they are forwarded by default to the default-http-backend Service, which returns 404 directly.

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: default-http-backend
  labels:
    app: default-http-backend
  namespace: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: default-http-backend
  template:
    metadata:
      labels:
        app: default-http-backend
    spec:
      terminationGracePeriodSeconds: 60
      containers:
      - name: default-http-backend
        # Any image is permissible as long as:
        # 1. It serves a 404 page at /
        # 2. It serves 200 on a /healthz endpoint
        image: gcr.io/google_containers/defaultbackend:1.4
        livenessProbe:
          httpGet:
            path: /healthz
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 30
          timeoutSeconds: 5
        ports:
        - containerPort: 8080
        resources:
          limits:
            cpu: 10m
            memory: 20Mi
          requests:
            cpu: 10m
            memory: 20Mi
---
apiVersion: v1
kind: Service
metadata:
  name: default-http-backend
  namespace: ingress-nginx
  labels:
    app: default-http-backend
spec:
  ports:
  - port: 80
    targetPort: 8080
  selector:
    app: default-http-backend
```
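The comment in the manifest says any image is acceptable as long as it returns 404 on `/` and 200 on `/healthz`. As a hypothetical illustration of that contract (not the code inside `defaultbackend:1.4`), a standard-library Python server could look like this. It defaults to port 8080 to match `containerPort`.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


class DefaultBackendHandler(BaseHTTPRequestHandler):
    """Answers 200 on /healthz and 404 on every other path."""

    def do_GET(self) -> None:
        if self.path == "/healthz":
            status, body = 200, b"ok"
        else:
            status, body = 404, b"default backend - 404"
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        # Keep the sketch quiet; a real backend would probably log to stdout
        pass


def make_server(port: int = 8080) -> HTTPServer:
    """Create (but do not start) the server; call serve_forever() to run it."""
    return HTTPServer(("0.0.0.0", port), DefaultBackendHandler)
```

To run it, call `make_server().serve_forever()`.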

rbac.yaml

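rbac.yaml handles RBAC authorization for Ingress. It creates the ServiceAccount, ClusterRole, Role, RoleBinding and ClusterRoleBinding that Ingress uses. These concepts were briefly introduced in the earlier article "Building a Kubernetes Cluster from Scratch (Part 4: Setting Up the K8S Dashboard)".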

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nginx-ingress-serviceaccount
  namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: nginx-ingress-clusterrole
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - "extensions"
    resources:
      - ingresses/status
    verbs:
      - update
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: Role
metadata:
  name: nginx-ingress-role
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ""
    resources:
      - configmaps
      - pods
      - secrets
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - configmaps
    resourceNames:
      # Defaults to "<election-id>-<ingress-class>"
      # Here: "<ingress-controller-leader>-<nginx>"
      # This has to be adapted if you change either parameter
      # when launching the nginx-ingress-controller.
      - "ingress-controller-leader-nginx"
    verbs:
      - get
      - update
  - apiGroups:
      - ""
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ""
    resources:
      - endpoints
    verbs:
      - get
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: RoleBinding
metadata:
  name: nginx-ingress-role-nisa-binding
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: nginx-ingress-role
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-clusterrole-nisa-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-clusterrole
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-serviceaccount
    namespace: ingress-nginx
```
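RBAC rules are additive. A request is allowed if any rule matches its API group, resource and verb, and, when `resourceNames` is set, the object name. Below is a simplified Python sketch of that matching, checked against a few rules copied from the ClusterRole and Role above. It ignores wildcards (`*`), non-resource URLs and aggregation. Use it only as an aid for reading the manifest; to query a live cluster, use `kubectl auth can-i`.

```python
from typing import Dict, List, Optional

Rule = Dict[str, List[str]]


def allows(rules: List[Rule], api_group: str, resource: str, verb: str,
           name: Optional[str] = None) -> bool:
    """Return True if any rule grants `verb` on `resource` in `api_group` (no wildcards)."""
    for rule in rules:
        if api_group not in rule.get("apiGroups", []):
            continue
        if resource not in rule.get("resources", []):
            continue
        if verb not in rule.get("verbs", []):
            continue
        names = rule.get("resourceNames")
        # A missing or empty resourceNames list means "all objects of this resource"
        if names and name not in names:
            continue
        return True
    return False


# A subset of the nginx-ingress-clusterrole and nginx-ingress-role rules shown above
CLUSTER_RULES: List[Rule] = [
    {"apiGroups": [""], "resources": ["services"], "verbs": ["get", "list", "watch"]},
    {"apiGroups": ["extensions"], "resources": ["ingresses"], "verbs": ["get", "list", "watch"]},
    {"apiGroups": ["extensions"], "resources": ["ingresses/status"], "verbs": ["update"]},
]
ROLE_RULES: List[Rule] = [
    {"apiGroups": [""], "resources": ["configmaps"],
     "resourceNames": ["ingress-controller-leader-nginx"], "verbs": ["get", "update"]},
]

if __name__ == "__main__":
    print(allows(CLUSTER_RULES, "extensions", "ingresses", "watch"))   # True
    print(allows(CLUSTER_RULES, "extensions", "ingresses", "delete"))  # False
```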

with-rbac.yaml

with-rbac.yaml is the core of Ingress and creates the ingress-controller. As mentioned earlier, the ingress-controller converts newly added Ingress objects into Nginx configuration.

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ingress-nginx
  template:
    metadata:
      labels:
        app: ingress-nginx
      annotations:
        prometheus.io/port: '10254'
        prometheus.io/scrape: 'true'
    spec:
      serviceAccountName: nginx-ingress-serviceaccount
      containers:
        - name: nginx-ingress-controller
          image: quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.14.0
          args:
            - /nginx-ingress-controller
            - --default-backend-service=$(POD_NAMESPACE)/default-http-backend
            - --configmap=$(POD_NAMESPACE)/nginx-configuration
            - --tcp-services-configmap=$(POD_NAMESPACE)/tcp-services
            - --udp-services-configmap=$(POD_NAMESPACE)/udp-services
            - --annotations-prefix=nginx.ingress.kubernetes.io
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
          ports:
          - name: http
            containerPort: 80
          - name: https
            containerPort: 443
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          securityContext:
            runAsNonRoot: false
```

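As shown above, nginx-ingress-controller is started with arguments that point to resources created earlier: the default-http-backend Service (via `--default-backend-service`) and the nginx-configuration ConfigMap (via `--configmap`).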
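The `$(POD_NAMESPACE)` references in `args` are expanded by Kubernetes from the container's `env` before the process starts. That is why the default backend resolves to `ingress-nginx/default-http-backend`. Below is a simplified Python model of that substitution, written only to illustrate the behavior, not to reproduce the kubelet code. Unresolved references stay as they are, and `$$` escapes to a literal `$`.

```python
import re
from typing import Dict, List

# Matches either an escaped "$$" or a "$(VAR_NAME)" reference
_REF = re.compile(r"\$\$|\$\(([A-Za-z_][A-Za-z0-9_]*)\)")


def expand(arg: str, env: Dict[str, str]) -> str:
    """Expand $(VAR) from env, leave unknown references untouched, and turn $$ into $."""
    def repl(m: "re.Match[str]") -> str:
        if m.group(0) == "$$":
            return "$"
        return env.get(m.group(1), m.group(0))
    return _REF.sub(repl, arg)


def expand_args(args: List[str], env: Dict[str, str]) -> List[str]:
    return [expand(a, env) for a in args]


if __name__ == "__main__":
    # In the real Pod, POD_NAMESPACE comes from the fieldRef metadata.namespace
    env = {"POD_NAMESPACE": "ingress-nginx"}
    print(expand_args(["--default-backend-service=$(POD_NAMESPACE)/default-http-backend",
                       "--configmap=$(POD_NAMESPACE)/nginx-configuration"], env))
```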

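2.2 Deploying Ingress

Step 1: Prepare the images. Check mandatory.yaml to see which images are required.

Mirrored copies are already available and can be pulled directly: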

| Image | Version | Mirror address |
|---|---|---|
| k8s.gcr.io/defaultbackend-amd64 | 1.5 | registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/defaultbackend-amd64 |
| quay.io/kubernetes-ingress-controller/nginx-ingress-controller | 0.20.0 | registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/nginx-ingress-controller |

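For example:

```shell
docker pull registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/nginx-ingress-controller:0.20.0
```

Push the images to your own private registry for use in the following steps, or re-tag the pulled images with their original names.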

Step 2: Update the image addresses in mandatory.yaml

Replace them with your own image addresses (or re-tag the images instead).

Replace the defaultbackend-amd64 image address:

```shell
sed -i 's#k8s.gcr.io/defaultbackend-amd64#registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/defaultbackend-amd64#g' mandatory.yaml
```

Replace the nginx-ingress-controller image address:

```shell
sed -i 's#quay.io/kubernetes-ingress-controller/nginx-ingress-controller#registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/nginx-ingress-controller#g' mandatory.yaml
```
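If `sed -i` is not available (for example on a Windows workstation), the same prefix substitution takes only a few lines of Python. This sketch is equivalent to the two `sed` commands above. The mirror prefixes are the ones from the table, so swap in your own private registry.

```python
from pathlib import Path
from typing import Dict

# Original image prefix -> mirror prefix (from the table above; replace with your registry)
MIRRORS: Dict[str, str] = {
    "k8s.gcr.io/defaultbackend-amd64":
        "registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/defaultbackend-amd64",
    "quay.io/kubernetes-ingress-controller/nginx-ingress-controller":
        "registry.cn-qingdao.aliyuncs.com/kubernetes_xingej/nginx-ingress-controller",
}


def rewrite_images(text: str, mirrors: Dict[str, str] = MIRRORS) -> str:
    """Replace every occurrence of each original prefix, like sed 's#old#new#g'."""
    for old, new in mirrors.items():
        text = text.replace(old, new)
    return text


def rewrite_file(path: str) -> None:
    """Rewrite a manifest in place, like sed -i."""
    p = Path(path)
    p.write_text(rewrite_images(p.read_text(encoding="utf-8")), encoding="utf-8")
```

Usage: `rewrite_file("mandatory.yaml")`. Running it twice is harmless, because the mirror prefixes do not contain the original ones.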

Step 3: Deploy nginx-ingress-controller

```shell
kubectl apply -f mandatory.yaml
```

Step 4: Check the status of the ingress-nginx components

Check the Pod status:

```
[root@master ingress-nginx]# kubectl get pods -n ingress-nginx -o wide
NAME                                       READY   STATUS    RESTARTS   AGE     IP              NODE     NOMINATED NODE
default-http-backend-7f594549df-nzthj      1/1     Running   0          3m59s   192.168.1.90    slave1   <none>
nginx-ingress-controller-9fc7f4c5f-dr722   1/1     Running   0          3m59s   192.168.2.110   slave2   <none>
```

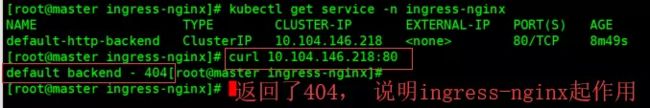

Check the Service status:

```
[root@master ingress-nginx]# kubectl get service -n ingress-nginx
NAME                   TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)   AGE
default-http-backend   ClusterIP   10.104.146.218   <none>        80/TCP    5m37s
```
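When checking this output by eye gets tedious, a small parser can flag Pods that are not ready. This sketch assumes the default whitespace-separated layout of `kubectl get pods`, with NAME, READY and STATUS as the first three columns and no shell prompt line. For scripting, `kubectl get pods -o json` is the more robust input.

```python
from typing import List


def not_ready_pods(output: str) -> List[str]:
    """Return names of Pods whose READY count is incomplete or whose STATUS is not Running."""
    rows = [ln for ln in output.strip().splitlines() if ln.strip()]
    bad: List[str] = []
    for row in rows[1:]:  # skip the header row
        name, ready, status = row.split()[:3]
        have, want = ready.split("/")
        if have != want or status != "Running":
            bad.append(name)
    return bad


SAMPLE = """\
NAME                                       READY   STATUS    RESTARTS   AGE
default-http-backend-7f594549df-nzthj      1/1     Running   0          3m59s
nginx-ingress-controller-9fc7f4c5f-dr722   1/1     Running   0          3m59s
"""

if __name__ == "__main__":
    print(not_ready_pods(SAMPLE))  # []
```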

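Test whether default-http-backend works:

The installation automatically created a default-http-backend Pod. It is a fallback HTTP backend that returns 404 for requests that no Ingress rule matches.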

3. Creating a Custom Ingress

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kibana-ingress
  namespace: default
spec:
  rules:
  - host: myk8s.com
    http:
      paths:
      - path: /
        backend:
          serviceName: kibana
          servicePort: 5601
```

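Where:

- `host` in `rules` must be a domain name, not an IP address. It is the domain of the host where the Ingress-controller Pod runs, that is, the domain that resolves to the Ingress-controller's IP.
- `path` in `paths` is the mapped path. For example, mapping `/` means that requests to myk8s.com are forwarded to the Kibana service on port 5601.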
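Conceptually, a request is routed by its `Host` header first, then by the longest matching `path` prefix. If nothing matches, it falls back to the default backend (the 404 Service from section 2). Below is a simplified Python model of that lookup. It assumes plain character-prefix matching, as nginx does for the non-regex `location /path` blocks in the generated config. The real controller also supports regex paths and annotations, so this is only an approximation.

```python
from typing import Dict, List, Optional, Tuple

Backend = Tuple[str, int]  # (service name, service port)
DEFAULT_BACKEND: Backend = ("default-http-backend", 80)


def route(rules: Dict[str, List[Tuple[str, Backend]]], host: str, path: str) -> Backend:
    """Pick a backend by exact host, then the longest path prefix; else the default backend."""
    host = host.split(":")[0].lower()  # drop an explicit port from the Host header
    best: Optional[Tuple[str, Backend]] = None
    for prefix, backend in rules.get(host, []):
        if path.startswith(prefix) and (best is None or len(prefix) > len(best[0])):
            best = (prefix, backend)
    return best[1] if best else DEFAULT_BACKEND


RULES = {"myk8s.com": [("/", ("kibana", 5601))]}

if __name__ == "__main__":
    print(route(RULES, "myk8s.com", "/app/kibana"))  # ('kibana', 5601)
    print(route(RULES, "other.com", "/"))            # ('default-http-backend', 80)
```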

Once it has been created, check it:

```
[root@k8s-node1 ingress]# kubectl get ingress -o wide
NAME             HOSTS       ADDRESS   PORTS   AGE
kibana-ingress   myk8s.com             80      6s
```

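Next, run `kubectl exec -n ingress-nginx nginx-ingress-controller-5b79cbb5c6-2zr7f -- cat /etc/nginx/nginx.conf` to view the generated nginx configuration. The file is long, so pick out the part you need. The server block for myk8s.com is shown below. Its `location /kibana` block comes from a different Ingress (`dashboard-ingress` in `kube-system`) that uses the same host.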

```nginx
## start server myk8s.com
server {
    server_name myk8s.com ;
    listen 80;
    listen [::]:80;
    set $proxy_upstream_name "-";

    location /kibana {
        log_by_lua_block {
        }

        port_in_redirect off;

        set $proxy_upstream_name "";
        set $namespace "kube-system";
        set $ingress_name "dashboard-ingress";
        set $service_name "kibana";

        client_max_body_size "1m";

        proxy_set_header Host $best_http_host;

        # Pass the extracted client certificate to the backend

        # Allow websocket connections
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection $connection_upgrade;

        proxy_set_header X-Real-IP $the_real_ip;
        proxy_set_header X-Forwarded-For $the_real_ip;
        proxy_set_header X-Forwarded-Host $best_http_host;
        proxy_set_header X-Forwarded-Port $pass_port;
        proxy_set_header X-Forwarded-Proto $pass_access_scheme;
        proxy_set_header X-Original-URI $request_uri;
        proxy_set_header X-Scheme $pass_access_scheme;

        # Pass the original X-Forwarded-For
        proxy_set_header X-Original-Forwarded-For $http_x_forwarded_for;

        # mitigate HTTPoxy Vulnerability
        # https://www.nginx.com/blog/mitigating-the-httpoxy-vulnerability-with-nginx/
        proxy_set_header Proxy "";

        # Custom headers to proxied server

        proxy_connect_timeout 5s;
        proxy_send_timeout 60s;
        proxy_read_timeout 60s;

        proxy_buffering "off";
        proxy_buffer_size "4k";
        proxy_buffers 4 "4k";
        proxy_request_buffering "on";

        proxy_http_version 1.1;

        proxy_cookie_domain off;
        proxy_cookie_path off;

        # In case of errors try the next upstream server before returning an error
        proxy_next_upstream error timeout invalid_header http_502 http_503 http_504;
        proxy_next_upstream_tries 0;

        # No endpoints available for the request
        return 503;
    }

    location / {
        log_by_lua_block {
        }

        port_in_redirect off;

        set $proxy_upstream_name "";
        set $namespace "default";
        set $ingress_name "kibana-ingress";
        set $service_name "kibana";

        client_max_body_size "1m";

        proxy_set_header Host $best_http_host;

        # Pass the extracted client certificate to the backend

        # Allow websocket connections
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection $connection_upgrade;

        proxy_set_header X-Real-IP $the_real_ip;
        proxy_set_header X-Forwarded-For $the_real_ip;
        proxy_set_header X-Forwarded-Host $best_http_host;
        proxy_set_header X-Forwarded-Port $pass_port;
        proxy_set_header X-Forwarded-Proto $pass_access_scheme;
        proxy_set_header X-Original-URI $request_uri;
        proxy_set_header X-Scheme $pass_access_scheme;

        # Pass the original X-Forwarded-For
        proxy_set_header X-Original-Forwarded-For $http_x_forwarded_for;

        # mitigate HTTPoxy Vulnerability
        # https://www.nginx.com/blog/mitigating-the-httpoxy-vulnerability-with-nginx/
        proxy_set_header Proxy "";

        # Custom headers to proxied server

        proxy_connect_timeout 5s;
        proxy_send_timeout 60s;
        proxy_read_timeout 60s;

        proxy_buffering "off";
        proxy_buffer_size "4k";
        proxy_buffers 4 "4k";
        proxy_request_buffering "on";

        proxy_http_version 1.1;

        proxy_cookie_domain off;
        proxy_cookie_path off;

        # In case of errors try the next upstream server before returning an error
        proxy_next_upstream error timeout invalid_header http_502 http_503 http_504;
        proxy_next_upstream_tries 0;

        # No endpoints available for the request
        return 503;
    }
}
## end server myk8s.com
```
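Because the full nginx.conf is long, it helps to cut out a single host. In the excerpt above, each server is bracketed by `## start server <host>` and `## end server <host>` comments. The Python sketch below relies on exactly that convention, which is worth re-checking on other controller versions.

```python
from typing import List, Optional


def server_block(nginx_conf: str, host: str) -> Optional[str]:
    """Return the text from '## start server <host>' to '## end server <host>', or None."""
    start, end = f"## start server {host}", f"## end server {host}"
    out: List[str] = []
    inside = False
    for line in nginx_conf.splitlines():
        stripped = line.strip()
        if stripped == start:
            inside = True
        if inside:
            out.append(line)
            if stripped == end:
                return "\n".join(out)
    return None
```

For example, save the file with `kubectl exec -n ingress-nginx <controller-pod> -- cat /etc/nginx/nginx.conf > nginx.conf`. Then call `print(server_block(open("nginx.conf").read(), "myk8s.com"))`.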

Configure hosts

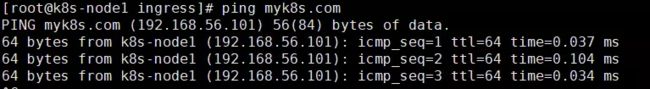

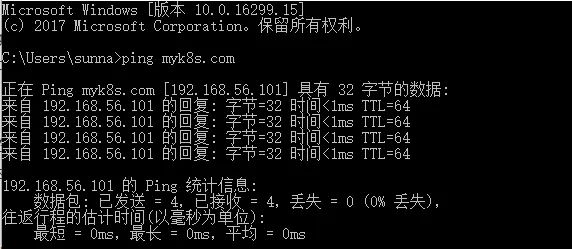

First, on the host where the Ingress-controller Pod runs (here k8s-node1), append the domain myk8s.com to /etc/hosts:

```
192.168.56.101 myk8s.com
```

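In addition, to open kibana in a browser on your Windows physical machine, add the same entry to C:\Windows\System32\drivers\etc\hosts. Then verify that the name resolves correctly on both k8s-node1 and the physical machine: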
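Editing hosts files by hand on several machines is error-prone. Below is a small idempotent Python helper that adds the entry only if the hostname is not mapped yet. It assumes you run it with permission to write the file (root on Linux, an elevated prompt on Windows). The IP and domain in the usage line are the ones used in this example.

```python
from pathlib import Path


def ensure_hosts_entry(ip: str, hostname: str, hosts_path: str = "/etc/hosts") -> bool:
    """Append 'ip hostname' unless hostname is already mapped; return True if the file changed."""
    path = Path(hosts_path)
    text = path.read_text(encoding="utf-8") if path.exists() else ""
    for line in text.splitlines():
        fields = line.split("#", 1)[0].split()  # ignore comments
        if len(fields) >= 2 and hostname in fields[1:]:
            return False  # already mapped (possibly to another IP, which is left untouched)
    prefix = "" if text == "" or text.endswith("\n") else "\n"
    with path.open("a", encoding="utf-8") as f:
        f.write(f"{prefix}{ip} {hostname}\n")
    return True
```

Usage: `ensure_hosts_entry("192.168.56.101", "myk8s.com")` on k8s-node1. On Windows, pass `r"C:\Windows\System32\drivers\etc\hosts"` as `hosts_path`.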

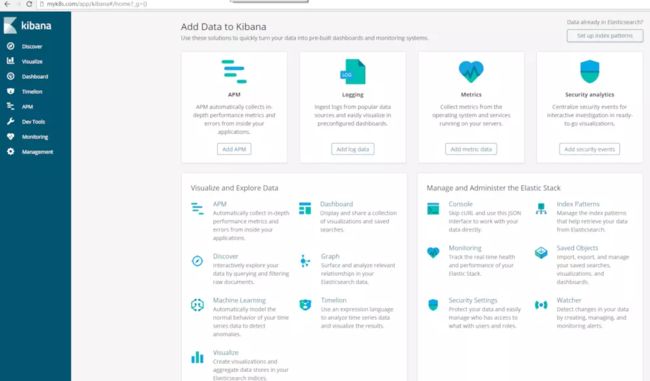

Test

On the Windows physical machine, open myk8s.com in Chrome. This is equivalent to accessing 192.168.56.101:80: