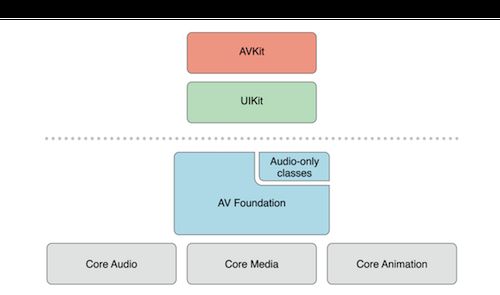

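Overview

AVFoundation is a framework for working with and creating time-based audiovisual media. It was built with current hardware and applications in mind, and its design relies heavily on multithreading: it takes full advantage of multicore hardware, makes extensive use of blocks and GCD, and moves expensive processing onto background threads. It applies hardware acceleration automatically so that apps run at their best on most devices. The framework was also designed for 64-bit processors and can take full advantage of them.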

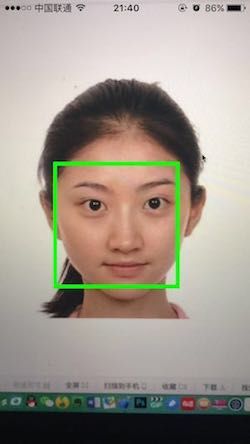

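Result

Capture session

AVCaptureSession is the core class of the AV Foundation capture stack. A capture session works like a virtual patch panel that connects input and output resources, and it manages the data streams coming from the physical devices.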

```objc
self.captureSession = [[AVCaptureSession alloc] init];
[self.captureSession setSessionPreset:AVCaptureSessionPreset640x480];
[self.previewView setSession:self.captureSession];
```

AVCaptureMetadataOutput

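Before a capture device can be used, inputs and outputs must be added to the session. Outputs are instances of AVCaptureOutput, an abstract base class for delivering captured data. AV Foundation defines several concrete subclasses of AVCaptureOutput; for live face detection we use AVCaptureMetadataOutput, which emits metadata such as QR codes, barcodes, and faces. We have to tell the output which metadata types we want: the read-only ```availableMetadataObjectTypes``` property of AVCaptureMetadataOutput lists the types that are supported, and ```metadataObjectTypes``` selects the ones to capture.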

```objc
// Output
self.metaDataOutput = [[AVCaptureMetadataOutput alloc] init];
// Deliver callbacks on the main queue: the delegate updates layers, and
// AVFoundation requires a serial queue so that metadata arrives in order.
[self.metaDataOutput setMetadataObjectsDelegate:self queue:dispatch_get_main_queue()];
if ([self.captureSession canAddOutput:self.metaDataOutput]) {
    [self.captureSession addOutput:self.metaDataOutput];
}

// Log the metadata types this output supports.
for (NSString *metaType in self.metaDataOutput.availableMetadataObjectTypes) {
    NSLog(@"%@", metaType);
}

// Only ask for faces.
self.metaDataOutput.metadataObjectTypes = @[AVMetadataObjectTypeFace];
```

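#### AVMetadataFaceObject

When faces are detected, AVCaptureMetadataOutput delivers an array of AVMetadataFaceObject instances. AVMetadataFaceObject defines several properties that describe a detected face. The most important is the face's bounds, a CGRect inherited from AVMetadataObject. It is expressed in the device's scalar (normalized) coordinate space, which runs from (0,0) at the top left to (1,1) at the bottom right in the camera's native orientation. Besides the bounds, AVMetadataFaceObject reports the roll and yaw angles of the face, in degrees. The roll angle (rollAngle) is how far the head is tilted sideways toward a shoulder, and the yaw angle (yawAngle) is the rotation of the face around the Y axis. AVMetadataFaceObject is declared as follows: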

```objc
@interface AVMetadataFaceObject : AVMetadataObject
{
@private
    AVMetadataFaceObjectInternal *_internal;
}

@property(readonly) NSInteger faceID;

// Roll angle
@property(readonly) BOOL hasRollAngle;
@property(readonly) CGFloat rollAngle;

// Yaw angle
@property(readonly) BOOL hasYawAngle;
@property(readonly) CGFloat yawAngle;

@end
```
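As a rough illustration of the normalized coordinate space used by bounds, the sketch below scales a normalized rectangle up to pixel coordinates. It is written in plain C, which also compiles inside an Objective-C file. `SketchRect` and `RectFromNormalized` are hypothetical names used only for this example, and the function deliberately ignores orientation, mirroring, and video gravity, so it is not a substitute for the conversion AVFoundation performs.

```c
/* Hypothetical stand-in for CGRect so the sketch compiles as plain C. */
typedef struct {
    double x, y, width, height;
} SketchRect;

/* Scale a rect given in the normalized (0,0)-(1,1) space up to a buffer
   that is bufferWidth x bufferHeight pixels. Only the scaling step is
   shown: no rotation, mirroring, or gravity handling. */
static SketchRect RectFromNormalized(SketchRect normalized,
                                     double bufferWidth,
                                     double bufferHeight)
{
    SketchRect r;
    r.x = normalized.x * bufferWidth;
    r.y = normalized.y * bufferHeight;
    r.width = normalized.width * bufferWidth;
    r.height = normalized.height * bufferHeight;
    return r;
}
```

For a 640x480 buffer, a normalized rect of (0.25, 0.5, 0.5, 0.25) maps to (160, 240, 320, 120).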

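### Capture preview

AVCaptureVideoPreviewLayer is a CALayer subclass that shows a live preview of the captured video. Its videoGravity property, of type AVLayerVideoGravity, controls how the picture is scaled or stretched to fit the layer's bounds.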

```objc
AVCaptureVideoPreviewLayer *previewLayer = [AVCaptureVideoPreviewLayer layerWithSession:self.captureSession];
previewLayer.frame = [UIScreen mainScreen].bounds;
[self.view.layer addSublayer:previewLayer];
```
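To make the scaling behavior of the gravity modes more concrete, here is a small plain-C sketch of the usual geometric definition of aspect-fit (as in AVLayerVideoGravityResizeAspect) and aspect-fill (as in AVLayerVideoGravityResizeAspectFill). The function names are made up for this example. It describes the general technique and is not a claim about how AVCaptureVideoPreviewLayer is implemented internally.

```c
/* Uniform scale that makes a srcW x srcH picture fit entirely inside a
   dstW x dstH area. Empty bars appear on one axis if the ratios differ. */
static double AspectFitScale(double srcW, double srcH, double dstW, double dstH)
{
    double sx = dstW / srcW;
    double sy = dstH / srcH;
    return sx < sy ? sx : sy; /* the smaller factor keeps everything visible */
}

/* Uniform scale that makes a srcW x srcH picture cover a dstW x dstH area
   completely. The picture is cropped on one axis if the ratios differ. */
static double AspectFillScale(double srcW, double srcH, double dstW, double dstH)
{
    double sx = dstW / srcW;
    double sy = dstH / srcH;
    return sx > sy ? sx : sy; /* the larger factor leaves no empty area */
}
```

With a 640x480 source and a 320x480 destination, the fit scale is 0.5 and the fill scale is 1.0, so filling crops the picture horizontally while fitting leaves empty bars above and below.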

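#### Coordinate transformation

Because the bounds of an AVMetadataFaceObject are expressed in the device's normalized coordinate space, from (0,0) at the top left to (1,1) at the bottom right in the camera's native orientation, they have to be converted into our view's coordinate space. The conversion has to account for orientation, mirroring, videoGravity, and other factors, but we do not need to handle these ourselves: AVCaptureVideoPreviewLayer provides the ```- (nullable AVMetadataObject *)transformedMetadataObjectForMetadataObject:(AVMetadataObject *)metadataObject;``` method, which does it for us.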

```objc
- (NSArray *)transformFacesToLayerFromFaces:(NSArray *)faces
{
    NSMutableArray *transformFaces = [NSMutableArray array];
    AVCaptureVideoPreviewLayer *previewLayer = (AVCaptureVideoPreviewLayer *)self.layer;
    for (AVMetadataFaceObject *face in faces) {
        AVMetadataObject *transFace = [previewLayer transformedMetadataObjectForMetadataObject:face];
        // The method is declared nullable; adding nil to an array would crash.
        if (transFace) {
            [transformFaces addObject:transFace];
        }
    }
    return transformFaces;
}
```

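#### Implementing live face detection

+ Create the capture session and set up the input, the outputs, and the preview layer. Set the metadataObjectTypes of the AVCaptureMetadataOutput to AVMetadataObjectTypeFace to capture faces.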

```objc
- (void)setupSession
{
    self.captureSession = [[AVCaptureSession alloc] init];
    [self.captureSession setSessionPreset:AVCaptureSessionPreset640x480];
    [self.previewView setSession:self.captureSession];

    // Create a device input with the device and add it to the session.
    AVCaptureDevice *device = [AVCaptureDevice defaultDeviceWithMediaType:AVMediaTypeVideo];
    NSError *error = nil;
    AVCaptureDeviceInput *input = [AVCaptureDeviceInput deviceInputWithDevice:device error:&error];
    if (!input || ![self.captureSession canAddInput:input]) {
        NSLog(@"Could not create or add the camera input: %@", error);
        return;
    }
    [self.captureSession addInput:input];

    // Create a VideoDataOutput and add it to the session.
    self.videoDataOutput = [[AVCaptureVideoDataOutput alloc] init];
    [self.videoDataOutput setSampleBufferDelegate:self queue:dispatch_get_global_queue(0, 0)];
    self.videoDataOutput.videoSettings = @{ (id)kCVPixelBufferPixelFormatTypeKey : @(kCVPixelFormatType_420YpCbCr8BiPlanarFullRange) };
    if ([self.captureSession canAddOutput:self.videoDataOutput]) {
        [self.captureSession addOutput:self.videoDataOutput];
    }

    // Metadata output. Callbacks go to the main queue because the delegate
    // updates layers, and AVFoundation requires a serial queue here.
    self.metaDataOutput = [[AVCaptureMetadataOutput alloc] init];
    [self.metaDataOutput setMetadataObjectsDelegate:self queue:dispatch_get_main_queue()];
    if ([self.captureSession canAddOutput:self.metaDataOutput]) {
        [self.captureSession addOutput:self.metaDataOutput];
    }

    // Log the metadata types this output supports.
    for (NSString *metaType in self.metaDataOutput.availableMetadataObjectTypes) {
        NSLog(@"%@", metaType);
    }

    // Only ask for faces.
    self.metaDataOutput.metadataObjectTypes = @[AVMetadataObjectTypeFace];

    [self.captureSession startRunning];
}
```

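+ Create the preview view, QMPreviewView, make AVCaptureVideoPreviewLayer its layerClass, and initialize its sublayers. Because we use the yaw angle (yawAngle), the rotation needs a perspective projection so that rotating around the Y axis looks realistic.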

```objc
// Perspective projection
static CATransform3D PerspectiveTransformMake(CGFloat eyePosition)
{
    CATransform3D transform = CATransform3DIdentity;
    transform.m34 = -1.0 / eyePosition;
    return transform;
}

// layerClass is a class method on UIView, so it must be declared with "+".
+ (Class)layerClass
{
    return [AVCaptureVideoPreviewLayer class];
}

- (instancetype)initWithFrame:(CGRect)frame
{
    if (self = [super initWithFrame:frame]) {
        [self setupView];
    }
    return self;
}

- (instancetype)initWithCoder:(NSCoder *)aDecoder
{
    if (self = [super initWithCoder:aDecoder]) {
        [self setupView];
    }
    return self;
}

- (void)setupView
{
    // Maps faceID -> the CALayer drawn for that face.
    _faceLayerDict = [NSMutableDictionary dictionary];

    AVCaptureVideoPreviewLayer *previewLayer = (id)self.layer;
    previewLayer.videoGravity = AVLayerVideoGravityResizeAspectFill;

    // All face rectangles live in this overlay, which applies the perspective.
    self.overlayLayer = [CALayer layer];
    self.overlayLayer.frame = self.bounds;
    self.overlayLayer.sublayerTransform = PerspectiveTransformMake(1000);
    [previewLayer addSublayer:self.overlayLayer];
}
```
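To see what `m34 = -1.0 / eyePosition` does in `PerspectiveTransformMake`, the plain-C sketch below reproduces the arithmetic for a single coordinate. With only m34 changed from the identity, the homogeneous component of a point at depth z becomes `1 - z / eyePosition`, and the on-screen coordinate is the original one divided by that value. `ProjectCoordinate` is a hypothetical helper written for this illustration, not a Core Animation function.

```c
/* Projected x (or y) coordinate of a point at depth z under a transform
   whose only non-identity entry is m34 = -1 / eyePosition.
   The homogeneous component is w = 1 - z / eyePosition, and the visible
   coordinate is the input divided by w. */
static double ProjectCoordinate(double coordinate, double z, double eyePosition)
{
    double w = 1.0 - z / eyePosition;
    return coordinate / w;
}
```

With an eye position of 1000, a point at x = 100 stays at 100 when z = 0, moves out to 200 when z = 500 (closer to the viewer), and moves in to 50 when z = -1000 (farther away). This is why the edge of a rectangle that rotates toward the viewer looks larger than the edge that rotates away.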

+ Create the QMPreviewView. After it is created, it must be given the AVCaptureSession before it can show the live capture preview.

```objc
- (void)viewDidLoad
{
    [super viewDidLoad];
    [self setupView];
    [self setupSession];
}

- (void)setupView
{
    QMPreviewView *previewView = [[QMPreviewView alloc] initWithFrame:self.view.bounds];
    [self.view addSubview:previewView];
    _previewView = previewView;
}
```

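+ Handle the AVCaptureMetadataOutputObjectsDelegate callback to parse the detected faces and draw a rectangle for each. AVFoundation assigns every face a unique faceID, and once a face leaves the frame it no longer appears in the callback. We store each rectangle under its faceID, so when a face disappears we remove its rectangle.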

```objc
// In the view controller
- (void)captureOutput:(AVCaptureOutput *)output didOutputMetadataObjects:(NSArray<__kindof AVMetadataObject *> *)metadataObjects fromConnection:(AVCaptureConnection *)connection
{
    // for (AVMetadataFaceObject *face in metadataObjects) {
    //     NSLog(@"face = %ld, bounds = %@", (long)face.faceID, NSStringFromCGRect(face.bounds));
    // }
    [self.previewView onDetectFaces:metadataObjects];
}
```

```objc
// In QMPreviewView
- (NSArray *)transformFacesToLayerFromFaces:(NSArray *)faces
{
    NSMutableArray *transformFaces = [NSMutableArray array];
    AVCaptureVideoPreviewLayer *previewLayer = (AVCaptureVideoPreviewLayer *)self.layer;
    for (AVMetadataFaceObject *face in faces) {
        AVMetadataObject *transFace = [previewLayer transformedMetadataObjectForMetadataObject:face];
        // The method is declared nullable; adding nil to an array would crash.
        if (transFace) {
            [transformFaces addObject:transFace];
        }
    }
    return transformFaces;
}

- (CALayer *)makeLayer
{
    CALayer *layer = [CALayer layer];
    layer.borderWidth = 5.0f;
    // UIColor components range from 0.0 to 1.0.
    layer.borderColor = [UIColor colorWithRed:0.0f green:1.0f blue:0.0f alpha:1.0f].CGColor;
    return layer;
}

- (CATransform3D)transformFromYawAngle:(CGFloat)angle
{
    CATransform3D t = CATransform3DMakeRotation(DegreeToRadius(angle), 0.0f, -1.0f, 0.0f);
    return CATransform3DConcat(t, [self orientationTransform]);
}

- (CATransform3D)orientationTransform
{
    CGFloat angle = 0.0f;
    switch ([UIDevice currentDevice].orientation) {
        case UIDeviceOrientationPortraitUpsideDown:
            angle = M_PI;
            break;
        case UIDeviceOrientationLandscapeRight:
            angle = -M_PI / 2.0;
            break;
        case UIDeviceOrientationLandscapeLeft:
            angle = M_PI / 2.0;
            break;
        default:
            angle = 0.0f;
            break;
    }
    return CATransform3DMakeRotation(angle, 0.0f, 0.0f, 1.0f);
}

#pragma mark - Public

- (void)setSession:(AVCaptureSession *)session
{
    ((AVCaptureVideoPreviewLayer *)self.layer).session = session;
}

- (void)onDetectFaces:(NSArray *)faces
{
    // Convert the faces into the layer's coordinate space.
    NSArray *transFaces = [self transformFacesToLayerFromFaces:faces];

    // Start from every known face ID; whatever is left at the end has disappeared.
    NSMutableArray *missFaces = [[self.faceLayerDict allKeys] mutableCopy];

    for (AVMetadataFaceObject *face in transFaces) {
        NSNumber *faceID = @(face.faceID);
        // This face is still in the frame, so it must not be removed.
        [missFaces removeObject:faceID];

        CALayer *layer = self.faceLayerDict[faceID];
        if (!layer) {
            // Create a rectangle for a newly detected face.
            layer = [self makeLayer];
            self.faceLayerDict[faceID] = layer;
            [self.overlayLayer addSublayer:layer];
        }

        layer.transform = CATransform3DIdentity;
        layer.frame = face.bounds;

        // Rotate the rectangle by the roll angle (around the Z axis).
        if (face.hasRollAngle) {
            CATransform3D t = CATransform3DMakeRotation(DegreeToRadius(face.rollAngle), 0, 0, 1.0);
            layer.transform = CATransform3DConcat(layer.transform, t);
        }

        // Rotate the rectangle by the yaw angle (around the Y axis).
        if (face.hasYawAngle) {
            CATransform3D t = [self transformFromYawAngle:face.yawAngle];
            layer.transform = CATransform3DConcat(layer.transform, t);
        }
    }

    // Remove the rectangles of faces that have left the frame.
    for (NSNumber *faceID in missFaces) {
        CALayer *layer = self.faceLayerDict[faceID];
        [layer removeFromSuperlayer];
        [self.faceLayerDict removeObjectForKey:faceID];
    }
}
```
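The listing calls `DegreeToRadius`, which is not defined anywhere in the article. Assuming it only converts degrees (the unit of rollAngle and yawAngle) into the radians that CATransform3DMakeRotation expects, a minimal plain-C version could look like the sketch below. It uses `double` instead of CGFloat so that it compiles without any Apple headers, and the helper in the author's project may differ.

```c
#include <math.h>

#ifndef M_PI
#define M_PI 3.14159265358979323846 /* not guaranteed by strict C modes */
#endif

/* Assumed behavior of the article's undefined helper:
   convert an angle in degrees to radians. */
static inline double DegreeToRadius(double degrees)
{
    return degrees * M_PI / 180.0;
}
```

A yaw angle of 90 degrees, for example, becomes M_PI / 2 radians before it is passed to the rotation.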

#### References

[AVFoundation开发秘籍:实践掌握iOS & OSX应用的视听处理技术](https://book.douban.com/subject/26577333/)

Source code: [https://github.com/QinminiOS/AVFoundation](https://github.com/QinminiOS/AVFoundation)