I. Preparation

1.1. On linux-node1:

[root@linux-node1 ~]# egrep '(vmx|svm)' /proc/cpuinfo ## any output means the CPU supports hardware virtualization

[root@linux-node1 ~]# cat /etc/redhat-release

CentOS release 6.8 (Final)

[root@linux-node1 ~]# uname -r

2.6.32-642.el6.x86_64

[root@linux-node1 ~]# getenforce

Disabled

[root@linux-node1 ~]# service iptables status

iptables: Firewall is not running.

[root@linux-node1 ~]# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-6.repo

[root@linux-node1 ~]# wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-6.repo

[root@linux-node1 ~]# date ## the clocks on both nodes must be synchronized

Sun Jun 24 10:20:31 CST 2018

[root@linux-node1 ~]# ifconfig eth0

eth0 Link encap:Ethernet HWaddr 00:0C:29:2B:9F:B1

inet addr:10.0.0.101 Bcast:10.0.0.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe2b:9fb1/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:1238 errors:0 dropped:0 overruns:0 frame:0

TX packets:569 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:122277 (119.4 KiB) TX bytes:77114 (75.3 KiB)

[root@linux-node1 ~]# cat /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=linux-node1

[root@linux-node1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.0.0.101 linux-node1

10.0.0.102 linux-node2

[root@linux-node1 ~]# ping linux-node1

PING linux-node1 (10.0.0.101) 56(84) bytes of data.

64 bytes from linux-node1 (10.0.0.101): icmp_seq=1 ttl=64 time=0.045 ms

^C

--- linux-node1 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1896ms

rtt min/avg/max/mdev = 0.042/0.043/0.045/0.006 ms

[root@linux-node1 ~]# ping www.baidu.com

PING www.a.shifen.com (61.135.169.125) 56(84) bytes of data.

64 bytes from 61.135.169.125: icmp_seq=1 ttl=128 time=3.58 ms

64 bytes from 61.135.169.125: icmp_seq=2 ttl=128 time=3.80 ms

^C

--- www.a.shifen.com ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1313ms

rtt min/avg/max/mdev = 3.587/3.697/3.807/0.110 ms

[root@linux-node1 ~]# yum install -y python-pip gcc gcc-c++ make libtool patch automake python-devel libxslt-devel MySQL-python openssl-devel libudev-devel git wget python-numdisplay device-mapper bridge-utils libffi-devel libffi libvirt-python libvirt qemu-kvm gedit python-eventlet ## install the base packages
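The virtualization check at the top of this subsection can be wrapped in a small function so that a script can act on the result. This is only a sketch: the name `has_virt_flag` is hypothetical, and it assumes a grep that supports `-w` (GNU grep does).

```shell
# Hypothetical helper: succeed if a cpuinfo-style file advertises
# hardware virtualization (vmx = Intel VT-x, svm = AMD-V).
has_virt_flag() {
    # $1: file to inspect; defaults to /proc/cpuinfo
    grep -Eqw 'vmx|svm' "${1:-/proc/cpuinfo}"
}
```

For example, `has_virt_flag || echo "no vmx/svm flag found"` prints a message on a machine without the flag.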

1.2. On linux-node2:

[root@linux-node2 ~]# egrep '(vmx|svm)' /proc/cpuinfo ## any output means the CPU supports hardware virtualization

[root@linux-node2 ~]# cat /etc/redhat-release

CentOS release 6.8 (Final)

[root@linux-node2 ~]# uname -r

2.6.32-642.el6.x86_64

[root@linux-node2 ~]# getenforce

Disabled

[root@linux-node2 ~]# service iptables status

iptables: Firewall is not running.

[root@linux-node2 ~]# date

Sat Jun 23 16:40:15 CST 2018

[root@linux-node2 ~]# ifconfig eth0

eth0 Link encap:Ethernet HWaddr 00:0C:29:61:F7:97

inet addr:10.0.0.102 Bcast:10.0.0.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe61:f797/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:1464 errors:0 dropped:0 overruns:0 frame:0

TX packets:533 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:139021 (135.7 KiB) TX bytes:69058 (67.4 KiB)

[root@linux-node2 ~]# cat /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=linux-node2

[root@linux-node2 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.0.0.101 linux-node1

10.0.0.102 linux-node2

[root@linux-node2 ~]# ping linux-node2

PING linux-node2 (10.0.0.102) 56(84) bytes of data.

64 bytes from linux-node2 (10.0.0.102): icmp_seq=1 ttl=64 time=0.100 ms

64 bytes from linux-node2 (10.0.0.102): icmp_seq=2 ttl=64 time=0.047 ms

^C

--- linux-node2 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1028ms

rtt min/avg/max/mdev = 0.047/0.073/0.100/0.027 ms

[root@linux-node2 ~]# ping www.baidu.com

PING www.a.shifen.com (61.135.169.121) 56(84) bytes of data.

64 bytes from 61.135.169.121: icmp_seq=1 ttl=128 time=3.02 ms

64 bytes from 61.135.169.121: icmp_seq=2 ttl=128 time=3.06 ms

^C

--- www.a.shifen.com ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1376ms

rtt min/avg/max/mdev = 3.022/3.044/3.066/0.022 ms

[root@linux-node2 ~]# yum install -y python-pip gcc gcc-c++ make libtool patch automake python-devel libxslt-devel MySQL-python openssl-devel libudev-devel git wget python-numdisplay device-mapper bridge-utils libffi-devel libffi libvirt-python libvirt qemu-kvm gedit python-eventlet ## install the base packages
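Because the clocks on both nodes have to agree, it can help to measure the difference instead of comparing two `date` outputs by eye. A minimal sketch, assuming both timestamps are given as seconds since the epoch; `clock_skew_seconds` is a hypothetical name:

```shell
# Hypothetical helper: absolute difference between two Unix timestamps.
clock_skew_seconds() {
    # $1, $2: seconds since the epoch, e.g. from `date +%s`
    diff=$(( $1 - $2 ))
    if [ "$diff" -lt 0 ]; then
        diff=$(( 0 - diff ))
    fi
    echo "$diff"
}
```

One possible use, assuming SSH access from linux-node1 to linux-node2: `clock_skew_seconds "$(date +%s)" "$(ssh linux-node2 date +%s)"`.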

II. Base Services

2.1. Database service (MySQL): every OpenStack component stores its data in MySQL

[root@linux-node1 ~]# yum install -y mysql-server

[root@linux-node1 ~]# cp /usr/share/mysql/my-medium.cnf /etc/my.cnf

[root@linux-node1 ~]# vim /etc/my.cnf

default-storage-engine = innodb

innodb_file_per_table

collation-server = utf8_general_ci

init-connect = 'SET NAMES utf8'

character-set-server = utf8

[root@linux-node1 ~]# service mysqld start

[root@linux-node1 ~]# chkconfig mysqld on

[root@linux-node1 ~]# mysql

create database keystone; ## Identity service

grant all on keystone.* to keystone@'10.0.0.0/255.255.255.0' identified by 'keystone';

create database glance; ## Image service

grant all on glance.* to glance@'10.0.0.0/255.255.255.0' identified by 'glance';

create database nova; ## Compute service

grant all on nova.* to nova@'10.0.0.0/255.255.255.0' identified by 'nova';

create database neutron; ## Networking service

grant all on neutron.* to neutron@'10.0.0.0/255.255.255.0' identified by 'neutron';

create database cinder; ## Block Storage service

grant all on cinder.* to cinder@'10.0.0.0/255.255.255.0' identified by 'cinder';

exit
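The five create/grant pairs above follow one pattern, so they could also be generated. The sketch below assumes, as in the statements above, that the user name and password are the same as the database name; `gen_db_sql` is a hypothetical helper, not part of MySQL.

```shell
# Hypothetical helper: print the CREATE DATABASE / GRANT pair for each name.
# User name and password are assumed to equal the database name.
gen_db_sql() {
    for db in "$@"; do
        printf 'create database %s;\n' "$db"
        printf "grant all on %s.* to %s@'10.0.0.0/255.255.255.0' identified by '%s';\n" \
            "$db" "$db" "$db"
    done
}
```

Piping the output into the client, for example `gen_db_sql keystone glance nova neutron cinder | mysql`, would run the same statements in one pass.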

2.2. Message broker (RabbitMQ): the message queue is the hub through which the components of the OpenStack cloud communicate

[root@linux-node1 ~]# yum install -y rabbitmq-server

[root@linux-node1 ~]# /etc/init.d/rabbitmq-server start

Starting rabbitmq-server: SUCCESS

rabbitmq-server.

[root@linux-node1 ~]# chkconfig rabbitmq-server on

[root@linux-node1 ~]# /usr/lib/rabbitmq/bin/rabbitmq-plugins list ## list all plugins

[root@linux-node1 ~]# /usr/lib/rabbitmq/bin/rabbitmq-plugins enable rabbitmq_management ## enable the web management plugin

[root@linux-node1 ~]# /etc/init.d/rabbitmq-server restart

[root@linux-node1 ~]# netstat -lntup|grep 5672

tcp 0 0 0.0.0.0:15672 0.0.0.0:* LISTEN 13778/beam.smp ## web UI port

tcp 0 0 0.0.0.0:55672 0.0.0.0:* LISTEN 13778/beam.smp ## web UI port

tcp 0 0 :::5672 :::* LISTEN 13778/beam.smp ## RabbitMQ service port
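Note that `grep 5672` also matches 15672 and 55672. If a script needs to test for one exact port, the local-address column can be compared instead. A minimal sketch that reads `netstat -lnt`-style text on standard input; `port_is_listening` is a hypothetical name:

```shell
# Hypothetical helper: succeed if the netstat-style text on stdin has a
# LISTEN row whose local address (column 4) ends in exactly :PORT.
port_is_listening() {
    awk -v port="$1" '
        /LISTEN/ {
            n = split($4, parts, ":")    # works for 0.0.0.0:5672 and :::5672
            if (parts[n] == port) found = 1
        }
        END { exit !found }
    '
}
```

For example, `netstat -lnt | port_is_listening 5672 && echo "broker port open"`.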

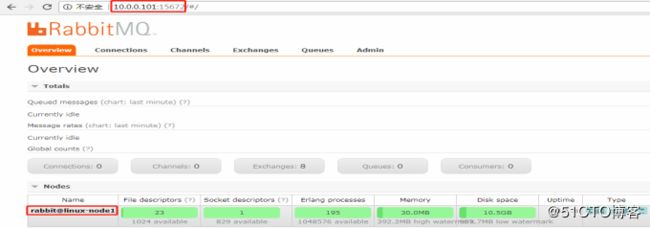

2.2.1. From a client, log in at http://10.0.0.101:15672; the username and password are both guest

2.2.2. Basic RabbitMQ management

[root@linux-node1 ~]# rabbitmqctl change_password guest openstack ## change the default guest password

[root@linux-node1 ~]# rabbitmqctl add_user openstack openstack ## add a user

[root@linux-node1 ~]# rabbitmqctl set_user_tags openstack administrator ## set the user's role

[root@linux-node1 ~]# rabbitmqctl list_users ## list users

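III. OpenStack Installation and Deployment

3.1. Identity service (Keystone): user authentication and the service catalog

Users and authentication: user permissions and tracking of user activity

Service catalog: a catalog of all services together with their API endpoints

[root@linux-node1 ~]# vim /etc/yum.repos.d/icehouse.repo ## installing the Icehouse release of OpenStack requires writing the repo file by hand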

[icehouse]

name=Extra icehouse Packages for Enterprise Linux 6 - $basearch

baseurl=https://repos.fedorapeople.org/repos/openstack/EOL/openstack-icehouse/epel-6/

failovermethod=priority

enabled=1

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-6

[root@linux-node1 ~]# yum install -y openstack-keystone python-keystoneclient

[root@linux-node1 ~]# keystone-manage pki_setup --keystone-user keystone --keystone-group keystone

Using configuration from /etc/keystone/ssl/certs/openssl.conf

[root@linux-node1 ~]# chown -R keystone:keystone /etc/keystone/ssl/

[root@linux-node1 ~]# chmod -R o-wrx /etc/keystone/ssl/

[root@linux-node1 ~]# vim /etc/keystone/keystone.conf ##修改配置文件

admin_token=ADMIN

connection=mysql://keystone:keystone@10.0.0.101/keystone

debug=true ## not recommended in production; keep it false there

log_file=/var/log/keystone/keystone.log

[root@linux-node1 ~]# keystone-manage db_sync ## sync the schema into the database

[root@linux-node1 ~]# mysql -e "use keystone;show tables;" ## confirm the tables were created

+----------------------+

| Tables_in_keystone |

+----------------------+

| assignment |

| credential |

| domain |

| endpoint |

| group |

| migrate_version |

| policy |

| project |

| region |

| role |

| service |

| token |

| trust |

| trust_role |

| user |

| user_group_membership|

+----------------------+

[root@linux-node1 ~]# /etc/init.d/openstack-keystone start

Starting keystone: [FAILED]

[root@linux-node1 ~]# tail /var/log/keystone/keystone-startup.log

_setup_logging_from_conf(product_name, version)

File "/usr/lib/python2.6/site-packages/keystone/openstack/common/log.py", line 525, in _setup_logging_from_conf

filelog = logging.handlers.WatchedFileHandler(logpath)

File "/usr/lib64/python2.6/logging/handlers.py", line 377, in __init__

logging.FileHandler.__init__(self, filename, mode, encoding, delay)

File "/usr/lib64/python2.6/logging/__init__.py", line 835, in __init__

StreamHandler.__init__(self, self._open())

File "/usr/lib64/python2.6/logging/__init__.py", line 854, in _open

stream = open(self.baseFilename, self.mode)

IOError: [Errno 13] Permission denied: '/var/log/keystone/keystone.log'

[root@linux-node1 ~]# ll /var/log/keystone/keystone.log

-rw-r--r-- 1 root root 0 Jun 24 23:49 /var/log/keystone/keystone.log

[root@linux-node1 ~]# chown keystone:keystone /var/log/keystone/keystone.log

[root@linux-node1 ~]# ll /var/log/keystone/keystone.log

-rw-r--r-- 1 keystone keystone 0 Jun 24 23:49 /var/log/keystone/keystone.log

[root@linux-node1 ~]# /etc/init.d/openstack-keystone start

Starting keystone: [ OK ]
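The failure above was found by reading the traceback in the startup log. When several files are affected, the paths can be pulled out of the log automatically. A minimal sketch, assuming the log uses the Python `Permission denied: '<path>'` wording shown above; `denied_paths` is a hypothetical name:

```shell
# Hypothetical helper: list the distinct paths named in
# "Permission denied: '<path>'" messages read from stdin.
denied_paths() {
    sed -n "s/.*Permission denied: '\(.*\)'.*/\1/p" | sort -u
}
```

For example, `denied_paths < /var/log/keystone/keystone-startup.log` would print `/var/log/keystone/keystone.log` for the traceback shown above, which is the file that then needs `chown keystone:keystone`.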

[root@linux-node1 ~]# chkconfig openstack-keystone on

[root@linux-node1 ~]# netstat -lntup|egrep '35357|5000'

tcp 0 0 0.0.0.0:35357 0.0.0.0:* LISTEN 7984/python ## admin port

tcp 0 0 0.0.0.0:5000 0.0.0.0:* LISTEN 7984/python ## public port

[root@linux-node1 ~]# export OS_SERVICE_TOKEN=ADMIN

[root@linux-node1 ~]# export OS_SERVICE_ENDPOINT=http://10.0.0.101:35357/v2.0

[root@linux-node1 ~]# keystone role-list ## the output below means Keystone was installed successfully

+----------------------------------+----------+

|                id                |   name   |

+----------------------------------+----------+

| 9fe2ff9ee4384b1894a90878d3e92bab | _member_ |

+----------------------------------+----------+

[root@linux-node1 ~]# keystone user-create --name=admin --pass=admin [email protected] ## create the admin user

[root@linux-node1 ~]# keystone role-create --name=admin ## create the admin role

[root@linux-node1 ~]# keystone tenant-create --name=admin --description="Admin Tenant" ## create the admin tenant

[root@linux-node1 ~]# keystone user-role-add --user=admin --tenant=admin --role=admin ## tie the three together: add the admin user to the admin tenant (project) and grant it the admin role

[root@linux-node1 ~]# keystone user-role-add --user=admin --tenant=admin --role=_member_ ## also grant the _member_ role

[root@linux-node1 ~]# keystone role-list

+----------------------------------+-------------+

| id | name |

+----------------------------------+-------------+

| 9fe2ff9ee4384b1894a90878d3e92bab | _member_ |

| be86f8af5d16433aa00f9cb0390919a8 | admin |

+----------------------------------+-------------+

[root@linux-node1 ~]# keystone user-list

+----------------------------------+--------+---------+-------------------+

| id | name | enabled | email |

+----------------------------------+--------+---------+-------------------+

| 014722cf5f804c2bb54f2c0ac2498074 | admin | True | [email protected] |

+----------------------------------+--------+---------+-------------------+

[root@linux-node1 ~]# keystone user-create --name=demo --pass=demo [email protected]

[root@linux-node1 ~]# keystone tenant-create --name=demo --description="Demo Tenant"

[root@linux-node1 ~]# keystone user-role-add --user=demo --role=_member_ --tenant=demo

[root@linux-node1 ~]# keystone tenant-create --name=service --description="Service Tenant" ## create the service tenant, which the services below use

WARNING: Bypassing authentication using a token & endpoint (authentication credentials are being ignored).

+-----------------+---------------------------------+

| Property | Value |

+-----------------+---------------------------------+

| description | Service Tenant |

| enabled | True |

| id | bcb01124791742708b65a80bcc633436 |

| name | service |

+-----------------+---------------------------------+

[root@linux-node1 ~]# keystone service-create --name=keystone --type=identity --description="Openstack Identity"

WARNING: Bypassing authentication using a token & endpoint (authentication credentials are being ignored).

+----------------+--------------------------------------+

| Property | Value |

+----------------+--------------------------------------+

| description | Openstack Identity |

| enabled | True |

| id | 9de82db1b57d4370914a4b9e20fb92dd |

| name | keystone |

| type | identity |

+----------------+--------------------------------------+

[root@linux-node1 ~]# keystone endpoint-create \

--service-id=$(keystone service-list|awk '/identity/{print $2}') \

--publicurl=http://10.0.0.101:5000/v2.0 \

--internalurl=http://10.0.0.101:5000/v2.0 \

--adminurl=http://10.0.0.101:35357/v2.0

WARNING: Bypassing authentication using a token & endpoint (authentication credentials are being ignored).

+----------------+-----------------------------------+

| Property | Value |

+----------------+-----------------------------------+

| adminurl | http://10.0.0.101:35357/v2.0 |

| id | 83327104425548d2b6b0465225fc75a8 |

| internalurl | http://10.0.0.101:5000/v2.0 |

| publicurl | http://10.0.0.101:5000/v2.0 |

| region | regionOne |

| service_id | 9de82db1b57d4370914a4b9e20fb92dd |

+----------------+-----------------------------------+
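The `awk '/identity/{print $2}'` filter used above matches the word anywhere in a row, so a name or description containing it would match as well. A stricter variant compares only the type column. This is a sketch; `service_id_by_type` is a hypothetical helper that reads the `keystone service-list` table from standard input.

```shell
# Hypothetical helper: read a `keystone service-list` table on stdin and
# print the id of every row whose "type" column equals $1 exactly.
service_id_by_type() {
    awk -F'|' -v want="$1" '
        NF >= 5 {
            id = $2; stype = $4
            gsub(/^[ \t]+|[ \t]+$/, "", id)       # trim cell padding
            gsub(/^[ \t]+|[ \t]+$/, "", stype)
            if (stype == want) print id
        }
    '
}
```

It could stand in for the inline awk call, for example `--service-id=$(keystone service-list | service_id_by_type identity)`.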

[root@linux-node1 ~]# unset OS_SERVICE_TOKEN OS_SERVICE_ENDPOINT ## these environment variables must be unset

[root@linux-node1 ~]# keystone --os-username=admin --os-password=admin --os-auth-url=http://10.0.0.101:35357/v2.0 token-get ## verify as admin

[root@linux-node1 ~]# keystone --os-username=demo --os-password=demo --os-auth-url=http://10.0.0.101:35357/v2.0 token-get ## verify as demo

[root@linux-node1 ~]# vim keystone-admin

export OS_TENANT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=admin

export OS_AUTH_URL=http://10.0.0.101:35357/v2.0

[root@linux-node1 ~]# source keystone-admin

[root@linux-node1 ~]# keystone token-get

[root@linux-node1 ~]# cp keystone-admin keystone-demo

[root@linux-node1 ~]# vim keystone-demo

export OS_TENANT_NAME=demo

export OS_USERNAME=demo

export OS_PASSWORD=demo

export OS_AUTH_URL=http://10.0.0.101:35357/v2.0
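The two credential files differ only in their values, so they could be produced by one function instead of copy and edit. A minimal sketch; `write_rc` is a hypothetical name, and the values are assumed to contain no spaces or shell metacharacters.

```shell
# Hypothetical helper: print the four export lines of a credentials file.
write_rc() {
    # $1 tenant, $2 user, $3 password, $4 auth URL
    printf 'export OS_TENANT_NAME=%s\n' "$1"
    printf 'export OS_USERNAME=%s\n' "$2"
    printf 'export OS_PASSWORD=%s\n' "$3"
    printf 'export OS_AUTH_URL=%s\n' "$4"
}
```

For example, `write_rc demo demo demo http://10.0.0.101:35357/v2.0 > keystone-demo` writes the same four lines as the file above.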

[root@linux-node1 ~]# keystone user-role-list --user admin --tenant admin

+----------------------------------+--------------+----------------------------------+----------------------------------+

| id | name | user_id | tenant_id |

+----------------------------------+--------------+----------------------------------+----------------------------------+

| 9fe2ff9ee4384b1894a90878d3e92bab | _member_ | 014722cf5f804c2bb54f2c0ac2498074 | 01d5828b54c6495aa1349268df619383 |

| be86f8af5d16433aa00f9cb0390919a8 | admin | 014722cf5f804c2bb54f2c0ac2498074 | 01d5828b54c6495aa1349268df619383 |

+----------------------------------+--------------+----------------------------------+----------------------------------+

[root@linux-node1 ~]# keystone user-role-list --user demo --tenant demo

+-------------------------------------+-------------+------------------------------------+------------------------------------+

| id | name | user_id | tenant_id |

+-------------------------------------+-------------+------------------------------------+------------------------------------+

| 9fe2ff9ee4384b1894a90878d3e92bab | _member_ | 9587cf4299be46e8aff66f2c356e1864 | 2f58035ef9b647f48e30dcb585ae7cce |

+-------------------------------------+-------------+------------------------------------+------------------------------------+

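3.2. Image service (Glance): consists mainly of glance-api and glance-registry

glance-api: accepts requests to create, delete, and read cloud system images

glance-registry: the image registry service of the cloud system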

[root@linux-node1 ~]# yum install -y openstack-glance python-glanceclient python-crypto

[root@linux-node1 ~]# vim /etc/glance/glance-api.conf

debug=true

log_file=/var/log/glance/api.log

connection=mysql://glance:glance@10.0.0.101/glance

[root@linux-node1 ~]# vim /etc/glance/glance-registry.conf

debug=true

log_file=/var/log/glance/registry.log

connection=mysql://glance:glance@10.0.0.101/glance

[root@linux-node1 ~]# glance-manage db_sync ## this sync prints a warning

[root@linux-node1 ~]# mysql -e " use glance;show tables;"

+------------------+

| Tables_in_glance |

+------------------+

| image_locations |

| image_members |

| image_properties |

| image_tags |

| images |

| migrate_version |

| task_info |

| tasks |

+------------------+

[root@linux-node1 ~]# vim /etc/glance/glance-api.conf

notifier_strategy = rabbit ## rabbit is already the default, but it is best to set it explicitly

rabbit_host=10.0.0.101

rabbit_port=5672

rabbit_use_ssl=false

rabbit_userid=guest

rabbit_password=guest

rabbit_virtual_host=/

rabbit_notification_exchange=glance

rabbit_notification_topic=notifications

rabbit_durable_queues=False

[root@linux-node1 ~]# source keystone-admin

[root@linux-node1 ~]# keystone user-create --name=glance --pass=glance

[root@linux-node1 ~]# keystone user-role-add --user=glance --tenant=service --role=admin

[root@linux-node1 ~]# vim /etc/glance/glance-api.conf

[keystone_authtoken]

auth_host=10.0.0.101

auth_port=35357

auth_protocol=http

admin_tenant_name=service

admin_user=glance

admin_password=glance

flavor=keystone

[root@linux-node1 ~]# vim /etc/glance/glance-registry.conf

[keystone_authtoken]

auth_host=10.0.0.101

auth_port=35357

auth_protocol=http

admin_tenant_name=service

admin_user=glance

admin_password=glance

flavor=keystone
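Every configuration step here is an interactive `vim` session. For repeatable runs, the same `key=value` settings could be applied from a script. The function below is only a sketch of that idea in plain awk: `ini_set` is a hypothetical helper, it treats every `[name]` line as a section header, and it ignores commented-out keys.

```shell
# Hypothetical helper: set KEY=VALUE inside [SECTION] of an ini-style file.
# usage: ini_set FILE SECTION KEY VALUE
ini_set() {
    file=$1 section=$2 key=$3 value=$4
    tmp=$(mktemp) || return 1
    awk -v section="$section" -v key="$key" -v value="$value" '
        function emit() { print key "=" value; done = 1 }
        /^\[.*\]$/ {
            # leaving the target section without a match: append the key first
            if (in_section && !done) emit()
            in_section = ($0 == "[" section "]")
            if (in_section) seen = 1
            print; next
        }
        in_section && !done {
            line = $0
            sub(/^[ \t]+/, "", line)
            split(line, kv, "=")
            k = kv[1]; sub(/[ \t]+$/, "", k)
            # replace an existing, uncommented assignment of the key
            if (k == key && index(line, "=") > 0) { emit(); next }
        }
        { print }
        END {
            if (!done) {
                if (!seen) print "[" section "]"   # section was missing
                print key "=" value
            }
        }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    rm -f "$tmp"
}
```

For example, `ini_set /etc/glance/glance-api.conf keystone_authtoken admin_user glance` would set one of the values listed above. The result is written back with `cat` so that the ownership and mode of the original file are kept.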

[root@linux-node1 ~]# keystone service-create --name=glance --type=image

+-------------+------------------------------------+

| Property | Value |

+-------------+------------------------------------+

| description | |

| enabled | True |

| id | a843c6e391f64b4894cb736c59c6fbe9 |

| name | glance |

| type | image |

+-------------+------------------------------------+

[root@linux-node1 ~]# keystone endpoint-create \

--service-id=$(keystone service-list|awk '/ image /{print $2}') \

--publicurl=http://10.0.0.101:9292 \

--internalurl=http://10.0.0.101:9292 \

--adminurl=http://10.0.0.101:9292

+---------------+---------------------------------------+

| Property | Value |

+---------------+---------------------------------------+

| adminurl |http://10.0.0.101:9292 |

| id | 5f7f06a3b4874deda19caf67f5f21a1f |

| internalurl | http://10.0.0.101:9292 |

| publicurl | http://10.0.0.101:9292 |

| region | regionOne |

| service_id | 34701cc1acd44e22b8ae8a9be069d0a7 |

+---------------+---------------------------------------+

[root@linux-node1 ~]# keystone service-list

+----------------------------------+--------------+--------------+-----------------------+

| id | name | type | description |

+----------------------------------+--------------+--------------+-----------------------+

| 34701cc1acd44e22b8ae8a9be069d0a7 | glance | image | |

| ea33049270ad4450ac789e9236774878 | keystone | identity | Openstack Identity |

+----------------------------------+--------------+--------------+-----------------------+

[root@linux-node1 ~]# keystone endpoint-list

+----------------------------------+-----------+-----------------------------+-----------------------------+------------------------------+----------------------------------+

| id                               | region    | publicurl                   | internalurl                 | adminurl                     | service_id                       |

+----------------------------------+-----------+-----------------------------+-----------------------------+------------------------------+----------------------------------+

| 5f7f06a3b4874deda19caf67f5f21a1f | regionOne | http://10.0.0.101:9292      | http://10.0.0.101:9292      | http://10.0.0.101:9292       | 34701cc1acd44e22b8ae8a9be069d0a7 |

| 735a100c58cf4335adc00bd4267a1387 | regionOne | http://10.0.0.101:5000/v2.0 | http://10.0.0.101:5000/v2.0 | http://10.0.0.101:35357/v2.0 | ea33049270ad4450ac789e9236774878 |

+----------------------------------+-----------+-----------------------------+-----------------------------+------------------------------+----------------------------------+

[root@linux-node1 ~]# cd /var/log/glance/

[root@linux-node1 glance]# chown -R glance:glance *

[root@linux-node1 glance]# /etc/init.d/openstack-glance-api start

Starting openstack-glance-api: [ OK ]

[root@linux-node1 ~]# chkconfig openstack-glance-api on

[root@linux-node1 glance]# /etc/init.d/openstack-glance-registry start

Starting openstack-glance-registry: [ OK ]

[root@linux-node1 ~]# chkconfig openstack-glance-registry on

[root@linux-node1 glance]# netstat -lntup|egrep '9191|9292'

tcp 0 0 0.0.0.0:9191 0.0.0.0:* LISTEN 5252/python ## glance-registry port

tcp 0 0 0.0.0.0:9292 0.0.0.0:* LISTEN 5219/python ## glance-api port

[root@linux-node1 ~]# glance image-list ## an empty list with no error means Glance is working

+----+------+-------------+------------------+------+--------+

| ID | Name | Disk Format | Container Format | Size | Status |

+----+------+-------------+------------------+------+--------+

+----+------+-------------+------------------+------+--------+

[root@linux-node1 ~]# rz cirros-0.3.0-x86_64-disk.img

[root@linux-node1 ~]# glance image-create --name "cirros-0.3.0-x86_64" --disk-format qcow2 --container-format bare --is-public True --progress < cirros-0.3.0-x86_64-disk.img

[=============================>] 100%

+--------------------+------------------------------------------+

| Property | Value |

+--------------------+------------------------------------------+

| checksum | 50bdc35edb03a38d91b1b071afb20a3c |

| container_format | bare |

| created_at | 2018-06-29T13:14:54 |

| deleted | False |

| deleted_at | None |

| disk_format | qcow2 |

| id | 38e7040a-2f18-44e1-acbc-24a7926e448a |

| is_public | True |

| min_disk | 0 |

| min_ram | 0 |

| name | cirros-0.3.0-x86_64 |

| owner | 058b14b50b374f69ad1d1d535eb70eeb |

| protected | False |

| size | 9761280 |

| status | active |

| updated_at | 2018-06-29T13:14:55 |

| virtual_size | None |

+--------------------+------------------------------------------+

[root@linux-node1 ~]# ll /var/lib/glance/images/ ## images are stored under /var/lib/glance/images/ by default

total 9536

-rw-r----- 1 glance glance 9761280 Jun 29 21:14 38e7040a-2f18-44e1-acbc-24a7926e448a

[root@linux-node1 ~]# glance image-list

+----------------------------------------+------------------------------+------------------+----------------------+---------+--------+

| ID | Name | Disk Format | Container Format | Size | Status |

+----------------------------------------+------------------------------+------------------+----------------------+---------+--------+

| 38e7040a-2f18-44e1-acbc-24a7926e448a | cirros-0.3.0-x86_64 | qcow2 | bare | 9761280 | active |

+----------------------------------------+------------------------------+------------------+----------------------+---------+--------+
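The `checksum` property that Glance reports is an MD5 digest of the image data, so the stored copy can be compared with it before the local upload file is removed. A minimal sketch, assuming `md5sum` from coreutils is available; `md5_matches` is a hypothetical name:

```shell
# Hypothetical helper: succeed if the MD5 digest of FILE equals EXPECTED.
md5_matches() {
    # $1: file, $2: expected hex digest
    actual=$(md5sum "$1" | awk '{print $1}')
    [ "$actual" = "$2" ]
}
```

With the values from the output above, the call would be `md5_matches /var/lib/glance/images/38e7040a-2f18-44e1-acbc-24a7926e448a 50bdc35edb03a38d91b1b071afb20a3c`.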

[root@linux-node1 ~]# rm -f cirros-0.3.0-x86_64-disk.img

3.3. Compute Services (Nova)

3.3.1. Nova controller node installation (Install Compute controller services)

The controller node needs every Nova service except nova-compute

[root@linux-node1 ~]# yum install -y openstack-nova-api openstack-nova-cert openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler python-novaclient

[root@linux-node1 ~]# vim /etc/nova/nova.conf

connection=mysql://nova:nova@10.0.0.101/nova

[root@linux-node1 ~]# nova-manage db sync

[root@linux-node1 ~]# mysql -e "use nova;show tables" ## if tables are listed, the sync succeeded

[root@linux-node1 ~]# vim /etc/nova/nova.conf

rabbit_host=10.0.0.101

rabbit_port=5672

rabbit_use_ssl=false

rabbit_userid=guest

rabbit_password=guest

rpc_backend=rabbit

[root@linux-node1 ~]# source keystone-admin

[root@linux-node1 ~]# keystone user-create --name=nova --pass=nova

+-----------+-------------------------------------+

| Property | Value |

+-----------+-------------------------------------+

| email | |

| enabled | True |

| id | 0e2c33bcbc10467b87efe4300b7d02b6 |

| name | nova |

| username | nova |

+-----------+-------------------------------------+

[root@linux-node1 ~]# keystone user-role-add --user=nova --tenant=service --role=admin

[root@linux-node1 ~]# vim /etc/nova/nova.conf

auth_strategy=keystone

auth_host=10.0.0.101

auth_port=35357

auth_protocol=http

auth_uri=http://10.0.0.101:5000

auth_version=v2.0

admin_user=nova

admin_password=nova

admin_tenant_name=service

[root@linux-node1 ~]# vim /etc/nova/nova.conf

novncproxy_base_url=http://10.0.0.101:6080/vnc_auto.html

vncserver_listen=0.0.0.0

vncserver_proxyclient_address=10.0.0.101

vnc_enabled=true

vnc_keymap=en-us

my_ip=10.0.0.101

glance_host=$my_ip

lock_path=/var/lib/nova/tmp

state_path=/var/lib/nova

instances_path=$state_path/instances

compute_driver=libvirt.LibvirtDriver

[root@linux-node1 ~]# keystone service-create --name=nova --type=compute

+----------------+-------------------------------------+

| Property | Value |

+----------------+-------------------------------------+

| description | |

| enabled | True |

| id | 3825f34e11de45399cf82f9a1c56b5c5 |

| name | nova |

| type | compute |

+----------------+-------------------------------------+

[root@linux-node1 ~]# keystone endpoint-create \

--service-id=$(keystone service-list|awk '/ compute /{print $2}') \

--publicurl=http://10.0.0.101:8774/v2/%\(tenant_id\)s \

--internalurl=http://10.0.0.101:8774/v2/%\(tenant_id\)s \

--adminurl=http://10.0.0.101:8774/v2/%\(tenant_id\)s

+----------------+-----------------------------------------------+

| Property | Value |

+----------------+-----------------------------------------------+

| adminurl | http://10.0.0.101:8774/v2/%(tenant_id)s |

| id | 4821c43cdbcb4a8c9e202017ad056437 |

| internalurl | http://10.0.0.101:8774/v2/%(tenant_id)s |

| publicurl | http://10.0.0.101:8774/v2/%(tenant_id)s |

| region | regionOne |

| service_id | 3825f34e11de45399cf82f9a1c56b5c5 |

+----------------+-----------------------------------------------+

[root@linux-node1 ~]# for i in {api,cert,conductor,consoleauth,novncproxy,scheduler};do service openstack-nova-"$i" start;done

Starting openstack-nova-api: [ OK ]

Starting openstack-nova-cert: [ OK ]

Starting openstack-nova-conductor: [ OK ]

Starting openstack-nova-consoleauth: [ OK ]

Starting openstack-nova-novncproxy: [ OK ]

Starting openstack-nova-scheduler: [ OK ]

[root@linux-node1 ~]# for i in {api,cert,conductor,consoleauth,novncproxy,scheduler};do chkconfig openstack-nova-"$i" on;done
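The two loops above run the same list of services through `service` and `chkconfig`. A small wrapper keeps the list in one place and allows a dry run. This is a sketch: `nova_ctl` and the `RUN` variable are hypothetical, and unlike the loops above it stops at the first command that fails.

```shell
# Hypothetical helper: run "service openstack-nova-NAME ACTION" for each NAME.
# Setting RUN=echo prints the arguments instead of calling service.
nova_ctl() {
    action=$1; shift
    for name in "$@"; do
        ${RUN:-service} "openstack-nova-$name" "$action" || return 1
    done
}
```

For example, `RUN=echo nova_ctl restart api conductor scheduler` previews the calls, and `nova_ctl start api cert conductor consoleauth novncproxy scheduler` performs them.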

[root@linux-node1 ~]# nova host-list ## verify that the installation succeeded

+-------------+--------------+-------------+

| host_name | service | zone |

+-------------+--------------+-------------+

| linux-node1 | conductor | internal |

| linux-node1 | consoleauth | internal |

| linux-node1 | cert | internal |

| linux-node1 | scheduler | internal |

+-------------+--------------+-------------+

[root@linux-node1 ~]# nova flavor-list ## verify that the installation succeeded

+----+---------------+------------+-------+---------+-------+-------+-------------+-----------+

| ID | Name | Memory_MB | Disk | Ephemeral | Swap | VCPUs | RXTX_Factor | Is_Public |

+----+---------------+------------+-------+---------+-------+-------+-------------+-----------+

| 1 | m1.tiny | 512 | 1 | 0 | | 1 | 1.0 | True |

| 2 | m1.small | 2048 | 20 | 0 | | 1 | 1.0 | True |

| 3 | m1.medium | 4096 | 40 | 0 | | 2 | 1.0 | True |

| 4 | m1.large | 8192 | 80 | 0 | | 4 | 1.0 | True |

| 5 | m1.xlarge | 16384 | 160 | 0 | | 8 | 1.0 | True |

+----+---------------+------------+-------+---------+-------+-------+-------------+-----------+

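3.3.2. Nova compute node installation (Configure a compute node)

nova-compute: normally runs on the compute nodes; it receives requests through the message queue and manages the VM life cycle

nova-compute: manages KVM through libvirt, Xen through XenAPI, and so on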

[root@linux-node2 ~]# yum install -y qemu-kvm libvirt openstack-nova-compute python-novaclient

On linux-node1, copy the Nova configuration file to linux-node2 with scp

[root@linux-node1 ~]# scp /etc/nova/nova.conf root@10.0.0.102:/etc/nova

[root@linux-node2 ~]# vim /etc/nova/nova.conf

vncserver_proxyclient_address=10.0.0.102
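The only value that changes on the compute node is its own address. Since both nodes are listed in /etc/hosts, the address could be looked up by host name instead of typed by hand. A minimal sketch; `host_ip` is a hypothetical name:

```shell
# Hypothetical helper: print the first address that a hosts-format file
# maps to NAME. usage: host_ip NAME [HOSTS_FILE]
host_ip() {
    awk -v name="$1" '
        $1 !~ /^#/ {
            for (i = 2; i <= NF; i++)
                if ($i == name) { print $1; exit }
        }
    ' "${2:-/etc/hosts}"
}
```

On linux-node2, `host_ip linux-node2` should print the address used for `vncserver_proxyclient_address` above.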

[root@linux-node2 ~]# /etc/init.d/libvirtd start

Starting libvirtd daemon:

[root@linux-node2 ~]# /etc/init.d/messagebus start

Starting system message bus:

[root@linux-node2 ~]# /etc/init.d/openstack-nova-compute start

Starting openstack-nova-compute: [ OK ]

[root@linux-node2 ~]# chkconfig libvirtd on

[root@linux-node2 ~]# chkconfig messagebus on

[root@linux-node2 ~]# chkconfig openstack-nova-compute on

On linux-node1, verify that the compute node has come online

[root@linux-node1 ~]# nova host-list

+-------------+---------------+----------+

| host_name | service | zone |

+-------------+---------------+----------+

| linux-node1 | conductor | internal |

| linux-node1 | consoleauth | internal |

| linux-node1 | cert | internal |

| linux-node1 | scheduler | internal |

| linux-node2 | compute | nova |

+-------------+---------------+----------+

[root@linux-node1 ~]# nova service-list

+-------------------+-------------+-----------+---------+------+---------------------------+-----------------+

| Binary | Host | Zone | Status | State | Updated_at | Disabled Reason |

+-------------------+-------------+-----------+---------+------+---------------------------+-----------------+

| nova-conductor | linux-node1 | internal | enabled | up | 2018-06-30T09:37:52.000000 | - |

| nova-consoleauth | linux-node1 | internal | enabled | up | 2018-06-30T09:37:52.000000 | - |

| nova-cert | linux-node1 | internal | enabled | up | 2018-06-30T09:37:52.000000 | - |

| nova-scheduler | linux-node1 | internal | enabled | up | 2018-06-30T09:37:52.000000 | - |

| nova-compute | linux-node2 | nova | enabled | up | 2018-06-30T09:37:50.000000 | - |

+-------------------+-------------+-----------+---------+------+---------------------------+-----------------+
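For scripted checks, the State column of this table can be filtered so that only services that are not `up` are reported. A sketch that reads the `nova service-list` table from standard input and assumes the column order shown above; `not_up_services` is a hypothetical name:

```shell
# Hypothetical helper: read a `nova service-list` table on stdin and print
# BINARY@HOST for every row whose State column is not "up".
not_up_services() {
    awk -F'|' '
        NF >= 7 {
            binary = $2; host = $3; state = $6
            gsub(/^[ \t]+|[ \t]+$/, "", binary)
            gsub(/^[ \t]+|[ \t]+$/, "", host)
            gsub(/^[ \t]+|[ \t]+$/, "", state)
            if (binary != "Binary" && state != "up") print binary "@" host
        }
    '
}
```

For example, `nova service-list | not_up_services` prints nothing when every service in the table is up.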

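3.4. Networking (Neutron)

Nova-Network: originally supported only Linux bridges;

Quantum: added support for VXLAN and GRE; because the name Quantum clashed with a company's name, the project was renamed Neutron.

3.4.1. On linux-node1: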

[root@linux-node1 ~]# yum install -y openstack-neutron openstack-neutron-ml2 python-neutronclient openstack-neutron-linuxbridge

[root@linux-node1 ~]# vim /etc/neutron/neutron.conf

connection = mysql://neutron:neutron@10.0.0.101:3306/neutron

[root@linux-node1 ~]# source keystone-admin

[root@linux-node1 ~]# keystone user-create --name neutron --pass neutron

+-------------+------------------------------------+

| Property | Value |

+-------------+------------------------------------+

| email | |

| enabled | True |

| id | cc572f0a0e4c422781955e510b5f1462 |

| name | neutron |

| username | neutron |

+-------------+------------------------------------+

[root@linux-node1 ~]# keystone user-role-add --user neutron --tenant service --role admin

[root@linux-node1 ~]# vim /etc/neutron/neutron.conf

debug = true

state_path = /var/lib/neutron

lock_path = $state_path/lock

core_plugin = ml2

service_plugins = router,firewall,lbaas

api_paste_config = /usr/share/neutron/api-paste.ini

auth_strategy = keystone

rabbit_host = 10.0.0.101

rabbit_password = guest

rabbit_port = 5672

rabbit_userid = guest

rabbit_virtual_host = /

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

nova_url = http://10.0.0.101:8774/v2

nova_admin_username = nova

nova_admin_tenant_id = b8d9cf30de5c4c908e26b7eb5c89f2f8 ## the tenant id can be obtained with keystone tenant-list

nova_admin_password = nova

nova_admin_auth_url = http://10.0.0.101:35357/v2.0

root_helper = sudo neutron-rootwrap /etc/neutron/rootwrap.conf

auth_host = 10.0.0.101

auth_port = 35357

auth_protocol = http

admin_tenant_name = service

admin_user = neutron

admin_password = neutron

[root@linux-node1 ~]# vim /etc/neutron/plugins/ml2/ml2_conf.ini

type_drivers = flat,vlan,gre,vxlan

tenant_network_types = flat,vlan,gre,vxlan

mechanism_drivers = linuxbridge,openvswitch

flat_networks = physnet1

enable_security_group = True

[root@linux-node1 ~]# vim /etc/neutron/plugins/linuxbridge/linuxbridge_conf.ini

network_vlan_ranges = physnet1

physical_interface_mappings = physnet1:eth0

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

enable_security_group = True

[root@linux-node1 ~]# vim /etc/nova/nova.conf

network_api_class=nova.network.neutronv2.api.API

linuxnet_interface_driver=nova.network.linux_net.LinuxBridgeInterfaceDriver

neutron_url=http://10.0.0.101:9696

neutron_admin_username=neutron

neutron_admin_password=neutron

neutron_admin_tenant_id=b8d9cf30de5c4c908e26b7eb5c89f2f8

neutron_admin_tenant_name=service

neutron_admin_auth_url=http://10.0.0.101:5000/v2.0

neutron_auth_strategy=keystone

security_group_api=neutron

firewall_driver=nova.virt.firewall.NoopFirewallDriver

vif_driver=nova.virt.libvirt.vif.NeutronLinuxBridgeVIFDriver
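
nova.conf and neutron.conf repeat several values that must agree with each other (the service tenant id, the passwords, the URLs). The sketch below reads an option back from a file after editing so the two sides can be compared. The helper conf_get is hypothetical; it ignores section headers and simply prints the value of every uncommented line whose option name matches.

```shell
# Hypothetical helper: print the value of option $2 from config file $1.
conf_get() {
    awk -v key="$2" '
        /^[ \t]*[#;]/ { next }               # skip comment lines
        index($0, "=") > 0 {
            pos = index($0, "=")
            k = substr($0, 1, pos - 1)       # text before the first "="
            v = substr($0, pos + 1)          # text after the first "="
            gsub(/^[ \t]+|[ \t]+$/, "", k)   # trim the option name
            gsub(/^[ \t]+|[ \t]+$/, "", v)   # trim the value
            if (k == key) print v
        }' "$1"
}

# Usage on the controller:
#   [ "$(conf_get /etc/nova/nova.conf neutron_admin_tenant_id)" = \
#     "$(conf_get /etc/neutron/neutron.conf nova_admin_tenant_id)" ] \
#     && echo "tenant ids match"
```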

[root@linux-node1 ~]# for i in {api,conductor,scheduler};do service openstack-nova-"$i" restart;done

[root@linux-node1 ~]# keystone service-create --name neutron --type network

+-------------+------------------------------------+

| Property | Value |

+-------------+------------------------------------+

| description | |

| enabled | True |

| id | fd5b4252c2e34a4dbe5255a50c84d61f |

| name | neutron |

| type | network |

+-------------+------------------------------------+

[root@linux-node1 ~]# keystone endpoint-create \

--service-id=$(keystone service-list |awk '/ network / {print $2}') \

--publicurl=http://10.0.0.101:9696 \

--internalurl=http://10.0.0.101:9696 \

--adminurl=http://10.0.0.101:9696

+----------------+---------------------------------------------+

| Property | Value |

+----------------+---------------------------------------------+

| adminurl | http://10.0.0.101:9696 |

| id | 8faadda104854407beffc2ba76a35c48 |

| internalurl | http://10.0.0.101:9696 |

| publicurl | http://10.0.0.101:9696 |

| region | regionOne |

| service_id | fd5b4252c2e34a4dbe5255a50c84d61f |

+----------------+---------------------------------------------+

[root@linux-node1 ~]# keystone service-list

+----------------------------------+------------+------------+--------------------+

| id | name | type | description |

+----------------------------------+------------+------------+--------------------+

| 34701cc1acd44e22b8ae8a9be069d0a7 | glance | image | |

| ea33049270ad4450ac789e9236774878 | keystone | identity | Openstack Identity |

| fd5b4252c2e34a4dbe5255a50c84d61f | neutron | network | |

| 3825f34e11de45399cf82f9a1c56b5c5 | nova | compute | |

+----------------------------------+------------+------------+--------------------+

[root@linux-node1 ~]# keystone endpoint-list

+------------------------------------------------------+-----------------+----------------------------------------------------------------+-------------------------------------------------------------+-----------------------------------------------------------------+---------------------------------------------------+

| id | region | publicurl | internalurl | adminurl | service_id |

+------------------------------------------------------+-----------------+----------------------------------------------------------------+-------------------------------------------------------------+-----------------------------------------------------------------+---------------------------------------------------+

| 4821c43cdbcb4a8c9e202017ad056437 | regionOne | http://10.0.0.101:8774/v2/%(tenant_id)s | http://10.0.0.101:8774/v2/%(tenant_id)s | http://10.0.0.101:8774/v2/%(tenant_id)s | 3825f34e11de45399cf82f9a1c56b5c5 |

| 5f7f06a3b4874deda19caf67f5f21a1f | regionOne | http://10.0.0.101:9292 | http://10.0.0.101:9292 | http://10.0.0.101:9292 | 34701cc1acd44e22b8ae8a9be069d0a7 |

| 735a100c58cf4335adc00bd4267a1387 | regionOne | http://10.0.0.101:5000/v2.0 | http://10.0.0.101:5000/v2.0 | http://10.0.0.101:35357/v2.0 | ea33049270ad4450ac789e9236774878 |

| 8faadda104854407beffc2ba76a35c48 | regionOne | http://10.0.0.101:9696 | http://10.0.0.101:9696 | http://10.0.0.101:9696 | fd5b4252c2e34a4dbe5255a50c84d61f |

+------------------------------------------------------+-----------------+----------------------------------------------------------------+-------------------------------------------------------------+-------------------------------------------------------------------+-----------------------------------------------------+

[root@linux-node1 ~]# neutron-server --config-file=/etc/neutron/neutron.conf --config-file=/etc/neutron/plugins/ml2/ml2_conf.ini --config-file=/etc/neutron/plugins/linuxbridge/linuxbridge_conf.ini

##Optionally run neutron-server in the foreground like this first to check that it starts successfully
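
While neutron-server runs in the foreground, a second terminal can confirm that it is really listening on port 9696, the port used for the endpoint URLs above. This is a small sketch; port_listening is a hypothetical helper that parses netstat -lnt output and assumes the usual column order (local address in the fourth column, state in the sixth).

```shell
# Hypothetical helper: succeed if `netstat -lnt` output on stdin shows a
# TCP listener on port $1.
port_listening() {
    awk -v port="$1" '
        $6 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
        END { exit found ? 0 : 1 }'
}

# Usage from a second terminal:
#   netstat -lnt | port_listening 9696 && echo "neutron-server is listening"
```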

In both /etc/init.d/neutron-server and /etc/init.d/neutron-linuxbridge-agent, change the configs list to the following, so that both services load the same three files:

configs=(

    "/etc/neutron/neutron.conf" \

    "/etc/neutron/plugins/ml2/ml2_conf.ini" \

    "/etc/neutron/plugins/linuxbridge/linuxbridge_conf.ini" \

)
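
The point of the configs list is that the init script ends up starting the daemon with the same --config-file options as the manual test command shown earlier. The sketch below illustrates that mapping only; build_config_args is a hypothetical function and is not copied from the packaged init script.

```shell
# Hypothetical illustration: turn a list of config paths into the
# --config-file options passed to the neutron daemons.
build_config_args() {
    args=""
    for f in "$@"; do
        # Append one option per file, separated by single spaces.
        args="${args:+$args }--config-file=$f"
    done
    printf '%s\n' "$args"
}

# Usage:
#   build_config_args /etc/neutron/neutron.conf \
#       /etc/neutron/plugins/ml2/ml2_conf.ini \
#       /etc/neutron/plugins/linuxbridge/linuxbridge_conf.ini
```

If the printed options differ from the ones used in the foreground test, the service started by the init script is not reading the files that were just edited.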

[root@linux-node1 ~]# /etc/init.d/neutron-server start

Starting neutron: [ OK ]

[root@linux-node1 ~]# /etc/init.d/neutron-linuxbridge-agent start

Starting neutron-linuxbridge-agent: [ OK ]

[root@linux-node1 ~]# neutron agent-list

+-------------------------------------+--------------------+--------------+----------+----------------+

| id | agent_type | host | alive | admin_state_up |

+-------------------------------------+--------------------+--------------+----------+----------------+

| dc5b1b78-c0fb-41ef-aad3-c17fc050aa87 | Linux bridge agent | linux-node1 | :-) | True |

+-------------------------------------+--------------------+--------------+----------+----------------+

3.4.2、On linux-node2:

[root@linux-node2 ~]# yum install -y openstack-neutron openstack-neutron-ml2 python-neutronclient openstack-neutron-linuxbridge

On linux-node1, scp the Nova and Neutron configuration files (and the edited init scripts) to linux-node2:

[root@linux-node1 ~]# scp /etc/nova/nova.conf root@10.0.0.102:/etc/nova/

[root@linux-node1 ~]# scp /etc/neutron/neutron.conf root@10.0.0.102:/etc/neutron/

[root@linux-node1 ~]# scp /etc/neutron/plugins/ml2/ml2_conf.ini root@10.0.0.102:/etc/neutron/plugins/ml2/

[root@linux-node1 ~]# scp /etc/neutron/plugins/linuxbridge/linuxbridge_conf.ini root@10.0.0.102:/etc/neutron/plugins/linuxbridge/

[root@linux-node1 ~]# scp /etc/init.d/neutron-* root@10.0.0.102:/etc/init.d/

[root@linux-node2 ~]# vim /etc/nova/nova.conf

vncserver_proxyclient_address=10.0.0.102

[root@linux-node2 ~]# /etc/init.d/openstack-nova-compute restart

[root@linux-node2 ~]# /etc/init.d/neutron-linuxbridge-agent start

Starting neutron-linuxbridge-agent: [ OK ]

[root@linux-node2 ~]# chkconfig neutron-linuxbridge-agent on

Verify on linux-node1 that everything registered successfully:

[root@linux-node1 ~]# nova host-list

+-------------+-------------+------------+

| host_name | service | zone |

+-------------+-------------+------------+

| linux-node1 | conductor | internal |

| linux-node1 | consoleauth | internal |

| linux-node1 | cert | internal |

| linux-node1 | scheduler | internal |

| linux-node2 | compute | nova |

+-------------+-------------+------------+

[root@linux-node1 ~]# neutron agent-list

+---------------------------------------+---------------------+-------------+----------+-----------------------+

| id | agent_type | host | alive | admin_state_up |

+---------------------------------------+---------------------+-------------+----------+-----------------------+

| 4c3d6c29-14e9-4622-ab14-d0775c9d60e8 | Linux bridge agent | linux-node2 | :-) | True |

| dc5b1b78-c0fb-41ef-aad3-c17fc050aa87 | Linux bridge agent | linux-node1 | :-) | True |

+---------------------------------------+---------------------+-------------+----------+-----------------------+
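
Both agents should show :-) in the alive column. As a rough sketch, the check can be automated by printing the host of every agent row whose alive value is anything else. The helper dead_agents is hypothetical and assumes the five-column layout shown above.

```shell
# Hypothetical helper: read `neutron agent-list` output on stdin and print
# the host of every agent whose alive column is not ":-)".
dead_agents() {
    awk -F'|' '
        NF >= 6 {                            # border lines contain no "|"
            host = $4; alive = $5
            gsub(/^[ \t]+|[ \t]+$/, "", host)
            gsub(/^[ \t]+|[ \t]+$/, "", alive)
            if (host != "host" && alive != ":-)") print host
        }'
}

# Usage on the controller:
#   neutron agent-list | dead_agents
```

Empty output means every listed agent reported in as alive.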

3.5、Add the Dashboard(Horizon)

Horizon: provides a web management interface for OpenStack.

[root@linux-node1 ~]# yum install -y httpd mod_wsgi memcached python-memcached openstack-dashboard

The install failed with the following dependency errors:

Error: Package: python-django-horizon-2014.1.5-1.el6.noarch (icehouse)

Requires: Django14

Error: Package: python-django-openstack-auth-1.1.5-1.el6.noarch (icehouse)

Requires: Django14

Error: Package: python-django-appconf-0.5-3.el6.noarch (icehouse)

Requires: Django14

Error: Package: python-django-compressor-1.3-2.el6.noarch (icehouse)

Requires: Django

You could try using --skip-broken to work around the problem

You could try running: rpm -Va --nofiles --nodigest
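
The error text repeats the same missing dependency several times. A small sketch for reducing saved yum output to the distinct package names that are missing is shown below. The helper missing_requires is hypothetical; it only looks at the Requires: lines in the format shown above.

```shell
# Hypothetical helper: read yum error output on stdin and print each
# distinct missing dependency once.
missing_requires() {
    awk '$1 == "Requires:" { print $2 }' | sort -u
}

# Usage:
#   yum install -y openstack-dashboard 2>&1 | missing_requires
```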

[root@linux-node1 ~]# rz Django14-1.4.21-1.el6.noarch.rpm ##the rpm package was found online and then uploaded to the controller node

[root@linux-node1 ~]# rpm -ivh Django14-1.4.21-1.el6.noarch.rpm

warning: Django14-1.4.21-1.el6.noarch.rpm: Header V3 DSA/SHA1 Signature, key ID 96b71b07: NOKEY

Preparing... ########################################### [100%]

1:Django14 ########################################### [100%]

[root@linux-node1 ~]# yum install -y httpd mod_wsgi memcached python-memcached openstack-dashboard

Loaded plugins: fastestmirror, security

Setting up Install Process

[root@linux-node1 ~]# /etc/init.d/memcached start

Starting memcached: [ OK ]

[root@linux-node1 ~]# chkconfig memcached on

[root@linux-node1 ~]# vim /etc/openstack-dashboard/local_settings

ALLOWED_HOSTS = ['horizon.example.com', 'localhost','10.0.0.101']

CACHES = {

'default': {

'BACKEND' : 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION' : '127.0.0.1:11211',

}

}

OPENSTACK_HOST = "10.0.0.101" ##IP address of the Keystone host

[root@linux-node1 ~]# /etc/init.d/httpd start

[root@linux-node1 ~]# chkconfig httpd on

OpenStack can now be managed from a browser: http://10.0.0.101/dashboard

Create the network:

[root@linux-node1 ~]# keystone tenant-list ##the regular tenant demo is used as the example

+------------------------------------+-------------+--------------+

| id | name | enabled |

+------------------------------------+-------------+--------------+

| 058b14b50b374f69ad1d1d535eb70eeb | admin | True |

| 064e6442044c4ed58fb0954f12910599 | demo | True |

| b8d9cf30de5c4c908e26b7eb5c89f2f8 | service | True |

+------------------------------------+-------------+--------------+

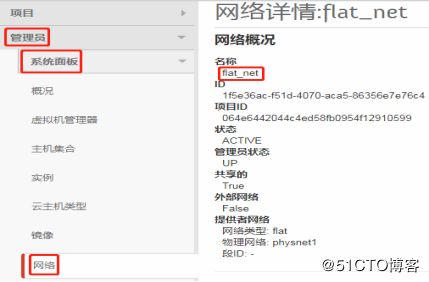

[root@linux-node1 ~]# neutron net-create --tenant-id 064e6442044c4ed58fb0954f12910599 flat_net --shared --provider:network_type flat --provider:physical_network physnet1

Created a new network:

+----------------------------------------+-------------------------------------------------------+

| Field | Value |

+----------------------------------------+-------------------------------------------------------+

| admin_state_up | True |

| id | 1f5e36ac-f51d-4070-aca5-86356e7e76c4 |

| name | flat_net |

| provider:network_type | flat |

| provider:physical_network | physnet1 |

| provider:segmentation_id | |

| shared | True |

| status | ACTIVE |

| subnets | |

| tenant_id | 064e6442044c4ed58fb0954f12910599 |

+----------------------------------------+-------------------------------------------------------+

[root@linux-node1 ~]# neutron net-list

+--------------------------------------------------------+------------+--------------+

| id | name | subnets |

+--------------------------------------------------------+------------+--------------+

| 1f5e36ac-f51d-4070-aca5-86356e7e76c4 | flat_net | |

+--------------------------------------------------------+------------+--------------+

Preparation for creating the subnet (look up the gateway and the DNS server):

[root@linux-node1 ~]# route -n

Destination Gateway Genmask Flags Metric Ref Use Iface

10.0.0.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

172.16.1.0 0.0.0.0 255.255.255.0 U 0 0 0 eth1

192.168.122.0 0.0.0.0 255.255.255.0 U 0 0 0 virbr0

169.254.0.0 0.0.0.0 255.255.0.0 U 1002 0 0 eth0

169.254.0.0 0.0.0.0 255.255.0.0 U 1003 0 0 eth1

0.0.0.0 10.0.0.2 0.0.0.0 UG 0 0 0 eth0

[root@linux-node1 ~]# cat /etc/resolv.conf ##DNS resolver address

nameserver 223.5.5.5
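
The two values needed for the subnet are the default gateway from route -n and the nameserver from /etc/resolv.conf. The sketch below pulls both out with awk. The helper names default_gateway and first_nameserver are hypothetical, and the subnet-create line in the comments is only one possible way to use the values if the subnet is created from the CLI; the subnet name flat_subnet and the CIDR 10.0.0.0/24 are assumptions based on eth0's network.

```shell
# Hypothetical helper: read `route -n` output on stdin and print the
# gateway of the first default route (destination 0.0.0.0, G flag set).
default_gateway() {
    awk '$1 == "0.0.0.0" && $4 ~ /G/ && !seen++ { print $2 }'
}

# Hypothetical helper: read resolv.conf on stdin and print the first
# nameserver address.
first_nameserver() {
    awk '$1 == "nameserver" && !seen++ { print $2 }'
}

# Usage on the controller (flat_subnet and the CIDR are assumptions):
#   gw=$(route -n | default_gateway)
#   dns=$(first_nameserver < /etc/resolv.conf)
#   neutron subnet-create flat_net 10.0.0.0/24 --name flat_subnet \
#       --gateway "$gw" --dns-nameserver "$dns"
```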

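Log out of the admin account and log in as demo.

Create a virtual machine:

Once the VM has been created successfully, check what changed on the compute node (linux-node2):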

[root@linux-node2 ~]# vim /etc/sysctl.conf

net.ipv4.ip_forward = 1

[root@linux-node2 ~]# sysctl -p

[root@linux-node2 ~]# ifconfig

brq1f5e36ac-f5 Link encap:Ethernet HWaddr 00:0C:29:61:F7:97 ##the bridge now carries eth0's IP address

inet addr:10.0.0.102 Bcast:10.0.0.255 Mask:255.255.255.0

inet6 addr: fe80::f027:4aff:fe52:631c/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:15766 errors:0 dropped:0 overruns:0 frame:0

TX packets:14993 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:2492994 (2.3 MiB) TX bytes:8339199 (7.9 MiB)

eth0 Link encap:Ethernet HWaddr 00:0C:29:61:F7:97 ##eth0 no longer has an IP address

inet6 addr: fe80::20c:29ff:fe61:f797/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:188425 errors:0 dropped:0 overruns:0 frame:0

TX packets:133889 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:124137812 (118.3 MiB) TX bytes:31074146 (29.6 MiB)

eth1 Link encap:Ethernet HWaddr 00:0C:29:61:F7:A1

inet addr:172.16.1.102 Bcast:172.16.1.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe61:f7a1/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:6 errors:0 dropped:0 overruns:0 frame:0

TX packets:13 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:360 (360.0 b) TX bytes:938 (938.0 b)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:178753 errors:0 dropped:0 overruns:0 frame:0

TX packets:178753 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:9384857 (8.9 MiB) TX bytes:9384857 (8.9 MiB)

tap003e633e-77 Link encap:Ethernet HWaddr FE:16:3E:47:56:8F

inet6 addr: fe80::fc16:3eff:fe47:568f/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:27 errors:0 dropped:0 overruns:0 frame:0

TX packets:4158 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:500

RX bytes:4302 (4.2 KiB) TX bytes:384259 (375.2 KiB)

virbr0 Link encap:Ethernet HWaddr 52:54:00:10:9D:8B

inet addr:192.168.122.1 Bcast:192.168.122.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 b) TX bytes:0 (0.0 b)

[root@linux-node2 ~]# brctl show

bridge name bridge id STP enabled interfaces

brq1f5e36ac-f5 8000.000c2961f797 no eth0

tap003e633e-77

virbr0 8000.525400109d8b yes virbr0-nic
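
In the output above the bridge name brq1f5e36ac-f5 is brq followed by the first 11 characters of the flat_net network id, and both eth0 and the VM's tap device are attached to it. The sketch below encodes that observation so it can be checked for any network. Both helper names are hypothetical, and the naming rule is inferred from this output rather than taken from the agent's documentation.

```shell
# Hypothetical helper: derive the expected bridge name from a network id,
# following the pattern visible in the output above.
bridge_name_for_net() {
    printf 'brq%s\n' "$(printf '%s' "$1" | cut -c1-11)"
}

# Hypothetical helper: read `brctl show` output on stdin and print the
# interfaces attached to bridge $1, one per line.
bridge_ports() {
    awk -v br="$1" '
        $1 == "bridge" && $2 == "name" { next }   # header line
        NF >= 3 { cur = $1; if (NF >= 4 && cur == br) print $4; next }
        NF == 1 && cur == br { print $1 }         # continuation line
    '
}

# Usage on the compute node:
#   brctl show | bridge_ports "$(bridge_name_for_net 1f5e36ac-f51d-4070-aca5-86356e7e76c4)"
```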

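Two small problems came up along the way. Here is how each was solved:

1、After the instance was created under the demo account, the dashboard reported "Unable to connect to Neutron". Searching online turned up the following:

On Icehouse, creating a VM can raise the "Unable to connect to Neutron." error even though the VM itself is created successfully. This is a known bug and can be fixed by patching the source. The other possibility is that Neutron really is unreachable, in which case check that the service is running and listening on port 9696.

For a yum install the file is /usr/share/openstack-dashboard/openstack_dashboard/api/neutron.py

For a source install the file is /usr/lib/python2.6/site-packages/openstack_dashboard/api/neutron.py

The FloatingIpManager class is missing an is_supported method. That is the bug, and it can be fixed by adding the method by hand.

    def is_simple_associate_supported(self):

        # NOTE: There are two reason that simple association support

        # needs more considerations. (1) Neutron does not support the

        # default floating IP pool at the moment. It can be avoided

        # in case where only one floating IP pool exists.

        # (2) Neutron floating IP is associated with each VIF and

        # we need to check whether such VIF is only one for an instance

        # to enable simple association support.

        return False

    # Add the method below at the very bottom of this class; mind the indentation.

    def is_supported(self):

        network_config = getattr(settings, 'OPENSTACK_NEUTRON_NETWORK', {})

        return network_config.get('enable_router', True)

After the change, Apache has to be restarted for it to take effect: /etc/init.d/httpd restart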
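
Before editing neutron.py it is worth checking whether the method is already there, so that the patch is not applied twice. The following is a minimal sketch; needs_is_supported_patch is a hypothetical helper, and it searches the whole file rather than only the FloatingIpManager class.

```shell
# Hypothetical helper: succeed (exit 0) when file $1 does NOT yet contain
# a "def is_supported(self)" method definition.
needs_is_supported_patch() {
    ! grep -q '^[[:space:]]*def is_supported(self)' "$1"
}

# Usage (path for a yum install, as given above):
#   f=/usr/share/openstack-dashboard/openstack_dashboard/api/neutron.py
#   needs_is_supported_patch "$f" && echo "is_supported is missing in $f"
```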

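2、On OpenStack Icehouse, openstack-nova-novncproxy fails to start, so the console cannot be reached and port 6080 is not listening

First check the status of nova-novncproxy:

/etc/init.d/openstack-nova-novncproxy status

openstack-nova-novncproxy dead but pid file exists

Then run it with debug output to see the cause: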

[root@controller ~]# /usr/bin/nova-novncproxy --debug

Traceback (most recent call last):

File "/usr/bin/nova-novncproxy", line 10, in <module>

sys.exit(main())

File "/usr/lib/python2.6/site-packages/nova/cmd/novncproxy.py", line 87, in main

wrap_cmd=None)

File "/usr/lib/python2.6/site-packages/nova/console/websocketproxy.py", line 47, in __init__

ssl_target=None, *args, **kwargs)

File "/usr/lib/python2.6/site-packages/websockify/websocketproxy.py", line 231, in __init__

websocket.WebSocketServer.__init__(self, RequestHandlerClass, *args, **kwargs)

TypeError: __init__() got an unexpected keyword argument 'no_parent'

Cause: the installed websockify version is too old; upgrading websockify fixes it.

Fix: pip install websockify==0.5.1
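
To decide whether the upgrade is needed at all, the installed version can be compared with 0.5.1 first. The sketch below compares dotted version numbers field by field. The helper version_lt is hypothetical, and the usage lines assume that the installed pip supports pip show.

```shell
# Hypothetical helper: succeed (exit 0) if dotted version $1 is strictly
# lower than dotted version $2; missing fields count as 0.
version_lt() {
    awk -v a="$1" -v b="$2" 'BEGIN {
        n = split(a, x, "[.]"); m = split(b, y, "[.]")
        max = (n > m) ? n : m
        for (i = 1; i <= max; i++) {
            xi = x[i] + 0; yi = y[i] + 0     # compare fields numerically
            if (xi < yi) exit 0
            if (xi > yi) exit 1
        }
        exit 1                               # versions are equal
    }'
}

# Usage (assumes `pip show` is available):
#   installed=$(pip show websockify | awk '/^Version:/ { print $2 }')
#   version_lt "$installed" 0.5.1 && pip install websockify==0.5.1
```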

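Final result:

Note: whether or not this solves the problem you ran into, feedback and discussion are welcome.

Image: cirros-0.3.0-x86_64-disk.img

Django RPM package: Django14-1.4.21-1.el6.noarch.rpm

Official installation guide: OpenStack-install-guide-yum-icehouse.pdf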
