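Original work. Reposting is permitted, provided that the original source, the author information, and this notice are indicated with a hyperlink; otherwise legal liability will be pursued. http://sohudrgon.blog.51cto.com/3088108/1599984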

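I. Introduction to RHCS:

RHCS stands for Red Hat Cluster Suite.

RHCS is a full-featured clustering solution. It provides a cluster architecture that covers everything from front-end application access to back-end data storage, so front-end applications keep serving reliably and back-end data stays safe.

Components of an RHCS cluster:

RHCS is a cluster suite made up of the following parts:

1. Cluster infrastructure manager: the base of RHCS, providing the basic cluster functions. It includes the distributed cluster manager (CMAN), the distributed lock manager (DLM), the cluster configuration system (CCS), and fence devices (FENCE).

2. rgmanager, the high-availability service manager

It monitors the services on each node and handles failover: when a service fails on one node, it is moved to another healthy node.

3. Cluster management tools

RHCS can be configured with system-config-cluster, a graphical tool that makes configuration simple and clear.

4. Load balancing

RHCS uses LVS to balance load across services. LVS is implemented inside the kernel, so it performs well.

5. GFSv2

A cluster file system developed by Red Hat. GFS lets multiple nodes read and write the same disk partition at the same time, which centralizes data management and removes the need to synchronize or copy data. GFS cannot stand alone, however: installing it requires the underlying RHCS components.

6. Cluster Logical Volume Manager

CLVM is an extension of LVM that lets the machines in a cluster manage shared storage with LVM. Cluster support must be enabled in LVM before it can be used.

7. iSCSI

iSCSI is a protocol that carries SCSI commands over IP networks, using TCP/IP as the transport. It follows a client/server model: data is first wrapped in a SCSI message, then in an iSCSI message, and finally in TCP/IP packets for transmission. iSCSI runs over TCP and listens on port 3260. An iSCSI session stays established until it is explicitly ended. RHCS can use iSCSI to export and allocate shared storage.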

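How it works:

1. Distributed cluster manager

Manages cluster membership and tracks the running state of each member.

2. Lock management

Every node runs the DLM in the background. When a user modifies a piece of metadata on one node, the other nodes are notified and can only read that metadata.

3. Configuration file management

The Cluster Configuration System (CCS) manages the cluster configuration file and keeps it synchronized.

Every node runs the CCS daemon. When it detects a change to the configuration file, it immediately propagates the change to the other nodes.

/etc/cluster/cluster.conf

4. Fence devices

When the active host misbehaves, the standby host calls the fence device, which restarts the faulty host. Once the fence operation succeeds, the device reports back to the standby host.

On receiving that message, the standby host takes over the services and resources of the failed host.

Conga cluster management software: this tool ships with recent RHEL releases and makes it easy to configure and manage server clusters and storage arrays. Conga has two parts, luci and ricci. luci runs on the cluster management machine, ricci runs on every cluster node, and cluster management and configuration happen through communication between the two. We use Conga to manage the RHCS cluster here.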

II. Lab environment

The lab is built on CentOS 6.6 64-bit. Node IP addresses and software plan:

RHCS lab architecture diagram:

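III. Preparation

1. The clocks of all nodes must be synchronized; NTP is recommended.

2. Nodes must be able to reach each other by host name.

Using the hosts file is recommended.

The name used for communication must match what "uname -n" prints on that node.

3. We use three cluster nodes here; a two-node cluster would additionally need a qdisk quorum device.

4. The root user on each node must be able to reach the other nodes over ssh with key-based, passwordless authentication.

5. The yum repositories must be configured as well.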
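Prerequisite 2 can be checked mechanically. The following is a minimal sketch, not part of RHCS: `has_host_entry` is a hypothetical helper that looks for a name in a hosts-format file (default `/etc/hosts`) and ignores comment lines.

```shell
#!/bin/sh
# has_host_entry NAME [FILE]
# Hypothetical helper: succeed if NAME appears as a host name or alias
# in a hosts-format file (default /etc/hosts).
has_host_entry() {
    name=$1
    file=${2:-/etc/hosts}
    awk -v n="$name" '
        /^[[:space:]]*#/ { next }                    # skip comment lines
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }                          # 0 = found, 1 = missing
    ' "$file"
}

# The name printed by "uname -n" is the one the cluster will use.
if has_host_entry "$(uname -n)"; then
    echo "OK: $(uname -n) is listed in /etc/hosts"
else
    echo "WARN: $(uname -n) is missing from /etc/hosts"
fi
```

Run on every node, this flags a node whose `uname -n` name has no matching hosts entry before the cluster is created.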

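Meeting the prerequisites:

1. Set up the management host:

Copy the management host's public key to every node:

    [root@nfs ~]# ssh-keygen
    [root@nfs ~]# for i in {1..4} ; do ssh-copy-id -i node$i ; done

The management host can now log in to the nodes without a password.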

2. Make host name resolution identical on all nodes.

Configure it on the management host first, then copy it to each node.

    [root@nfs ~]# cat /etc/hosts
    127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
    172.16.0.1  server.magelinux.com server
    172.16.31.12 nfs.stu31.com nfs
    172.16.31.10 node1.stu31.com node1
    172.16.31.11 node2.stu31.com node2
    172.16.31.13 node3.stu31.com node3
    172.16.31.14 node4.stu31.com node4

    [root@nfs ~]# for i in {1..4} ; do scp /etc/hosts root@node$i:/etc/hosts ; done
    hosts                                    100%  369     0.4KB/s   00:00
    hosts                                    100%  369     0.4KB/s   00:00
    hosts                                    100%  369     0.4KB/s   00:00
    hosts                                    100%  369     0.4KB/s   00:00

3. Set up passwordless ssh between the nodes

    [root@node1 ~]# ssh-keygen -t rsa -P ""
    [root@node1 ~]# for i in {1..4} ; do ssh-copy-id -i .ssh/id_rsa.pub root@node$i ; done

Repeat on each of the other nodes.

Finally, check that the clocks agree:

    [root@node1 ~]# date ; ssh node2 date ; ssh node3 date ; ssh node4 date
    Mon Jan  5 20:05:16 CST 2015
    Mon Jan  5 20:05:16 CST 2015
    Mon Jan  5 20:05:16 CST 2015
    Mon Jan  5 20:05:17 CST 2015

Or check the time from the management node nfs:

    [root@nfs ~]# for i in {1..4} ; do ssh node$i 'date' ; done
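Comparing the clocks by eye works for four nodes; a script can do the same comparison. A minimal sketch, assuming each node reports its time as epoch seconds (`date +%s`): `max_skew` is a hypothetical helper that prints the difference between the largest and smallest timestamp it reads. The sample values are made up and differ by one second.

```shell
#!/bin/sh
# max_skew: read one epoch timestamp per line on stdin and print
# the difference between the largest and the smallest value.
max_skew() {
    awk 'NR == 1 { min = $1; max = $1 }
         { if ($1 < min) min = $1; if ($1 > max) max = $1 }
         END { print max - min }'
}

# Against the real nodes (assumes the passwordless ssh set up earlier):
#   for i in 1 2 3 4; do ssh node$i 'date +%s'; done | max_skew
# Illustrative values only:
printf '%s\n' 1420459516 1420459516 1420459516 1420459517 | max_skew
```

Because the ssh calls run one after another, the result includes connection latency, so a skew of a second or two does not necessarily mean the clocks disagree.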

The yum repositories for all nodes are served by a dedicated server:

    [root@node1 ~]# cat /etc/yum.repos.d/centos6.6.repo
    [base]
    name=CentOS $releasever $basearch on local server 172.16.0.1
    baseurl=http://172.16.0.1/cobbler/ks_mirror/CentOS-6.6-$basearch/
    gpgcheck=0

    [extra]
    name=CentOS $releasever $basearch extras
    baseurl=http://172.16.0.1/centos/$releasever/extras/$basearch/
    gpgcheck=0

    [epel]
    name=Fedora EPEL for CentOS$releasever $basearch on local server 172.16.0.1
    baseurl=http://172.16.0.1/fedora-epel/$releasever/$basearch/
    gpgcheck=0

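IV. Installing and configuring Conga

Install luci and ricci (luci on the management host, ricci on the nodes).

luci is the web-based server side of Conga. Its web interface makes it easy to manage the whole RHCS cluster, and every action writes the corresponding configuration to /etc/cluster/cluster.conf.

1. Install luci on the management host

    [root@nfs ~]# yum install -y luci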

Start luci once it is installed:

    [root@nfs ~]# /etc/rc.d/init.d/luci start
    Adding following auto-detected host IDs (IP addresses/domain names), corresponding to `nfs.stu31.com' address, to the configuration of self-managed certificate `/var/lib/luci/etc/cacert.config' (you can change them by editing `/var/lib/luci/etc/cacert.config', removing the generated certificate `/var/lib/luci/certs/host.pem' and restarting luci):
            (none suitable found, you can still do it manually as mentioned above)
    Generating a 2048 bit RSA private key
    writing new private key to '/var/lib/luci/certs/host.pem'
    Starting saslauthd:                                        [  OK  ]
    Start luci...                                              [  OK  ]
    Point your web browser to https://nfs.stu31.com:8084 (or equivalent) to access luci

Output like this means luci is configured correctly. Note: installing luci pulls in many Python packages. Prefer the packages shipped on the installation media, otherwise luci may fail to start.

2. Install ricci on the other nodes; running the installation from the management host is simpler.

    [root@nfs ~]# for i in {1..4}; do ssh node$i "yum install ricci -y" ; done

After installation, enable and start the ricci service:

    [root@nfs ~]# for i in {1..4}; do ssh node$i "chkconfig ricci on && /etc/rc.d/init.d/ricci start" ; done

Set a password for the ricci user on every node; the Conga web page asks for it when nodes are added.

    [root@nfs ~]# for i in {1..4}; do ssh node$i "echo 'oracle' | passwd ricci --stdin" ; done

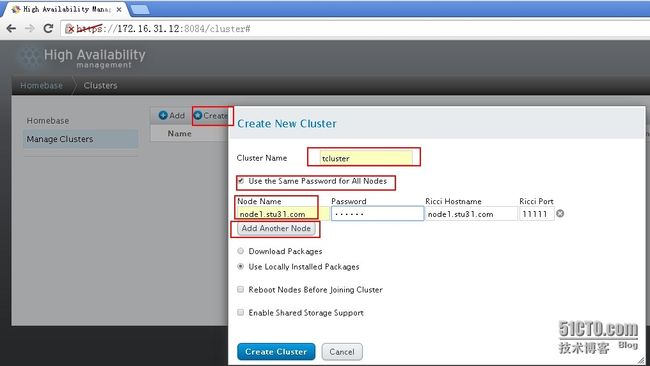

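4. Add nodes node1 to node3; three nodes satisfy the RHCS cluster requirement. The password is the ricci password of each node. Then tick "Download Packages" (with yum configured on the nodes, this installs cman, rgmanager, and their dependencies automatically) and tick "Enable Shared Storage Support", which installs the storage-related packages and enables support for the gfs2 file system, DLM locking, clvm logical volumes, and so on.

5. Open https://172.16.31.12:8084 or https://nfs.stu31.com:8084

Ignore the certificate warning:

Choose Manage Clusters to create a cluster and click Create:

Enter the cluster name and add the nodes:

An RHCS cluster needs at least three nodes (a two-node cluster needs a qdisk quorum device).

When creation finishes, cman, rgmanager, and lvm2-cluster are installed automatically and the services are started:

Note: if cluster creation fails because cman cannot start, the NetworkManager service has to be turned off: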

    [root@node1 ~]# service cman start
    Starting cluster:
       Checking if cluster has been disabled at boot...        [  OK  ]
       Checking Network Manager...
    Network Manager is either running or configured to run. Please disable it in the cluster.
                                                               [FAILED]
    Stopping cluster:
       Leaving fence domain...                                 [  OK  ]
       Stopping gfs_controld...                                [  OK  ]
       Stopping dlm_controld...                                [  OK  ]
       Stopping fenced...                                      [  OK  ]
       Stopping cman...                                        [  OK  ]
       Unloading kernel modules...                             [  OK  ]
       Unmounting configfs...                                  [  OK  ]
    [root@node1 ~]#

Stop the NetworkManager service and disable it at boot:

    [root@nfs ~]# for i in {1..4}; do ssh node$i "chkconfig NetworkManager off && /etc/rc.d/init.d/NetworkManager stop" ; done
    Stopping NetworkManager daemon:                            [  OK  ]
    Stopping NetworkManager daemon:                            [  OK  ]
    Stopping NetworkManager daemon:                            [  OK  ]
    Stopping NetworkManager daemon:                            [  OK  ]

Adding the nodes again now creates the cluster successfully.

7. Enable the cman, rgmanager, and clvmd services at boot.

    [root@nfs ~]# for i in {1..3}; do ssh node$i "chkconfig cman on && chkconfig rgmanager on && chkconfig clvmd on" ; done

Check the boot settings of the services on any node; they start automatically in runlevels 2 to 5.

    [root@nfs ~]# ssh node1 "chkconfig --list | grep cman"
    cman            0:off   1:off   2:on    3:on    4:on    5:on    6:off
    [root@nfs ~]# ssh node1 "chkconfig --list | grep rgmanager"
    rgmanager       0:off   1:off   2:on    3:on    4:on    5:on    6:off
    [root@nfs ~]# ssh node1 "chkconfig --list | grep clvmd"
    clvmd           0:off   1:off   2:on    3:on    4:on    5:on    6:off

8. Check the state of the cluster nodes:

    [root@node2 ~]# clustat
    Cluster Status for tcluster @ Tue Jan  6 12:39:48 2015
    Member Status: Quorate

     Member Name                        ID   Status
     ------ ----                        ---- ------
     node1.stu31.com                       1 Online
     node2.stu31.com                       2 Online, Local
     node3.stu31.com                       3 Online
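"Quorate" in the status output means that enough votes are present for the cluster to operate. A minimal arithmetic sketch, assuming the default of one vote per node and the usual majority rule (more than half of the expected votes); `quorum_for` is a hypothetical helper name:

```shell
#!/bin/sh
# quorum_for TOTAL_VOTES: smallest number of votes that is a strict majority.
# Assumes every node carries exactly one vote.
quorum_for() {
    echo $(( $1 / 2 + 1 ))
}

for n in 2 3 4; do
    q=$(quorum_for "$n")
    echo "nodes=$n quorum=$q tolerated_failures=$(( n - q ))"
done
```

With two nodes the majority is both of them, so a single failure already costs quorum; this is why the preparation section calls for a qdisk quorum device in the two-node case, while three nodes can lose one member.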

V. Configuring the shared storage server

We use node4 as the shared storage server.

1. It has one spare disk, sdb:

    [root@node4 ~]# ls /dev/sd*
    /dev/sda  /dev/sda1  /dev/sda2  /dev/sdb

We create a single partition on it with type 8e (Linux LVM):

    [root@node4 ~]# echo -e -n "n\np\n1\n\n\nt\n8e\nw\n" | fdisk /dev/sdb

2. Install the iSCSI target software

    [root@node4 ~]# yum -y install scsi-target-utils

3. Configure the disk to export

    [root@node4 ~]# vim /etc/tgt/targets.conf
    <target iqn.2015-01.com.stu31:node4.t1>
        backing-store /dev/sdb1
        initiator-address 172.16.31.0/24
    </target>

Start the iSCSI target service:

    [root@node4 ~]# service tgtd start
    Starting SCSI target daemon:                               [  OK  ]

Check what is being exported:

    [root@node4 ~]# tgtadm -L iscsi -m target -o show
    Target 1: iqn.2015-01.com.stu31:node4.t1
        System information:
            Driver: iscsi
            State: ready
        I_T nexus information:
        LUN information:
            LUN: 0
                Type: controller
                SCSI ID: IET     00010000
                SCSI SN: beaf10
                Size: 0 MB, Block size: 1
                Online: Yes
                Removable media: No
                Prevent removal: No
                Readonly: No
                Backing store type: null
                Backing store path: None
                Backing store flags:
            LUN: 1
                Type: disk
                SCSI ID: IET     00010001
                SCSI SN: beaf11
                Size: 16105 MB, Block size: 512
                Online: Yes
                Removable media: No
                Prevent removal: No
                Readonly: No
                Backing store type: rdwr
                Backing store path: /dev/sdb1
                Backing store flags:
        Account information:
        ACL information:
            172.16.31.0/24

4. Install the iSCSI initiator software on node1 to node3:

    [root@nfs ~]# for i in {1..3} ; do ssh node$i "yum -y install iscsi-initiator-utils" ; done

5. Use iscsi-iname to generate a random suffix for each initiator name. Note which node the command is run on (node1 here):

    [root@node1 ~]# for i in {1..3} ; do ssh node$i "echo "InitiatorName=`iscsi-iname -p iqn.2015-01.com.stu31`" > /etc/iscsi/initiatorname.iscsi" ; done
    [root@node1 ~]# cat /etc/iscsi/initiatorname.iscsi
    InitiatorName=iqn.2015-01.com.stu31:c0d826102dbb
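In the loop above the backquoted `iscsi-iname` call sits outside the double quotes, so it is expanded by the local shell on node1 before ssh runs; each iteration still yields a fresh random suffix. To sanity-check the result on a node, here is a minimal sketch with two hypothetical helpers: `initiator_name` reads the value from an initiator file and `is_iqn` checks the usual `iqn.yyyy-mm.reversed-domain[:identifier]` shape. The regular expression is a simplification and not a full validator.

```shell
#!/bin/sh
# initiator_name FILE: print the value of the InitiatorName= line.
initiator_name() {
    sed -n 's/^InitiatorName=//p' "$1"
}

# is_iqn STRING: succeed if STRING looks like iqn.yyyy-mm.domain[:id].
# Simplified pattern for illustration only.
is_iqn() {
    printf '%s\n' "$1" |
        grep -Eq '^iqn\.[0-9]{4}-[0-9]{2}\.[a-z0-9][a-z0-9.-]*(:.+)?$'
}

# On a node: is_iqn "$(initiator_name /etc/iscsi/initiatorname.iscsi)"
if is_iqn "iqn.2015-01.com.stu31:c0d826102dbb"; then
    echo "valid IQN"
fi
```

Each node must end up with a different initiator name, so comparing the three files is also worthwhile.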

6. Discover the shared storage from the client nodes

    [root@nfs ~]# for i in {1..3}; do ssh node$i "iscsiadm -m discovery -t st -p 172.16.31.14" ; done
    [ OK ] iscsid: [ OK ]
    172.16.31.14:3260,1 iqn.2015-01.com.stu31:node4.t1
    [ OK ] iscsid: [ OK ]
    172.16.31.14:3260,1 iqn.2015-01.com.stu31:node4.t1
    [ OK ] iscsid: [ OK ]
    172.16.31.14:3260,1 iqn.2015-01.com.stu31:node4.t1

7. Log the nodes in to the shared iSCSI device:

    [root@nfs ~]# for i in {1..3}; do ssh node$i "iscsiadm -m node -l" ; done
    Logging in to [iface: default, target: iqn.2015-01.com.stu31:node4.t1, portal: 172.16.31.14,3260] (multiple)
    Login to [iface: default, target: iqn.2015-01.com.stu31:node4.t1, portal: 172.16.31.14,3260] successful.
    Logging in to [iface: default, target: iqn.2015-01.com.stu31:node4.t1, portal: 172.16.31.14,3260] (multiple)
    Login to [iface: default, target: iqn.2015-01.com.stu31:node4.t1, portal: 172.16.31.14,3260] successful.
    Logging in to [iface: default, target: iqn.2015-01.com.stu31:node4.t1, portal: 172.16.31.14,3260] (multiple)
    Login to [iface: default, target: iqn.2015-01.com.stu31:node4.t1, portal: 172.16.31.14,3260] successful.

8. On the storage server, check the connected initiators

    [root@node4 ~]# tgtadm --lld iscsi --op show --mode conn --tid 1
    Session: 3
        Connection: 0
            Initiator: iqn.2015-01.com.stu31:c1e7a22d5392
            IP Address: 172.16.31.13
    Session: 2
        Connection: 0
            Initiator: iqn.2015-01.com.stu31:36dfc7f6171
            IP Address: 172.16.31.11
    Session: 1
        Connection: 0
            Initiator: iqn.2015-01.com.stu31:34fc39a6696
            IP Address: 172.16.31.10

9. Every node now has an additional disk, /dev/sdb.

    [root@nfs ~]# for i in {1..3} ; do ssh node$i "ls -l /dev/sd* | grep -v sda" ; done
    brw-rw---- 1 root disk 8, 16 Jan  6 12:55 /dev/sdb
    brw-rw---- 1 root disk 8, 16 Jan  6 12:55 /dev/sdb
    brw-rw---- 1 root disk 8, 16 Jan  6 12:55 /dev/sdb

10. Create the clustered logical volume

Turn the shared disk into a clustered logical volume:

    [root@node1 ~]# echo -n -e "n\np\n1\n\n+10G\nt\n8e\nw\n" | fdisk /dev/sdb
    [root@node1 ~]# partx -a /dev/sdb
    BLKPG: Device or resource busy
    error adding partition 1

    [root@node1 ~]# ll /dev/sd*
    brw-rw---- 1 root disk 8,  0 Jan  6 02:40 /dev/sda
    brw-rw---- 1 root disk 8,  1 Jan  6 02:40 /dev/sda1
    brw-rw---- 1 root disk 8,  2 Jan  6 02:40 /dev/sda2
    brw-rw---- 1 root disk 8, 16 Jan  6 14:29 /dev/sdb
    brw-rw---- 1 root disk 8, 17 Jan  6 14:29 /dev/sdb1

The partition sdb1 has the Linux LVM type:

    [root@node2 ~]# fdisk -l /dev/sdb

    Disk /dev/sdb: 16.1 GB, 16105065984 bytes
    64 heads, 32 sectors/track, 15358 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0xc12c6dc5

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdb1               1       10241    10486768   8e  Linux LVM
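As noted in the introduction, CLVM only works once cluster locking is enabled in LVM. On RHEL/CentOS 6 this is the `locking_type = 3` setting in `/etc/lvm/lvm.conf`, which `lvmconf --enable-cluster` sets; in this walkthrough the Conga "Enable Shared Storage Support" option is expected to have handled it. A minimal sketch for checking a node, where `lvm_locking_type` is a hypothetical helper:

```shell
#!/bin/sh
# lvm_locking_type [FILE]: print the value of the last uncommented
# "locking_type = N" line in an lvm.conf-style file.
lvm_locking_type() {
    file=${1:-/etc/lvm/lvm.conf}
    awk -F= '
        /^[[:space:]]*locking_type[[:space:]]*=/ {
            gsub(/[[:space:]]/, "", $2)   # strip blanks around the value
            v = $2
        }
        END { if (v != "") print v }
    ' "$file"
}

# Only inspect the real file when it exists on this machine.
if [ -r /etc/lvm/lvm.conf ]; then
    case $(lvm_locking_type) in
        3) echo "cluster locking is enabled" ;;
        *) echo "cluster locking is not enabled; see lvmconf --enable-cluster" ;;
    esac
fi
```

The check has to pass on every node that will run clvmd, not only on the node where the volume group is created.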

Now create the logical volume:

    [root@node1 ~]# pvcreate /dev/sdb1
      Physical volume "/dev/sdb1" successfully created
    [root@node1 ~]# vgcreate cvg /dev/sdb1
      Clustered volume group "cvg" successfully created
    [root@node1 ~]# lvcreate -L 5G -n clv cvg
      Error locking on node node3.stu31.com: Volume group for uuid not found: swue1b5PBQHolDi5LKyvkCvecXN7tMkCOyEHCz50q8HqwodCeacVZccNhZF2kDeb
      Error locking on node node2.stu31.com: Volume group for uuid not found: swue1b5PBQHolDi5LKyvkCvecXN7tMkCOyEHCz50q8HqwodCeacVZccNhZF2kDeb
      Failed to activate new LV.

This failed.

The nodes are out of sync: node2 and node3 do not see /dev/sdb1 yet. We repartition the disk on node2 and node3 and then create the PV there:

    [root@node2 ~]# ll /dev/sd*
    brw-rw---- 1 root disk 8,  0 Jan  6 02:40 /dev/sda
    brw-rw---- 1 root disk 8,  1 Jan  6 02:40 /dev/sda1
    brw-rw---- 1 root disk 8,  2 Jan  6 02:40 /dev/sda2
    brw-rw---- 1 root disk 8, 16 Jan  6 14:54 /dev/sdb
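The listing shows the underlying problem: the partition table written from node1 is on the shared disk, but this node's kernel has not re-read it, so there is no /dev/sdb1 device. A minimal sketch for detecting that state follows; `kernel_has_partition` is a hypothetical helper that looks a name up in `/proc/partitions`. Asking the kernel to re-read the table with `partprobe /dev/sdb` or `partx -a /dev/sdb` is a common alternative to repartitioning, though it was not what this walkthrough used.

```shell
#!/bin/sh
# kernel_has_partition NAME [TABLE]: succeed if NAME (for example sdb1)
# appears in the name column of a /proc/partitions-style table.
kernel_has_partition() {
    part=$1
    table=${2:-/proc/partitions}
    awk -v p="$part" '$4 == p { found = 1 } END { exit !found }' "$table"
}

if ! kernel_has_partition sdb1; then
    echo "sdb1 is not known to this kernel;"
    echo "a re-read such as 'partprobe /dev/sdb' may be enough"
fi
```

Running this on node2 and node3 before any LVM command shows quickly whether they are in step with node1.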

Shown here for node3; do the same on node2:

    [root@node3 ~]# fdisk /dev/sdb

    WARNING: DOS-compatible mode is deprecated. It's strongly recommended to
             switch off the mode (command 'c') and change display units to
             sectors (command 'u').

    Command (m for help): d
    Selected partition 1

    Command (m for help): 1
    1: unknown command
    Command action
       a   toggle a bootable flag
       b   edit bsd disklabel
       c   toggle the dos compatibility flag
       d   delete a partition
       l   list known partition types
       m   print this menu
       n   add a new partition
       o   create a new empty DOS partition table
       p   print the partition table
       q   quit without saving changes
       s   create a new empty Sun disklabel
       t   change a partition's system id
       u   change display/entry units
       v   verify the partition table
       w   write table to disk and exit
       x   extra functionality (experts only)

    Command (m for help): p

    Disk /dev/sdb: 16.1 GB, 16105065984 bytes
    64 heads, 32 sectors/track, 15358 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0xc12c6dc5

       Device Boot      Start         End      Blocks   Id  System

    Command (m for help): n
    Command action
       e   extended
       p   primary partition (1-4)
    p
    Partition number (1-4): 1
    First cylinder (1-15358, default 1):
    Using default value 1
    Last cylinder, +cylinders or +size{K,M,G} (1-15358, default 15358): +10G

    Command (m for help): t
    Selected partition 1
    Hex code (type L to list codes): 8e
    Changed system type of partition 1 to 8e (Linux LVM)

    Command (m for help): p

    Disk /dev/sdb: 16.1 GB, 16105065984 bytes
    64 heads, 32 sectors/track, 15358 cylinders
    Units = cylinders of 2048 * 512 = 1048576 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0xc12c6dc5

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdb1               1       10241    10486768   8e  Linux LVM

    Command (m for help): w
    The partition table has been altered!

    Calling ioctl() to re-read partition table.
    Syncing disks.

    [root@node3 ~]# partx -a /dev/sdb
    BLKPG: Device or resource busy
    error adding partition 1

Create the PV. The error can be ignored, because the partition already belongs to the volume group cvg:

    [root@node3 ~]# pvcreate /dev/sdb1
      Can't initialize physical volume "/dev/sdb1" of volume group "cvg" without -ff
    [root@node3 ~]# pvs
      PV         VG   Fmt  Attr PSize  PFree
      /dev/sda2  vg0  lvm2 a--  59.99g  7.99g
      /dev/sdb1  cvg  lvm2 a--  10.00g 10.00g

Do the same on node2, then create the PV:

    [root@node2 ~]# pvcreate /dev/sdb1
      Can't initialize physical volume "/dev/sdb1" of volume group "cvg" without -ff
    [root@node2 ~]# vgs
      VG   #PV #LV #SN Attr   VSize  VFree
      cvg    1   0   0 wz--nc 10.00g 10.00g
      vg0    1   4   0 wz--n- 59.99g  7.99g

Back on node1, create the logical volume again:

    [root@node1 ~]# pvs
      PV         VG   Fmt  Attr PSize  PFree
      /dev/sda2  vg0  lvm2 a--  59.99g  7.99g
      /dev/sdb1  cvg  lvm2 a--  10.00g 10.00g
    [root@node1 ~]# vgs
      VG   #PV #LV #SN Attr   VSize  VFree
      cvg    1   0   0 wz--nc 10.00g 10.00g
      vg0    1   4   0 wz--n- 59.99g  7.99g
    [root@node1 ~]# lvcreate -L 5G -n clv cvg
      Logical volume "clv" created

This time it succeeds.

Inspect the logical volume:

    [root@node1 ~]# lvs cvg
      LV   VG   Attr       LSize Pool Origin Data%  Meta%  Move Log Cpy%Sync Convert
      clv  cvg  -wi-a----- 5.00g

Format the logical volume with the cluster file system:

    [root@node1 ~]# mkfs.gfs2 -j 3 -t tcluster:clv -p lock_dlm /dev/cvg/clv
    This will destroy any data on /dev/cvg/clv.
    It appears to contain: symbolic link to `../dm-4'

    Are you sure you want to proceed? [y/n] y

    Device:                    /dev/cvg/clv
    Blocksize:                 4096
    Device Size                5.00 GB (1310720 blocks)
    Filesystem Size:           5.00 GB (1310718 blocks)
    Journals:                  3
    Resource Groups:           20
    Locking Protocol:          "lock_dlm"
    Lock Table:                "tcluster:clv"
    UUID:                      8bdaeca4-aeeb-8fc7-a2d0-23cc7289ae4d
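The three options in the mkfs.gfs2 call each tie the file system to the cluster: `-j` is the number of journals (one per node that will mount it), `-t` is the lock table in the form `clustername:fsname`, where the cluster name must be the one the cluster was created with (tcluster here), and `-p lock_dlm` selects DLM locking. A minimal sketch that assembles the command from those pieces, using a hypothetical helper `gfs2_mkfs_cmd` that only prints the command and never runs it:

```shell
#!/bin/sh
# gfs2_mkfs_cmd CLUSTER FSNAME JOURNALS DEVICE
# Print (do not execute) the mkfs.gfs2 command for a clustered file system.
gfs2_mkfs_cmd() {
    cluster=$1
    fsname=$2
    journals=$3
    device=$4
    echo "mkfs.gfs2 -j $journals -t $cluster:$fsname -p lock_dlm $device"
}

# Values taken from this walkthrough: three nodes, cluster "tcluster".
gfs2_mkfs_cmd tcluster clv 3 /dev/cvg/clv
```

If a fourth node later needs to mount the file system, a further journal is required; the `gfs2_jadd` tool exists for adding one to a mounted file system.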

Mount the gfs2 file system on a node:

    [root@node1 ~]# mount -t gfs2 /dev/cvg/clv /var/www/html/
    [root@node1 ~]# echo "page from CLVM@GFS2" > /var/www/html/index.html

Mount it on node2 as well:

    [root@node2 ~]# ls /var/www/html/
    [root@node2 ~]# mount -t gfs2 /dev/cvg/clv /var/www/html/
    [root@node2 ~]# ls /var/www/html/
    index.html

The test page is already there.

Then unmount it from the management node:

    [root@nfs ~]# for i in {1..3} ; do ssh node$i "umount /var/www/html" ; done

七.登录Conga,进行web集群构建

构建失败转移域:

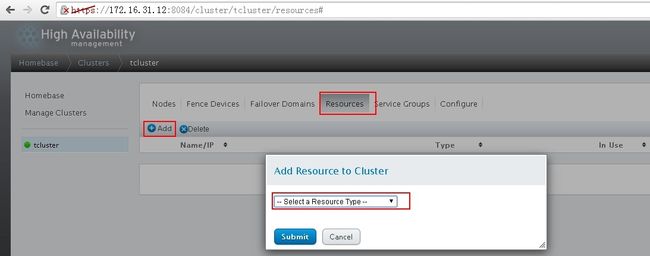

创建资源:VIP,httpd服务,GFS共享存储

添加资源:

如下添加VIP地址:

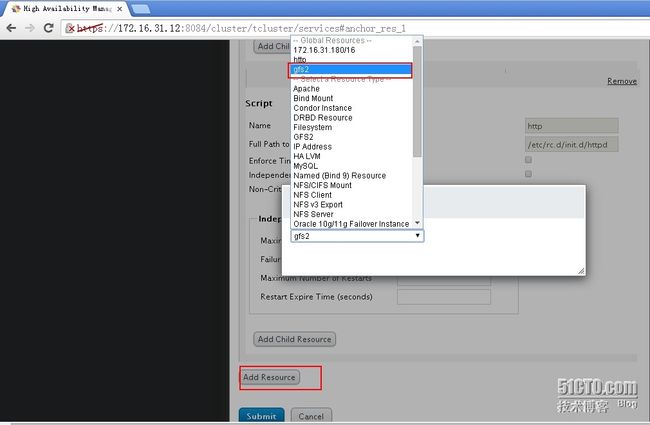

添加服务脚本httpd:

记住写入httpd脚本路径:

添加GFS2共享存储资源:

将资源加入资源组:

创建资源组,并向其中加入设置好的自定义资源或者也可以直接添加设定资源:

添加设置好的VIP资源:

添加设置好的httpd服务资源:

添加设置好的GFS2共享存储资源:

构建完成后启动webservice资源组:

启动成功。

Access the site from the management host:

    [root@nfs ~]# curl http://172.16.31.180
    page from CLVM@GFS2

Checking the cluster status on node1 shows that the service was actually started on node2:

    [root@node1 ~]# clustat
    Cluster Status for tcluster @ Tue Jan  6 13:22:07 2015
    Member Status: Quorate

     Member Name                        ID   Status
     ------ ----                        ---- ------
     node1.stu31.com                       1 Online, Local, rgmanager
     node2.stu31.com                       2 Online, rgmanager
     node3.stu31.com                       3 Online, rgmanager

     Service Name              Owner (Last)              State
     ------- ----              ----- ------              -----
     service:webservice        node2.stu31.com           started
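The owner column of the clustat output can also be read from a script, for example to confirm where a service is running before and after a relocation. A minimal sketch, where `service_owner` is a hypothetical helper that filters clustat-style text on stdin; the sample line is copied from the status output of this walkthrough:

```shell
#!/bin/sh
# service_owner NAME: read clustat output on stdin and print the owner
# of service:NAME (the second column of its row).
service_owner() {
    awk -v s="service:$1" '$1 == s { print $2 }'
}

# On a cluster node: clustat | service_owner webservice
# Here, the same filter applied to a captured line:
service_owner webservice <<'EOF'
 Service Name              Owner (Last)              State
 ------- ----              ----- ------              -----
 service:webservice        node2.stu31.com           started
EOF
```

The sketch relies on the column layout shown above and on the node name containing no spaces.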

Refreshing the Conga web page confirms that it runs on node2; the page does not refresh by itself.

Move the service to node1.

Use this command:

    [root@node2 ~]# clusvcadm -r webservice -m node1.stu31.com
    Trying to relocate service:webservice to node1.stu31.com...Success
    service:webservice is now running on node1.stu31.com

Refresh the web page:

The relocation succeeded. With that, the highly available web cluster on RHCS + Conga + GFS + cLVM shared storage is complete.