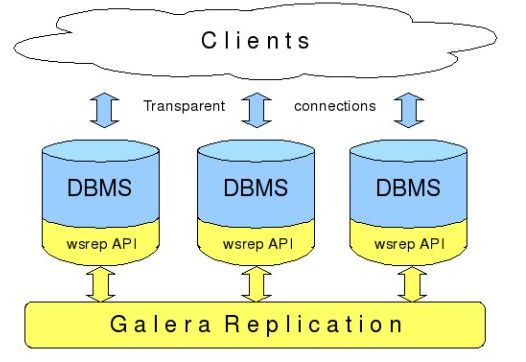

Galera Cluster

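Galera is essentially a wsrep provider and relies on the wsrep API to run. The wsrep API defines a set of application callbacks and replication library calls for write-set replication in transactional databases and similar applications. Its goal is to provide replication as an abstract layer, isolated from application details. The interface's main target is certification-based multi-master replication, but it works equally well for asynchronous and synchronous master-slave replication.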

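Galera Cluster bills itself as the most advanced open-source database clustering solution in the world. When MySQL runs on top of Galera, a new write is not replicated by MySQL's replication threads fetching and replaying the binary log. Instead, the server calls the Galera replication library through the wsrep API to replicate the transaction's write set synchronously, and the write set is applied on every server. Either the transaction succeeds on all servers or it is rolled back on all of them. This keeps the data consistent across the cluster, and all servers are updated in real time.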

Galera architecture:

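Advantages:

1. True multi-master operation: every node can be read from and written to at the same time, unlike MySQL Fabric, where some servers serve only as backups.

2. Synchronous replication: no replication lag and no data loss.

3. Hot standby: when a server goes down, the remaining servers take over automatically with no downtime.

4. Automatic node provisioning: when a server is added, the database does not have to be copied to it by hand.

5. InnoDB support.

6. Transparent to applications: no application changes are required.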

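Disadvantages:

1. Every transaction has to be executed on several machines in the cluster, so network transfer and concurrent execution cost some performance.

2. Every machine stores the same data (full redundancy).

3. When a node acts both as a master and as a backup for the others, transactions are more likely to be rolled back because of optimistic locking conflicts, so application code must be written with care.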
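To make the rollback risk concrete, here is a toy bash model of certification. It assumes a write set can be reduced to a list of row keys such as `students:1`; real Galera certifies hashed keys against its own certification index, so this only illustrates the "first committer wins" rule, and `certify` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Toy model of certification-based replication (first committer wins).
# Assumption: a write set is a space-separated list of row keys such as
# "students:1"; real Galera uses hashed keys and a certification index.

# certify TXN_KEYS CONCURRENT_KEYS
#   TXN_KEYS        keys written by the transaction being certified
#   CONCURRENT_KEYS keys written by transactions ordered cluster-wide
#                   after this transaction started
# Prints "commit" if the two key sets do not overlap, otherwise "rollback".
certify() {
    local -A seen=()
    local k
    for k in $2; do
        seen["$k"]=1
    done
    for k in $1; do
        if [[ -n "${seen[$k]:-}" ]]; then
            echo rollback
            return 1
        fi
    done
    echo commit
}
```

For example, `certify "students:1" "students:1"` prints `rollback`: two nodes updated the same row concurrently and the transaction ordered second loses. In a real cluster the losing client receives a deadlock error and should retry the transaction.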

Unsupported SQL: LOCK / UNLOCK TABLES, GET_LOCK(), RELEASE_LOCK()…; XA transactions are not supported.
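Before moving an application onto Galera, it can help to scan its SQL for the statements listed above. A minimal bash sketch; `find_unsupported_sql` is a hypothetical helper, and the match is purely textual, so it can also flag comments or string literals.

```shell
#!/usr/bin/env bash
# Hypothetical pre-migration check: list SQL lines that use features the
# text says Galera does not support (LOCK/UNLOCK TABLES, GET_LOCK(),
# RELEASE_LOCK(), XA transactions). Reads SQL on stdin and prints
# "line_number:line" for each hit; exit status 1 means nothing was found.
find_unsupported_sql() {
    grep -n -i -E \
        -e '\b(UN)?LOCK[[:space:]]+TABLES?\b' \
        -e '\b(GET|RELEASE)_LOCK[[:space:]]*\(' \
        -e '^[[:space:]]*XA[[:space:]]+(START|BEGIN|END|PREPARE|COMMIT|ROLLBACK|RECOVER)\b'
}
```

Typical use is `find_unsupported_sql < schema_and_queries.sql`, where the file name stands for whatever SQL the application ships.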

Lab setup:

Three virtual machines running CentOS 7: 172.18.250.77, 172.18.250.78 and 172.18.250.79

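I. Install Galera Cluster

There are three ways to run MySQL with Galera Cluster:

1. A MySQL build with Galera (wsrep) support, such as Codership's Galera Cluster for MySQL

2. Percona XtraDB Cluster, Percona's Galera-based MySQL distribution

3. MariaDB Galera Cluster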

This lab uses MariaDB Galera Cluster:

]# yum -y install MariaDB-Galera-server MariaDB-shared MariaDB-client MariaDB-common galera
]# rpm -ql MariaDB-Galera-server
/etc/init.d/mysql           # init script
/etc/logrotate.d/mysql
/etc/my.cnf.d
/etc/my.cnf.d/server.cnf    # configuration file

Configure Galera Cluster (every node uses the same configuration file):

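]# vim /etc/my.cnf.d/server.cnf
[galera]
# Mandatory settings
# provider library that implements Galera replication
wsrep_provider = /usr/lib64/galera/libgalera_smm.so
# addresses of all cluster members; gcomm:// selects Galera's group communication backend
wsrep_cluster_address= "gcomm://172.18.250.77,172.18.250.78,172.18.250.79"
# binary log format; Galera requires row-based logging
binlog_format=row
# default storage engine (Galera fully supports InnoDB only)
default_storage_engine=InnoDB
# "interleaved" auto-increment lock mode, required by Galera
innodb_autoinc_lock_mode=2
# address mysqld listens on for client connections
bind-address=0.0.0.0
# cluster name; must be identical on all nodes
wsrep_cluster_name = 'mycluster'
# Optional settings
# number of threads that apply replicated write sets
wsrep_slave_threads=1
# 0 = write and flush the InnoDB log about once per second instead of at every commit
innodb_flush_log_at_trx_commit=0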
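Because every node must carry identical settings, the `[galera]` section can be generated from the node list instead of being typed three times. A sketch assuming the provider path and settings shown above; `render_galera_cnf` is a hypothetical helper, not part of MariaDB.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the [galera] section for a cluster so that
# every node gets exactly the same text.
# Usage: render_galera_cnf CLUSTER_NAME IP [IP...]
render_galera_cnf() {
    local name=$1; shift
    local addr
    printf -v addr '%s,' "$@"   # "ip1,ip2,ip3,"
    addr=${addr%,}              # drop the trailing comma
    cat <<EOF
[galera]
wsrep_provider=/usr/lib64/galera/libgalera_smm.so
wsrep_cluster_address="gcomm://$addr"
wsrep_cluster_name='$name'
binlog_format=row
default_storage_engine=InnoDB
innodb_autoinc_lock_mode=2
bind-address=0.0.0.0
EOF
}
```

For example, `render_galera_cnf mycluster 172.18.250.77 172.18.250.78 172.18.250.79` prints the section; review it before copying it into `/etc/my.cnf.d/server.cnf` on each node.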

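Configure host names on every node by adding these entries to /etc/hosts:

172.18.250.77 node1.baidu.com
172.18.250.78 node2.baidu.com
172.18.250.79 node3.baidu.com
]# systemctl stop firewalld.service    # stop the firewall so it does not interfere
]# setenforce 0                        # put SELinux into permissive mode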
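Since the same host entries go onto every node, an idempotent append avoids duplicate lines when the step is repeated. A sketch; `add_host_entries`, `has_ip` and the `HOSTS_FILE` override are hypothetical names introduced here.

```shell
#!/usr/bin/env bash
# Sketch: append "IP NAME" lines (read from stdin) to the hosts file,
# skipping any IP that already has an entry, so the script can be re-run.
# HOSTS_FILE is a hypothetical override; it defaults to /etc/hosts.

# has_ip IP FILE: succeed if FILE has a line whose first field is IP.
has_ip() {
    awk -v ip="$1" '$1 == ip { found = 1 } END { exit !found }' "$2" 2>/dev/null
}

add_host_entries() {
    local file=${HOSTS_FILE:-/etc/hosts} ip name
    while read -r ip name; do
        [[ -z "$ip" ]] && continue            # skip blank input lines
        has_ip "$ip" "$file" || printf '%s %s\n' "$ip" "$name" >> "$file"
    done
}
```

Run as root on each node, for example `printf '172.18.250.77 node1.baidu.com\n172.18.250.78 node2.baidu.com\n172.18.250.79 node3.baidu.com\n' | add_host_entries`.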

Start MySQL:

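]# /etc/init.d/mysql start --wsrep-new-cluster    # first start only: bootstrap the cluster on ONE node
]# service mysql start                             # on each of the other two nodes
Starting MySQL...SST in progress, setting sleep higher.
]# ss -tan                                         # check the listening ports
State    Recv-Q Send-Q  Local Address:Port   Peer Address:Port
LISTEN   0      50      *:3306               *:*
LISTEN   0      128     *:4567               *:*

The "SST in progress" message means the joining node has found the cluster and is receiving a full copy of the data (a State Snapshot Transfer), so the cluster configuration works. Port 3306 serves MySQL clients; port 4567 carries Galera's group communication between the nodes.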
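Once all three nodes are up, the cluster size Galera reports should equal the number of nodes. A sketch that reads the `wsrep_cluster_size` status variable, assuming the local `mysql` client can log in without a password (as on this lab's fresh install); the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: verify that all nodes joined by reading wsrep_cluster_size.
# Assumes passwordless local mysql login; add -u/-p options otherwise.

# status_value NAME: read tab-separated "name<TAB>value" rows (what
# mysql -N prints when output is not a terminal) and print NAME's value.
status_value() {
    awk -F'\t' -v n="$1" '$1 == n { print $2 }'
}

# cluster_size: number of nodes this node currently sees in the cluster.
cluster_size() {
    mysql -N -e "SHOW GLOBAL STATUS LIKE 'wsrep_cluster_size'" | status_value wsrep_cluster_size
}
```

For example: `[ "$(cluster_size)" -eq 3 ] && echo "all three nodes are in the cluster"`.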

Create a database on one node and check that it is replicated to the other two:

MariaDB [(none)]> create database hellodb;
MariaDB [(none)]> show databases;    -- run this on the other two nodes
+--------------------+
| Database           |
+--------------------+
| information_schema |
| hellodb            |
| mysql              |
| performance_schema |
| test               |
+--------------------+
5 rows in set (0.23 sec)

hellodb shows up on the other nodes, so the database was replicated.

Create a table and insert data:

MariaDB [hellodb]> create table students (id int unsigned auto_increment not null primary key,name char(30) not null,age tinyint unsigned,gender enum('f','m'));

Query OK, 0 rows affected (0.92 sec)

MariaDB [hellodb]> insert into students (name) values ("Hi");    -- insert data on one node

Query OK, 1 row affected (0.01 sec)

MariaDB [hellodb]> insert into students (name) values ("Hello");

Query OK, 1 row affected (0.01 sec)

MariaDB [hellodb]> select * from students;

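+----+-------+------+--------+
| id | name  | age  | gender |
+----+-------+------+--------+
|  1 | Hi    | NULL | NULL   |
|  4 | Hello | NULL | NULL   |
+----+-------+------+--------+
2 rows in set (0.00 sec)

The ids are 1 and 4 rather than 1 and 2: for an auto-increment column, the value advances by the number of nodes in the cluster, so consecutive inserts do not get consecutive ids.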
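The ids 1, 4 and, further down, 6 follow from how MySQL/MariaDB assign auto-increment values when `auto_increment_increment` and `auto_increment_offset` are set: the next value is the smallest number above the current maximum in the series offset + N*increment. With `wsrep_auto_increment_control` (enabled by default), Galera sets the increment to the cluster size and gives each node its own offset. The sketch reproduces only the arithmetic; the offsets used below are assumptions chosen to match the output (offset 1 with three nodes, offset 2 once two nodes remain).

```shell
#!/usr/bin/env bash
# Sketch of auto-increment arithmetic: smallest value greater than LAST
# that lies in the series OFFSET + N*INCREMENT (N >= 0).
# Usage: next_autoinc LAST INCREMENT OFFSET
next_autoinc() {
    local last=$1 inc=$2 off=$3
    if (( last < off )); then
        echo "$off"
    else
        echo $(( off + ((last - off) / inc + 1) * inc ))
    fi
}
```

With three nodes and offset 1, `next_autoinc 0 3 1` gives 1 and `next_autoinc 1 3 1` gives 4; after one node leaves (increment 2, assumed offset 2), `next_autoinc 4 2 2` gives 6.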

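Workarounds:

1. Use a global ID generator so that inserted rows get ids in a consistent order.

2. Specify the id explicitly instead of letting it be generated automatically.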
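One possible global ID generator for workaround 1 is a Snowflake-style 64-bit id: a millisecond timestamp, a node number and a per-millisecond sequence, so nodes never hand out the same id without coordinating. This is an illustrative scheme that Galera and MariaDB do not provide; the 41/10/12-bit split follows the Snowflake layout, while the epoch (2016-01-01 UTC) and the helper names are assumptions. Callers must also make sure the sequence does not repeat within one millisecond on a node.

```shell
#!/usr/bin/env bash
# Sketch of a Snowflake-style id: timestamp | 10-bit node | 12-bit sequence.
# EPOCH_MS is an assumed custom epoch (2016-01-01 00:00:00 UTC).
EPOCH_MS=1451606400000

# make_id NODE_ID SEQ [MILLIS]; MILLIS defaults to now (needs GNU date).
make_id() {
    local node=$1 seq=$2 ms=${3:-$(date +%s%3N)}
    echo $(( ((ms - EPOCH_MS) << 22) | ((node & 1023) << 12) | (seq & 4095) ))
}

# node_of_id ID: recover the node number encoded in an id.
node_of_id() {
    echo $(( ($1 >> 12) & 1023 ))
}
```

Ids produced this way grow with time, so they still sort roughly by insertion order, which is what the gaps in the auto-increment ids above take away.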

Stop MySQL on one node:

]# service mysql stop

Shutting down MySQL.......... SUCCESS!

MariaDB [hellodb]> insert into students (name) values ("World");    -- insert data on one of the remaining nodes

Query OK, 1 row affected (0.12 sec)

MariaDB [hellodb]> select * from students;

+----+-------+------+--------+
| id | name  | age  | gender |
+----+-------+------+--------+
|  1 | Hi    | NULL | NULL   |
|  4 | Hello | NULL | NULL   |
|  6 | World | NULL | NULL   |
+----+-------+------+--------+

3 rows in set (0.00 sec)

Start MySQL on the stopped node again:

]# service mysql start
Starting MySQL.........SST in progress, setting sleep higher.
MariaDB [hellodb]> select * from students;
+----+-------+------+--------+
| id | name  | age  | gender |
+----+-------+------+--------+
|  1 | Hi    | NULL | NULL   |
|  4 | Hello | NULL | NULL   |
|  6 | World | NULL | NULL   |
+----+-------+------+--------+
3 rows in set (0.01 sec)

The row inserted while the node was down has been synchronized to it automatically.
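A rejoining node first receives its state transfer and only reports `Synced` in `wsrep_local_state_comment` once it has caught up. A sketch that polls for that state before the node is given traffic again; it assumes passwordless local `mysql` login, and the 5-second interval and 300-second default timeout are arbitrary choices.

```shell
#!/usr/bin/env bash
# Sketch: wait until this node reports wsrep_local_state_comment = Synced.
# Usage: wait_for_synced [TIMEOUT_SECONDS]
wait_for_synced() {
    local timeout=${1:-300} waited=0 state=""
    while (( waited < timeout )); do
        # the query fails while mysqld is still starting; that just means "not yet"
        state=$(mysql -N -e "SHOW GLOBAL STATUS LIKE 'wsrep_local_state_comment'" 2>/dev/null |
            awk -F'\t' '{ print $2 }')
        if [[ "$state" == "Synced" ]]; then
            echo "node is Synced"
            return 0
        fi
        sleep 5
        waited=$(( waited + 5 ))
    done
    echo "timed out after ${timeout}s (last state: ${state:-unknown})" >&2
    return 1
}
```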

MHA:

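MHA (Master HA) is an open-source high-availability tool for MySQL that adds automated master failover to a MySQL master/slave replication setup. When MHA detects that the master has failed, it promotes the slave with the most recent data to be the new master; during this process it obtains additional information from the other slaves to avoid consistency problems. MHA also supports online master switching, i.e. swapping the master and slave roles on demand.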
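The core of the promotion decision is a comparison: which slave has read furthest into the failed master's binary log? A toy bash version of just that comparison, assuming each input line is `HOST MASTER_LOG_FILE READ_MASTER_LOG_POS` (fields taken from `SHOW SLAVE STATUS`). MHA itself also honours settings such as `candidate_master` and applies missing relay-log events to the other slaves, none of which is modelled here; `latest_slave` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Toy selection of the most up-to-date slave.
# Input lines: "HOST MASTER_LOG_FILE READ_MASTER_LOG_POS".
latest_slave() {
    # master-bin.000004 -> 4, then sort by file number and position, highest first
    awk '{ n = $2; sub(/.*\./, "", n); print $1, n + 0, $3 }' |
        sort -k2,2nr -k3,3nr | head -n 1 | awk '{ print $1 }'
}
```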

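An MHA deployment has two roles, MHA Manager (the management node) and MHA Node (the data node):

MHA Manager: usually deployed on a separate machine, from which it can manage multiple master/slave clusters; each master/slave cluster is called an application.

MHA Node: runs on every MySQL server (master/slave) and on the manager host; it speeds up failover with scripts that can parse and purge logs.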

MHA architecture:

Lab setup:

1. VM 172.18.250.77, CentOS 7: MHA Manager

2. VM 172.18.250.78, CentOS 7: MHA Node, MySQL master

3. VMs 172.18.250.79 and 172.18.250.80, CentOS 7: MHA Node, MySQL slaves

I. Install MHA

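]# yum -y install mha4mysql-manager-0.56-0.el6.noarch.rpm                    # on the manager
]# yum -y install mha4mysql-node-0.56-0.el6.noarch.rpm mariadb-server       # on the MySQL servers

Because MHA Node also runs on the manager host, install mha4mysql-node there as well.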

Configure the manager node:

]# rpm -ql mha4mysql-manager
/usr/bin/masterha_check_repl        # check the MySQL replication setup and connection parameters
/usr/bin/masterha_check_ssh         # check SSH connectivity between the nodes
/usr/bin/masterha_check_status      # show the manager's status and the current master
/usr/bin/masterha_conf_host         # add or remove a host in the configuration
/usr/bin/masterha_manager           # start the manager
/usr/bin/masterha_master_monitor    # monitor the availability of the MySQL master
/usr/bin/masterha_master_switch     # master switchover tool
/usr/bin/masterha_stop              # stop the MHA manager

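The manager needs a dedicated configuration file for each master/slave cluster it monitors, and all clusters can also share a global configuration. The global configuration file is /etc/masterha_default.cnf by default and is optional. If only one master/slave cluster is monitored, the default settings for all servers can go directly into the application's configuration file. The path of each application's configuration file is up to you.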

]# mkdir /etc/masterha
]# vim /etc/masterha/app1.cnf
[server default]
# account MHA uses to log in to MySQL
user=hauser
password=hauser
# MHA working directory
manager_workdir=/data/masterha/app1
# MHA log file
manager_log=/data/masterha/app1/manager.log
# working directory on the remote MySQL nodes
remote_workdir=/data/masterha/app1
# user for SSH connections to the nodes
ssh_user=root
# MySQL replication account
repl_user=admin
repl_password=admin
# how often (in seconds) to ping the master
ping_interval=1
[server1]
# node host name or IP address
hostname=172.18.250.78
# may be promoted to master if the current master fails
candidate_master=1
[server2]
hostname=172.18.250.79
candidate_master=1
[server3]
hostname=172.18.250.80

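Prepare the environment on each node:

The nodes of an MHA cluster communicate with each other over SSH for remote control and data management. With key-based authentication, no remote password has to be entered.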

]# ssh-keygen -t rsa -P ''                           # generate a key pair
]# cat .ssh/id_rsa.pub > .ssh/authorized_keys         # authorize the public key
]# chmod 600 .ssh/authorized_keys
# copy the private key and authorized_keys to the other nodes
]# scp -p .ssh/id_rsa .ssh/authorized_keys root@172.18.250.78:/root/.ssh/
]# scp -p .ssh/id_rsa .ssh/authorized_keys root@172.18.250.79:/root/.ssh/
]# scp -p .ssh/id_rsa .ssh/authorized_keys root@172.18.250.80:/root/.ssh/
]# systemctl stop firewalld.service                  # stop the firewall
]# setenforce 0                                      # put SELinux into permissive mode
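The three scp commands can also be written as a loop over the nodes, followed by a login test. `BatchMode=yes` makes ssh fail instead of prompting for a password, so a missing key shows up as an error. A sketch; `NODES`, `DRY_RUN` and the `run` helper are hypothetical names for this script.

```shell
#!/usr/bin/env bash
# Sketch: copy the key pair files to every node, then test key-based login.
# Set DRY_RUN=1 to print the commands instead of running them.
NODES=${NODES:-"172.18.250.78 172.18.250.79 172.18.250.80"}

run() {
    if [[ "${DRY_RUN:-0}" == 1 ]]; then echo "$*"; else "$@"; fi
}

distribute_keys() {
    local host
    for host in $NODES; do
        run scp -p ~/.ssh/id_rsa ~/.ssh/authorized_keys "root@${host}:/root/.ssh/"
        run ssh -o BatchMode=yes "root@${host}" true   # fails if a password would be needed
    done
}
```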

Configure MySQL:

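]# vim /etc/my.cnf        # on the master, in the [mysqld] section
# every server needs a unique server_id
server_id=1
skip_name_resolve=ON
innodb_file_per_table=ON
log-bin=master-bin
relay-log=relay-log
]# vim /etc/my.cnf        # on each slave, in the [mysqld] section
# use a different value on each slave, e.g. 2 and 3
server_id=2
skip_name_resolve=ON
innodb_file_per_table=ON
log-bin=master-bin
relay-log=relay-log
# slaves are read-only, so only the master accepts writes
read-only=1
# keep relay logs; MHA uses them to recover the other slaves
relay-log-purge=0
]# service mariadb start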
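Instead of numbering servers by hand, the `server_id` can be derived from each host's IP. Using the last octet (78, 79 and 80 in this lab) is purely a convention assumed here; any unique positive integer per server works. The sketch prints the `[mysqld]` settings above for a given host and role; `mysqld_fragment` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: print the [mysqld] settings for one host, with server_id taken
# from the last octet of its IP address.
# Usage: mysqld_fragment IP master|slave
mysqld_fragment() {
    local ip=$1 role=$2
    local id=${ip##*.}    # 172.18.250.79 -> 79
    printf '[mysqld]\nserver_id=%s\nskip_name_resolve=ON\ninnodb_file_per_table=ON\nlog-bin=master-bin\nrelay-log=relay-log\n' "$id"
    if [[ "$role" == slave ]]; then
        printf 'read-only=1\nrelay-log-purge=0\n'
    fi
}
```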

Set up the accounts and replication:

On the master:
MariaDB [(none)]> grant all on *.* to 'hauser'@'172.18.250.%' identified by 'hauser';    -- lets MHA log in to MySQL
MariaDB [(none)]> grant replication slave,replication client on *.* to 'admin'@'172.18.250.%' identified by 'admin';    -- lets the slaves connect and replicate
On each slave:
MariaDB [(none)]> change master to master_host='172.18.250.78', master_user='admin', master_password='admin', master_log_file='master-bin.000003', master_log_pos=558;
MariaDB [(none)]> start slave;
Query OK, 0 rows affected (0.02 sec)
MariaDB [(none)]> show slave status\G
*************************** 1. row ***************************
Slave_IO_State: Waiting for master to send event
Master_Host: 172.18.250.78
Master_User: admin
Master_Port: 3306
Connect_Retry: 60
Master_Log_File: master-bin.000004
Read_Master_Log_Pos: 245
Relay_Log_File: relay-log.000003
Relay_Log_Pos: 530
Relay_Master_Log_File: master-bin.000004
Slave_IO_Running: Yes
Slave_SQL_Running: Yes
Replicate_Do_DB:
Replicate_Ignore_DB:
Replicate_Do_Table:
Replicate_Ignore_Table:
Replicate_Wild_Do_Table:
Replicate_Wild_Ignore_Table:
Last_Errno: 0
Last_Error:
Skip_Counter: 0
Exec_Master_Log_Pos: 245
Relay_Log_Space: 1103
Until_Condition: None
Until_Log_File:
Until_Log_Pos: 0
Master_SSL_Allowed: No
Master_SSL_CA_File:
Master_SSL_CA_Path:
Master_SSL_Cert:
Master_SSL_Cipher:
Master_SSL_Key:
Seconds_Behind_Master: 0
Master_SSL_Verify_Server_Cert: No
Last_IO_Errno: 0
Last_IO_Error:
Last_SQL_Errno: 0
Last_SQL_Error:
Replicate_Ignore_Server_Ids:
Master_Server_Id: 1
1 row in set (0.05 sec)
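Rather than reading the whole `SHOW SLAVE STATUS\G` output by eye, the two thread states can be checked by a script. A sketch that reads that output on stdin, treats replication as healthy only when both `Slave_IO_Running` and `Slave_SQL_Running` are `Yes`, and prints `Seconds_Behind_Master`; `slave_ok` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: check `SHOW SLAVE STATUS\G` output read from stdin.
# Prints the replication lag and succeeds only if both threads are running.
slave_ok() {
    awk -F': ' '
        { gsub(/^[ \t]+/, "", $1) }              # field names are indented in \G output
        $1 == "Slave_IO_Running"      { io  = $2 }
        $1 == "Slave_SQL_Running"     { sql = $2 }
        $1 == "Seconds_Behind_Master" { lag = $2 }
        END {
            if (io == "Yes" && sql == "Yes") { print lag; exit 0 }
            exit 1
        }'
}
```

For example: `mysql -e 'SHOW SLAVE STATUS\G' | slave_ok || echo "replication is broken"`.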

On the MHA manager node, check that SSH and replication are working:

]# masterha_check_ssh --conf=/etc/masterha/app1.cnf

]# masterha_check_repl --conf=/etc/masterha/app1.cnf

Start the manager:

]# masterha_manager --conf=/etc/masterha/app1.cnf
Thu Jun 9 14:17:49 2016 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.
Thu Jun 9 14:17:49 2016 - [info] Reading application default configuration from /etc/masterha/app1.cnf..
Thu Jun 9 14:17:49 2016 - [info] Reading server configuration from /etc/masterha/app1.cnf..

The manager runs in the foreground and keeps monitoring.

Stop MySQL on the master and check whether a slave is promoted to master, i.e. whether failover works:

]# masterha_manager --conf=/etc/masterha/app1.cnf
Thu Jun 9 14:17:49 2016 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.
Thu Jun 9 14:17:49 2016 - [info] Reading application default configuration from /etc/masterha/app1.cnf..
Thu Jun 9 14:17:49 2016 - [info] Reading server configuration from /etc/masterha/app1.cnf..
Creating /data/masterha/app1 if not exists.. ok.
Checking output directory is accessible or not.. ok.
Binlog found at /var/lib/mysql, up to master-bin.000004
Thu Jun 9 14:20:01 2016 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.
Thu Jun 9 14:20:01 2016 - [info] Reading application default configuration from /etc/masterha/app1.cnf..
Thu Jun 9 14:20:01 2016 - [info] Reading server configuration from /etc/masterha/app1.cnf..

The manager stops immediately after the failover. Its log shows what happened:

]# tail /data/masterha/app1/manager.log
Started automated(non-interactive) failover.
The latest slave 172.18.250.79(172.18.250.79:3306) has all relay logs for recovery.
Selected 172.18.250.79(172.18.250.79:3306) as a new master.
172.18.250.79(172.18.250.79:3306): OK: Applying all logs succeeded.
172.18.250.80(172.18.250.80:3306): This host has the latest relay log events.
Generating relay diff files from the latest slave succeeded.
172.18.250.80(172.18.250.80:3306): OK: Applying all logs succeeded. Slave started, replicating from 172.18.250.79(172.18.250.79:3306)
172.18.250.79(172.18.250.79:3306): Resetting slave info succeeded.
Master failover to 172.18.250.79(172.18.250.79:3306) completed successfully.

Automatic failover has run, and 172.18.250.79 is now the master.

On the slave 172.18.250.80, the master has changed:

MariaDB [(none)]> show slave status\G
*************************** 1. row ***************************
Slave_IO_State: Waiting for master to send event
Master_Host: 172.18.250.79
Master_User: admin
Master_Port: 3306
Connect_Retry: 60
Master_Log_File: master-bin.000002
Read_Master_Log_Pos: 245
Relay_Log_File: relay-log.000002
Relay_Log_Pos: 530
Relay_Master_Log_File: master-bin.000002
Slave_IO_Running: Yes
Slave_SQL_Running: Yes

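Note: after the failed master has been repaired, do not put it back online as a master right away. Reconfigure it as a slave of the new master first; otherwise the cluster can end up with two masters (split brain).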

]# service mariadb start
MariaDB [(none)]> change master to master_host='172.18.250.79', master_user='admin', master_password='admin', master_log_file='master-bin.000002', master_log_pos=245;
MariaDB [(none)]> start slave;
Query OK, 0 rows affected (0.00 sec)
MariaDB [(none)]> show slave status\G
*************************** 1. row ***************************
Slave_IO_State: Waiting for master to send event
Master_Host: 172.18.250.79
Master_User: admin
Master_Port: 3306
Connect_Retry: 60
Master_Log_File: master-bin.000002
Read_Master_Log_Pos: 245
Relay_Log_File: relay-log.000002
Relay_Log_Pos: 530
Relay_Master_Log_File: master-bin.000002
Slave_IO_Running: Yes
Slave_SQL_Running: Yes
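When re-pointing the repaired master, the statement can be generated from the new master's coordinates. A sketch; the file and position should correspond to where the new master's binary log stood when it was promoted (here master-bin.000002, 245), since a later position would skip writes made after the failover. `change_master_sql` is a hypothetical helper and interpolates its arguments without escaping, so they must not contain quotes.

```shell
#!/usr/bin/env bash
# Sketch: build the CHANGE MASTER statement for re-pointing a server.
# Usage: change_master_sql HOST USER PASSWORD LOG_FILE LOG_POS
change_master_sql() {
    local host=$1 user=$2 pass=$3 file=$4 pos=$5
    printf "CHANGE MASTER TO master_host='%s', master_user='%s', master_password='%s', master_log_file='%s', master_log_pos=%d;\n" \
        "$host" "$user" "$pass" "$file" "$pos"
}
```

Its output can be piped into `mysql` on the repaired server, followed by `START SLAVE`.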

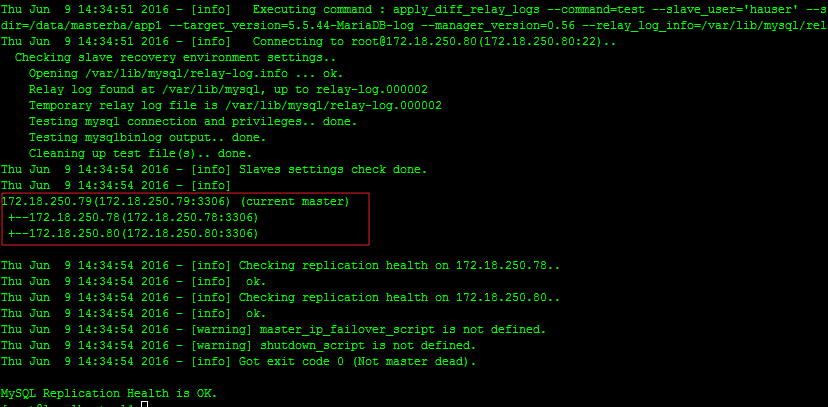

Check replication again from the manager:

]# masterha_check_repl --conf=/etc/masterha/app1.cnf

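That is the complete workflow of a highly available MySQL cluster built with MHA.

A few more points to keep in mind when configuring MHA:

1. masterha_manager runs in the foreground (it can be moved to the background) and must not be interrupted. After it performs a master failover it stops working and has to be started again by hand, so a script is needed to automate this.

2. Provide an additional detection mechanism so that the master is not wrongly judged to have failed (MHA's secondary_check_script parameter is meant for this).

3. Serve clients through a virtual IP address on the master, so that a master change does not require updating the IP address in the read/write splitter (MHA's master_ip_failover_script parameter can move the address).

4. During failover, perform a STONITH operation on the old master to avoid split brain; this can be done by setting shutdown_script.

5. When necessary, switch the master online with masterha_master_switch.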
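For point 1, a small supervisor loop can restart masterha_manager each time it exits. A sketch under stated assumptions: `--remove_dead_master_conf` drops the failed master from app1.cnf, and `--ignore_last_failover` lets a new failover proceed even though one happened recently, which also disables MHA's guard against repeated failovers; check both options against your MHA version. `MHA_CMD`, `MAX_RUNS` and `RESTART_DELAY` are hypothetical knobs of this script.

```shell
#!/usr/bin/env bash
# Sketch: keep masterha_manager running, restarting it whenever it exits
# (it exits after every failover). MAX_RUNS=0 means restart forever.
MHA_CONF=${MHA_CONF:-/etc/masterha/app1.cnf}
MHA_CMD=${MHA_CMD:-masterha_manager}

supervise_manager() {
    local max=${MAX_RUNS:-0} runs=0 rc
    while (( max == 0 || runs < max )); do
        "$MHA_CMD" --conf="$MHA_CONF" --remove_dead_master_conf --ignore_last_failover
        rc=$?
        runs=$(( runs + 1 ))
        echo "$(date '+%F %T') masterha_manager exited with $rc (run $runs)"
        sleep "${RESTART_DELAY:-10}"   # avoid a tight restart loop
    done
}
```

Start it detached (for example with nohup) and send its output to a log file next to manager.log.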