Date: 2018-03-09 | Source: 51cto.com/ylw6006 | Author: 斩月

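Building on the previous article, this one shows how to set up a DNS service in a Kubernetes cluster. Pods in a k8s cluster are short-lived, and a pod's IP address changes when the pod is restarted, which applications cannot tolerate. To solve this, Kubernetes introduces a DNS service for service discovery. The DNS setup described here uses four components, with the following division of labor:

etcd: storage for the DNS records.

kube2sky: registers the services defined on the Kubernetes master in etcd.

skyDNS: answers DNS queries.

healthz: health-checks the skydns service.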

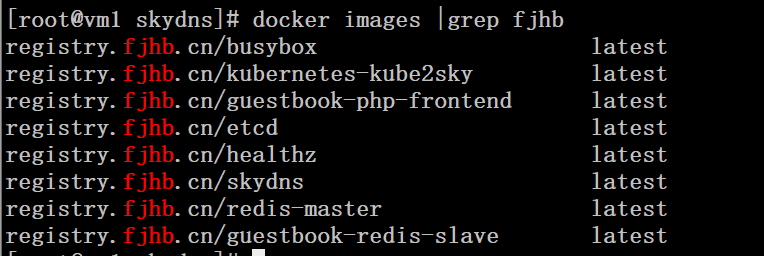
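To make the naming concrete: the health check used later in this article queries `kubernetes.default.svc.cluster.local`, that is, the service `kubernetes` in the namespace `default` under the domain `cluster.local`. The sketch below builds names of that shape. The helper name `svc_fqdn` and its defaults are illustrative assumptions, not part of any Kubernetes tool.

```shell
#!/bin/sh
# svc_fqdn SERVICE [NAMESPACE] [DOMAIN]
# Hypothetical helper: print the name under which a service is expected to
# resolve, following the <service>.<namespace>.svc.<domain> pattern.
svc_fqdn() {
    service=$1
    namespace=${2:-default}        # assumed default namespace
    domain=${3:-cluster.local}     # the -domain value used in this article
    printf '%s.%s.svc.%s\n' "$service" "$namespace" "$domain"
}

svc_fqdn kubernetes
svc_fqdn frontend default cluster.local
```

The first call prints the same name that the healthz container looks up.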

1. Download the required images and manage them in a local registry

# docker pull docker.io/elcolio/etcd

# docker pull docker.io/port/kubernetes-kube2sky

# docker pull docker.io/skynetservices/skydns

# docker pull docker.io/wu1boy/healthz

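If you run into version differences, use docker search and docker pull to fetch other images and test with those. The original author tagged the pulled images and pushed them to a local registry. In my view that is unnecessary for a deployment that does not have to be repeated: once an image has been downloaded, a later docker pull on the same host checks for a local copy first and does not fetch it over the network a second time.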
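If you do want to follow the original author and mirror the images, the retagging can be sketched as below. The registry name `registry.fjhb.cn` is taken from the kubectl describe output later in this article. The helper names are hypothetical, and the script only prints the docker commands instead of running them, so it can be reviewed first.

```shell
#!/bin/sh
# Registry seen in the describe output of this article; replace with your own.
REGISTRY=registry.fjhb.cn

# local_name IMAGE: keep the last path component and prefix the registry,
# e.g. docker.io/elcolio/etcd becomes registry.fjhb.cn/etcd.
local_name() {
    printf '%s/%s\n' "$REGISTRY" "${1##*/}"
}

# print_mirror_cmds IMAGE...: print (not run) the pull, tag and push commands.
print_mirror_cmds() {
    for image in "$@"; do
        target=$(local_name "$image")
        echo "docker pull $image"
        echo "docker tag $image $target"
        echo "docker push $target"
    done
}

print_mirror_cmds \
    docker.io/elcolio/etcd \
    docker.io/port/kubernetes-kube2sky \
    docker.io/skynetservices/skydns \
    docker.io/wu1boy/healthz
```

Piping the output to `sh` would execute the commands. That step is left out here on purpose.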

2. Create the pod from the rc file

The pod contains all four components, each running in its own Docker container.

# cat skydns-rc.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: kube-dns
  namespace: default
  labels:
    k8s-app: kube-dns
    version: v12
    kubernetes.io/cluster-service: "true"
spec:
  replicas: 1
  selector:
    k8s-app: kube-dns
    version: v12
  template:
    metadata:
      labels:
        k8s-app: kube-dns
        version: v12
        kubernetes.io/cluster-service: "true"
    spec:
      containers:
      - name: etcd
        image: docker.io/elcolio/etcd  # replace with the image you actually use
        resources:
          limits:
            cpu: 100m
            memory: 50Mi
          requests:
            cpu: 100m
            memory: 50Mi
        command:
        - /bin/etcd
        - --data-dir
        - /tmp/data
        - --listen-client-urls
        - http://127.0.0.1:2379,http://127.0.0.1:4001
        - --advertise-client-urls
        - http://127.0.0.1:2379,http://127.0.0.1:4001
        - --initial-cluster-token
        - skydns-etcd
        volumeMounts:
        - name: etcd-storage
          mountPath: /tmp/data
      - name: kube2sky
        image: docker.io/port/kubernetes-kube2sky  # replace with the image you actually use
        resources:
          limits:
            cpu: 100m
            memory: 50Mi
          requests:
            cpu: 100m
            memory: 50Mi
        args:
        # Use your master's IP here (apiserver on port 8080). The 127.0.0.1:2379/4001
        # URLs elsewhere in this file point at the etcd container inside this pod.
        - -kube_master_url=http://192.168.115.5:8080
        - -domain=cluster.local
      - name: skydns
        image: docker.io/skynetservices/skydns  # replace with the image you actually use
        resources:
          limits:
            cpu: 100m
            memory: 50Mi
          requests:
            cpu: 100m
            memory: 50Mi
        args:
        - -machines=http://127.0.0.1:4001
        - -addr=0.0.0.0:53
        - -ns-rotate=false
        - -domain=cluster.local
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
      - name: healthz
        image: docker.io/wu1boy/healthz  # replace with the image you actually use
        resources:
          limits:
            cpu: 10m
            memory: 20Mi
          requests:
            cpu: 10m
            memory: 20Mi
        args:
        - -cmd=nslookup kubernetes.default.svc.cluster.local 127.0.0.1 >/dev/null
        - -port=8080
        ports:
        - containerPort: 8080
          protocol: TCP
      volumes:
      - name: etcd-storage
        emptyDir: {}
      dnsPolicy: Default
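In the rc file, kube2sky and skydns are both started with `-domain=cluster.local`. If the two values drifted apart, records would presumably be registered under one domain and queried under another, so it can be worth checking the file before creating the pod. The sketch below does that with plain text tools. The function names are hypothetical and the parsing assumes one `- -domain=...` argument per line, as in the file above.

```shell
#!/bin/sh
# list_domains FILE: print each distinct value passed as "- -domain=..." in FILE.
list_domains() {
    sed -n 's/^[[:space:]]*-[[:space:]]*-domain=\([^[:space:]#]*\).*$/\1/p' "$1" | sort -u
}

# domains_consistent FILE: succeed only if exactly one distinct domain is used.
domains_consistent() {
    [ $(list_domains "$1" | wc -l) -eq 1 ]
}

# Demo on a throwaway excerpt of the args used in this article.
sample=$(mktemp)
cat > "$sample" <<'EOF'
        - -kube_master_url=http://192.168.115.5:8080
        - -domain=cluster.local
        - -machines=http://127.0.0.1:4001
        - -domain=cluster.local
EOF
if domains_consistent "$sample"; then
    echo "domain flags agree: $(list_domains "$sample")"
else
    echo "domain flags differ or are missing" >&2
fi
rm -f "$sample"
```

Against the real file the call would be `domains_consistent skydns-rc.yaml`.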

3. Create the service from the svc file

# cat skydns-svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: default
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "KubeDNS"
spec:
  selector:
    k8s-app: kube-dns
  # Pick a free address inside the apiserver's --service-cluster-ip-range.
  # The annotator suggests arranging this IP on the service side first,
  # for example by creating the service through k8s-dashboard.
  clusterIP: 10.254.16.254
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP

# kubectl create -f skydns-rc.yaml

# kubectl create -f skydns-svc.yaml

# kubectl get rc

# kubectl get pod

# kubectl get svc

# kubectl describe svc kube-dns

# kubectl describe rc kube-dns

# kubectl describe pod kube-dns-9fllp

Name: kube-dns-9fllp
Namespace: default
Node: 192.168.115.6/192.168.115.6
Start Time: Tue, 23 Jan 2018 10:55:19 -0500
Labels: k8s-app=kube-dns
        kubernetes.io/cluster-service=true
        version=v12
Status: Running
IP: 172.16.37.5
Controllers: ReplicationController/kube-dns
Containers:
  etcd:
    Container ID: docker://62ad76bfaca1797c5f43b0e9eebc04074169fce4cc15ef3ffc4cd19ffa9c8c19
    Image: registry.fjhb.cn/etcd
    Image ID: docker-pullable://docker.io/elcolio/etcd@sha256:3b4dcd35a7eefea9ce2970c81dcdf0d0801a778d117735ee1d883222de8bbd9f
    Port:
    Command:
      /bin/etcd
      --data-dir
      /tmp/data
      --listen-client-urls
      http://127.0.0.1:2379,http://127.0.0.1:4001
      --advertise-client-urls
      http://127.0.0.1:2379,http://127.0.0.1:4001
      --initial-cluster-token
      skydns-etcd
    Limits:
      cpu: 100m
      memory: 50Mi
    Requests:
      cpu: 100m
      memory: 50Mi
    State: Running
      Started: Tue, 23 Jan 2018 10:55:23 -0500
    Ready: True
    Restart Count: 0
    Volume Mounts:
      /tmp/data from etcd-storage (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-6pddn (ro)
    Environment Variables:
  kube2sky:
    Container ID: docker://6b0bc6e8dce83e3eee5c7e654fbaca693730623fb7936a1fd9d73de1a1dd8152
    Image: registry.fjhb.cn/kubernetes-kube2sky
    Image ID: docker-pullable://docker.io/port/kubernetes-kube2sky@sha256:0230d3fbb0aeb4ddcf903811441cf2911769dbe317a55187f58ca84c95107ff5
    Port:
    Args:
      -kube_master_url=http://192.168.115.5:8080
      -domain=cluster.local
    Limits:
      cpu: 100m
      memory: 50Mi
    Requests:
      cpu: 100m
      memory: 50Mi
    State: Running
      Started: Tue, 23 Jan 2018 10:55:25 -0500
    Ready: True
    Restart Count: 0
    Volume Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-6pddn (ro)
    Environment Variables:
  skydns:
    Container ID: docker://ebc2aaaa54e2f922e370e454ec537665d813c69d37a21e3afd908e6dad056627
    Image: registry.fjhb.cn/skydns
    Image ID: docker-pullable://docker.io/skynetservices/skydns@sha256:6f8a9cff0b946574bb59804016d3aacebc637581bace452db6a7515fa2df79ee
    Ports: 53/UDP, 53/TCP
    Args:
      -machines=http://127.0.0.1:4001
      -addr=0.0.0.0:53
      -ns-rotate=false
      -domain=cluster.local
    Limits:
      cpu: 100m
      memory: 50Mi
    Requests:
      cpu: 100m
      memory: 50Mi
    State: Running
      Started: Tue, 23 Jan 2018 10:55:27 -0500
    Ready: True
    Restart Count: 0
    Volume Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-6pddn (ro)
    Environment Variables:
  healthz:
    Container ID: docker://f1de1189fa6b51281d414d7a739b86494b04c8271dc6bb5f20c51fac15ec9601
    Image: registry.fjhb.cn/healthz
    Image ID: docker-pullable://docker.io/wu1boy/healthz@sha256:d6690c0a8cc4f810a5e691b6a9b8b035192cb967cb10e91c74824bb4c8eea796
    Port: 8080/TCP
    Args:
      -cmd=nslookup kubernetes.default.svc.cluster.local 127.0.0.1 >/dev/null
      -port=8080
    Limits:
      cpu: 10m
      memory: 20Mi
    Requests:
      cpu: 10m
      memory: 20Mi
    State: Running
      Started: Tue, 23 Jan 2018 10:55:29 -0500
    Ready: True
    Restart Count: 0
    Volume Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-6pddn (ro)
    Environment Variables:
Conditions:
  Type Status
  Initialized True
  Ready True
  PodScheduled True
Volumes:
  etcd-storage:
    Type: EmptyDir (a temporary directory that shares a pod's lifetime)
    Medium:
  default-token-6pddn:
    Type: Secret (a volume populated by a Secret)
    SecretName: default-token-6pddn
QoS Class: Guaranteed
Tolerations:

Events:
  FirstSeen LastSeen Count From SubObjectPath Type Reason Message
  --------- -------- ----- ---- ------------- -------- ------ -------
  7m 7m 1 {default-scheduler } Normal Scheduled Successfully assigned kube-dns-9fllp to 192.168.115.6
  7m 7m 1 {kubelet 192.168.115.6} spec.containers{etcd} Normal Pulling pulling image "registry.fjhb.cn/etcd"
  7m 7m 1 {kubelet 192.168.115.6} spec.containers{etcd} Normal Pulled Successfully pulled image "registry.fjhb.cn/etcd"
  7m 7m 1 {kubelet 192.168.115.6} spec.containers{etcd} Normal Created Created container with docker id 62ad76bfaca1; Security:[seccomp=unconfined]
  7m 7m 1 {kubelet 192.168.115.6} spec.containers{kube2sky} Normal Pulled Successfully pulled image "registry.fjhb.cn/kubernetes-kube2sky"
  7m 7m 1 {kubelet 192.168.115.6} spec.containers{etcd} Normal Started Started container with docker id 62ad76bfaca1
  7m 7m 1 {kubelet 192.168.115.6} spec.containers{kube2sky} Normal Pulling pulling image "registry.fjhb.cn/kubernetes-kube2sky"
  7m 7m 1 {kubelet 192.168.115.6} spec.containers{kube2sky} Normal Created Created container with docker id 6b0bc6e8dce8; Security:[seccomp=unconfined]
  7m 7m 1 {kubelet 192.168.115.6} spec.containers{skydns} Normal Pulled Successfully pulled image "registry.fjhb.cn/skydns"
  7m 7m 1 {kubelet 192.168.115.6} spec.containers{skydns} Normal Pulling pulling image "registry.fjhb.cn/skydns"
  7m 7m 1 {kubelet 192.168.115.6} spec.containers{kube2sky} Normal Started Started container with docker id 6b0bc6e8dce8
  7m 7m 1 {kubelet 192.168.115.6} spec.containers{skydns} Normal Created Created container with docker id ebc2aaaa54e2; Security:[seccomp=unconfined]
  7m 7m 1 {kubelet 192.168.115.6} spec.containers{skydns} Normal Started Started container with docker id ebc2aaaa54e2
  7m 7m 1 {kubelet 192.168.115.6} spec.containers{healthz} Normal Pulling pulling image "registry.fjhb.cn/healthz"
  7m 7m 1 {kubelet 192.168.115.6} spec.containers{healthz} Normal Pulled Successfully pulled image "registry.fjhb.cn/healthz"
  7m 7m 1 {kubelet 192.168.115.6} spec.containers{healthz} Normal Created Created container with docker id f1de1189fa6b; Security:[seccomp=unconfined]
  7m 7m 1 {kubelet 192.168.115.6} spec.containers{healthz} Normal Started Started container with docker id f1de1189fa6b

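4. Edit the kubelet configuration and restart the service

Note:

--cluster-dns must match the clusterIP in the svc file above.

--cluster-domain must match the -domain argument in the rc file above.

Every kubelet node in the cluster needs this change.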

# grep 'KUBELET_ADDRESS' /etc/kubernetes/kubelet

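KUBELET_ADDRESS="--address=192.168.115.5 --cluster-dns=10.254.16.254 --cluster-domain=cluster.local" # --address is the address this kubelet listens on (the master in this example); --cluster-dns is the cluster IP you assigned to the DNS service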

# systemctl restart kubelet

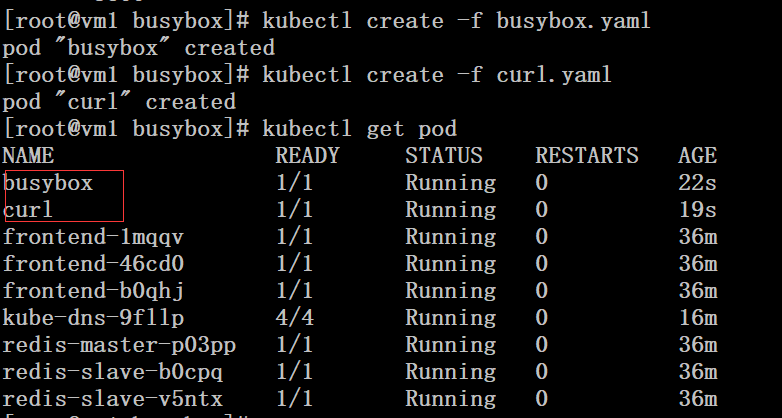
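Because the kubelet setting and the service manifest have to agree, a small check can catch a mismatch before pods start with a wrong nameserver. The sketch below compares `--cluster-dns` in a kubelet config file with `clusterIP` in a service manifest. The function names are hypothetical, and the parsing assumes the simple one-line formats shown in this article.

```shell
#!/bin/sh
# flag_value FLAG FILE: print the value of the first --FLAG=VALUE found in FILE.
flag_value() {
    sed -n "s/.*--$1=\([^ \"]*\).*/\1/p" "$2" | head -n 1
}

# cluster_ip FILE: print the first clusterIP value found in a service manifest.
cluster_ip() {
    sed -n 's/^[[:space:]]*clusterIP:[[:space:]]*\([^[:space:]#]*\).*/\1/p' "$1" | head -n 1
}

# dns_ip_matches KUBELET_FILE SVC_FILE: succeed if both files name the same DNS IP.
dns_ip_matches() {
    want=$(cluster_ip "$2")
    [ -n "$want" ] && [ "$(flag_value cluster-dns "$1")" = "$want" ]
}

# Demo on throwaway copies of the two settings used in this article.
tmpdir=$(mktemp -d)
echo 'KUBELET_ADDRESS="--address=192.168.115.5 --cluster-dns=10.254.16.254 --cluster-domain=cluster.local"' > "$tmpdir/kubelet"
printf 'spec:\n  clusterIP: 10.254.16.254\n' > "$tmpdir/svc.yaml"
if dns_ip_matches "$tmpdir/kubelet" "$tmpdir/svc.yaml"; then
    echo "cluster-dns matches clusterIP $(cluster_ip "$tmpdir/svc.yaml")"
else
    echo "cluster-dns and clusterIP differ" >&2
fi
rm -rf "$tmpdir"
```

On a node the call would be `dns_ip_matches /etc/kubernetes/kubelet skydns-svc.yaml`. The same `flag_value` helper can read `cluster-domain` for comparison with the `-domain` argument.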

5. Run a busybox pod and a curl pod to test

# cat busybox.yaml

apiVersion: v1
kind: Pod
metadata:
  name: busybox
spec:
  containers:
  - name: busybox
    image: docker.io/busybox
    command:
    - sleep
    - "3600"

# cat curl.yaml

apiVersion: v1
kind: Pod
metadata:
  name: curl
spec:
  containers:
  - name: curl
    image: docker.io/webwurst/curl-utils
    command:
    - sleep
    - "3600"

# kubectl create -f busybox.yaml

# kubectl create -f curl.yaml

Resolving the Kubernetes services from the busybox container shows that each service name resolves to its cluster IP, not to a Docker address in the 172.16 network.

# kubectl get svc

# kubectl exec busybox -- nslookup frontend

# kubectl exec busybox -- nslookup redis-master

# kubectl exec busybox -- nslookup redis-slave

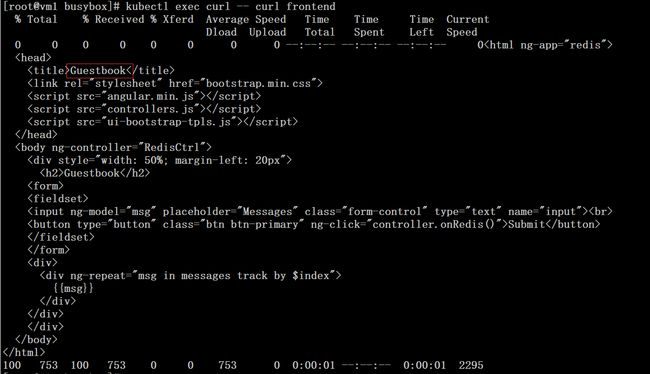
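When reading the nslookup results, it helps to tell the two address families apart at a glance. The sketch below classifies an address by prefix. The ranges are assumptions inferred from this article (10.254.x.x from the kube-dns clusterIP, 172.16.x.x from the pod IP in the describe output), and the helper name `addr_kind` is hypothetical. Adjust both to your own cluster.

```shell
#!/bin/sh
# addr_kind IP: classify an address by the networks used in this article.
# Assumed ranges: 10.254.0.0/16 for services, 172.16.0.0/16 for pods.
addr_kind() {
    case $1 in
        10.254.*) echo service ;;
        172.16.*) echo pod ;;
        *)        echo other ;;
    esac
}

addr_kind 10.254.16.254   # the kube-dns clusterIP from skydns-svc.yaml
addr_kind 172.16.37.5     # the kube-dns pod IP from the describe output
```

A name that resolves to an address of kind `service` is going through the cluster IP, which is the behavior described above.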

Access the PHP guestbook created earlier from the curl container:

# kubectl exec curl -- curl frontend