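I. Overview

Redis has supported Cluster mode since version 3.0. Redis Cluster currently provides the following features:

Automatic node discovery, slave-to-master election, cluster fault tolerance, and hot resharding (online resharding).

Cluster management: the cluster xxx commands, cluster management based on a configuration file (nodes-port.conf), and the ASK / MOVED redirection mechanisms.

II. Installing Redis Cluster

1. Download and unpack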

cd /usr/local/

wget http://download.redis.io/releases/redis-4.0.1.tar.gz

tar -xvzf redis-4.0.1.tar.gz

2. Compile and install

cd redis-4.0.1

make && make install

3. Create the Redis nodes

Use two servers, 192.168.59.131 and 192.168.59.130, with three nodes on each.

First create three nodes on 192.168.59.131:

cd /usr/local/

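# Create the cluster directory

mkdir redis_cluster

# One subdirectory per node, named after its port: 7000, 7001, 7002

mkdir redis_cluster/7000 redis_cluster/7001 redis_cluster/7002

# Taking node 7000 as an example, copy the default configuration into the 7000 directory

cp /usr/local/redis-4.0.1/redis.conf ./redis_cluster/7000/

# Copy it into the 7001 directory

cp /usr/local/redis-4.0.1/redis.conf ./redis_cluster/7001/

# Copy it into the 7002 directory

cp /usr/local/redis-4.0.1/redis.conf ./redis_cluster/7002/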

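Edit the redis.conf in each of the 7000, 7001, and 7002 directories with the matching settings (the values shown are for node 7000):

# Run Redis in the background
daemonize yes

# PID file, one per node: redis_7000.pid, redis_7001.pid, redis_7002.pid
pidfile /var/run/redis_7000.pid

# Bind to this server's IP address
bind 192.168.59.131

# Listening port: 7000, 7001, 7002
port 7000

# Enable cluster mode (remove the leading # from this line)
cluster-enabled yes

# Cluster state file, generated automatically on first start: nodes_7000.conf, nodes_7001.conf, nodes_7002.conf
cluster-config-file nodes_7000.conf

# Node timeout in milliseconds; 5 seconds is enough
cluster-node-timeout 5000

# Enable the AOF log if you need it; every write operation is appended to it
appendonly yes

On 192.168.59.130, create three nodes the same way, using ports 7003, 7004, and 7005, and adjust the configuration accordingly (including bind 192.168.59.130).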
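Editing several copies of redis.conf by hand makes port mix-ups easy to miss. As a rough sketch, the per-node files could instead be generated from the stock template. The function name gen_node_conf and its argument order are made up for illustration and are not part of Redis; the directive values are the ones listed above.

```shell
# Sketch only: gen_node_conf is a hypothetical helper, not a Redis tool.
# Usage: gen_node_conf <template redis.conf> <cluster dir> <bind ip> <port>
gen_node_conf() {
    local template=$1 outdir=$2 ip=$3 port=$4
    mkdir -p "$outdir/$port"
    # Copy the template, dropping active lines for the directives set below
    # so that each of them appears exactly once in the result.
    grep -Ev '^[[:space:]]*(daemonize|pidfile|bind|port|cluster-enabled|cluster-config-file|cluster-node-timeout|appendonly)[[:space:]]' \
        "$template" > "$outdir/$port/redis.conf"
    # Append the per-node values.
    cat >> "$outdir/$port/redis.conf" <<EOF
daemonize yes
pidfile /var/run/redis_${port}.pid
bind ${ip}
port ${port}
cluster-enabled yes
cluster-config-file nodes_${port}.conf
cluster-node-timeout 5000
appendonly yes
EOF
}
```

On the first machine this would be called once per port, for example gen_node_conf /usr/local/redis-4.0.1/redis.conf /usr/local/redis_cluster 192.168.59.131 7000, and likewise for 7001 and 7002.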

4. Start the nodes on both machines

On the first machine, run (from /usr/local):

redis-server redis_cluster/7000/redis.conf

redis-server redis_cluster/7001/redis.conf

redis-server redis_cluster/7002/redis.conf

On the other machine, run:

redis-server redis_cluster/7003/redis.conf

redis-server redis_cluster/7004/redis.conf

redis-server redis_cluster/7005/redis.conf

5. Check the services

ps -ef | grep redis # check that the processes started

netstat -tnlp | grep redis # show the ports Redis is listening on

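III. Creating the cluster

The Redis nodes are now ready; the next step is to join them into a cluster.

Redis ships a tool for this, redis-trib.rb (/usr/local/redis-4.0.1/src/redis-trib.rb). As the extension suggests, it is written in Ruby, so Ruby has to be installed first.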

yum -y install ruby ruby-devel rubygems rpm-build

Then use gem, Ruby's package manager, to install the Redis client library for Ruby.

gem install redis

Next, run redis-trib.rb without arguments to see its usage:

[root@localhost local]# /usr/local/redis-4.0.1/src/redis-trib.rb

Usage: redis-trib

  create          host1:port1 ... hostN:portN

                  --replicas

  check           host:port

  info            host:port

  fix             host:port

                  --timeout

  reshard         host:port

                  --from

                  --to

                  --slots

                  --yes

                  --timeout

                  --pipeline

  rebalance       host:port

                  --weight

                  --auto-weights

                  --use-empty-masters

                  --timeout

                  --simulate

                  --pipeline

                  --threshold

  add-node        new_host:new_port existing_host:existing_port

                  --slave

                  --master-id

  del-node        host:port node_id

  set-timeout     host:port milliseconds

  call            host:port command arg arg .. arg

  import          host:port

                  --from

                  --copy

                  --replace

  help            (show this help)

For check, fix, reshard, del-node, set-timeout you can specify the host and port of any working node in the cluster.

Once all nodes are confirmed to be running, create the cluster with the create subcommand (run on 192.168.59.131):

/usr/local/redis-4.0.1/src/redis-trib.rb create --replicas 1 192.168.59.131:7000 192.168.59.131:7001 192.168.59.131:7002 192.168.59.130:7003 192.168.59.130:7004 192.168.59.130:7005

--replicas 1 assigns one slave to every master automatically. With the six nodes above, the tool follows its allocation rules and produces 3 masters and 3 slaves.

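The firewall must allow the listening ports, otherwise cluster creation fails. Each node also uses a cluster bus port (its client port plus 10000) for node-to-node traffic, and that port must be reachable as well.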
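To make the firewall requirement concrete, the sketch below lists, for each node, the client port together with the cluster bus port that Redis Cluster derives from it (client port + 10000). The function name cluster_ports is illustrative only.

```shell
# Sketch only: cluster_ports is a hypothetical helper.
# Prints "<client port> <cluster bus port>" for every port given.
cluster_ports() {
    local port
    for port in "$@"; do
        echo "$port $((port + 10000))"
    done
}

# Ports used on 192.168.59.131 in this article
cluster_ports 7000 7001 7002
# prints:
# 7000 17000
# 7001 17001
# 7002 17002
```

How the ports are opened depends on the system. On a firewalld-based host, for instance, each one could be allowed with something like firewall-cmd --permanent --add-port=7000/tcp followed by firewall-cmd --reload; treat that as an assumption about the environment, since the article does not say which firewall is in use.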

During the run, the tool asks: Can I set the above configuration? (type 'yes' to accept): type yes to continue.

[root@localhost local]# /usr/local/redis-4.0.1/src/redis-trib.rb create --replicas 1 192.168.59.131:7000 192.168.59.131:7001 192.168.59.131:7002 192.168.59.130:7003 192.168.59.130:7004 192.168.59.130:7005

>>> Creating cluster

>>> Performing hash slots allocation on 6 nodes...

Using 3 masters:

192.168.59.131:7000

192.168.59.130:7003

192.168.59.131:7001

Adding replica 192.168.59.130:7004 to 192.168.59.131:7000

Adding replica 192.168.59.131:7002 to 192.168.59.130:7003

Adding replica 192.168.59.130:7005 to 192.168.59.131:7001

M: 048937fd4ac542c135e7527f681be12348e8c8d0 192.168.59.131:7000

slots:0-5460 (5461 slots) master

M: 2039bc32bf55d21d74296cdc91a316022a7afc4b 192.168.59.131:7001

slots:10923-16383 (5461 slots) master

S: 76514c28d0a40f9751ccaf117d1f8f1d0878475a 192.168.59.131:7002

replicates a58a304964ff10d10f4323341f7084c9f60f5124

M: a58a304964ff10d10f4323341f7084c9f60f5124 192.168.59.130:7003

slots:5461-10922 (5462 slots) master

S: 7512d76b416d09619d3b639a94945a676d7b8826 192.168.59.130:7004

replicates 048937fd4ac542c135e7527f681be12348e8c8d0

S: 7824b9721cb21de60c8d132d668fd3ac5c4eae49 192.168.59.130:7005

replicates 2039bc32bf55d21d74296cdc91a316022a7afc4b

Can I set the above configuration? (type 'yes' to accept): yes

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join..

>>> Performing Cluster Check (using node 192.168.59.131:7000)

M: 048937fd4ac542c135e7527f681be12348e8c8d0 192.168.59.131:7000

slots:0-5460 (5461 slots) master

1 additional replica(s)

S: 76514c28d0a40f9751ccaf117d1f8f1d0878475a 192.168.59.131:7002

slots: (0 slots) slave

replicates a58a304964ff10d10f4323341f7084c9f60f5124

M: a58a304964ff10d10f4323341f7084c9f60f5124 192.168.59.130:7003

slots:5461-10922 (5462 slots) master

1 additional replica(s)

S: 7512d76b416d09619d3b639a94945a676d7b8826 192.168.59.130:7004

slots: (0 slots) slave

replicates 048937fd4ac542c135e7527f681be12348e8c8d0

M: 2039bc32bf55d21d74296cdc91a316022a7afc4b 192.168.59.131:7001

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: 7824b9721cb21de60c8d132d668fd3ac5c4eae49 192.168.59.130:7005

slots: (0 slots) slave

replicates 2039bc32bf55d21d74296cdc91a316022a7afc4b

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

At this point the cluster is up and running in its basic form.
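The numbers in the output above can be reproduced by hand: six nodes with --replicas 1 give 6 / (1 + 1) = 3 masters, and the 16384 slots are split across them as evenly as possible. The sketch below is a reconstruction that matches this output; the function name slot_plan and the integer rounding formula are assumptions for illustration, not code taken from redis-trib.rb.

```shell
# Sketch only: slot_plan is a hypothetical helper that mirrors the
# allocation shown in the create output above.
# Usage: slot_plan <number of nodes> <replicas per master>
slot_plan() {
    local nodes=$1 replicas=$2
    local masters=$(( nodes / (replicas + 1) ))
    local total=16384 first=0 last i=1
    # Redis Cluster needs at least 3 masters.
    if [ "$masters" -lt 3 ]; then
        echo "need at least 3 masters, got $masters" >&2
        return 1
    fi
    echo "masters: $masters"
    while [ "$i" -le "$masters" ]; do
        if [ "$i" -eq "$masters" ]; then
            # The last master takes everything up to slot 16383.
            last=$(( total - 1 ))
        else
            # round(i * total / masters) - 1, in integer arithmetic
            last=$(( (2 * i * total + masters) / (2 * masters) - 1 ))
        fi
        echo "$first-$last ($(( last - first + 1 )) slots)"
        first=$(( last + 1 ))
        i=$(( i + 1 ))
    done
}

slot_plan 6 1
# prints:
# masters: 3
# 0-5460 (5461 slots)
# 5461-10922 (5462 slots)
# 10923-16383 (5461 slots)
```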

IV. Testing

- Getting and setting data

[root@localhost local]# redis-cli -h 192.168.59.131 -c -p 7002

192.168.59.131:7002> keys *

(empty list or set)

[root@localhost local]# redis-cli -h 192.168.59.130 -c -p 7005

192.168.59.130:7005> set hello world

-> Redirected to slot [866] located at 192.168.59.131:7000

OK

192.168.59.131:7000>

[root@localhost local]# redis-cli -h 192.168.59.131 -c -p 7002

192.168.59.131:7002> get hello

-> Redirected to slot [866] located at 192.168.59.131:7000

"world"

192.168.59.131:7000>
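The redirection to slot 866 follows from how Redis Cluster places keys: the slot is CRC16(key) mod 16384, using the XMODEM variant of CRC16. The sketch below recomputes that value in plain shell. The function name key_slot is illustrative, and hash tags (the {...} syntax) are deliberately not handled, so it only applies to keys without braces.

```shell
# Sketch only: key_slot is a hypothetical helper.
# Computes CRC16/XMODEM (polynomial 0x1021, initial value 0) over the
# bytes of the key and reduces it modulo 16384. Hash tags are ignored.
key_slot() {
    local crc=0 byte bit
    # od prints the key as unsigned decimal bytes, one word per byte.
    for byte in $(printf '%s' "$1" | od -An -v -tu1); do
        crc=$(( crc ^ (byte << 8) ))
        bit=0
        while [ "$bit" -lt 8 ]; do
            if [ $(( crc & 0x8000 )) -ne 0 ]; then
                crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
            else
                crc=$(( (crc << 1) & 0xFFFF ))
            fi
            bit=$(( bit + 1 ))
        done
    done
    echo $(( crc % 16384 ))
}

key_slot hello
# prints: 866
```

Slot 866 lies in the range 0-5460, which the create output assigned to 192.168.59.131:7000, hence the redirection seen in both sessions.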

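- Simulating failover

A. Take down one of the masters on 192.168.59.131 (this machine hosts 2 masters and 1 slave).

Test again: everything still works and the cluster keeps serving requests.

Reason: Redis Cluster achieves fault tolerance through election, so it keeps running when one service dies. When more than half of all masters in the cluster agree that another master is down, the slave of the failed master is promoted to master.

B. What if the whole 192.168.59.131 machine goes down? The result is (error) CLUSTERDOWN The cluster is down. Nothing can be done: more than half of the masters are gone, so the whole cluster stops working.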
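The difference between cases A and B comes down to a majority count among the masters. A minimal sketch of that rule, with has_majority as an illustrative name:

```shell
# Sketch only: has_majority is a hypothetical helper.
# Succeeds when the surviving masters form a strict majority of all masters.
# Usage: has_majority <total masters> <masters still alive>
has_majority() {
    local total=$1 alive=$2
    [ "$alive" -ge $(( total / 2 + 1 )) ]
}

# Case A: one of three masters is down, two remain
if has_majority 3 2; then echo "A: failover possible"; else echo "A: cluster down"; fi
# Case B: 192.168.59.131 is down and takes two of three masters with it
if has_majority 3 1; then echo "B: failover possible"; else echo "B: cluster down"; fi
# prints:
# A: failover possible
# B: cluster down
```

This is why placing two of the three masters on the same machine is risky: losing that one machine removes the majority needed to promote any slave.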

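V. Common commands

- Inspecting the cluster

redis-trib.rb check ip:port # check the cluster state

redis-cli -c -h ip -p port # -c enables cluster mode, so the client follows redirections

redis-trib.rb rebalance ip:port --auto-weights # rebalance slots across the masters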

[root@localhost script]# /usr/local/redis-4.0.1/src/redis-trib.rb info 192.168.59.131:7000

192.168.59.130:7003 (a58a3049...) -> 0 keys | 5462 slots | 1 slaves.

192.168.59.130:7005 (7824b972...) -> 0 keys | 5461 slots | 1 slaves.

192.168.59.130:7004 (7512d76b...) -> 0 keys | 5461 slots | 1 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

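- Adding and removing cluster nodes

redis-trib.rb add-node ip:port ip:port # first the new node, then any existing working node

redis-trib.rb del-node ip:port id # id of the node to remove; before deleting a master, use reshard to move all of its slots to other masters

redis-trib.rb reshard ip:port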

[root@localhost script]# /usr/local/redis-4.0.1/src/redis-trib.rb reshard 192.168.59.131:7001

>>> Performing Cluster Check (using node 192.168.59.131:7001)

S: 2039bc32bf55d21d74296cdc91a316022a7afc4b 192.168.59.131:7001

slots: (0 slots) slave

replicates 7824b9721cb21de60c8d132d668fd3ac5c4eae49

M: 7824b9721cb21de60c8d132d668fd3ac5c4eae49 192.168.59.130:7005

slots:10923-16383 (5461 slots) master

1 additional replica(s)

S: 048937fd4ac542c135e7527f681be12348e8c8d0 192.168.59.131:7000

slots: (0 slots) slave

replicates 7512d76b416d09619d3b639a94945a676d7b8826

M: 7512d76b416d09619d3b639a94945a676d7b8826 192.168.59.130:7004

slots:0-5460 (5461 slots) master

1 additional replica(s)

S: 76514c28d0a40f9751ccaf117d1f8f1d0878475a 192.168.59.131:7002

slots: (0 slots) slave

replicates a58a304964ff10d10f4323341f7084c9f60f5124

M: a58a304964ff10d10f4323341f7084c9f60f5124 192.168.59.130:7003

slots:5461-10922 (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 5461

What is the receiving node ID? 7824b9721cb21de60c8d132d668fd3ac5c4eae49

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1:7512d76b416d09619d3b639a94945a676d7b8826

Source node #2:done

Ready to move 5461 slots.

Source nodes:

M: 7512d76b416d09619d3b639a94945a676d7b8826 192.168.59.130:7004

slots:0-5460 (5461 slots) master

1 additional replica(s)

Destination node:

M: 7824b9721cb21de60c8d132d668fd3ac5c4eae49 192.168.59.130:7005

slots:10923-16383 (5461 slots) master

1 additional replica(s)

Resharding plan:

Moving slot 0 from 7512d76b416d09619d3b639a94945a676d7b8826

........................

.........................

Moving slot 5460 from 7512d76b416d09619d3b639a94945a676d7b8826

Do you want to proceed with the proposed reshard plan (yes/no)? yes

Moving slot 0 from 192.168.59.130:7004 to 192.168.59.130:7005:

........................

.........................

Moving slot 5460 from 192.168.59.130:7004 to 192.168.59.130:7005:

[root@localhost script]#
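The prompts in the session above can also be answered on the command line, since the usage text lists --from, --to, --slots and --yes for reshard. The helper below only prints the resulting command so it can be reviewed before it is run; reshard_cmd is a hypothetical name, and the node IDs are the ones from the session above.

```shell
# Sketch only: reshard_cmd is a hypothetical helper that prints, but does
# not execute, a non-interactive reshard command.
# Usage: reshard_cmd <any node host:port> <source node id> <target node id> <slot count>
reshard_cmd() {
    local entry=$1 from=$2 to=$3 slots=$4
    echo "/usr/local/redis-4.0.1/src/redis-trib.rb reshard --from $from --to $to --slots $slots --yes $entry"
}

# Same move as in the interactive session: 5461 slots from node 7004 to node 7005
reshard_cmd 192.168.59.131:7001 \
    7512d76b416d09619d3b639a94945a676d7b8826 \
    7824b9721cb21de60c8d132d668fd3ac5c4eae49 \
    5461
```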

- Converting a master into a slave

cluster replicate master-id # the node being converted (192.168.59.130:7004 here) must hold no slots first

[root@localhost script]# redis-cli -h 192.168.59.130 -c -p 7004

192.168.59.130:7004> cluster replicate 7824b9721cb21de60c8d132d668fd3ac5c4eae49

OK

192.168.59.130:7004> quit

六、深入了解

-

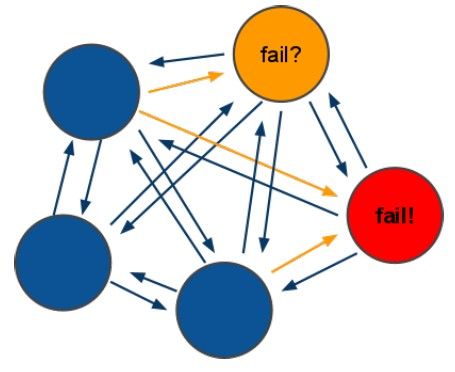

redis-cluster架构图

(1)所有的redis节点彼此互联(PING-PONG机制),内部使用二进制协议优化传输速度和带宽.

(2)节点的fail是通过集群中超过半数的节点检测失效时才生效.

(3)客户端与redis节点直连,不需要中间proxy层.客户端不需要连接集群所有节点,连接集群中任何一个可用节点即可

(4)redis-cluster把所有的物理节点映射到[0-16383]slot上,cluster 负责维护node<->slot<->value-

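Redis Cluster election and fault tolerance

(1) All masters in the cluster take part in the election. If more than half of the masters fail to reach a given master for longer than cluster-node-timeout, that master is considered down.

(2) When does the whole cluster become unavailable (cluster_state:fail)? While the cluster is unavailable, every operation against it fails with (error) CLUSTERDOWN The cluster is down.

a: If any master goes down and it has no slave, the cluster enters the fail state. Put differently, the cluster fails whenever the slot mapping [0-16383] is incomplete.

b: If more than half of the masters go down, the cluster enters the fail state regardless of whether they have slaves.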
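Condition a can be checked mechanically by adding up the slot ranges that are still served. The sketch below does that for ranges written as first-last, the way redis-trib.rb prints them. covered_slots and cluster_ok are illustrative names, and the ranges are assumed not to overlap.

```shell
# Sketch only: covered_slots and cluster_ok are hypothetical helpers.
# covered_slots sums the sizes of non-overlapping "first-last" ranges.
covered_slots() {
    local total=0 range first last
    for range in "$@"; do
        first=${range%-*}
        last=${range#*-}
        total=$(( total + last - first + 1 ))
    done
    echo "$total"
}

# cluster_ok succeeds only when all 16384 slots are covered.
cluster_ok() {
    [ "$(covered_slots "$@")" -eq 16384 ]
}

# All three masters serving: full coverage
cluster_ok 0-5460 5461-10922 10923-16383 && echo "ok"
# The master for 5461-10922 is gone and has no slave to take over
cluster_ok 0-5460 10923-16383 || echo "fail: $(covered_slots 0-5460 10923-16383) of 16384 slots covered"
# prints:
# ok
# fail: 10922 of 16384 slots covered
```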

Reference: http://www.cnblogs.com/yuanermen/p/5717885.html