Preparation

Environment planning

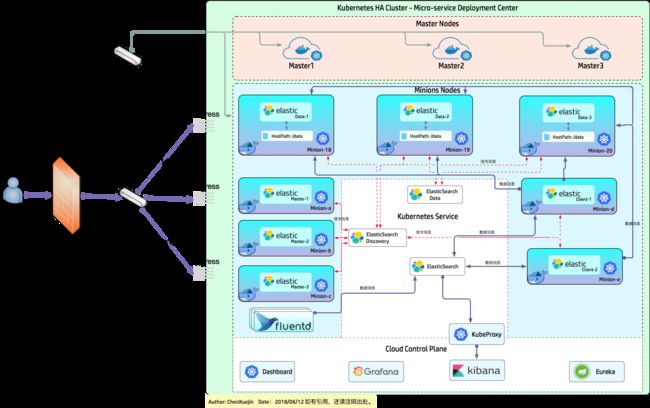

We use Kubernetes to launch and manage the entire ES cluster.

Reference: https://github.com/pires/kubernetes-elasticsearch-cluster

| Node type | Count | Memory | JVM heap |
|---|---|---|---|
| Master | 3 | 16GB | 512MB |
| Client | 2 | 16GB | 6GB |
| Data | 3 | 16GB | 8GB |
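The heap sizes in the table map directly onto the `ES_JAVA_OPTS` values used in the manifests later on. The sketch below is illustrative only: the helper name `es_java_opts` is made up, and the check that the heap stays at or below half of the node memory is a commonly cited Elasticsearch guideline, not something this article states.

```shell
#!/bin/sh
# Print an ES_JAVA_OPTS string for a heap size given in MB.
# Refuses heaps larger than half of the node memory (also in MB).
# The 50% ceiling is an assumed guideline, not a rule from this article.
es_java_opts() {
    heap_mb=$1
    node_mem_mb=$2
    if [ "$heap_mb" -gt $(( node_mem_mb / 2 )) ]; then
        echo "heap ${heap_mb}m exceeds half of ${node_mem_mb}m" >&2
        return 1
    fi
    # Equal -Xms and -Xmx, matching the values used in the manifests.
    printf '%s\n' "-Xms${heap_mb}m -Xmx${heap_mb}m"
}

es_java_opts 512 16384     # Master
es_java_opts 6144 16384    # Client
es_java_opts 8192 16384    # Data
```

All three rows of the table pass the check; the Data row sits exactly at the 50% boundary.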

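Master nodes

Master nodes need no special preparation. To save resources, they share Minions with business workloads.

Client nodes

In principle, Client nodes should also run on dedicated Minions, and you can do so if resources allow.

We again chose the resource-saving approach and share Minions with business workloads.

The Kubernetes scheduler sometimes places Pods poorly, so check the state of the target nodes when bringing the ES nodes up.

Data node preparation

ES Data nodes are stateful and must persist data to disk, so we set aside three machines for data storage.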

| Minion node | CPU | Memory | Disk |
|---|---|---|---|
| minion18 | 4 cores | 16GB | 500GB |
| minion19 | 4 cores | 16GB | 500GB |
| minion20 | 4 cores | 16GB | 500GB |

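Deployment architecture

Kubernetes cluster preparation

A Kubernetes cluster is assumed to exist. Setting one up is not repeated here; I have covered it in a separate article, and you can also follow the official Kubernetes documentation.

Adding the Data nodes to the cluster

Join Minion18, Minion19, and Minion20 to the cluster, then label them with efknode=efk so that the ES Data Pods can later be scheduled onto them.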

    [centos@master1 ~]$ kubectl get nodes
    NAME       STATUS    ROLES     AGE       VERSION
    master1    Ready     master    54d       v1.10.3
    master2    Ready     master    54d       v1.10.3
    master3    Ready     master    54d       v1.10.3
    minion1    Ready     <none>    35d       v1.10.3
    minion10   Ready     <none>    35d       v1.10.3
    minion11   Ready     <none>    5h        v1.10.3
    minion12   Ready     <none>    35d       v1.10.3
    minion13   Ready     <none>    1d        v1.10.3
    minion14   Ready     <none>    35d       v1.10.3
    minion15   Ready     <none>    35d       v1.10.3
    minion16   Ready     <none>    35d       v1.10.3
    minion17   Ready     <none>    7d        v1.10.3
    minion18   Ready     <none>    2d        v1.10.3
    minion19   Ready     <none>    2d        v1.10.3
    minion2    Ready     <none>    7d        v1.10.3
    minion20   Ready     <none>    2d        v1.10.3
    minion21   Ready     <none>    7d        v1.10.3
    minion22   Ready     <none>    7d        v1.10.3
    minion3    Ready     <none>    35d       v1.10.3
    minion4    Ready     <none>    35d       v1.10.3
    minion5    Ready     <none>    35d       v1.10.3
    minion6    Ready     <none>    35d       v1.10.3
    minion7    Ready     <none>    35d       v1.10.3
    minion8    Ready     <none>    35d       v1.10.3
    minion9    Ready     <none>    35d       v1.10.3
    [centos@master1 ~]$ kubectl label node minion18 efknode=efk
    [centos@master1 ~]$ kubectl label node minion19 efknode=efk
    [centos@master1 ~]$ kubectl label node minion20 efknode=efk
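The three label commands above can be wrapped in a small loop. This is a sketch, not part of the original procedure: the function name `label_efk_nodes` and the `KUBECTL` override variable are made up here, and `--overwrite` is added so that re-running the loop does not fail on nodes that already carry the label.

```shell
#!/bin/sh
# KUBECTL can be overridden (for example KUBECTL=echo) to preview the
# commands without touching the cluster. This variable is an assumption
# of the sketch, not something kubectl itself reads.
KUBECTL=${KUBECTL:-kubectl}

# Apply the efknode=efk label to every node name passed as an argument.
label_efk_nodes() {
    for node in "$@"; do
        $KUBECTL label node "$node" efknode=efk --overwrite || return 1
    done
}

# Usage:
#   label_efk_nodes minion18 minion19 minion20
#   kubectl get nodes -l efknode=efk    # list the labeled nodes
```

Listing nodes with the `-l efknode=efk` selector afterwards is a quick way to confirm that exactly the three storage hosts carry the label.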

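Data node file system preparation

Option 1: GlusterFS

The official ES guidance advises against storing data on distributed file systems (NFS, GlusterFS, and the like), because they significantly hurt ES performance.

Unwilling to give up, I tried GlusterFS anyway (with Red Hat behind it, I still have a soft spot for GlusterFS). However, ES on GlusterFS runs into a maddening exception. Either GlusterFS or ES reportedly fixed it after version 3.10, but the problem remained after I upgraded to the latest version.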

Elasticsearch get CorruptIndexException errors when running with GlusterFS persistent storage

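After struggling for nearly a week, I gave up on GlusterFS as the storage for ES.

Option 2: Local Volume

Remove the GlusterFS deployment that Kubernetes had brought up and reuse the freed disk space.

While GlusterFS was running, the disks only had to be attached to the hosts and did not need to be mounted, so they now have to be mounted into the host file system.

We mount the three disks at /data on their respective hosts.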

    [centos@minion18 ~]$ df -h
    Filesystem                         Size  Used Avail Use% Mounted on
    /dev/xvda1                          50G   14G   37G  28% /
    devtmpfs                           7.8G     0  7.8G   0% /dev
    tmpfs                              7.8G     0  7.8G   0% /dev/shm
    tmpfs                              7.8G  2.6M  7.8G   1% /run
    tmpfs                              7.8G     0  7.8G   0% /sys/fs/cgroup
    /dev/mapper/docker--vg-docker--lv  200G  8.4G  192G   5% /var/lib/docker
    /dev/xvdf1                         493G   49M  493G   1% /data
    tmpfs                              1.6G     0  1.6G   0% /run/user/1000

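Create a PV for the Local Volume on each of the three hosts.

All of them use local-storage as the storageClassName, so that the ES Data nodes can claim the matching PVs when they are brought up later.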
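The article does not show a StorageClass object for `local-storage`. The Kubernetes documentation for local volumes suggests defining one with no provisioner and delayed binding, so that a claim is bound only after the Pod has been scheduled to a node. The sketch below prints such a manifest. Whether the original setup created this object is unknown, and delayed binding depends on volume scheduling being enabled in the cluster, so treat it as an optional assumption. The function name is made up.

```shell
#!/bin/sh
# Print a StorageClass manifest for statically provisioned local volumes.
# The class name matches the storageClassName used by the PVs in this article;
# everything else is an assumption based on the Kubernetes local volume docs.
local_storage_class() {
    cat <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
EOF
}

# Usage:
#   local_storage_class | kubectl create -f -
```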

Minion18: es-pv-local0.yaml

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: es-local-pv0
    spec:
      capacity:
        storage: 450Gi
      accessModes:
      - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      storageClassName: local-storage
      local:
        path: /data
      nodeAffinity:
        required:
          nodeSelectorTerms:
          - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
              - minion18

Minion19: es-pv-local1.yaml

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: es-local-pv1
    spec:
      capacity:
        storage: 450Gi
      accessModes:
      - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      storageClassName: local-storage
      local:
        path: /data
      nodeAffinity:
        required:
          nodeSelectorTerms:
          - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
              - minion19

Minion20: es-pv-local2.yaml

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: es-local-pv2
    spec:
      capacity:
        storage: 450Gi
      accessModes:
      - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      storageClassName: local-storage
      local:
        path: /data
      nodeAffinity:
        required:
          nodeSelectorTerms:
          - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
              - minion20
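The three PV manifests differ only in the PV name and the hostname in the node affinity rule, so they can be generated from one template. The following is a sketch under that assumption; the function names `es_local_pv` and `write_es_local_pvs` are hypothetical, and the capacity, path, and storage class are copied from the manifests above.

```shell
#!/bin/sh
# Print a local PersistentVolume manifest.
#   $1: numeric suffix for the PV name (es-local-pv<N>)
#   $2: value of the kubernetes.io/hostname label of the host that owns /data
es_local_pv() {
    index=$1
    host=$2
    cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-local-pv${index}
spec:
  capacity:
    storage: 450Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: local-storage
  local:
    path: /data
  nodeAffinity:
    required:
      nodeSelectorTerms:
      - matchExpressions:
        - key: kubernetes.io/hostname
          operator: In
          values:
          - ${host}
EOF
}

# Write es-pv-local0.yaml, es-pv-local1.yaml, ... into directory $1,
# one file per hostname given in the remaining arguments.
write_es_local_pvs() {
    dir=$1
    shift
    i=0
    for host in "$@"; do
        es_local_pv "$i" "$host" > "$dir/es-pv-local${i}.yaml" || return 1
        i=$(( i + 1 ))
    done
}

# Usage:
#   write_es_local_pvs . minion18 minion19 minion20
```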

On master1, create the PVs from the files above.

    [centos@master1 ~]$ kubectl create -f es-pv-local0.yaml
    [centos@master1 ~]$ kubectl create -f es-pv-local1.yaml
    [centos@master1 ~]$ kubectl create -f es-pv-local2.yaml
    [centos@master1 ~]$ kubectl get pv
    NAME           CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM     STORAGECLASS    REASON    AGE
    es-local-pv0   450Gi      RWO            Retain           Available             local-storage             1d
    es-local-pv1   450Gi      RWO            Retain           Available             local-storage             1d
    es-local-pv2   450Gi      RWO            Retain           Available             local-storage             1d

ES Installation

Preparing the ES YAML files

    [centos@master1 ~]$ cd kuberepo/
    [centos@master1 kuberepo]$ cd sys-kube/
    [centos@master1 sys-kube]$ cd kube-log
    [centos@master1 kube-log]$ git clone https://github.com/pires/kubernetes-elasticsearch-cluster.git

Preparing the ES Docker images

Open es-master.yaml and kibana.yaml. The images they reference are:

    image: quay.io/pires/docker-elasticsearch-kubernetes:6.2.4
    image: docker.elastic.co/kibana/kibana-oss:6.2.4

To shorten the wait at startup, pull both images first, tag and push them to the private registry, and change the image fields to point at the private registry.

    [centos@master1 kube-log]$ docker pull quay.io/pires/docker-elasticsearch-kubernetes:6.2.4
    [centos@master1 kube-log]$ docker tag quay.io/pires/docker-elasticsearch-kubernetes:6.2.4 hub.***.***/google_containers/docker-elasticsearch-kubernetes:6.2.4
    [centos@master1 kube-log]$ docker push hub.***.***/google_containers/docker-elasticsearch-kubernetes:6.2.4
    [centos@master1 kube-log]$ docker pull docker.elastic.co/kibana/kibana-oss:6.2.4
    [centos@master1 kube-log]$ docker tag docker.elastic.co/kibana/kibana-oss:6.2.4 hub.***.***/google_containers/kibana-oss:6.2.4
    [centos@master1 kube-log]$ docker push hub.***.***/google_containers/kibana-oss:6.2.4
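The pull, tag, and push sequence is the same for both images, so it can be expressed once. The sketch below only prints the commands so that they can be reviewed before being run. The function name is made up, and `registry.example.com/google_containers` is a placeholder, because the real registry host is redacted in this article.

```shell
#!/bin/sh
# Print the docker commands that mirror one image into a private registry.
#   $1: source image, e.g. quay.io/pires/docker-elasticsearch-kubernetes:6.2.4
#   $2: target prefix (registry host plus project); placeholder in this sketch
mirror_image_cmds() {
    src=$1
    prefix=$2
    name=${src##*/}    # drop everything up to the last "/", keeping name:tag
    echo "docker pull $src"
    echo "docker tag $src $prefix/$name"
    echo "docker push $prefix/$name"
}

# registry.example.com is hypothetical; substitute the real private registry.
mirror_image_cmds quay.io/pires/docker-elasticsearch-kubernetes:6.2.4 registry.example.com/google_containers
mirror_image_cmds docker.elastic.co/kibana/kibana-oss:6.2.4 registry.example.com/google_containers
```

Piping the printed lines into `sh` would execute them, which keeps the preview and the actual run identical.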

Adjusting the ES YAML files

- Adjust all YAML files so that every Pod, Service, Deployment, StatefulSet, Role, ServiceAccount, and so on belongs to the kube-system namespace.
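With this many files it is easy to miss one. A minimal check, assuming every manifest carries a `namespace:` line at two-space indentation under `metadata:` as the files in this article do, is to list the files that lack it. The function name is hypothetical.

```shell
#!/bin/sh
# Print the name of every file that has no "  namespace: <ns>" line.
#   $1: expected namespace; remaining arguments: manifest files to check
files_missing_namespace() {
    ns=$1
    shift
    for f in "$@"; do
        grep -q "^  namespace: $ns\$" "$f" || echo "$f"
    done
}

# Usage:
#   files_missing_namespace kube-system *.yaml stateful/*.yaml
```

An empty result means every file passed; any file name printed still needs the namespace field.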

es-master.yaml

- Point the elasticsearch image at the private registry.
- Set the JVM heap to 512MB.

The complete YAML file is as follows:

    apiVersion: apps/v1beta1
    kind: Deployment
    metadata:
      name: es-master
      namespace: kube-system
      labels:
        component: elasticsearch
        role: master
    spec:
      replicas: 3
      template:
        metadata:
          labels:
            component: elasticsearch
            role: master
        spec:
          initContainers:
          - name: init-sysctl
            image: busybox:1.27.2
            command:
            - sysctl
            - -w
            - vm.max_map_count=262144
            securityContext:
              privileged: true
          containers:
          - name: es-master
            image: hub.***.***/google_containers/docker-elasticsearch-kubernetes:6.2.4
            imagePullPolicy: Always
            env:
            - name: NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: CLUSTER_NAME
              value: myesdb
            - name: NUMBER_OF_MASTERS
              value: "2"
            - name: NODE_MASTER
              value: "true"
            - name: NODE_INGEST
              value: "false"
            - name: NODE_DATA
              value: "false"
            - name: HTTP_ENABLE
              value: "false"
            - name: ES_JAVA_OPTS
              value: -Xms512m -Xmx512m
            - name: PROCESSORS
              valueFrom:
                resourceFieldRef:
                  resource: limits.cpu
            resources:
              limits:
                cpu: 1
            ports:
            - containerPort: 9300
              name: transport
            livenessProbe:
              initialDelaySeconds: 30
              timeoutSeconds: 30
              tcpSocket:
                port: transport
            volumeMounts:
            - name: storage
              mountPath: /data
          imagePullSecrets:
          - name: kube-sec
          volumes:
          - emptyDir:
              medium: ""
            name: "storage"
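In the manifest above, `replicas` is 3 and `NUMBER_OF_MASTERS` is "2". That pairing matches the usual majority rule for master-eligible nodes in ES 6.x, which guards against split brain. The sketch below computes the value for any master count. It assumes the image feeds `NUMBER_OF_MASTERS` into the minimum master nodes setting, which this article does not confirm, and the function name is made up.

```shell
#!/bin/sh
# Majority quorum for a given number of master-eligible nodes:
# integer division by two, plus one.
minimum_masters() {
    echo $(( $1 / 2 + 1 ))
}

minimum_masters 3    # prints 2, the value used in es-master.yaml
```

If the number of master replicas changes, the environment variable should be recomputed along with it.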

es-client.yaml

- Point the elasticsearch image at the private registry.
- Set the JVM heap to 6GB.

The complete YAML file is as follows:

    apiVersion: apps/v1beta1
    kind: Deployment
    metadata:
      name: es-client
      namespace: kube-system
      labels:
        component: elasticsearch
        role: client
    spec:
      replicas: 2
      template:
        metadata:
          labels:
            component: elasticsearch
            role: client
        spec:
          initContainers:
          - name: init-sysctl
            image: busybox:1.27.2
            command:
            - sysctl
            - -w
            - vm.max_map_count=262144
            securityContext:
              privileged: true
          containers:
          - name: es-client
            #image: quay.io/pires/docker-elasticsearch-kubernetes:6.2.4
            image: hub.***.***/google_containers/docker-elasticsearch-kubernetes:6.2.4
            imagePullPolicy: Always
            env:
            - name: NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: CLUSTER_NAME
              value: myesdb
            - name: NODE_MASTER
              value: "false"
            - name: NODE_DATA
              value: "false"
            - name: HTTP_ENABLE
              value: "true"
            - name: ES_JAVA_OPTS
              value: -Xms6144m -Xmx6144m
            - name: NETWORK_HOST
              value: _site_,_lo_
            - name: PROCESSORS
              valueFrom:
                resourceFieldRef:
                  resource: limits.cpu
            resources:
              limits:
                cpu: 2
            ports:
            - containerPort: 9200
              name: http
            - containerPort: 9300
              name: transport
            livenessProbe:
              tcpSocket:
                port: transport
            readinessProbe:
              httpGet:
                path: /_cluster/health
                port: http
              initialDelaySeconds: 20
              timeoutSeconds: 5
            volumeMounts:
            - name: storage
              mountPath: /data
          volumes:
          - emptyDir:
              medium: ""
            name: storage
          imagePullSecrets:
          - name: kube-sec

stateful/es-data-stateful.yaml

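Production log data is large, and it must survive when some ES nodes go down and are brought back up. We therefore recommend stateful data nodes, that is, stateful/es-data-stateful.yaml.

For personal testing you can bring up the data nodes directly with es-data.yaml. Nodes started this way lose their data on restart.

- Point the elasticsearch image at the private registry.
- Set the JVM heap to 8GB.
- Add a node selector for the efk nodes.

The complete YAML file is as follows. Note the volumeClaimTemplates section, which uses the local-storage class prepared earlier: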

    apiVersion: apps/v1beta1
    kind: StatefulSet
    metadata:
      name: es-data
      namespace: kube-system
      labels:
        component: elasticsearch
        role: data
    spec:
      serviceName: elasticsearch-data
      replicas: 3
      template:
        metadata:
          labels:
            component: elasticsearch
            role: data
        spec:
          nodeSelector:
            efknode: efk
          initContainers:
          - name: init-sysctl
            image: busybox:1.27.2
            command:
            - sysctl
            - -w
            - vm.max_map_count=262144
            securityContext:
              privileged: true
          containers:
          - name: es-data
            #image: quay.io/pires/docker-elasticsearch-kubernetes:6.2.4
            image: hub.***.***/google_containers/docker-elasticsearch-kubernetes:6.2.4
            imagePullPolicy: Always
            env:
            - name: NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: CLUSTER_NAME
              value: myesdb
            - name: NODE_MASTER
              value: "false"
            - name: NODE_INGEST
              value: "false"
            - name: HTTP_ENABLE
              value: "false"
            - name: ES_JAVA_OPTS
              value: -Xms8192m -Xmx8192m
            - name: PROCESSORS
              valueFrom:
                resourceFieldRef:
                  resource: limits.cpu
            resources:
              limits:
                cpu: 1
            ports:
            - containerPort: 9300
              name: transport
            livenessProbe:
              tcpSocket:
                port: transport
              initialDelaySeconds: 20
              periodSeconds: 10
            volumeMounts:
            - name: storage
              mountPath: /data
      volumeClaimTemplates:
      - metadata:
          name: storage
        spec:
          storageClassName: local-storage
          accessModes: [ ReadWriteOnce ]
          resources:
            requests:
              storage: 450Gi

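Adjusting the Kibana YAML files

kibana.yaml

- Point the kibana image at the private registry.
- Comment out the SERVER_BASEPATH environment variable. (Our Kibana portal is not integrated with the Kubernetes Dashboard, so this base path is no longer needed.)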

The adjusted kibana.yaml is as follows:

    apiVersion: apps/v1beta1
    kind: Deployment
    metadata:
      name: kibana
      namespace: kube-system
      labels:
        component: kibana
    spec:
      replicas: 1
      selector:
        matchLabels:
          component: kibana
      template:
        metadata:
          labels:
            component: kibana
        spec:
          containers:
          - name: kibana
            image: hub.***.***/google_containers/kibana-oss:6.2.4
            #image: docker.elastic.co/kibana/kibana-oss:6.2.4
            imagePullPolicy: Always
            env:
            - name: CLUSTER_NAME
              value: myesdb
            #- name: SERVER_BASEPATH
            #  value: /api/v1/namespaces/default/services/kibana:http/proxy
            resources:
              limits:
                cpu: 1000m
              requests:
                cpu: 100m
            ports:
            - containerPort: 5601
              name: http
          imagePullSecrets:
          - name: kube-sec

kibana-svc.yaml

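- Change the kibana Service type from the default ClusterIP to NodePort so that it can be reached from outside the cluster.
- Note that targetPort in this file refers to a port by name: http is the name given to port 5601 in the Kibana Deployment.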

The adjusted kibana-svc.yaml is as follows:

    apiVersion: v1
    kind: Service
    metadata:
      name: kibana
      namespace: kube-system
      labels:
        component: kibana
    spec:
      type: NodePort
      selector:
        component: kibana
      ports:
      - name: http
        port: 80
        nodePort: 30072
        targetPort: http

Bringing up the cluster

Bringing up the ES cluster

Following the recommendations on https://github.com/pires/kubernetes-elasticsearch-cluster/tree/master/stateful, we bring up the components in this order:

    [centos@master1 kube-log]$ cd kubernetes-elasticsearch-cluster/
    [centos@master1 kubernetes-elasticsearch-cluster]$ kubectl create -f es-discovery-svc.yaml
    [centos@master1 kubernetes-elasticsearch-cluster]$ kubectl create -f es-svc.yaml
    [centos@master1 kubernetes-elasticsearch-cluster]$ kubectl create -f es-master.yaml

Wait until all master nodes are ready:

    [centos@master1 kubernetes-elasticsearch-cluster]$ kubectl get pods -n kube-system -o wide | grep es-master
    es-master-7b74f78667-2n2q5   1/1   Running   0   1m   10.244.12.44   minion18
    es-master-7b74f78667-gtvl5   1/1   Running   0   1m   10.244.10.74   minion21
    es-master-7b74f78667-hztq9   1/1   Running   0   1m   10.244.11.89   minion22
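Instead of re-running the command by hand, the wait can be scripted by counting the Pods whose READY column is complete and whose STATUS is Running. The helper below is a sketch: `count_ready` is a made-up name, and it parses the plain-text columns of `kubectl get pods` in the layout shown above, which is an assumption about the output format.

```shell
#!/bin/sh
# Read `kubectl get pods` output on stdin and print how many Pods whose
# name starts with the given prefix are fully ready and Running.
count_ready() {
    awk -v prefix="$1" '
        index($1, prefix) == 1 {
            split($2, r, "/")             # READY column, e.g. "1/1"
            if (r[1] == r[2] && $3 == "Running") n++
        }
        END { print n + 0 }               # print 0 when nothing matched
    '
}

# Usage: block until all three masters are ready.
#   until [ "$(kubectl get pods -n kube-system --no-headers | count_ready es-master)" -eq 3 ]; do
#       sleep 5
#   done
```

The same helper works for the client and data Pods by changing the prefix and the expected count.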

Bring up the client nodes:

    [centos@master1 kubernetes-elasticsearch-cluster]$ kubectl create -f es-client.yaml

Bring up the data nodes. There is no need to wait for the client nodes to come up first:

    [centos@master1 kubernetes-elasticsearch-cluster]$ kubectl create -f stateful/es-data-svc.yaml
    [centos@master1 kubernetes-elasticsearch-cluster]$ kubectl create -f stateful/es-data-stateful.yaml

Wait about two minutes, then check the data and client nodes:

    [centos@master1 kubernetes-elasticsearch-cluster]$ kubectl get pods -n kube-system -o wide | grep es
    es-client-56cdd8f49f-mjfb4              1/1   Running   0   1m    10.244.16.2    minion11
    es-client-56cdd8f49f-pl46b              1/1   Running   0   1m    10.244.15.13   minion13
    es-data-0                               1/1   Running   0   1m    10.244.12.45   minion18
    es-data-1                               1/1   Running   0   1m    10.244.14.46   minion20
    es-data-2                               1/1   Running   0   1m    10.244.13.34   minion19
    es-master-7b74f78667-2n2q5              1/1   Running   0   5m    10.244.12.44   minion18
    es-master-7b74f78667-gtvl5              1/1   Running   0   5m    10.244.10.74   minion21
    es-master-7b74f78667-hztq9              1/1   Running   0   5m    10.244.11.89   minion22
    kubernetes-dashboard-7d5dcdb6d9-wm885   1/1   Running   0   10d   10.244.2.13    master2
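Running Pods do not by themselves prove that the nodes have formed one cluster. The client readiness probe already queries `/_cluster/health`, and the same endpoint can be read by hand. The sketch below extracts a single field from the compact JSON response using only `sed`. The function name and the `ES_URL` variable are hypothetical, and how the client service is reached from the shell is left open because the article does not show it.

```shell
#!/bin/sh
# Read a compact (not pretty-printed) JSON object on stdin and print the
# value of one top-level field, e.g. "status" or "number_of_nodes".
health_field() {
    sed -n 's/.*"'"$1"'":"\{0,1\}\([^,"}]*\).*/\1/p'
}

# Usage (ES_URL is an assumption, e.g. the address of the ES client service):
#   curl -s "$ES_URL/_cluster/health" | health_field status
#   curl -s "$ES_URL/_cluster/health" | health_field number_of_nodes
```

With 3 master, 2 client, and 3 data Pods, a fully formed cluster would be expected to report 8 nodes.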

Check the PVs and PVCs:

    [centos@master1 kubernetes-elasticsearch-cluster]$ kubectl get pv
    NAME           CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS    CLAIM                           STORAGECLASS    REASON    AGE
    es-local-pv0   450Gi      RWO            Retain           Bound     kube-system/storage-es-data-0   local-storage             10m
    es-local-pv1   450Gi      RWO            Retain           Bound     kube-system/storage-es-data-2   local-storage             10m
    es-local-pv2   450Gi      RWO            Retain           Bound     kube-system/storage-es-data-1   local-storage             10m
    [centos@master1 kubernetes-elasticsearch-cluster]$ kubectl get pvc -n kube-system
    NAME                STATUS    VOLUME         CAPACITY   ACCESS MODES   STORAGECLASS    AGE
    storage-es-data-0   Bound     es-local-pv0   450Gi      RWO            local-storage   1m
    storage-es-data-1   Bound     es-local-pv2   450Gi      RWO            local-storage   1m
    storage-es-data-2   Bound     es-local-pv1   450Gi      RWO            local-storage   1m

Bringing up Kibana

    [centos@master1 kubernetes-elasticsearch-cluster]$ kubectl create -f kibana.yaml
    [centos@master1 kubernetes-elasticsearch-cluster]$ kubectl create -f kibana-svc.yaml

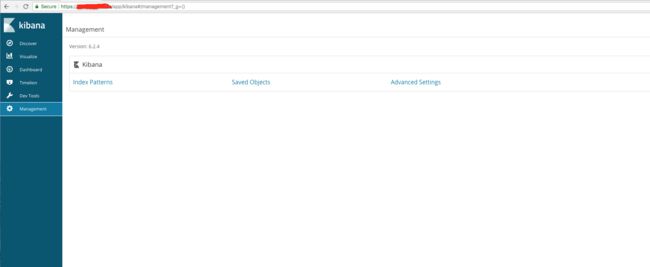

Checking the connection between Kibana and ES

Kibana 6.2.4 is somewhat friendlier than the earlier 5.x releases.