Introduction to MongoDB replica sets

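MongoDB did not originally have replica sets. Early versions used master-slave replication, with one master and one slave, much like MySQL replication. When the master went down, the slave could not be promoted to master automatically.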

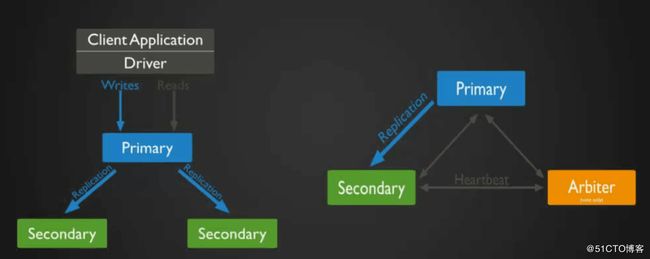

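Current versions no longer use master-slave mode and use replica sets instead. A replica set has one primary and multiple secondaries, and the secondaries are read-only. Each member can be given a priority (weight); when the primary goes down, the remaining member with the highest priority becomes the new primary.

A replica set can also include an arbiter, which only votes in primary elections and stores no data.

By default, all reads and writes go to the primary. To balance load, the read target has to be specified explicitly: the application writes to the primary and then reads from a secondary.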
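One way an application can direct reads to secondaries is to put a read preference in the connection string instead of hard-coding a secondary's address. The sketch below only builds such a URI. `buildReplicaSetUri` is a hypothetical helper, the hosts and the set name `linux` are the ones used in the setup later in this post, and `secondaryPreferred` is one of MongoDB's standard read preference modes. How the URI is consumed depends on the driver in use.

```javascript
// Hypothetical helper: builds a replica-set connection string.
// hosts is an array of "ip:port" strings; the other arguments are plain strings.
function buildReplicaSetUri(hosts, dbName, replicaSet, readPreference) {
  var query = "replicaSet=" + encodeURIComponent(replicaSet) +
              "&readPreference=" + encodeURIComponent(readPreference);
  return "mongodb://" + hosts.join(",") + "/" + dbName + "?" + query;
}

var readUri = buildReplicaSetUri(
  ["192.168.1.234:27017", "192.168.1.115:27017", "192.168.1.200:27017"],
  "mydb",
  "linux",
  "secondaryPreferred" // read from a secondary when one is available
);
```

Writes still go to the primary with this URI; only reads are routed by the read preference.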

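When the primary goes down, the secondaries in the set elect a new primary according to priority. If the failed primary later recovers, the set holds another election, and the configured priorities again decide which member is primary and which are secondaries. A member needs votes from a majority of the set to become primary, so this election scheme makes split-brain unlikely.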
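The majority requirement behind elections can be made concrete with a little arithmetic. The sketch below assumes every member has exactly one vote; `majorityNeeded` and `faultTolerance` are illustrative names, not MongoDB functions.

```javascript
// Votes needed to elect a primary: a strict majority of the voting members.
function majorityNeeded(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// Number of voting members that can be unreachable while an election can still succeed.
function faultTolerance(votingMembers) {
  return votingMembers - majorityNeeded(votingMembers);
}

// Build a small table for common set sizes.
var electionTable = [2, 3, 4, 5].map(function (n) {
  return { members: n, majority: majorityNeeded(n), tolerated: faultTolerance(n) };
});
```

With three members the set tolerates one failure. With two members it tolerates none, which is why adding an arbiter as a third voter is useful for a two-node deployment.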

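Setting up a MongoDB replica set

Configure the yum repository file on all three servers (if this repository is no longer available by the time you read this, look up the current configuration on the official site):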

[root@nfs1 ~]# cat /etc/yum.repos.d/mongodb.repo

[mongodb-org-4.0]

name=MongoDB Repository

baseurl=https://repo.mongodb.org/yum/redhat/$releasever/mongodb-org/4.0/x86_64/

gpgcheck=1

enabled=1

gpgkey=https://www.mongodb.org/static/pgp/server-4.0.asc

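Install the MongoDB service on all three servers:

192.168.1.234 (primary), 192.168.1.115 (secondary), 192.168.1.200 (secondary)

On all three servers, edit the configuration file and change or add the following:

Uncomment the replication line by removing its leading #, then define the oplog size

## oplog size; this setting is indented by two spaces, and the value must be preceded by a space

  oplogSizeMB: 20

## replica set name; this setting is indented by two spaces, and the name must be preceded by a space

  replSetName: linux

Edit the configuration file. The bindIp setting differs on each server: set it to that server's own network interface IP.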

[root@nfs1 ~]# vim /etc/mongod.conf

net:

  port: 27017

  bindIp: 127.0.0.1,192.168.1.234 # Enter 0.0.0.0,:: to bind to all IPv4 and IPv6 addresses or, alternatively, use the net.bindIpAll setting.

#security:

#operationProfiling:

replication:

  oplogSizeMB: 20

  replSetName: linux

#sharding:

Restart the MongoDB service, then check the running process and the listening ports:

[root@nfs1 ~]# ps -aux |grep mongod

mongod 11794 0.3 8.4 1072464 84140 ? Sl 14:52 0:32 /usr/bin/mongod -f /etc/mongod.conf

root 11919 0.0 0.0 112652 960 pts/1 S+ 17:37 0:00 grep --color=auto mongod

[root@nfs1 ~]# netstat -ntlp |grep mongod

tcp 0 0 192.168.1.115:27017 0.0.0.0:* LISTEN 11794/mongod

tcp 0 0 127.0.0.1:27017 0.0.0.0:* LISTEN 11794/mongod

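Before configuring the replica set, make sure no iptables rules are active on any of the servers.

Then log in to the server that should become the primary and define the replica set members. The member with _id 0 is intended to be the primary; the members with _id 1 and _id 2 are the secondaries.

After running rs.initiate(config) to initialize the replica set, the response contains "ok" : 1, which means the replica set was created successfully.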

[root@nfs1 ~]# mongo

MongoDB shell version v4.0.4

connecting to: mongodb://127.0.0.1:27017

Implicit session: session { "id" : UUID("a6f3940f-b5e7-4444-8b79-9e12bcdbd21a") }

MongoDB server version: 4.0.4

> config={_id:"linux",members:[{_id:0,host:"192.168.1.234:27017"},{_id:1,host:"192.168.1.115:27017"},{_id:2,host:"192.168.1.200:27017"}]}

{

"_id" : "linux",

"members" : [

{

"_id" : 0,

"host" : "192.168.1.234:27017"

},

{

"_id" : 1,

"host" : "192.168.1.115:27017"

},

{

"_id" : 2,

"host" : "192.168.1.200:27017"

}

]

}

> rs.initiate(config)

{

"ok" : 1,

"operationTime" : Timestamp(1542718739, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1542718739, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}
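The config document typed by hand above can also be generated from a list of hosts, which avoids typos in the _id values. This is only a sketch: `buildReplSetConfig` is a hypothetical helper, and it assumes member _id values should simply follow the order of the host list.

```javascript
// Hypothetical helper: builds the document that is passed to rs.initiate().
// Member _id values follow the order of the hosts array, starting at 0.
function buildReplSetConfig(setName, hosts) {
  return {
    _id: setName,
    members: hosts.map(function (host, index) {
      return { _id: index, host: host };
    })
  };
}

var generatedConfig = buildReplSetConfig("linux", [
  "192.168.1.234:27017",
  "192.168.1.115:27017",
  "192.168.1.200:27017"
]);
```

In the mongo shell, the result would be used as `rs.initiate(generatedConfig)`. The set name must match the replSetName value in mongod.conf.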

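I ran into a problem here: I had configured two SECONDARY servers, but rs.status() showed only two machines. The root cause was a network issue. The iptables rules on the 192.168.1.115 machine had not been fully cleared, which broke communication between the mongod instances. Flushing the iptables rules fixed it.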

Check the status of the replica set:

rs.status()

The status output shows that 192.168.1.234 has the PRIMARY role and the other machines are SECONDARY:

linux:SECONDARY> rs.status()

{

"set" : "linux",

"date" : ISODate("2018-11-20T13:00:08.697Z"),

"myState" : 1,

"term" : NumberLong(1),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1542718802, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1542718802, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1542718802, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1542718802, 1),

"t" : NumberLong(1)

}

},

"lastStableCheckpointTimestamp" : Timestamp(1542718752, 1),

"members" : [

{

"_id" : 0,

"name" : "192.168.1.234:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 1999,

"optime" : {

"ts" : Timestamp(1542718802, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-11-20T13:00:02Z"),

"syncingTo" : "",

"syncSourceHost" : "",

"syncSourceId" : -1,

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1542718751, 1),

"electionDate" : ISODate("2018-11-20T12:59:11Z"),

"configVersion" : 1,

"self" : true,

"lastHeartbeatMessage" : ""

},

{

"_id" : 1,

"name" : "192.168.1.115:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 68,

"optime" : {

"ts" : Timestamp(1542718802, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1542718802, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-11-20T13:00:02Z"),

"optimeDurableDate" : ISODate("2018-11-20T13:00:02Z"),

"lastHeartbeat" : ISODate("2018-11-20T13:00:07.254Z"),

"lastHeartbeatRecv" : ISODate("2018-11-20T13:00:07.078Z"),

"pingMs" : NumberLong(0),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.1.234:27017",

"syncSourceHost" : "192.168.1.234:27017",

"syncSourceId" : 0,

"infoMessage" : "",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.1.200:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 68,

"optime" : {

"ts" : Timestamp(1542718802, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1542718802, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-11-20T13:00:02Z"),

"optimeDurableDate" : ISODate("2018-11-20T13:00:02Z"),

"lastHeartbeat" : ISODate("2018-11-20T13:00:07.268Z"),

"lastHeartbeatRecv" : ISODate("2018-11-20T13:00:07.680Z"),

"pingMs" : NumberLong(1),

"lastHeartbeatMessage" : "",

"syncingTo" : "192.168.1.115:27017",

"syncSourceHost" : "192.168.1.115:27017",

"syncSourceId" : 1,

"infoMessage" : "",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1542718802, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1542718802, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}
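The full rs.status() document is long, and usually only each member's name, state and health matter. The sketch below reduces a status document to one line per member. `summarizeMembers` is a hypothetical helper, and the sample object is a trimmed copy of the output above so the snippet runs without a server.

```javascript
// Hypothetical helper: reduces an rs.status() document to one line per member.
function summarizeMembers(status) {
  return status.members.map(function (m) {
    return m.name + " " + m.stateStr + " health=" + m.health;
  });
}

// Trimmed sample with the same shape as the rs.status() output shown above.
var sampleStatus = {
  set: "linux",
  members: [
    { _id: 0, name: "192.168.1.234:27017", health: 1, stateStr: "PRIMARY" },
    { _id: 1, name: "192.168.1.115:27017", health: 1, stateStr: "SECONDARY" },
    { _id: 2, name: "192.168.1.200:27017", health: 1, stateStr: "SECONDARY" }
  ]
};

var statusSummary = summarizeMembers(sampleStatus);
```

In the mongo shell the same helper could be applied to live data with `summarizeMembers(rs.status()).forEach(function (line) { print(line); })`.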

Removing a member from a MongoDB replica set

rs.remove("ip:port")

> rs.remove("192.168.1.115:27017")

Testing the MongoDB replica set

On the primary, create a database and a test collection, then list the databases and collections:

linux:PRIMARY> use mydb

switched to db mydb

linux:PRIMARY> db.acc.insert({AccountID:1,UserName:"linux",password:"pwd@123"})

WriteResult({ "nInserted" : 1 })

linux:PRIMARY> show dbs;

admin 0.000GB

config 0.000GB

local 0.000GB

mydb 0.000GB

linux:PRIMARY> show tables

acc

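Once the replica set is enabled, a secondary does not allow queries by default. Reads have to be enabled on the secondary first, as shown here.

At first, running show dbs returns an error: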

linux:SECONDARY> show dbs

2018-11-20T21:37:43.395+0800 E QUERY [js] Error: listDatabases failed:{

"operationTime" : Timestamp(1542721053, 1),

"ok" : 0,

"errmsg" : "not master and slaveOk=false",

To clear this error, run rs.slaveOk() on the secondary. After that, the databases and collections can be queried normally:

linux:SECONDARY> rs.slaveOk()

linux:SECONDARY> show dbs

admin 0.000GB

config 0.000GB

local 0.000GB

mydb 0.000GB

linux:SECONDARY> use mydb

switched to db mydb

linux:SECONDARY> show tables

acc

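Setting member priorities in MongoDB

By default all three machines have a priority of 1. If any member is given a higher priority than the others, that member will take over the primary role. The three machines are therefore configured as follows:

192.168.1.234: _id 0, priority 3

192.168.1.115: _id 1, priority 2

192.168.1.200: _id 2, priority 1

This is done on the primary only. Run the following statements on the primary: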

linux:PRIMARY> cfg = rs.conf()

linux:PRIMARY> cfg.members[0].priority = 3

3

linux:PRIMARY> cfg.members[1].priority = 2

2

linux:PRIMARY> cfg.members[2].priority = 1

1

linux:PRIMARY> rs.reconfig(cfg)

{

"ok" : 1,

"operationTime" : Timestamp(1542722379, 1),

"$clusterTime" : {

"clusterTime" : Timestamp(1542722379, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}
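Assigning priorities by array index, as in the session above, depends on the order of the members array. A slightly safer variant is to assign them by host. The sketch below shows the idea on a plain object. `applyPriorities` is a hypothetical helper, and the sample config is a trimmed copy of what rs.conf() returns.

```javascript
// Hypothetical helper: sets members[].priority on a config document by host.
// Hosts that are missing from the map keep their current priority.
function applyPriorities(cfg, priorityByHost) {
  cfg.members.forEach(function (member) {
    if (Object.prototype.hasOwnProperty.call(priorityByHost, member.host)) {
      member.priority = priorityByHost[member.host];
    }
  });
  return cfg;
}

// Trimmed sample with the same shape as an rs.conf() document.
var sampleCfg = {
  _id: "linux",
  members: [
    { _id: 0, host: "192.168.1.234:27017", priority: 1 },
    { _id: 1, host: "192.168.1.115:27017", priority: 1 },
    { _id: 2, host: "192.168.1.200:27017", priority: 1 }
  ]
};

applyPriorities(sampleCfg, {
  "192.168.1.234:27017": 3,
  "192.168.1.115:27017": 2
});
```

On the primary, the equivalent live call would be `rs.reconfig(applyPriorities(rs.conf(), { ... }))`.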

Check the configuration again. Each member's "priority" field shows its priority:

linux:PRIMARY> rs.config()

{

"_id" : "linux",

"version" : 6,

"protocolVersion" : NumberLong(1),

"writeConcernMajorityJournalDefault" : true,

"members" : [

{

"_id" : 0,

"host" : "192.168.1.234:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 3,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 1,

"host" : "192.168.1.115:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 2,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 2,

"host" : "192.168.1.200:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

"settings" : {

"chainingAllowed" : true,

"heartbeatIntervalMillis" : 2000,

"heartbeatTimeoutSecs" : 10,

"electionTimeoutMillis" : 10000,

"catchUpTimeoutMillis" : -1,

"catchUpTakeoverDelayMillis" : 30000,

"getLastErrorModes" : {

},

"getLastErrorDefaults" : {

"w" : 1,

"wtimeout" : 0

},

"replicaSetId" : ObjectId("5bf4051352f0b9218cdf373c")

}

}

With this configuration, if the primary goes down, the second node is the candidate to become the new primary.
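The expected failover target can be worked out from the priorities before running the test. The sketch below is a deliberately simplified model, not MongoDB's election algorithm: it assumes each member has one vote, requires a majority of members to be reachable, and then picks the reachable member with the highest priority. Real elections also take into account how up to date each member's oplog is. `expectedPrimary` is a hypothetical name.

```javascript
// Simplified model: returns the host expected to become primary, or null
// when no majority is reachable or no reachable member is electable.
function expectedPrimary(members, downHosts) {
  var reachable = members.filter(function (m) {
    return downHosts.indexOf(m.host) === -1;
  });
  // A primary can only be elected while a strict majority is reachable.
  if (reachable.length * 2 <= members.length) {
    return null;
  }
  // Arbiters and priority 0 members can vote but cannot become primary.
  var candidates = reachable.filter(function (m) {
    return m.priority > 0 && !m.arbiterOnly;
  });
  if (candidates.length === 0) {
    return null;
  }
  candidates.sort(function (a, b) { return b.priority - a.priority; });
  return candidates[0].host;
}

var prioritizedMembers = [
  { host: "192.168.1.234:27017", priority: 3, arbiterOnly: false },
  { host: "192.168.1.115:27017", priority: 2, arbiterOnly: false },
  { host: "192.168.1.200:27017", priority: 1, arbiterOnly: false }
];

var afterPrimaryLoss = expectedPrimary(prioritizedMembers, ["192.168.1.234:27017"]);
```

Under these assumptions, losing 192.168.1.234 leaves 192.168.1.115 as the expected primary, and losing two of the three members leaves no primary at all.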

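Test:

On the primary, add an iptables rule that drops mongod traffic, then watch how the replica set fails over and whether the second node becomes primary: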

[root@nfs1 /]# iptables -I INPUT -p tcp --dport 27017 -j DROP