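I. ELK Overview

ELK is an acronym formed from the initials of three Elastic products: Elasticsearch, Logstash, and Kibana. The stack is mainly used for log collection, analysis, and reporting.

The ELK Stack consists of Elasticsearch, Logstash, and Kibana. From version 5.0 onward it is called the Elastic Stack, which is the ELK Stack plus Beats.

Elasticsearch is a search engine used to store, search, and analyze logs. It is distributed: it scales horizontally, nodes discover each other automatically, and indices are sharded automatically.

Logstash collects logs, parses them into JSON, and hands them to Elasticsearch.

Kibana is the data visualization component; it presents the processed results in a web interface.

Beats is a lightweight log shipper; it is in fact a family with five members. (Early versions of Logstash were heavy on system resources, whereas the overhead of Beats is negligible.)

X-Pack is a paid extension pack for the Elastic Stack that bundles security, alerting, monitoring, reporting, and graph features.

Official site: https://www.elastic.co/cn/

Chinese documentation: https://www.elastic.co/guide/cn/elasticsearch/guide/current/index.html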

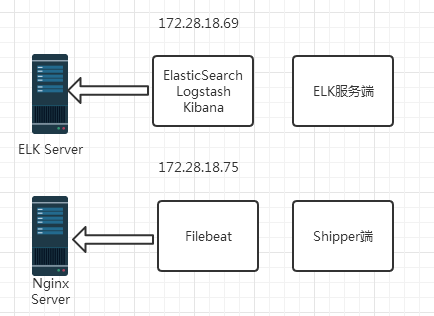

II. Single-Node Architecture

III. Installing the ELK Server Components

1. Download elasticsearch-6.2.4.rpm, logstash-6.2.4.rpm, and kibana-6.2.4-x86_64.rpm

    [root@server-1 src]# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.2.4.rpm
    [root@server-1 src]# wget https://artifacts.elastic.co/downloads/logstash/logstash-6.2.4.rpm
    [root@server-1 src]# wget https://artifacts.elastic.co/downloads/kibana/kibana-6.2.4-x86_64.rpm

2. Install elasticsearch-6.2.4.rpm with rpm

    [root@server-1 src]# rpm -ivh elasticsearch-6.2.4.rpm
    warning: elasticsearch-6.2.4.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY
    Preparing...                          ################################# [100%]
    Creating elasticsearch group... OK
    Creating elasticsearch user... OK
    Updating / installing...
       1:elasticsearch-0:6.2.4-1          ################################# [100%]
    ### NOT starting on installation, please execute the following statements to configure elasticsearch service to start automatically using systemd
     sudo systemctl daemon-reload
     sudo systemctl enable elasticsearch.service
    ### You can start elasticsearch service by executing
     sudo systemctl start elasticsearch.service

3. Install logstash-6.2.4.rpm

    [root@server-1 src]# rpm -ivh logstash-6.2.4.rpm
    warning: logstash-6.2.4.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:logstash-1:6.2.4-1               ################################# [100%]
    which: no java in (/sbin:/bin:/usr/sbin:/usr/bin:/usr/X11R6/bin)
    could not find java; set JAVA_HOME or ensure java is in PATH
    chmod: cannot access "/etc/default/logstash": No such file or directory
    warning: %post(logstash-1:6.2.4-1.noarch) scriptlet failed, exit status 1

The post-install script fails because Logstash needs a Java runtime. Install Java:

    [root@server-1 src]# yum install jdk-8u172-linux-x64.rpm
    Loaded plugins: fastestmirror
    Examining jdk-8u172-linux-x64.rpm: 2000:jdk1.8-1.8.0_172-fcs.x86_64
    Marking jdk-8u172-linux-x64.rpm to be installed
    Resolving Dependencies
    --> Running transaction check
    ---> Package jdk1.8.x86_64.2000.1.8.0_172-fcs will be installed
    --> Finished Dependency Resolution
    Dependencies Resolved
    ================================================================================
     Package      Arch       Version                Repository               Size
    ================================================================================
    Installing:
     jdk1.8       x86_64     2000:1.8.0_172-fcs     /jdk-8u172-linux-x64     279 M
    Transaction Summary
    ================================================================================
    Install  1 Package
    Total size: 279 M
    Installed size: 279 M
    Is this ok [y/d/N]: y
    Downloading packages:
    Running transaction check
    Running transaction test
    Transaction test succeeded
    Running transaction
    Warning: RPMDB altered outside of yum.
    ** Found 1 pre-existing rpmdb problem(s), 'yum check' output follows:
    smbios-utils-bin-2.3.3-8.el7.x86_64 has missing requires of libsmbios = ('0', '2.3.3', '8.el7')
      Installing : 2000:jdk1.8-1.8.0_172-fcs.x86_64                             1/1
    Unpacking JAR files...
        tools.jar...
        plugin.jar...
        javaws.jar...
        deploy.jar...
        rt.jar...
        jsse.jar...
        charsets.jar...
        localedata.jar...
      Verifying  : 2000:jdk1.8-1.8.0_172-fcs.x86_64                             1/1
    Installed:
      jdk1.8.x86_64 2000:1.8.0_172-fcs
    Complete!

    [root@server-1 src]# java -version
    java version "1.8.0_172"
    Java(TM) SE Runtime Environment (build 1.8.0_172-b11)
    Java HotSpot(TM) 64-Bit Server VM (build 25.172-b11, mixed mode)
    You have new mail in /var/spool/mail/root
    [root@server-1 src]#
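The error message from the Logstash package names two places where Java is looked for: `JAVA_HOME` and `PATH`. The following is a minimal Python sketch of an equivalent check that could be run before installing. The function name `locate_java` and the lookup order are assumptions made for illustration; this is not the script shipped in the RPM.

```python
import os
import shutil


def locate_java(env):
    """Return the path of an executable java binary, or None.

    Checks the two places named in the installer's error message:
    $JAVA_HOME/bin/java first, then every directory listed in PATH.
    `env` is a mapping such as os.environ.
    """
    java_home = env.get("JAVA_HOME")
    if java_home:
        candidate = os.path.join(java_home, "bin", "java")
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            return candidate
    # shutil.which returns None when no executable named "java" is found.
    return shutil.which("java", path=env.get("PATH", ""))
```

Calling `locate_java(os.environ)` on the server returns a path once the JDK is installed and `None` otherwise.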

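Run the Logstash installation again. rpm now reports the package as already installed: the first attempt unpacked the files, and only the post-install script failed.

    [root@server-1 src]# rpm -ivh logstash-6.2.4.rpm
    warning: logstash-6.2.4.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY
    Preparing...                          ################################# [100%]
        package logstash-1:6.2.4-1.noarch is already installed
    [root@server-1 src]#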

4. Install kibana-6.2.4-x86_64.rpm

    [root@server-1 src]# rpm -ivh kibana-6.2.4-x86_64.rpm
    warning: kibana-6.2.4-x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID d88e42b4: NOKEY
    Preparing...                          ################################# [100%]
    Updating / installing...
       1:kibana-6.2.4-1                   ################################# [100%]
    [root@server-1 src]#

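IV. Configuration and Starting the Services

1. Configure Elasticsearch (the RPM places the settings file at /etc/elasticsearch/elasticsearch.yml)

    cluster.name: test-cluster                          # cluster name
    node.name: node-1                                   # node name
    path.data: /var/lib/elasticsearch                   # data directory
    path.logs: /var/log/elasticsearch                   # log directory
    network.host: 172.28.18.69                          # listen address
    http.port: 9200                                     # HTTP port
    discovery.zen.ping.unicast.hosts: ["172.28.18.69"]  # addresses of the cluster hosts; on a single node, just the local IP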

2. Start the service and check the ports

    [root@server-1 old]# systemctl start elasticsearch
    [root@server-1 old]# netstat -tunlp|grep java
    tcp6       0      0 172.28.18.69:9200       :::*                    LISTEN      5176/java
    tcp6       0      0 172.28.18.69:9300       :::*                    LISTEN      5176/java

3. Query the HTTP port with curl

    [root@server-1 old]# curl 172.28.18.69:9200
    {
      "name" : "node-1",
      "cluster_name" : "test-cluster",
      "cluster_uuid" : "2oBg0RqYR2ewNeRfAN88zg",
      "version" : {
        "number" : "6.2.4",
        "build_hash" : "ccec39f",
        "build_date" : "2018-04-12T20:37:28.497551Z",
        "build_snapshot" : false,
        "lucene_version" : "7.2.1",
        "minimum_wire_compatibility_version" : "5.6.0",
        "minimum_index_compatibility_version" : "5.0.0"
      },
      "tagline" : "You Know, for Search"
    }
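The root endpoint answers with JSON, so the same check can be scripted. The sketch below fetches the document and keeps the three values that confirm the settings from elasticsearch.yml (node name, cluster name, version). The function names `fetch_node_info` and `summarize_node` are made up for this example.

```python
import json
import urllib.request


def fetch_node_info(base_url, timeout=5):
    """GET the Elasticsearch root endpoint and decode the JSON body."""
    with urllib.request.urlopen(base_url, timeout=timeout) as resp:
        return json.load(resp)


def summarize_node(info):
    """Keep only the fields that confirm the node's configuration."""
    return {
        "node": info["name"],
        "cluster": info["cluster_name"],
        "version": info["version"]["number"],
    }
```

Against the server in this walkthrough, the call would be `summarize_node(fetch_node_info("http://172.28.18.69:9200"))`.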

4. Configure Logstash

    [root@server-1 old]# vim /etc/logstash/logstash.yml
    path.data: /var/lib/logstash     # data directory
    http.host: "172.28.18.69"        # listen address
    http.port: 9600                  # listen port
    path.logs: /var/log/logstash     # log directory

5. Give the logstash user write access to its directories

    [root@server-1 old]# chown -R logstash /var/log/logstash/ /var/lib/logstash/
    [root@server-1 old]#

6. Create a pipeline configuration file that collects system logs

    [root@server-1 old]# vim /etc/logstash/conf.d/syslog.conf
    input {
      syslog {
        type => "system-syslog"
        port => 10000
      }
    }
    # output to Elasticsearch
    output {
      elasticsearch {
        hosts => ["172.28.18.69:9200"]         # Elasticsearch address
        index => "system-syslog-%{+YYYY.MM}"   # index to write to
      }
    }
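The `%{+YYYY.MM}` suffix in the `index` setting is filled in from each event's `@timestamp`, rendered in UTC, so this pipeline writes to one index per month. The Python sketch below only approximates that naming rule to make it concrete. `monthly_index` is a hypothetical helper, not part of Logstash.

```python
from datetime import datetime, timezone


def monthly_index(prefix, epoch_millis):
    """Build the index name for an event timestamp given in epoch milliseconds.

    Approximates the "%{+YYYY.MM}" suffix, which is rendered in UTC.
    """
    ts = datetime.fromtimestamp(epoch_millis / 1000, tz=timezone.utc)
    return f"{prefix}-{ts:%Y.%m}"
```

A timestamp of 1562809441246 ms (11 July 2019, 01:44 UTC) maps to `system-syslog-2019.07`, and the first event stamped in August starts a new index.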

7. Test the pipeline configuration file

    [root@server-1 old]# ln -s /usr/share/logstash/bin/logstash /usr/local/bin/
    [root@server-1 old]# logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/syslog.conf --config.test_and_exit
    Sending Logstash's logs to /var/log/logstash which is now configured via log4j2.properties
    Configuration OK

8. Start the Logstash service and check the ports

    [root@server-1 old]# systemctl start logstash
    [root@server-1 old]# netstat -tunlp|grep java
    tcp6       0      0 :::10000                :::*                    LISTEN      6046/java
    tcp6       0      0 172.28.18.69:9200       :::*                    LISTEN      5176/java
    tcp6       0      0 172.28.18.69:9300       :::*                    LISTEN      5176/java
    tcp6       0      0 172.28.18.69:9600       :::*                    LISTEN      6046/java
    udp        0      0 0.0.0.0:10000           0.0.0.0:*                           6046/java

Port 9600 is the port Logstash itself listens on (http.port in logstash.yml), and port 10000 is the syslog input port, open on both TCP and UDP.
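One way to exercise the syslog input on port 10000 is to send it a hand-built test message. The sketch below formats an RFC 3164 style line, in which the priority value is `facility * 8 + severity`, and sends it as one UDP datagram. The helper names `build_syslog_line` and `send_udp`, and the sample host and tag values, are assumptions for illustration.

```python
import socket
from datetime import datetime


def build_syslog_line(facility, severity, host, tag, message, when=None):
    """Format an RFC 3164 style line: <PRI>Mmm dd hh:mm:ss host tag: message."""
    when = when or datetime.now()
    pri = facility * 8 + severity  # e.g. user (1) * 8 + info (6) = 14
    # RFC 3164 pads single-digit days with a space, not a zero.
    stamp = f"{when:%b} {when.day:2d} {when:%H:%M:%S}"
    return f"<{pri}>{stamp} {host} {tag}: {message}"


def send_udp(line, server, port=10000):
    """Send one syslog line as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(line.encode("utf-8"), (server, port))
```

For example, `send_udp(build_syslog_line(1, 6, "server-1", "test", "hello ELK"), "172.28.18.69")` sends a user-level informational message to the input configured earlier.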

9. List the indices Elasticsearch has created for the collected logs

    [root@server-1 old]# curl http://172.28.18.69:9200/_cat/indices
    yellow open system-syslog-2019.07 REp7fM_gSaquo9PX2_sREQ 5 1 10 0 58.9kb 58.9kb
    [root@server-1 old]#
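Each row of the `_cat/indices` output has ten whitespace-separated columns: health, status, index name, UUID, primary shard count, replica count, document count, deleted documents, total store size, and primary store size. The `yellow` health here is expected on a single node, because the primaries are allocated but the one replica has no second node to live on. A small sketch of a parser for this table follows; `parse_cat_indices` is a hypothetical helper name.

```python
CAT_INDICES_COLUMNS = (
    "health", "status", "index", "uuid", "pri", "rep",
    "docs.count", "docs.deleted", "store.size", "pri.store.size",
)


def parse_cat_indices(text):
    """Turn the plain-text table from /_cat/indices into a list of dicts.

    Rows that do not have exactly ten columns are skipped.
    """
    rows = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == len(CAT_INDICES_COLUMNS):
            rows.append(dict(zip(CAT_INDICES_COLUMNS, parts)))
    return rows
```

All values are kept as strings; convert `docs.count` with `int()` if you need to compare counts.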

10. Show the details of a specific index

    [root@server-1 old]# curl http://172.28.18.69:9200/system-syslog-2019.07?pretty
    {
      "system-syslog-2019.07" : {
        "aliases" : { },
        "mappings" : {
          "doc" : {
            "properties" : {
              "@timestamp" : { "type" : "date" },
              "@version" : { "type" : "text", "fields" : { "keyword" : { "type" : "keyword", "ignore_above" : 256 } } },
              "facility" : { "type" : "long" },
              "facility_label" : { "type" : "text", "fields" : { "keyword" : { "type" : "keyword", "ignore_above" : 256 } } },
              "host" : { "type" : "text", "fields" : { "keyword" : { "type" : "keyword", "ignore_above" : 256 } } },
              "message" : { "type" : "text", "fields" : { "keyword" : { "type" : "keyword", "ignore_above" : 256 } } },
              "priority" : { "type" : "long" },
              "severity" : { "type" : "long" },
              "severity_label" : { "type" : "text", "fields" : { "keyword" : { "type" : "keyword", "ignore_above" : 256 } } },
              "tags" : { "type" : "text", "fields" : { "keyword" : { "type" : "keyword", "ignore_above" : 256 } } },
              "type" : { "type" : "text", "fields" : { "keyword" : { "type" : "keyword", "ignore_above" : 256 } } }
            }
          }
        },
        "settings" : {
          "index" : {
            "creation_date" : "1562809441246",
            "number_of_shards" : "5",
            "number_of_replicas" : "1",
            "uuid" : "REp7fM_gSaquo9PX2_sREQ",
            "version" : { "created" : "6020499" },
            "provided_name" : "system-syslog-2019.07"
          }
        }
      }
    }
    [root@server-1 old]#

This shows that Logstash and Elasticsearch are communicating correctly.
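The mapping in that response records how Elasticsearch typed each field from the syslog events: the numeric syslog values are `long`, and the string fields are `text` with a `keyword` sub-field. A short sketch that reduces the response to a field-to-type table, assuming the 6.x layout shown above where properties sit under the `doc` mapping type; `field_types` is a hypothetical helper name.

```python
def field_types(index_info, index, doc_type="doc"):
    """Return {field name: Elasticsearch type} from a GET /<index> response.

    `index_info` is the decoded JSON body; `doc_type` is the mapping type
    name, which is "doc" in the response shown above.
    """
    props = index_info[index]["mappings"][doc_type]["properties"]
    return {name: spec["type"] for name, spec in sorted(props.items())}
```

Fields that appear here become selectable in Kibana once an index pattern covers the index.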

11. Configure Kibana

    [root@server-1 old]# vim /etc/kibana/kibana.yml
    server.port: 5601                                # listen port
    server.host: 172.28.18.69                        # listen address
    elasticsearch.url: "http://172.28.18.69:9200"    # Elasticsearch address
    logging.dest: /var/log/kibana/kibana.log         # log file

12. Create the log directory and give the kibana user write access

    [root@server-1 old]# mkdir /var/log/kibana/
    [root@server-1 old]# chown -R kibana /var/log/kibana/

13. Start the Kibana service and check the port

    [root@server-1 old]# systemctl start kibana
    [root@server-1 old]# netstat -tunlp|grep 5601
    tcp        0      0 172.28.18.69:5601       0.0.0.0:*               LISTEN      7511/node

Kibana is listening.
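The `netstat` checks used throughout this section can also be done from another machine by attempting a TCP connection. A minimal sketch, with `port_open` as an assumed helper name:

```python
import socket


def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

For this setup the TCP ports to probe on 172.28.18.69 would be 9200 and 9300 (Elasticsearch), 9600 and 10000 (Logstash), and 5601 (Kibana). A successful connection only shows that something is listening, not that the service behind it is healthy.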

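14. Localize Kibana into Chinese

Download the localization package:

    git clone https://github.com/anbai-inc/Kibana_Hanization.git

Apply it to the Kibana installation:

    [root@server-1 src]# cd Kibana_Hanization/old/
    [root@server-1 old]# python main.py /usr/share/kibana/

The process is slow; wait for the completion message, which reads "Congratulations, Kibana localization complete!" in Chinese.

    [root@server-1 old]# python main.py /usr/share/kibana/
    恭喜,Kibana汉化完成!
    [root@server-1 old]#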

15. Restart the Kibana service

    [root@server-1 old]# systemctl restart kibana

16. Open http://172.28.18.69:5601 in a browser

The interface now appears in Chinese, so the localization succeeded.

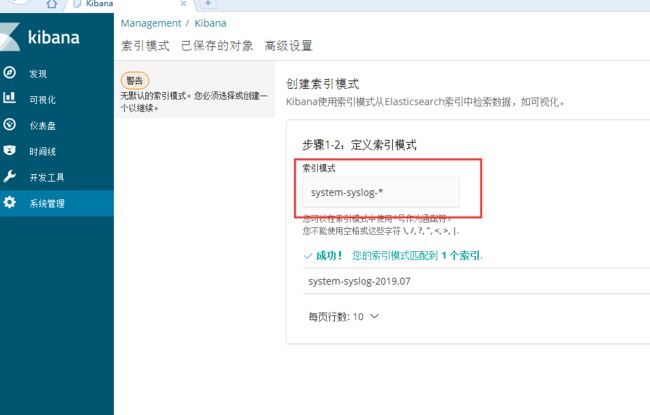

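17. Create an index pattern in Kibana

The earlier steps created a Logstash configuration file that collects system logs. Now create the matching index pattern in Kibana.

Go to Management > Index Patterns.

In the index pattern field, enter system-syslog-*, which matches every index whose name starts with system-syslog-.

On the next step, configure the time filter; here the timestamp field is used.

Create the index pattern.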

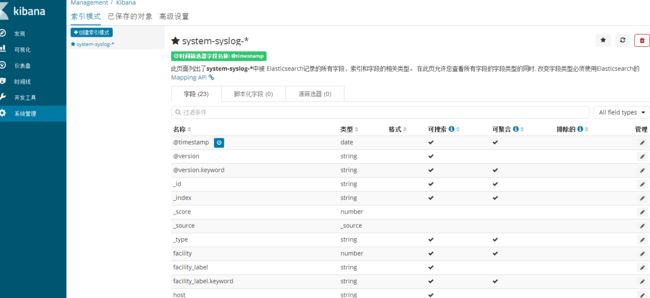

The page lists all fields collected from the system logs. Click Discover to choose which fields to display.
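The `*` in the index pattern `system-syslog-*` is a plain wildcard over index names. The sketch below uses Python's `fnmatch` module as a stand-in to show which names such a pattern selects. This is an approximation: `fnmatch` also understands `?` and `[...]`, which are not relevant here. The helper name `matching_indices` and the sample index list are assumptions.

```python
from fnmatch import fnmatchcase


def matching_indices(pattern, index_names):
    """Return the index names selected by a wildcard pattern, in input order."""
    return [name for name in index_names if fnmatchcase(name, pattern)]
```

Because Logstash creates a new index each month, a single pattern keeps covering the data as new indices such as `system-syslog-2019.08` appear.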

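VI. Collecting and Analyzing Nginx Logs with ELK

1. The Filebeat component

The commonly used Beats are:

Filebeat: collects files and directories; used to ship log data.

Heartbeat: checks connectivity between systems; it can probe over ICMP, TCP, and HTTP.

Winlogbeat: collects Windows event logs.

Packetbeat: captures network packets and analyzes protocols to collect network data.

Metricbeat: collects metrics, mainly for monitoring the performance of systems and software (operating systems, middleware, and so on).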

2. Install Filebeat on the Nginx host

    [root@zabbix_server src]# cd /usr/local/src/
    [root@zabbix_server src]# wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-6.2.4-x86_64.rpm
    [root@zabbix_server src]# rpm -ivh filebeat-6.2.4-x86_64.rpm

3. Configure and start Filebeat

First configure Filebeat to read the Nginx log and print the events to the terminal:

    [root@zabbix_server etc]# vim /etc/filebeat/filebeat.yml
    filebeat.prospectors:
    - type: log
      enabled: true
      paths:
        - /var/log/nginx/host.access.log    # log file to collect
    output.console:                         # print events to the terminal
      enabled: true
    # comment out the Elasticsearch output
    # output.elasticsearch:
      # Array of hosts to connect to.
      # hosts: ["localhost:9200"]

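Save and exit, then run the following command to test the configuration:

    [root@zabbix_server etc]# filebeat -c /etc/filebeat/filebeat.yml

If the terminal fills with Nginx log events, the configuration works. Next, switch the output to the Logstash service: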

    filebeat.prospectors:
    - type: log
      enabled: true
      paths:
        - /var/log/nginx/access.log         # log file to collect
      fields:
        log_topics: nginx-172.28.18.75      # custom label identifying this log source
    output.logstash:
      hosts: ["172.28.18.69:10001"]         # Logstash address and port

Then create a new pipeline configuration file for the Nginx logs on the Logstash server:

    input {
      beats {
        port => 10001                       # port that receives events from Filebeat
      }
    }
    output {
      if [fields][log_topics] == "nginx-172.28.18.75" {
        elasticsearch {
          hosts => ["172.28.18.69:9200"]                   # Elasticsearch address
          index => "nginx-172.28.18.75-%{+YYYY.MM.dd}"     # index to write to
        }
      }
    }
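The conditional in the `output` block routes events by the custom `fields.log_topics` value that Filebeat attaches, and the `%{+YYYY.MM.dd}` suffix produces one index per day. The Python sketch below mirrors that decision for a single event so the logic is easy to trace. It is an illustration only, not how Logstash is implemented, and `target_index` is a hypothetical name.

```python
from datetime import datetime, timezone


def target_index(event, topic="nginx-172.28.18.75"):
    """Return the daily index for an event, or None if the condition fails.

    `event` is a dict with an ISO 8601 "@timestamp" string and an optional
    nested "fields" dict, as in a Filebeat event.
    """
    if event.get("fields", {}).get("log_topics") != topic:
        return None  # unmatched events are not sent to this output
    # Accept a trailing "Z" on Python versions whose fromisoformat rejects it.
    raw = event["@timestamp"].replace("Z", "+00:00")
    ts = datetime.fromisoformat(raw).astimezone(timezone.utc)
    return f"{topic}-{ts:%Y.%m.%d}"
```

Adding a second Nginx host would mean giving it its own `log_topics` value in filebeat.yml and a matching conditional in the Logstash output.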

Restart the Logstash service, then restart Filebeat on the Nginx host:

    [root@server-1 old]# systemctl restart logstash

    [root@zabbix_server filebeat]# service filebeat restart
    2019-07-11T14:57:02.728+0800 INFO instance/beat.go:468 Home path: [/usr/share/filebeat] Config path: [/etc/filebeat] Data path: [/var/lib/filebeat] Logs path: [/var/log/filebeat]
    2019-07-11T14:57:02.729+0800 INFO instance/beat.go:475 Beat UUID: 1435865e-4392-45fe-86a4-72ea77d3c75d
    2019-07-11T14:57:02.729+0800 INFO instance/beat.go:213 Setup Beat: filebeat; Version: 6.2.4
    2019-07-11T14:57:02.730+0800 INFO pipeline/module.go:76 Beat name: zabbix_server.jinglong
    Config OK
    Stopping filebeat:                                         [  OK  ]
    Starting filebeat: 2019-07-11T14:57:02.895+0800 INFO instance/beat.go:468 Home path: [/usr/share/filebeat] Config path: [/etc/filebeat] Data path: [/var/lib/filebeat] Logs path: [/var/log/filebeat]
    2019-07-11T14:57:02.895+0800 INFO instance/beat.go:475 Beat UUID: 1435865e-4392-45fe-86a4-72ea77d3c75d
    2019-07-11T14:57:02.895+0800 INFO instance/beat.go:213 Setup Beat: filebeat; Version: 6.2.4
    2019-07-11T14:57:02.896+0800 INFO pipeline/module.go:76 Beat name: zabbix_server.jinglong
    Config OK
                                                               [  OK  ]

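4. Configure the index pattern in Kibana

Open http://172.28.18.69:5601 in a browser.

Go to Management > Create Index Pattern.

The Nginx log index is now listed; create an index pattern for it.

Select Discover.

The Nginx logs are now visible.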