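Lab platform: VirtualBox 4.12

Operating system: RedHat 5.4

This lab uses two hosts to simulate a high-availability cluster, with DRBD providing replicated storage, so that when one host goes down the data stays intact and the service keeps running.

I. First, some basic preparation.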

# vim /etc/sysconfig/network-scripts/ifcfg-eth0

# vim /etc/sysconfig/network

Modify both files according to your lab plan (each node's IP address and hostname).

# vim /etc/hosts

Add:

- 192.168.56.10 node1.a.org node1

- 192.168.56.30 node2.a.org node2

Copy the file to node2:

# scp /etc/hosts node2:/etc/
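Both nodes need identical entries, and this step is easy to repeat by accident. A small idempotent helper avoids duplicate lines. The following is a minimal bash sketch and is not part of the original procedure. The function name `add_host_entry` is hypothetical. It appends a line only when no existing line already has that IP as its first field:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not from the original lab): append "IP FQDN SHORT"
# to a hosts-format file unless some line already has IP as its first field.
add_host_entry() {
  local file=$1 ip=$2 fqdn=$3 short=$4
  # awk exits 0 if a line with first field == ip exists, non-zero otherwise
  if ! awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file"; then
    printf '%s\t%s %s\n' "$ip" "$fqdn" "$short" >> "$file"
  fi
}
```

For example, run `add_host_entry /etc/hosts 192.168.56.10 node1.a.org node1` and `add_host_entry /etc/hosts 192.168.56.30 node2.a.org node2` on each node. Running them a second time changes nothing.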

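Next, set up password-less SSH login between the two nodes (press Enter at the ssh-keygen prompts to accept an empty passphrase):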

- [root@node1 ~]#ssh-keygen -t rsa

- [root@node1 ~]#ssh-copy-id -i ~/.ssh/id_rsa.pub root@node2

- [root@node2 ~]#ssh-keygen -t rsa

- [root@node2 ~]#ssh-copy-id -i ~/.ssh/id_rsa.pub root@node1

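II. Install DRBD to build the replicated storage.

1. Choose the package versions that match your system; the kmod package must match the running kernel. I used: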

- drbd83-8.3.8-1.el5.centos.i386.rpm

- kmod-drbd83-8.3.8-1.el5.centos.i686.rpm

Install them on both hosts:

- [root@node1 ~]# yum localinstall *.rpm -y --nogpgcheck

- [root@node1 ~]# scp *.rpm node2:/root

- [root@node2 ~]# yum localinstall *.rpm -y --nogpgcheck

DRBD also needs a partition on each host. Partitioning is simple and not covered in detail here. I created a 5 GB partition on each host, and on both hosts it is /dev/sda5.

2. Configure DRBD

- [root@node1 ~]# cp /usr/share/doc/drbd83-8.3.8/drbd.conf /etc/

- cp: overwrite `/etc/drbd.conf'? Y # answer Y to overwrite

- [root@node1 ~]# scp /etc/drbd.conf node2:/etc/

Edit the configuration file:

[root@node1 ~]# vim /etc/drbd.d/global_common.conf

- global {

- usage-count no;

- }

- common {

- protocol C;

- handlers {

- pri-on-incon-degr "/usr/lib/drbd/notify-pri-on-incon-degr.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";

- pri-lost-after-sb "/usr/lib/drbd/notify-pri-lost-after-sb.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";

- local-io-error "/usr/lib/drbd/notify-io-error.sh; /usr/lib/drbd/notify-emergency-shutdown.sh; echo o > /proc/sysrq-trigger ; halt -f";

- }

- startup {

- wfc-timeout 120;

- degr-wfc-timeout 120;

- }

- disk {

- on-io-error detach;

- fencing resource-only;

- }

- net {

- cram-hmac-alg "sha1";

- shared-secret "mydrbdlab";

- }

- syncer {

- rate 100M;

- }

- }

Define a resource for DRBD:

- [root@node1 ~]# vim /etc/drbd.d/web.res # any name works, but the file must end in .res

- resource web {

- on node1.a.org {

- device /dev/drbd0;

- disk /dev/sda5;

- address 192.168.56.10:7789;

- meta-disk internal;

- }

- on node2.a.org {

- device /dev/drbd0;

- disk /dev/sda5;

- address 192.168.56.30:7789;

- meta-disk internal;

- }

- }

- [root@node1 ~]# scp /etc/drbd.d/web.res node2:/etc/drbd.d/
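The two `on` sections differ only in hostname and address, so the resource file can also be generated. This minimal bash sketch rests on a few assumptions. The function name `gen_drbd_res` is hypothetical, and it emits only the fields used above. Each hostname must be exactly what `uname -n` prints on that node, because DRBD picks the `on` section by hostname:

```bash
#!/usr/bin/env bash
# Hypothetical generator for a DRBD 8.3 resource file like web.res above.
# Args: resource, device, backing disk, port, then "hostname ip" pairs.
gen_drbd_res() {
  local res=$1 dev=$2 disk=$3 port=$4
  shift 4
  printf 'resource %s {\n' "$res"
  # one "on <host>" section per hostname/IP pair
  while [ "$#" -ge 2 ]; do
    printf '  on %s {\n' "$1"
    printf '    device %s;\n' "$dev"
    printf '    disk %s;\n' "$disk"
    printf '    address %s:%s;\n' "$2" "$port"
    printf '    meta-disk internal;\n'
    printf '  }\n'
    shift 2
  done
  printf '}\n'
}
```

Usage: `gen_drbd_res web /dev/drbd0 /dev/sda5 7789 node1.a.org 192.168.56.10 node2.a.org 192.168.56.30 > /etc/drbd.d/web.res`. Then run `drbdadm dump web`, which parses the configuration and prints it back, as a quick syntax check.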

Initialize the resource metadata by running the following on both node1 and node2:

- [root@node1 ~]# drbdadm create-md web

- [root@node2 ~]# drbdadm create-md web

- Start the service:

- [root@node1 ~]# /etc/init.d/drbd start

- [root@node2 ~]# /etc/init.d/drbd start

Make one of the nodes the primary (node1 here). This also starts the initial full sync to node2:

[root@node1 ~]# drbdsetup /dev/drbd0 primary -o
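After the `primary` command, DRBD copies the whole 5 GB partition to node2. You can follow the progress with `cat /proc/drbd`. For scripting, here is a hedged bash sketch; the helper name `drbd_synced` is hypothetical. It succeeds only when every device line in a /proc/drbd-style file shows `cs:Connected` and `ds:UpToDate/UpToDate`. It assumes the DRBD 8.3 line format, for example ` 0: cs:Connected ro:Primary/Secondary ds:UpToDate/UpToDate C r----`:

```bash
#!/usr/bin/env bash
# Hypothetical helper: return 0 only if every device line in a
# /proc/drbd-style file (default /proc/drbd) is Connected and UpToDate
# on both sides; return non-zero otherwise, including when no device is listed.
drbd_synced() {
  local f=${1:-/proc/drbd}
  awk '
    /^ *[0-9]+: cs:/ {
      n++
      if ($0 ~ /cs:Connected/ && $0 ~ /ds:UpToDate\/UpToDate/) ok++
    }
    END { exit !(n > 0 && n == ok) }
  ' "$f"
}
```

For example, `until drbd_synced; do sleep 10; done` waits until the initial sync has finished.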

3. Create the file system

- [root@node1 ~]# mke2fs -j -L DRBD /dev/drbd0

- [root@node1 ~]# mkdir /mnt/drbd

- [root@node1 ~]# mount /dev/drbd0 /mnt/drbd

III. Install corosync

Packages used:

- cluster-glue-1.0.6-1.6.el5.i386.rpm

- cluster-glue-libs-1.0.6-1.6.el5.i386.rpm

- corosync-1.2.7-1.1.el5.i386.rpm

- corosynclib-1.2.7-1.1.el5.i386.rpm

- heartbeat-3.0.3-2.3.el5.i386.rpm

- heartbeat-libs-3.0.3-2.3.el5.i386.rpm

- libesmtp-1.0.4-5.el5.i386.rpm

- openais-1.1.3-1.6.el5.i386.rpm

- openaislib-1.1.3-1.6.el5.i386.rpm

- pacemaker-1.0.11-1.2.el5.i386.rpm

- pacemaker-libs-1.0.11-1.2.el5.i386.rpm

- resource-agents-1.0.4-1.1.el5.i386.rpm

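Install them on both nodes:

[root@node1 ~]# yum localinstall *.rpm -y --nogpgcheck

[root@node1 ~]# scp *.rpm node2:/root

[root@node2 ~]# yum localinstall *.rpm -y --nogpgcheck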

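Configure corosync:

- [root@node1 ~]# cd /etc/corosync

- [root@node1 corosync]# cp corosync.conf.example corosync.conf

- [root@node1 corosync]# vim corosync.conf

- In the totem interface section, set bindnetaddr to the network address of the cluster network:

- bindnetaddr: 192.168.56.0

- Then append the following: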

- service {

- ver: 0

- name: pacemaker

- }

- aisexec {

- user: root

- group: root

- }
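`bindnetaddr` is the network address of the interface corosync binds to, that is, the node's IP ANDed with its netmask. For 192.168.56.10 with netmask 255.255.255.0 this gives 192.168.56.0, which is why the same value works on both nodes. Below is a small bash sketch of that calculation. The function name `network_addr` is hypothetical, and it handles dotted-quad IPv4 only:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the IPv4 network address (IP AND netmask),
# the value corosync expects in bindnetaddr.
network_addr() {
  local i1 i2 i3 i4 m1 m2 m3 m4
  IFS=. read -r i1 i2 i3 i4 <<< "$1"
  IFS=. read -r m1 m2 m3 m4 <<< "$2"
  printf '%d.%d.%d.%d\n' \
    "$(( i1 & m1 ))" "$(( i2 & m2 ))" "$(( i3 & m3 ))" "$(( i4 & m4 ))"
}
```

For example, `network_addr 192.168.56.10 255.255.255.0` prints `192.168.56.0`.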

Generate the authentication key used for communication between the nodes, then copy it with corosync.conf to node2:

- [root@node1 corosync]# corosync-keygen

- [root@node1 corosync]# scp -p corosync.conf authkey node2:/etc/corosync/

- [root@node1 ~]# mkdir /var/log/cluster

- [root@node1 ~]# ssh node2 'mkdir /var/log/cluster'

Start the service on both nodes:

- [root@node1 ~]# /etc/init.d/corosync start

- [root@node1 ~]# ssh node2 -- /etc/init.d/corosync start

- [root@node1 ~]# crm configure property stonith-enabled=false # disable STONITH; this lab has no fencing device

- Add the virtual IP as a cluster resource:

- [root@node1 ~]#crm configure primitive WebIP ocf:heartbeat:IPaddr params ip=192.168.56.20

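- [root@node1 ~]# crm configure property no-quorum-policy=ignore # a two-node cluster loses quorum as soon as one node fails

- Set a default resource stickiness, so that resources prefer to stay on the node where they are running instead of being moved when a node rejoins: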

- [root@node1 ~]# crm configure rsc_defaults resource-stickiness=100

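- Pacemaker must be the only thing controlling DRBD. First unmount the file system, then stop the drbd service and disable it at boot on both nodes:

- [root@node1 ~]# umount /mnt/drbd

- [root@node1 ~]# /etc/init.d/drbd stop; chkconfig drbd off

- [root@node2 ~]# /etc/init.d/drbd stop; chkconfig drbd off

- [root@node1 ~]# crm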

- crm(live)# configure

- crm(live)configure# primitive webdrbd ocf:heartbeat:drbd params drbd_resource=web op monitor role=Master interval=50s timeout=30s op monitor role=Slave interval=60s timeout=30s

- crm(live)configure# master MS_Webdrbd webdrbd meta master-max="1" master-node-max="1" clone-max="2" clone-node-max="1" notify="true"

- crm(live)configure# verify

- crm(live)configure# commit

Create a cluster resource that automatically mounts the web DRBD device on whichever node is Primary:

- # crm

- crm(live)# configure

- crm(live)configure# primitive WebFS ocf:heartbeat:Filesystem params device="/dev/drbd0" directory="/mnt/drbd" fstype="ext3"

- crm(live)configure# colocation WebFS_on_MS_webdrbd inf: WebFS MS_Webdrbd:Master

- crm(live)configure# order WebFS_after_MS_Webdrbd inf: MS_Webdrbd:promote WebFS:start

- crm(live)configure# verify

- crm(live)configure# commit

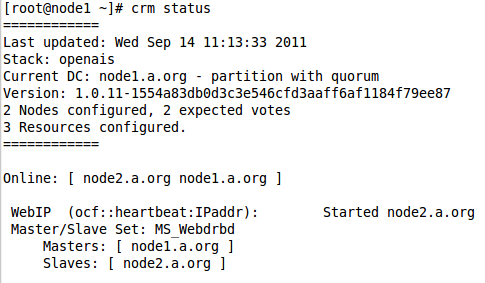

Check the running state of the cluster resources:

Next, install httpd on both nodes for testing:

- [root@node1 ~]# yum install httpd -y

- [root@node2 ~]# yum install httpd -y

- [root@node1 ~]# setenforce 0 # switch SELinux to permissive mode

- [root@node2 ~]# setenforce 0

- [root@node1 ~]# vim /etc/httpd/conf/httpd.conf

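- DocumentRoot "/mnt/drbd/html"

- [root@node1 ~]# scp /etc/httpd/conf/httpd.conf node2:/etc/httpd/conf/ # node2 needs the same DocumentRoot

- [root@node1 ~]# mkdir -p /mnt/drbd/html

- [root@node1 ~]# echo "<h1>Node1.a.org</h1>" > /mnt/drbd/html/index.html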

- [root@node1 ~]# crm configure primitive WebSite lsb:httpd # add httpd as a cluster resource

- [root@node1 ~]# crm configure colocation website-with-ip INFINITY: WebSite WebIP # keep WebSite on the same node as WebIP

- [root@node1 ~]# crm configure order httpd-after-ip mandatory: WebIP WebSite # start WebIP before WebSite

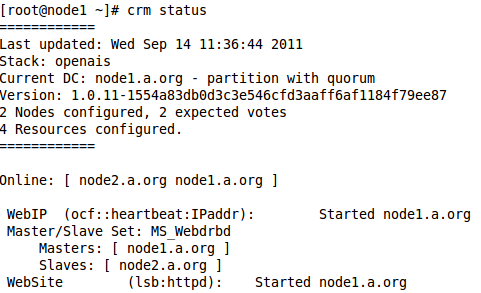

查看资源状态:

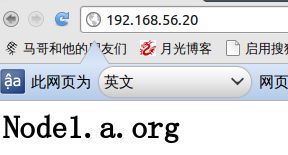

Now open 192.168.56.20 in a browser, and you can see:

Now stop node1.

Check the resource status: