Reposted from: http://blog.csdn.net/qq_21158525/article/details/78052644?locationNum=8&fps=1

Both ARKit and ARCore consist of three parts: camera pose estimation, environment understanding (plane detection), and light estimation.

Partial ARCore source code: https://github.com/google-ar/arcore-unity-sdk/tree/master/Assets/GoogleARCore/SDK

ARKit API: https://developer.apple.com/documentation/arkit

ARCore API: https://developers.google.com/ar/reference/

-Camera pose estimation

For motion tracking, both use VIO (visual-inertial odometry).

ARKit uses a feature-point method that produces a sparse point cloud: https://www.youtube.com/watch?v=rCknUayCsjk

“ARKit recognizes notable features in the scene image, tracks differences in the positions of those features across video frames, and compares that information with motion sensing data.” Source: https://developer.apple.com/documentation/arkit/about_augmented_reality_and_arkit

Some have speculated that ARCore uses a direct method to estimate a semi-dense point cloud. Google itself, however, says it uses feature points, so its point cloud is presumably sparse as well:

“ARCore detects visually distinct features in the captured camera image called feature points and uses these points to compute its change in location.”

Source: https://developers.google.com/ar/discover/concepts

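-Environment understanding (plane detection)

I don't know much about how this works internally, so here are some excerpts from the official documentation.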

ARKit:

“…can track and place objects on smaller feature points as well…”

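“…Use hit-testing methods (see the ARHitTestResult class) to find real-world surfaces corresponding to a point in the camera image….”

“You can use hit-test results or detected planes to place or interact with virtual content in your scene.”

At the time of writing, ARKit could not detect vertical planes (vertical plane detection was added later, in ARKit 1.5 with iOS 11.3).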

ARCore:

“ARCore looks for clusters of feature points that appear to lie on common horizontal surfaces…”

“…can also determine each plane’s boundary…”

“…flat surfaces without texture, such as a white desk, may not be detected properly…”
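To make the idea of “clusters of feature points that appear to lie on common horizontal surfaces” concrete, here is a toy Swift sketch. It is not ARCore's actual algorithm, which is not public. It assumes a y-up world frame with points already in world coordinates, buckets the points by height using a hypothetical `tolerance`, and treats the most populated bucket (if it has at least a hypothetical `minPoints` members) as a candidate horizontal plane, using the x/z bounding box of its points as a crude boundary.

```swift
// Toy horizontal-plane finder. NOT ARCore's algorithm: a hypothetical sketch
// assuming a y-up world frame and feature points already in world coordinates.
struct FeaturePoint {
    var x: Double, y: Double, z: Double
}

struct HorizontalPlane {
    var height: Double                  // mean y of the supporting points
    var minX: Double, maxX: Double      // crude axis-aligned boundary in x
    var minZ: Double, maxZ: Double      // crude axis-aligned boundary in z
    var supportCount: Int               // number of points in the winning bucket
}

/// Buckets points into height slices of size `tolerance` and returns the
/// most populated slice as a plane, if it has at least `minPoints` points.
func detectHorizontalPlane(_ points: [FeaturePoint],
                           tolerance: Double = 0.02,
                           minPoints: Int = 5) -> HorizontalPlane? {
    var buckets: [Int: [FeaturePoint]] = [:]
    for p in points {
        let key = Int((p.y / tolerance).rounded(.down))
        buckets[key, default: []].append(p)
    }
    guard let best = buckets.values.max(by: { $0.count < $1.count }),
          best.count >= minPoints else { return nil }
    let height = best.map { $0.y }.reduce(0, +) / Double(best.count)
    let xs = best.map { $0.x }, zs = best.map { $0.z }
    return HorizontalPlane(height: height,
                           minX: xs.min()!, maxX: xs.max()!,
                           minZ: zs.min()!, maxZ: zs.max()!,
                           supportCount: best.count)
}
```

A textureless surface yields few feature points, so its bucket never reaches `minPoints`; that is one intuitive way to read the white-desk limitation quoted above.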

Occlusion is not handled: https://www.youtube.com/watch?v=aSKgJEt9l-0

-Light estimation

I haven't looked into this yet.

ARKit

Reference post: http://blog.csdn.net/u013263917/article/details/72903174

The iPhone X adds a TrueDepth camera, which ARKit can also use.

1. Introduction

The ARKit framework supports two kinds of AR:

AR built on 3D scenes (SceneKit), the mainstream approach

AR built on 2D scenes (SpriteKit)

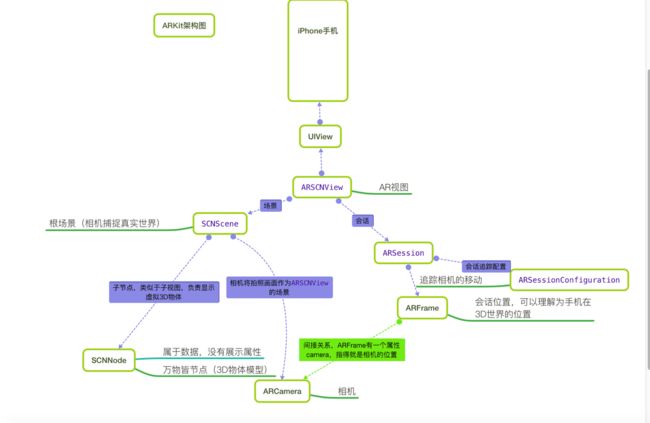

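The relationship between ARKit and SceneKit

ARKit does not render anything by itself; it has to be paired with a rendering framework, typically SceneKit (SpriteKit or a custom Metal renderer also work).

ARKit captures real-world images from the camera and recovers the 3D structure of the world.

SceneKit displays virtual 3D models in the image.

Here we focus on ARKit.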

2. ARKit

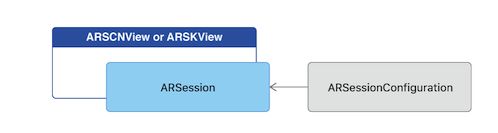

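In ARKit, the view that displays 3D augmented-reality content, ARSCNView, inherits from SceneKit's SCNView, which in turn inherits from UIKit's UIView.

In a complete AR experience, ARKit is only responsible for turning the real-world camera feed into a 3D scene. This process has two main stages: capturing the camera image, which ARCamera describes, and building the 3D scene, which ARSession handles.

ARSCNView and ARCamera are not directly connected. They communicate through the AR session, ARSession, one of the most important classes in ARKit.

To run an ARSession, you must provide a session configuration object, ARSessionConfiguration (renamed ARConfiguration in the final iOS 11 release). Its main job is to track the camera's position in the 3D world and to capture certain scene features, such as planes. The class itself is simple but plays a central role.

ARSessionConfiguration is a base class. For the best AR experience, Apple recommends its subclass ARWorldTrackingSessionConfiguration, which requires an A9 processor or later, i.e. iPhone 6s or newer.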

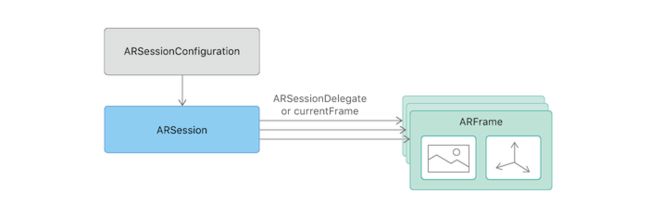

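2.1. ARWorldTrackingSessionConfiguration and ARFrame

The two main participants in the bridge that ARSession builds are ARWorldTrackingSessionConfiguration and ARFrame.

ARWorldTrackingSessionConfiguration (the session tracking configuration) tracks the device's orientation and position and detects the real-world surfaces seen by the device's camera. Internally it runs a substantial set of algorithms and uses the iPhone's motion sensors to detect the phone's movement, rotation, and even tumbling.

Inside ARWorldTrackingSessionConfiguration is the VIO system.

Note: ARWorldTrackingSessionConfiguration was deprecated in iOS 11 beta 8, so the rest of this post uses its replacement, ARWorldTrackingConfiguration.

When ARWorldTrackingConfiguration computes the camera's position in the 3D world, it does not hold on to that data itself; it hands the result to ARSession to manage (consistent with the session's role of managing memory). The class that carries this camera position data is ARFrame.

ARSession has a property called currentFrame, which holds the latest ARFrame.

ARCamera does not process data; for each frame it describes the camera that captured the image, i.e. its pose and imaging parameters. It is one part of the 3D scene: every 3D scene has a camera, and that camera determines our field of view onto the objects.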

2.2. ARSession

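There are two main ways to get camera position data from ARSession:

First, push: ARSession continuously reports the camera position in real time. You receive it by implementing the ARSessionDelegate method `- (void)session:(ARSession *)session didUpdateFrame:(ARFrame *)frame` (in Swift, `session(_:didUpdate:)`).

Second, pull: you fetch it yourself whenever you need it, through ARSession's currentFrame property.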
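The difference between the two access patterns can be sketched in plain Swift. The types below (`Frame`, `FrameSource`, `FrameSourceDelegate`, `FrameLogger`) are hypothetical stand-ins so the sketch runs without ARKit; in real code their roles are played by ARFrame, ARSession, and ARSessionDelegate, with `session(_:didUpdate:)` as the push callback and `currentFrame` as the pull property.

```swift
// Hypothetical stand-ins for ARFrame / ARSession / ARSessionDelegate,
// used only to illustrate push vs. pull; these are NOT ARKit types.
struct Frame {
    let timestamp: Double
}

protocol FrameSourceDelegate: AnyObject {
    func frameSource(_ source: FrameSource, didUpdate frame: Frame)
}

final class FrameSource {
    weak var delegate: FrameSourceDelegate?
    private(set) var currentFrame: Frame?       // pull: read it whenever you like

    // Called by the (simulated) tracking pipeline for every new camera frame.
    func deliver(_ frame: Frame) {
        currentFrame = frame                    // keep the latest frame for pulling
        delegate?.frameSource(self, didUpdate: frame)   // push: notify the observer
    }
}

// A push-style observer that records the timestamp of every frame it receives.
final class FrameLogger: FrameSourceDelegate {
    var received: [Double] = []
    func frameSource(_ source: FrameSource, didUpdate frame: Frame) {
        received.append(frame.timestamp)
    }
}
```

Push fits per-frame work such as processing every captured image, while pull fits on-demand queries such as reading the camera pose when the user taps the screen.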

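2.3. The complete ARKit workflow

ARSCNView loads an SCNScene.

The SCNScene starts the camera (ARCamera) capturing the scene.

Once the scene is captured, ARSCNView passes the scene data to the session.

The session tracks the scene through the ARSessionConfiguration it manages and returns an ARFrame.

A child node (a 3D model) is added to the ARSCNView's scene.

The point of ARSessionConfiguration tracking the camera's 3D position is that, when a 3D model is added, its true transform matrix relative to the camera can be computed.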
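As a concrete case of computing a model's position from the camera's pose, the sketch below places an object a given distance straight in front of the camera. It assumes a row-major 4x4 camera-to-world matrix stored in plain arrays (a hypothetical stand-in for ARCamera.transform, which is a column-major simd_float4x4) and ARKit's convention that the camera looks along its local -Z axis. In ARKit itself, the usual idiom is to copy matrix_identity_float4x4, set its columns.3.z to the negative distance, and multiply frame.camera.transform by it with simd_mul.

```swift
// Row-major 4x4 camera-to-world transform (hypothetical stand-in for
// ARCamera.transform); plain arrays so the sketch runs without simd.
typealias Matrix4 = [[Double]]

/// World-space position of a point `distance` metres in front of the camera,
/// assuming the camera looks along its local -Z axis (ARKit's convention).
func pointInFront(of cameraTransform: Matrix4, distance: Double) -> [Double] {
    let local: [Double] = [0, 0, -distance, 1]     // homogeneous camera-space point
    // Multiply the first three rows of the transform by the local point.
    return (0..<3).map { (row: Int) -> Double in
        (0..<4).reduce(0.0) { sum, col in sum + cameraTransform[row][col] * local[col] }
    }
}
```

With an identity pose the object lands at (0, 0, -0.5) for a 0.5 m distance; if the camera has turned 90° to the left about the vertical axis, the same call yields (-0.5, 0, 0).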

3. API analysis

-AROrientationTrackingConfiguration

Tracks the device’s movement with three degrees of freedom (3DOF): specifically, the three rotation axes. Since it tracks only these three, it is less capable than the configuration below.
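To see what losing the three translational degrees of freedom means in practice, here is a minimal sketch with hypothetical types (`Vec3`, `Pose`, `TrackingMode`; not ARKit API, and rotation reduced to yaw for brevity). A 3DOF tracker reports the device's rotation but keeps its position fixed at the origin, so after you walk 1 m toward a virtual object placed 2 m ahead, the object still appears 2 m away; a 6DOF tracker reports the correct 1 m.

```swift
// Hypothetical pose model (not ARKit API); rotation reduced to yaw for brevity.
struct Vec3 { var x, y, z: Double }

struct Pose {
    var yaw: Double       // rotation about the vertical (y) axis, radians
    var position: Vec3    // translation in metres
}

enum TrackingMode { case threeDOF, sixDOF }

// The pose a tracker would report for the device's true pose.
func reportedPose(_ actual: Pose, mode: TrackingMode) -> Pose {
    switch mode {
    case .sixDOF:
        return actual                                                    // rotation + translation
    case .threeDOF:
        return Pose(yaw: actual.yaw, position: Vec3(x: 0, y: 0, z: 0))   // rotation only
    }
}

// Distance from the reported camera position to a world-anchored virtual object.
func apparentDistance(to object: Vec3, from pose: Pose) -> Double {
    let dx = object.x - pose.position.x
    let dy = object.y - pose.position.y
    let dz = object.z - pose.position.z
    return (dx * dx + dy * dy + dz * dz).squareRoot()
}
```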

-[ARWorldTrackingConfiguration](https://developer.apple.com/documentation/arkit/arworldtrackingconfiguration)

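Tracks the camera and detects planes. It tracks the device’s movement with six degrees of freedom (6DOF).

Most of the SLAM work is presumably done inside this class.

Yet starting the simplest possible AR session takes only this: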

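```swift
import ARKit

// Assumes `sceneView` is an ARSCNView, e.g. an outlet in a view controller.
let configuration = ARWorldTrackingConfiguration()
configuration.planeDetection = .horizontal
sceneView.session.run(configuration)
```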

The actual implementation stays hidden behind this API...

-ARCamera

The ARCamera class documentation lists many relevant topics:

| Topic | Member | Description |
|---|---|---|
| Handling Tracking Status | trackingState | The general quality of position tracking available when the camera captured a frame. |
| | ARTrackingState | Possible values for position tracking quality. |
| | trackingStateReason | A possible diagnosis for limited position tracking quality as of when the camera captured a frame. |
| | ARTrackingStateReason | Possible causes for limited position tracking quality. |
| Examining Camera Geometry | transform | The position and orientation of the camera in world coordinate space. |
| | eulerAngles | The orientation of the camera, expressed as roll, pitch, and yaw values. |
| Examining Imaging Parameters | imageResolution | The width and height, in pixels, of the captured camera image. |
| | intrinsics | A matrix that converts between the 2D camera plane and 3D world coordinate space. |
| Applying Camera Geometry | projectionMatrix | A transform matrix appropriate for rendering 3D content to match the image captured by the camera. |
| | projectionMatrixForOrientation: | Returns a transform matrix appropriate for rendering 3D content to match the image captured by the camera, using the specified parameters. |
| | viewMatrixForOrientation: | Returns a transform matrix for converting from world space to camera space. |
| | projectPoint:orientation:viewportSize: | Returns the projection of a point from the 3D world space detected by ARKit into the 2D space of a view rendering the scene. |
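The intrinsics entry can be made concrete with the textbook pinhole model. Apple's documentation describes ARCamera.intrinsics as the matrix [[fx, 0, ox], [0, fy, oy], [0, 0, 1]], with focal lengths and principal-point offsets in pixels of the captured image. The sketch below uses plain Swift types (hypothetical `Intrinsics`, `project`, `unproject`) and the computer-vision convention that the camera looks along +Z with image y growing downward. ARKit's own camera space looks along -Z, and projectPoint:orientation:viewportSize: additionally accounts for interface orientation and viewport size, so this shows only the underlying model, not a replacement for that method.

```swift
// Pinhole intrinsics K = [[fx, 0, cx], [0, fy, cy], [0, 0, 1]], all in pixels.
// Hypothetical plain-Swift type; convention: camera looks along +Z.
struct Intrinsics {
    var fx: Double, fy: Double   // focal lengths in pixels
    var cx: Double, cy: Double   // principal point in pixels
}

/// Projects a camera-space point to pixel coordinates; nil if it is behind the camera.
func project(_ p: (x: Double, y: Double, z: Double),
             with k: Intrinsics) -> (u: Double, v: Double)? {
    guard p.z > 0 else { return nil }
    return (u: k.fx * p.x / p.z + k.cx,
            v: k.fy * p.y / p.z + k.cy)
}

/// Back-projects pixel (u, v) at a known depth to a camera-space point.
func unproject(u: Double, v: Double, depth: Double,
               with k: Intrinsics) -> (x: Double, y: Double, z: Double) {
    return (x: (u - k.cx) * depth / k.fx,
            y: (v - k.cy) * depth / k.fy,
            z: depth)
}
```

For example, with fx = fy = 1000 and a principal point of (640, 360), the point (0.1, -0.05, 2) projects to pixel (690, 335), and unprojecting that pixel at depth 2 recovers the original point. Going from pixel to 3D needs the depth, which is why a single image plus intrinsics is not enough and ARKit relies on tracking and hit-testing.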

-ARFrame

The topics in the ARFrame class reveal the input and output interfaces of the SLAM system:

| Topic | Member | Description |
|---|---|---|
| Accessing Captured Video Frames | capturedImage | A pixel buffer containing the image captured by the camera. |
| | timestamp | The time at which the frame was captured. |
| | capturedDepthData | The depth map, if any, captured along with the video frame. |
| | capturedDepthDataTimestamp | The time at which depth data for the frame (if any) was captured. |
| Examining Scene Parameters | camera | Information about the camera position, orientation, and imaging parameters used to capture the frame. |
| | lightEstimate | An estimate of lighting conditions based on the camera image. |
| | displayTransformForOrientation: | Returns an affine transform for converting between normalized image coordinates and a coordinate space appropriate for rendering the camera image onscreen. |
| Tracking and Finding Objects | anchors | The list of anchors representing positions tracked or objects detected in the scene. |
| | hitTest:types: | Searches for real-world objects or AR anchors in the captured camera image. |
| Debugging Scene Detection | rawFeaturePoints | The current intermediate results of the scene analysis ARKit uses to perform world tracking. |
| | ARPointCloud | A collection of points in the world coordinate space of the AR session. |

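Through ARPointCloud you can see the number of feature points and their identifiers.

But which kind of feature point does ARKit actually use? One idea is to check the dimensionality of identifiers; for comparison, a SIFT descriptor has 128 dimensions. However, identifiers holds only one 64-bit ID per point (UInt64 in Swift), not a descriptor, so it does not reveal the feature type, and as far as I can tell Apple does not document it.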

-ARLightEstimate

Omitted.

ARCore

Google has released ARCore for Android Studio, Unity, Unreal, and the web; here we only look at Unity.

Official API reference: https://developers.google.com/ar/reference/unity/

SDK on GitHub: https://github.com/google-ar/arcore-unity-sdk