Nginx + Keepalived Dual-Node Hot Standby (Active-Active Mode)

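Introduction to Keepalived

Keepalived is a high-availability solution built on the VRRP protocol. It can be used to avoid a single point of failure on an IP address; similar tools include heartbeat, corosync, and pacemaker. It is rarely deployed on its own, though: it usually works together with a load balancer (such as LVS, HAProxy, or nginx) to make a cluster highly available.

VRRP, the protocol Keepalived relies on, groups two or more router devices into a single virtual router that exposes one or more virtual router IPs.

Inside the group, the router that actually owns the external IP is the MASTER as long as it is working normally; otherwise the MASTER is chosen by an election algorithm.

The MASTER handles all network functions for the virtual router IP, such as answering ARP requests, ICMP, and forwarding data.

The other devices do not own the IP and stay in the BACKUP state. Apart from receiving the MASTER's VRRP advertisements, they perform no external network functions.

When the MASTER fails, a BACKUP takes over its network functions.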
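The election idea can be sketched in a few lines of shell. This is a simplified illustration only: `elect_master` and the node names are hypothetical, and real VRRP also handles tie-breaking, preemption settings, and advertisement timers, which are ignored here.

```shell
#!/usr/bin/env bash
# Simplified illustration of VRRP master election: the highest priority wins.
# elect_master is a hypothetical helper, not part of keepalived.
# Each argument is a "name:priority" pair for a node that is still alive.
elect_master() {
    local best_name="" best_prio=-1 entry name prio
    for entry in "$@"; do
        name=${entry%%:*}   # text before the first colon
        prio=${entry##*:}   # text after the last colon
        if [ "$prio" -gt "$best_prio" ]; then
            best_name=$name
            best_prio=$prio
        fi
    done
    printf '%s\n' "$best_name"
}

elect_master node-a:100 node-b:99   # prints node-a
elect_master node-b:99              # node-a has failed: prints node-b
```

When the higher-priority node disappears from the list, the remaining node wins the election, which mirrors a BACKUP taking over from a failed MASTER.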

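There are two kinds of dual-node high availability:

1) Active-standby mode: two front-end servers are used, one primary and one hot standby. Normally the primary binds a public virtual IP and provides the load-balancing service while the standby sits idle. When the primary fails, the standby takes over the public virtual IP and provides the service. The standby is wasted for as long as the primary stays healthy, so for a site with few servers this approach is not economical.

2) Active-active mode: two front-end load balancers back each other up and are both active, each binding its own public virtual IP and providing load balancing. When one of them fails, the other takes over the failed server's public virtual IP (the surviving machine then carries all requests). This approach is economical and fits the architecture used here well.

This article builds a highly available active-active setup with Nginx + Keepalived.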

I. Environment

Operating system: CentOS 7, 64-bit

Package source: Alibaba Cloud mirror

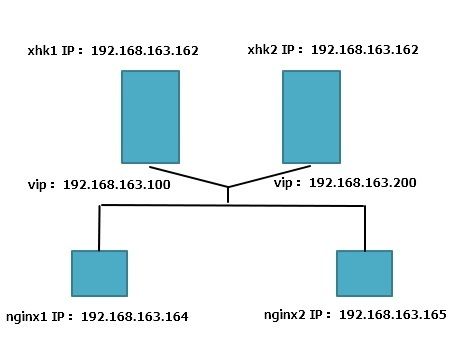

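Two servers (xhk1, xhk2) run keepalived plus nginx as a reverse proxy

Two servers (nginx1, nginx2) run nginx to serve the web content

The firewall and SELinux are disabled on all servers

xhk1 IP: 192.168.163.162

xhk2 IP: 192.168.163.163

nginx1 IP: 192.168.163.164

nginx2 IP: 192.168.163.165

Virtual IPs: 192.168.163.100 and 192.168.163.200

The topology is as follows: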

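II. Installation and Configuration

First install nginx on every server. nginx on the two front-end servers acts as the reverse proxy; the two back-end servers provide the web service.

Install the dependencies: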

[root@xhk1 src]# yum install -y gcc make pcre-devel zlib-devel openssl-devel

Download the nginx source package from the official site, http://nginx.org/

[root@xhk1 ~]# cd /usr/local/src/

[root@xhk1 src]# wget http://nginx.org/download/nginx-1.13.6.tar.gz

Extract it in the current directory:

[root@xhk1 src]# tar xf nginx-1.13.6.tar.gz

Change into the extracted directory, then configure, build, and install:

[root@xhk1 src]# cd nginx-1.13.6

[root@xhk1 nginx-1.13.6]# ./configure --user=nginx --group=nginx --prefix=/usr/local/nginx --with-http_stub_status_module --with-http_ssl_module --with-pcre

[root@xhk1 nginx-1.13.6]# make && make install

Create the nginx user and start nginx:

[root@xhk1 src]# useradd nginx

[root@xhk1 src]# /usr/local/nginx/sbin/nginx

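nginx is now installed. Repeat the same steps on the other three servers.

Next, create a simple home page on each of the two back-end web servers:

[root@nginx1 ~]# echo "nginx1" > /usr/local/nginx/html/index.html

[root@nginx2 ~]# echo "nginx2" > /usr/local/nginx/html/index.html

Run a quick access test: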

[root@xhk1 ~]# curl 192.168.163.164

nginx1

[root@xhk1 ~]# curl 192.168.163.165

nginx2

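Next, configure nginx on the two front-end servers as a reverse proxy.

Edit the nginx configuration file:

[root@xhk1 ~]# vim /usr/local/nginx/conf/nginx.conf

Add an upstream block (note that it must sit inside the http block and outside any server block):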

upstream backend {

server 192.168.163.164 max_fails=3 fail_timeout=10s;

server 192.168.163.165 max_fails=3 fail_timeout=10s;

}

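Each server entry accepts the following parameters:

1. down: the server temporarily takes no part in load balancing.

2. weight: defaults to 1. The larger the weight, the larger the share of requests the server receives.

3. max_fails: the number of failed attempts allowed, 1 by default. Once it is exceeded, the server is treated as unavailable; what counts as a failed attempt is defined by the proxy_next_upstream directive.

4. fail_timeout: how long the server is taken out of rotation after max_fails failures. The same value is also the window within which those failures are counted.

5. backup: the server receives requests only when all non-backup servers are down or busy, so it carries the lightest load.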
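To see how weight shapes the distribution, the sketch below simulates a smooth weighted round-robin, the scheme nginx's default balancing is generally described as using. It is an illustration under assumptions: `pick_sequence` is a hypothetical helper, and max_fails, fail_timeout, down, and backup are not modelled.

```shell
#!/usr/bin/env bash
# Sketch of smooth weighted round-robin over upstream servers.
# pick_sequence is a hypothetical helper, not an nginx tool.
# Args: number of requests, then one "name:weight" pair per server.
pick_sequence() {
    local requests=$1; shift
    local -a names weights current
    local total=0 i best entry out=""
    for entry in "$@"; do
        names+=("${entry%%:*}")
        weights+=("${entry##*:}")
        current+=(0)
        total=$((total + ${entry##*:}))
    done
    while [ "$requests" -gt 0 ]; do
        best=0
        for i in "${!names[@]}"; do
            # every server gains its own weight each round
            current[i]=$((current[i] + weights[i]))
            if [ "${current[i]}" -gt "${current[best]}" ]; then
                best=$i
            fi
        done
        # the chosen server pays back the sum of all weights
        current[best]=$((current[best] - total))
        out+="${names[best]} "
        requests=$((requests - 1))
    done
    printf '%s\n' "${out% }"
}

pick_sequence 4 nginx1:1 nginx2:1   # prints: nginx1 nginx2 nginx1 nginx2
pick_sequence 3 nginx1:2 nginx2:1   # prints: nginx1 nginx2 nginx1
```

With equal weights the two back ends simply alternate; raising nginx1 to weight 2 gives it two of every three requests.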

Add a location block inside the server block:

location / {

proxy_pass http://backend;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

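The proxy_set_header directive sets the request headers that nginx passes on to the back end. The values set here mean the same as they do in an ordinary HTTP request; besides Host, the block above also sets X-Real-IP and X-Forwarded-For.

Host names the host being requested. Because nginx is acting as a reverse proxy, a back-end server that does hotlink protection, or that routes or makes decisions based on the Host field of the request, will reject or mishandle requests unless the proxy layer rewrites that field. (By default the reverse proxy sends the request to the back end with Host set to the server named in the proxy_pass directive.)

After saving, confirm that the configuration is valid and reload nginx: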

[root@xhk1 ~]# /usr/local/nginx/sbin/nginx -t

nginx: the configuration file /usr/local/nginx/conf/nginx.conf syntax is ok

nginx: configuration file /usr/local/nginx/conf/nginx.conf test is successful

[root@xhk1 ~]# /usr/local/nginx/sbin/nginx -s reload

Check that load balancing works:

[root@xhk1 ~]# curl 192.168.163.162

nginx1

[root@xhk1 ~]# curl 192.168.163.162

nginx2

[root@xhk1 ~]# curl 192.168.163.162

nginx1

[root@xhk1 ~]# curl 192.168.163.162

nginx2

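As the output shows, the front-end server spreads the requests evenly across the back-end servers.

Configure the nginx reverse proxy the same way on the other front-end server.

Next, install keepalived on the two front-end servers: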

[root@xhk1 ~]# yum install -y keepalived

[root@xhk2 ~]# yum install -y keepalived

Edit the keepalived configuration file:

To implement active-active mode, two VIPs are needed.

Start with the keepalived configuration on the first server (xhk1):

[root@xhk1 ~]# vim /etc/keepalived/keepalived.conf

global_defs {

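notification_email { # mail recipients

root@localhost

}

notification_email_from keepalived@localhost # mail sender

smtp_server 127.0.0.1 # mail server address

smtp_connect_timeout 30 # connection timeout in seconds

router_id xhk # identifier of this load balancer

vrrp_mcast_group4 224.0.100.19 # IPv4 multicast group; make sure multicast is enabled

}

vrrp_script check_nginx {

script /root/1.sh # check script

interval 2 # run the check every 2 seconds

weight -2 # lower the priority by 2 when the check fails

}

vrrp_instance VI_1 {

state MASTER # either MASTER or BACKUP

interface ens32 # network interface

virtual_router_id 51 # virtual router ID; must be identical on the MASTER and BACKUP of the same instance

priority 100 # priority; within one instance the MASTER must be higher than the BACKUP

advert_int 1 # interval between VRRP advertisements from MASTER to BACKUP, in seconds

authentication {

auth_type PASS # authentication type; the block holds the type and the password

auth_pass xhk # password, sent in clear text (the AH type is problematic in practice)

}

virtual_ipaddress {

192.168.163.100 dev ens32 # virtual IP address; several may be listed

}

track_script { # run the check script defined above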

check_nginx

}

}

vrrp_instance VI_2 {

state BACKUP

interface ens32

virtual_router_id 52

priority 99

nopreempt

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

192.168.163.200 dev ens32

}

track_script {

check_nginx

}

}

Then edit the keepalived configuration on the second server (xhk2):

[root@xhk2 ~]# vim /etc/keepalived/keepalived.conf

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id xhk

vrrp_mcast_group4 224.0.100.19

}

vrrp_script check_nginx {

script "/root/1.sh"

interval 2

weight -2

}

vrrp_instance VI_1 {

state BACKUP

interface ens32

virtual_router_id 51

priority 99

advert_int 1

authentication {

auth_type PASS

auth_pass xhk

}

virtual_ipaddress {

192.168.163.100 dev ens32

}

track_script {

check_nginx

}

}

vrrp_instance VI_2 {

state MASTER

interface ens32

virtual_router_id 52

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

192.168.163.200 dev ens32

}

track_script {

check_nginx

}

}
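The interplay between priority and weight -2 comes down to simple arithmetic, sketched below for VI_1. This assumes the check script reports a failure through a non-zero exit status, which is when keepalived applies a negative weight; `effective_priority` is a hypothetical helper, not a keepalived command, and it models negative weights only.

```shell
#!/usr/bin/env bash
# effective_priority is a hypothetical helper, not a keepalived command.
# Args: base priority, script weight, check exit status (0 = healthy).
effective_priority() {
    local base=$1 weight=$2 check_status=$3
    if [ "$check_status" -ne 0 ] && [ "$weight" -lt 0 ]; then
        # a failed check with a negative weight lowers the priority
        echo $((base + weight))
    else
        echo "$base"
    fi
}

xhk1_vi1=$(effective_priority 100 -2 1)   # nginx check failed on xhk1
xhk2_vi1=$(effective_priority 99 -2 0)    # nginx healthy on xhk2
echo "VI_1: xhk1=$xhk1_vi1 xhk2=$xhk2_vi1"   # prints: VI_1: xhk1=98 xhk2=99
```

A drop of 2 is enough only because the two priorities differ by 1; if the gap between MASTER and BACKUP were 2 or more, a weight of -2 would not let the BACKUP's priority exceed the MASTER's.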

Here is the script that checks the nginx reverse proxy:

[root@xhk1 ~]# vim /root/1.sh

#!/bin/bash

ps -C nginx -o pid

if [ $? -eq 1 ]; then

    /usr/local/nginx/sbin/nginx

    sleep 3

    ps -C nginx -o pid

    if [ $? -eq 1 ]; then

        systemctl stop keepalived

    fi

fi

Make the script executable:

[root@xhk1 ~]# chmod a+x /root/1.sh

Create the same script on xhk2 as well.
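As an alternative design, the check can report health through its exit status and leave the failover decision to keepalived's weight setting, instead of stopping keepalived itself. The following is a sketch under assumptions, not the author's script: `process_running` and `check_nginx` are hypothetical function names, and the one-second wait is an arbitrary choice meant to stay below the 2-second interval.

```shell
#!/usr/bin/env bash
# Hypothetical variant of the check script: signal health via exit status.
process_running() {
    # ps -C selects by command name; -o pid= prints bare PIDs without a header.
    # ps exits non-zero when no process matches.
    ps -C "$1" -o pid= > /dev/null 2>&1
}

check_nginx() {
    process_running nginx && return 0
    /usr/local/nginx/sbin/nginx   # one restart attempt
    sleep 1                       # assumed wait, kept shorter than the check interval
    process_running nginx         # this result becomes the function's exit status
}
```

Calling `check_nginx` on the last line of the script makes its result the script's exit status. Keeping the total run time under the configured interval matters: the log output later in this article contains a "VRRP_Script(check_nginx) timed out" message, which is consistent with a 3-second sleep inside a check that runs every 2 seconds.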

Start keepalived and enable it at boot:

[root@xhk1 ~]# systemctl start keepalived

[root@xhk1 ~]# systemctl enable keepalived

[root@xhk2 ~]# systemctl start keepalived

[root@xhk2 ~]# systemctl enable keepalived

Check the keepalived service status:

[root@xhk1 ~]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: active (running) since Fri 2017-10-20 00:07:48 EDT; 28s ago

Process: 2712 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 2713 (keepalived)

CGroup: /system.slice/keepalived.service

├─2306 nginx: master process /usr/local/nginx/sbin/nginx

├─2308 nginx: worker process

├─2713 /usr/sbin/keepalived -D

├─2714 /usr/sbin/keepalived -D

└─2715 /usr/sbin/keepalived -D

[root@xhk2 ~]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: active (running) since Fri 2017-10-20 00:07:59 EDT; 29s ago

Process: 2763 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 2764 (keepalived)

CGroup: /system.slice/keepalived.service

├─2353 nginx: master process /usr/local/nginx/sbin/nginx

├─2355 nginx: worker process

├─2764 /usr/sbin/keepalived -D

├─2765 /usr/sbin/keepalived -D

└─2766 /usr/sbin/keepalived -D

Now for the testing.

Because xhk1 is configured as MASTER for the VIP 192.168.163.100, that VIP shows up on xhk1:

[root@xhk1 ~]# ip addr sh

1: lo:

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32:

link/ether 00:0c:29:0e:bc:15 brd ff:ff:ff:ff:ff:ff

inet 192.168.163.162/24 brd 192.168.163.255 scope global dynamic ens32

valid_lft 1586sec preferred_lft 1586sec

inet 192.168.163.100/32 scope global ens32

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe0e:bc15/64 scope link

valid_lft forever preferred_lft forever

xhk2 is configured as MASTER for the VIP 192.168.163.200, so that VIP shows up on xhk2:

[root@xhk2 ~]# ip addr sh

1: lo:

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32:

link/ether 00:0c:29:82:ca:01 brd ff:ff:ff:ff:ff:ff

inet 192.168.163.163/24 brd 192.168.163.255 scope global dynamic ens32

valid_lft 1772sec preferred_lft 1772sec

inet 192.168.163.200/32 scope global ens32

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe82:ca01/64 scope link

valid_lft forever preferred_lft forever
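Reading VIPs out of the full `ip addr` listing by eye gets tedious during failover tests. A small filter along the following lines can do the check instead; `holds_vip` is a hypothetical helper and the sample text is an abbreviated copy of the xhk1 output, so treat it as a sketch rather than a finished tool.

```shell
#!/usr/bin/env bash
# holds_vip is a hypothetical helper: it reads `ip addr` output on stdin and
# succeeds if the given IPv4 address is assigned to an interface.
holds_vip() {
    # -F treats the dots literally; the trailing slash stops 192.168.163.10
    # from matching 192.168.163.100
    grep -qF "inet $1/"
}

# On a real node: ip addr show dev ens32 | holds_vip 192.168.163.100
# Here an abbreviated sample of the xhk1 output stands in for the command.
sample='inet 192.168.163.162/24 brd 192.168.163.255 scope global dynamic ens32
inet 192.168.163.100/32 scope global ens32'

for vip in 192.168.163.100 192.168.163.200; do
    if printf '%s\n' "$sample" | holds_vip "$vip"; then
        echo "$vip: held"
    else
        echo "$vip: not held"
    fi
done
# prints:
# 192.168.163.100: held
# 192.168.163.200: not held
```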

Access both VIPs; the front-end servers spread the requests evenly across the back-end servers:

[root@clinet ~]# curl 192.168.163.100

nginx1

[root@clinet ~]# curl 192.168.163.100

nginx2

[root@clinet ~]# curl 192.168.163.200

nginx1

[root@clinet ~]# curl 192.168.163.200

nginx2

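Next, test what happens when nginx on a front-end server is stopped. The check script first tries to start nginx again; here the restart succeeds and the site stays reachable.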

[root@xhk1 ~]# /usr/local/nginx/sbin/nginx -s stop

[root@xhk1 ~]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: active (running) since Fri 2017-10-20 00:07:48 EDT; 18min ago

Process: 2712 ExecStart=/usr/sbin/keepalived $KEEPALIVED_OPTIONS (code=exited, status=0/SUCCESS)

Main PID: 2713 (keepalived)

CGroup: /system.slice/keepalived.service

├─2713 /usr/sbin/keepalived -D

├─2714 /usr/sbin/keepalived -D

├─2715 /usr/sbin/keepalived -D

├─4375 nginx: master process /usr/local/nginx/sbin/nginx

└─4377 nginx: worker process

Oct 20 00:08:13 xhk1 Keepalived_vrrp[2715]: Sending gratuitous ARP on ens32 for 192.168.163.200

Oct 20 00:08:13 xhk1 Keepalived_vrrp[2715]: Sending gratuitous ARP on ens32 for 192.168.163.200

Oct 20 00:08:13 xhk1 Keepalived_vrrp[2715]: Sending gratuitous ARP on ens32 for 192.168.163.200

Oct 20 00:08:13 xhk1 Keepalived_vrrp[2715]: Sending gratuitous ARP on ens32 for 192.168.163.200

Oct 20 00:08:16 xhk1 Keepalived_vrrp[2715]: VRRP_Instance(VI_2) Received advert with higher priority 100, ours 99

Oct 20 00:08:16 xhk1 Keepalived_vrrp[2715]: VRRP_Instance(VI_2) Entering BACKUP STATE

Oct 20 00:08:16 xhk1 Keepalived_vrrp[2715]: VRRP_Instance(VI_2) removing protocol VIPs.

Oct 20 00:26:05 xhk1 Keepalived_vrrp[2715]: VRRP_Script(check_nginx) timed out

Oct 20 00:26:05 xhk1 Keepalived_vrrp[2715]: /root/1.sh exited due to signal 15

Oct 20 00:26:05 xhk1 Keepalived_vrrp[2715]: VRRP_Script(check_nginx) succeeded

[root@xhk1 ~]# ps -ef | grep nginx

root 4375 1 0 00:26 ? 00:00:00 nginx: master process /usr/local/nginx/sbin/nginx

nginx 4377 4375 0 00:26 ? 00:00:00 nginx: worker process

root 4431 2222 0 00:26 pts/0 00:00:00 grep --color=auto nginx

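As shown, the nginx check script that keepalived runs every 2 seconds noticed that nginx had stopped, tried to start it, and succeeded.

Next, test the case where nginx cannot be started.

To simulate that case, change the script so that it stops keepalived as soon as it finds nginx is not running:

[root@xhk1 ~]# vim /root/1.sh

#!/bin/bash

ps -C nginx -o pid

if [ $? -eq 1 ]; then

    systemctl stop keepalived

fi

First stop nginx: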

[root@xhk1 ~]# ps -ef |grep nginx |awk '{print $2}' |xargs kill -9

[root@xhk1 ~]# ps -ef |grep nginx

root 5390 2222 0 00:51 pts/0 00:00:00 grep --color=auto nginx

keepalived has been stopped automatically:

[root@xhk1 ~]# systemctl status keepalived

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; disabled; vendor preset: disabled)

Active: inactive (dead)

Oct 20 00:38:50 xhk1 Keepalived_vrrp[5400]: Opening file '/etc/keepalived/keepalived.conf'.

Oct 20 00:39:07 xhk1 Keepalived_vrrp[5400]: VRRP_Instance(VI_1) removing protocol VIPs.

Oct 20 00:39:07 xhk1 Keepalived_vrrp[5400]: VRRP_Instance(VI_2) removing protocol VIPs.

Oct 20 00:39:07 xhk1 Keepalived_vrrp[5400]: SECURITY VIOLATION - scripts are being executed but script_security n...bled.

Oct 20 00:39:07 xhk1 Keepalived_vrrp[5400]: Using LinkWatch kernel netlink reflector...

Oct 20 00:39:07 xhk1 Keepalived_vrrp[5400]: VRRP_Instance(VI_2) Entering BACKUP STATE

Oct 20 00:39:07 xhk1 Keepalived_vrrp[5400]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]

Oct 20 00:39:07 xhk1 Keepalived[5398]: Stopping

Oct 20 00:39:07 xhk1 systemd[1]: Stopping LVS and VRRP High Availability Monitor...

Oct 20 00:39:09 xhk1 systemd[1]: Stopped LVS and VRRP High Availability Monitor.

Hint: Some lines were ellipsized, use -l to show in full.

Both VIPs have moved automatically to the other front-end server (xhk2):

[root@xhk2 ~]# ip add sh

1: lo:

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens32:

link/ether 00:0c:29:82:ca:01 brd ff:ff:ff:ff:ff:ff

inet 192.168.163.163/24 brd 192.168.163.255 scope global dynamic ens32

valid_lft 1598sec preferred_lft 1598sec

inet 192.168.163.200/32 scope global ens32

valid_lft forever preferred_lft forever

inet 192.168.163.100/32 scope global ens32

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe82:ca01/64 scope link

valid_lft forever preferred_lft forever

The site is still reachable through both VIPs:

[root@clinet ~]# curl 192.168.163.200

nginx1

[root@clinet ~]# curl 192.168.163.200

nginx2

[root@clinet ~]# curl 192.168.163.100

nginx1

[root@clinet ~]# curl 192.168.163.100

nginx2