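While slacking off in the lab, I tried setting up a Hadoop cluster environment, using Docker to create three nodes that simulate a cluster. The steps are as follows: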

1. Open the Docker console and use ubuntu:14.04 as the base image

docker pull ubuntu:14.04

2. Start an Ubuntu container and enter it

docker run -it ubuntu:14.04

3. Update the apt-get package index

apt-get update

4. Install vim

apt-get install vim

5. Replace the apt-get mirror sources

vim /etc/apt/sources.list

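Replace the entire contents with the following (note that `xenial` is the codename for Ubuntu 16.04, while the 14.04 base image is `trusty`):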

deb-src http://archive.ubuntu.com/ubuntu xenial main restricted #Added by software-properties

deb http://mirrors.aliyun.com/ubuntu/ xenial main restricted

deb-src http://mirrors.aliyun.com/ubuntu/ xenial main restricted multiverse universe #Added by software-properties

deb http://mirrors.aliyun.com/ubuntu/ xenial-updates main restricted

deb-src http://mirrors.aliyun.com/ubuntu/ xenial-updates main restricted multiverse universe #Added by software-properties

deb http://mirrors.aliyun.com/ubuntu/ xenial universe

deb http://mirrors.aliyun.com/ubuntu/ xenial-updates universe

deb http://mirrors.aliyun.com/ubuntu/ xenial multiverse

deb http://mirrors.aliyun.com/ubuntu/ xenial-updates multiverse

deb http://mirrors.aliyun.com/ubuntu/ xenial-backports main restricted universe multiverse

deb-src http://mirrors.aliyun.com/ubuntu/ xenial-backports main restricted universe multiverse #Added by software-properties

deb http://archive.canonical.com/ubuntu xenial partner

deb-src http://archive.canonical.com/ubuntu xenial partner

deb http://mirrors.aliyun.com/ubuntu/ xenial-security main restricted

deb-src http://mirrors.aliyun.com/ubuntu/ xenial-security main restricted multiverse universe #Added by software-properties

deb http://mirrors.aliyun.com/ubuntu/ xenial-security universe

deb http://mirrors.aliyun.com/ubuntu/ xenial-security multiverse
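
Pasting a long list like this makes it easy to end up with a codename that does not match the image. As a rough sketch, the list could be generated for whichever release is actually running. `gen_sources_list` is a hypothetical helper name of my own, and the shortened set of suites and components is an assumption, not a copy of the list above.

```shell
# Print a minimal sources.list for one Ubuntu release on one mirror.
# Assumptions for illustration (not prescribed by the steps above):
#   $1  release codename (trusty = 14.04, xenial = 16.04)
#   $2  mirror base URL, defaulting to the Aliyun mirror used in this post
gen_sources_list() {
    codename=$1
    mirror=${2:-http://mirrors.aliyun.com/ubuntu/}
    for suite in "$codename" "$codename-updates" "$codename-security" "$codename-backports"; do
        echo "deb $mirror $suite main restricted universe multiverse"
    done
}

# Preview the list for the 14.04 base image.
gen_sources_list trusty
```

To apply it, the output could be redirected into the file, for example `gen_sources_list trusty > /etc/apt/sources.list`, followed by `apt-get update`.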

6. Update the apt-get package index again

apt-get update

7. Install Java

apt-get install openjdk-8-jdk

8. Install wget

apt-get install wget

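9. Create the hadoop directory (under the home directory, so that it matches the HADOOP_HOME path set later)

mkdir -p ~/soft/apache/hadoop/

10. Enter the hadoop directory

cd ~/soft/apache/hadoop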

11. Download Hadoop

wget http://mirrors.sonic.net/apache/hadoop/common/hadoop-2.6.5/hadoop-2.6.5.tar.gz

12. Extract Hadoop

tar -xvzf hadoop-2.6.5.tar.gz

13. Configure the environment variables

vim ~/.bashrc

Add the following lines at the end of the file:

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

export HADOOP_HOME=/root/soft/apache/hadoop/hadoop-2.6.5

export HADOOP_CONFIG_HOME=$HADOOP_HOME/etc/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin
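
Since ~/.bashrc gets edited more than once in this walkthrough, repeating a step can leave duplicate lines behind. A minimal sketch of appending a line only when it is missing is shown here. `append_once` is a hypothetical helper name, and the demo writes to a scratch file rather than the real ~/.bashrc.

```shell
# Append a line to a file only when that exact line is not already in it.
# (append_once is a hypothetical helper name, not part of any tool.)
append_once() {
    target_file=$1
    new_line=$2
    touch "$target_file"
    # -x matches whole lines, -F treats the text literally (no regex)
    grep -qxF -- "$new_line" "$target_file" || printf '%s\n' "$new_line" >> "$target_file"
}

# Demo on a scratch file; point rc_file at ~/.bashrc to apply it for real.
rc_file=$(mktemp)
append_once "$rc_file" 'export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64'
append_once "$rc_file" 'export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64'
cat "$rc_file"    # the export line was written only once
rm -f "$rc_file"
```

The single quotes matter: they keep `$PATH` and `$HADOOP_HOME` from being expanded at write time, so the file receives the literal text.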

14. Reload the configuration file

source ~/.bashrc

15. Create the working directories

cd $HADOOP_HOME

mkdir tmp

mkdir namenode

mkdir datanode

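16. Edit the configuration files

cd $HADOOP_CONFIG_HOME

vim core-site.xml

    <configuration>
      <property>
        <name>hadoop.tmp.dir</name>
        <value>/root/soft/apache/hadoop/hadoop-2.6.5/tmp</value>
      </property>
      <property>
        <name>fs.default.name</name>
        <value>hdfs://master:9000</value>
        <final>true</final>
      </property>
    </configuration>

vim hdfs-site.xml

    <configuration>
      <property>
        <name>dfs.replication</name>
        <value>2</value>
        <final>true</final>
      </property>
      <property>
        <name>dfs.namenode.name.dir</name>
        <value>/root/soft/apache/hadoop/hadoop-2.6.5/namenode</value>
        <final>true</final>
      </property>
      <property>
        <name>dfs.datanode.data.dir</name>
        <value>/root/soft/apache/hadoop/hadoop-2.6.5/datanode</value>
        <final>true</final>
      </property>
    </configuration>

cp mapred-site.xml.template mapred-site.xml

vim mapred-site.xml

    <configuration>
      <property>
        <name>mapred.job.tracker</name>
        <value>master:9001</value>
      </property>
    </configuration>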
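
Each setting in these files is a name/value pair inside a `<property>` element, and all properties sit inside a single `<configuration>` element. As a rough sketch of that structure, the files could also be generated instead of typed into vim. `hadoop_property` and `hadoop_site` are hypothetical helper names, and the sketch assumes the values contain no characters that need XML escaping.

```shell
# Print one <property> element for a Hadoop *-site.xml file.
#   $1  property name
#   $2  property value (assumed to need no XML escaping)
#   $3  optional literal word "final" to mark the property as final
hadoop_property() {
    printf '  <property>\n'
    printf '    <name>%s</name>\n' "$1"
    printf '    <value>%s</value>\n' "$2"
    if [ "${3:-}" = "final" ]; then
        printf '    <final>true</final>\n'
    fi
    printf '  </property>\n'
}

# Wrap the property elements read from stdin in a <configuration> element.
hadoop_site() {
    printf '<?xml version="1.0"?>\n<configuration>\n'
    cat
    printf '</configuration>\n'
}

# Preview an hdfs-site.xml on stdout.
{
    hadoop_property dfs.replication 2 final
    hadoop_property dfs.namenode.name.dir /root/soft/apache/hadoop/hadoop-2.6.5/namenode final
} | hadoop_site
```

Redirecting the output, for example `... | hadoop_site > hdfs-site.xml`, would write the file in place of the manual edit.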

17. Set the Hadoop environment variable

vim hadoop-env.sh

Add the following at the end of the file:

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

18. Format the NameNode

hadoop namenode -format

19. Install ssh

apt-get install ssh

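20. Make sshd start automatically by adding it to the shell configuration file

vim ~/.bashrc

Add the following at the end of the file:

/usr/sbin/sshd

Create the sshd runtime directory

mkdir -p /var/run/sshd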

21. Generate the access key

cd ~/

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cd .ssh

cat id_rsa.pub >> authorized_keys
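
With its default `StrictModes yes` setting, sshd refuses key login when the key files are writable by group or others, so tightening the permissions is a common precaution. A minimal sketch follows. `fix_ssh_perms` is a hypothetical helper name, and the demo runs on a scratch directory rather than the real ~/.ssh.

```shell
# Restrict an SSH directory to its owner, and authorized_keys to owner read/write.
#   $1  SSH directory (defaults to ~/.ssh)
# (fix_ssh_perms is a hypothetical helper name.)
fix_ssh_perms() {
    ssh_dir=${1:-$HOME/.ssh}
    chmod 700 "$ssh_dir"
    if [ -f "$ssh_dir/authorized_keys" ]; then
        chmod 600 "$ssh_dir/authorized_keys"
    fi
}

# Demo on a scratch directory; call fix_ssh_perms with no argument for ~/.ssh.
demo_dir=$(mktemp -d)
touch "$demo_dir/authorized_keys"
fix_ssh_perms "$demo_dir"
ls -ld "$demo_dir" "$demo_dir/authorized_keys"
rm -rf "$demo_dir"
```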

22. Edit the SSH configuration

The ssh_config file

vim /etc/ssh/ssh_config

Change StrictHostKeyChecking from ask to no:

StrictHostKeyChecking no

The sshd_config file

vim /etc/ssh/sshd_config

Disable password authentication:

PasswordAuthentication no

Enable key authentication:

RSAAuthentication yes

PubkeyAuthentication yes

AuthorizedKeysFile .ssh/authorized_keys
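
These edits can also be made without opening vim. The sketch below sets a `Key value` line in a config file, replacing an existing or commented-out line for that key, or appending one if there is none. `set_option` is a hypothetical helper name, and the matching rule (key at the start of a line, optionally preceded by `#` or whitespace) is my own simplification, so it is worth checking the result by eye.

```shell
# Set "Key value" in an ssh_config/sshd_config-style file.
# The first line for the key (commented out or not) is replaced in place,
# any later lines for the same key are dropped, and the setting is
# appended if the key was not found at all.
# (set_option is a hypothetical helper name.)
set_option() {
    cfg_file=$1
    cfg_key=$2
    cfg_value=$3
    cfg_tmp=$(mktemp)
    awk -v k="$cfg_key" -v v="$cfg_value" '
        $0 ~ ("^[# \t]*" k "[ \t]") {
            if (!seen) { print k " " v; seen = 1 }
            next
        }
        { print }
        END { if (!seen) print k " " v }
    ' "$cfg_file" > "$cfg_tmp" && cat "$cfg_tmp" > "$cfg_file"
    rm -f "$cfg_tmp"
}

# Demo on a scratch copy; use /etc/ssh/sshd_config to apply it for real.
demo_cfg=$(mktemp)
printf '#PasswordAuthentication yes\nPort 22\n' > "$demo_cfg"
set_option "$demo_cfg" PasswordAuthentication no
set_option "$demo_cfg" PubkeyAuthentication yes
cat "$demo_cfg"
rm -f "$demo_cfg"
```

Writing back with `cat "$cfg_tmp" > "$cfg_file"` rather than `mv` keeps the original file's owner and permissions.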

23. Exit the container, return to the Docker console, and save the current container as an image

docker commit xxxx ubuntu:hadoop

Here xxxx is the container ID

24. Start three containers: master, slave1, and slave2

docker run -ti -h master ubuntu:hadoop

docker run -ti -h slave1 ubuntu:hadoop

docker run -ti -h slave2 ubuntu:hadoop

25. Edit the hosts file in each container

In the hosts file on master, slave1, and slave2, add the IP addresses of the other two containers

vim /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

172.17.0.2 master

172.17.0.3 slave1

172.17.0.4 slave2
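
Because the same three entries go into every container, a small helper saves some typing. This is a minimal sketch: `add_host` is a hypothetical helper name, the IP addresses are the ones from my containers above, and the demo writes to a scratch file rather than /etc/hosts.

```shell
# Append "IP NAME" to a hosts file unless NAME already has an entry.
# (add_host is a hypothetical helper name.)
# A container's IP can be looked up from the Docker console with:
#   docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' <container>
add_host() {
    hosts_file=$1
    host_ip=$2
    host_name=$3
    if ! awk -v n="$host_name" '
            /^[ \t]*#/ { next }                                  # skip comment lines
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } # names follow the IP
            END { exit !found }
        ' "$hosts_file"; then
        printf '%s %s\n' "$host_ip" "$host_name" >> "$hosts_file"
    fi
}

# Demo on a scratch file; use /etc/hosts inside each container to apply it.
demo_hosts=$(mktemp)
printf '127.0.0.1 localhost\n' > "$demo_hosts"
add_host "$demo_hosts" 172.17.0.2 master
add_host "$demo_hosts" 172.17.0.3 slave1
add_host "$demo_hosts" 172.17.0.4 slave2
cat "$demo_hosts"
rm -f "$demo_hosts"
```

Docker rewrites /etc/hosts whenever a container starts, so the entries have to be added again after a restart.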

26. Edit the slaves configuration file on master

cd $HADOOP_CONFIG_HOME/

vim slaves

Write the hostnames of the two slaves into the slaves file

slave1

slave2

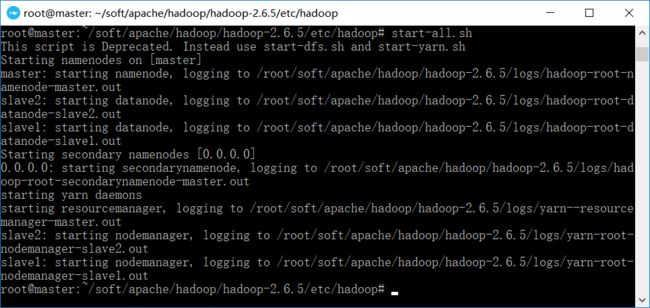

27. Start Hadoop!

Start Hadoop from the master node

start-all.sh

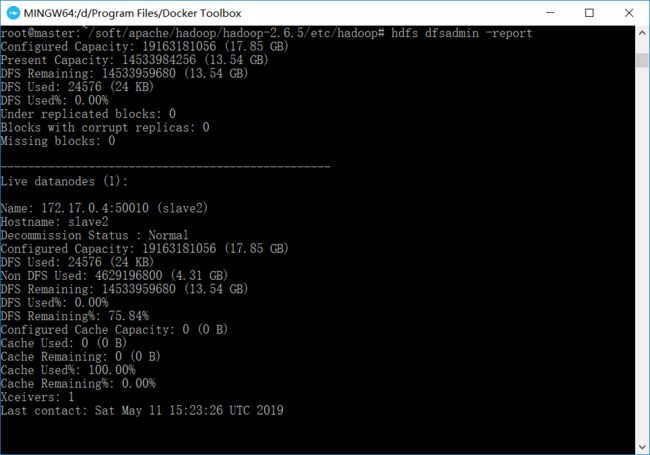

28. Check the status of each node

On the master node, run

hdfs dfsadmin -report

Huh, the slave1 node is down? Never mind, I'll sort that one out another day.
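
For next time, a quick way to spot a missing node is to compare the live DataNode count in the report against the number of slaves. The sketch below assumes the report contains a header line of the form `Live datanodes (N):`, which is what I would expect from Hadoop 2.x but have not checked against other versions. `live_datanodes` is a hypothetical helper name and the sample text is made up for the demo.

```shell
# Read `hdfs dfsadmin -report` output on stdin and print the live DataNode count.
# Assumes a header line of the form "Live datanodes (N):"; prints nothing if absent.
# (live_datanodes is a hypothetical helper name.)
live_datanodes() {
    sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*$/\1/p' | head -n 1
}

# Demo with a made-up excerpt; on the master node the real call would be
#   hdfs dfsadmin -report | live_datanodes
printf 'Configured Capacity: 0 (0 B)\nLive datanodes (1):\n' | live_datanodes
```

If the count is lower than expected, running `jps` on the missing node and reading the DataNode log under `$HADOOP_HOME/logs` there would be the first places to look.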

All in all, the Hadoop environment is more or less up and running. Cheers!