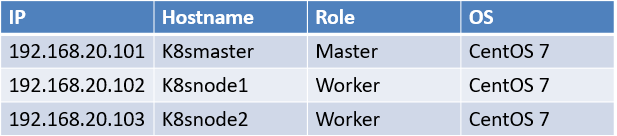

System inventory

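Environment preparation

- Change the hostname and add hostname mappings

- Stop and disable the firewall

- Disable SELinux

- Turn off swap

- Set system parameters

- Install and start Docker on all nodes

- Copy the kubeadm v1.9.2 package to each node

- Import the required images

- Install kubeadm, kubelet, and kubectl

- Start the kubelet service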
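The first three preparation steps are not shown as commands in this article. A minimal sketch of them follows, assuming CentOS 7 with firewalld and GNU sed, run as root; `add_host_entries` and `disable_selinux_config` are hypothetical helper names, not part of any tool. The block only defines functions, so it changes nothing until they are called.

```shell
#!/bin/sh
# Sketch of three preparation steps (assumes CentOS 7, GNU sed, run as root).
# Only functions are defined here; nothing changes until they are called.

# Append "IP hostname" pairs to a hosts file, skipping names already present.
# Usage: add_host_entries FILE IP1 NAME1 [IP2 NAME2 ...]
add_host_entries() {
    hosts_file="$1"
    shift
    while [ "$#" -ge 2 ]; do
        ip="$1"
        name="$2"
        shift 2
        if ! grep -qw "$name" "$hosts_file"; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done
}

# Persistently disable SELinux by rewriting the SELINUX= line
# (normally /etc/selinux/config; takes effect after a reboot).
disable_selinux_config() {
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}
```

On each node you would then run something like `hostnamectl set-hostname k8smaster`, `add_host_entries /etc/hosts 192.168.20.101 k8smaster <node1-IP> k8snode1 <node2-IP> k8snode2` (the worker IPs are placeholders), `systemctl stop firewalld && systemctl disable firewalld`, `setenforce 0` (effective immediately) and `disable_selinux_config /etc/selinux/config` (effective after the next reboot).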

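Turn off swap:

[root@k8smaster ~]# swapoff -a

This turns swap off only until the next reboot. Swap must be off: by default kubelet refuses to start while swap is enabled, so the node will fail to join the cluster in later steps!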
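Because `swapoff -a` does not survive a reboot, a common approach is to also comment out the swap entries in /etc/fstab. The sketch below does that with GNU sed; `comment_out_swap` is a hypothetical name, and backing up /etc/fstab first is a good idea.

```shell
#!/bin/sh
# Sketch: comment out active swap entries in an fstab-style file so swap
# stays off after a reboot (assumes GNU sed for -i).
comment_out_swap() {
    # Match uncommented lines that contain "swap" surrounded by whitespace
    # (the filesystem-type field), and prefix them with "#".
    sed -i '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}
```

Usage: `cp /etc/fstab /etc/fstab.bak && comment_out_swap /etc/fstab`; afterwards `free -m` should report 0 swap even after a reboot. Already commented lines are skipped, so running it twice is harmless.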

Set system parameters:

[root@k8smaster ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

[root@k8smaster ~]# sysctl --system
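To confirm that both settings are active, the hypothetical helper below parses `sysctl` output and succeeds only if both keys are 1. If sysctl reports that the net.bridge.* keys do not exist, the br_netfilter kernel module is probably not loaded yet; `modprobe br_netfilter` followed by `sysctl --system` usually fixes that.

```shell
#!/bin/sh
# Sketch: read `sysctl` output ("key = value" lines) on stdin and succeed only
# if both bridge-netfilter keys are set to 1.
bridge_sysctl_ok() {
    awk '
        $1 == "net.bridge.bridge-nf-call-iptables"  && $3 == "1" { v4 = 1 }
        $1 == "net.bridge.bridge-nf-call-ip6tables" && $3 == "1" { v6 = 1 }
        END { exit !(v4 && v6) }
    '
}
```

Usage: `sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables | bridge_sysctl_ok && echo ok`.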

Install and start Docker:

[root@k8smaster ~]# yum install docker -y

If Docker fails to start with the following error:

Nov 16 20:45:28 k8smaster dockerd-current[3932]: Error starting daemon: SELinux is not supported ......

edit /etc/sysconfig/docker, change the --selinux-enabled option to --selinux-enabled=false, and start Docker again.
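The change to /etc/sysconfig/docker can be made with sed, for example as in the sketch below. It assumes the stock CentOS 7 file, where the flag sits inside `OPTIONS='...'` followed by a space or a quote, and GNU sed; `disable_docker_selinux` is a hypothetical name. An already-patched `=false` is not matched, so running it twice leaves the file unchanged.

```shell
#!/bin/sh
# Sketch: turn "--selinux-enabled" into "--selinux-enabled=false" in a
# docker sysconfig file (assumes GNU sed). The flag must be followed by a
# space or a quote, so an already-patched "=false" is left alone.
disable_docker_selinux() {
    sed -i "s/--selinux-enabled\([ '\"]\)/--selinux-enabled=false\1/" "$1"
}
```

Usage: `disable_docker_selinux /etc/sysconfig/docker && systemctl enable docker && systemctl restart docker`.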

[root@k8smaster ~]# docker --version

Docker version 1.13.1, build 8633870/1.13.1

Extract the kubeadm 1.9.2 RAR archive first, then upload the extracted directory to each node:

[root@k8smaster ~]# scp -r kubeadm\ 1.9.2/ root@<node-IP>:/root/

[root@k8smaster ~]# tree kubeadm\ 1.9.2/

kubeadm\ 1.9.2/

├── images

│ ├── cni.tar.gz

│ ├── etcd-amd64.tar.gz

│ ├── etcd.tar.gz

│ ├── flannel.tar.gz

│ ├── k8s-dns-dnsmasq-nanny-amd64.tar.gz

│ ├── k8s-dns-kube-dns-amd64.tar.gz

│ ├── k8s-dns-sidecar-amd64.tar.gz

│ ├── kube-apiserver-amd64.tar.gz

│ ├── kube-controller-manager-amd64.tar.gz

│ ├── kube-controllers.tar.gz

│ ├── kube-proxy-amd64.tar.gz

│ ├── kubernetes-dashboard-amd64.tar.gz

│ ├── kube-scheduler-amd64.tar.gz

│ ├── node.tar.gz

│ └── pause-amd64.tar.gz

├── rpm

│ ├── 0e1c33997496242d47dd85fffa18e364ea000dc423afdb65bb91f8a53ff98a6f-kubelet-1.9.2-0.x86_64.rpm

│ ├── 2dd849a46b6ca33d527c707136f6f66dfc12887640171333f3bb8fab9f95faac-kubectl-1.9.2-0.x86_64.rpm

│ ├── eb3f5ec9f28b4e6548acc87211b74534c6eae3cabfe870addeff84ac3243a77e-kubeadm-1.9.2-0.x86_64.rpm

│ ├── fe33057ffe95bfae65e2f269e1b05e99308853176e24a4d027bc082b471a07c0-kubernetes-cni-0.6.0-0.x86_64.rpm

│ └── socat-1.7.3.2-2.el7.x86_64.rpm

└── yml

    ├── calico.yaml

    ├── kube-flannel.yml

    ├── kubernetes-dashboard-rbac-admin.yml

    ├── kubernetes-dashboard.yml

    ├── nginx-ingress-rbac.yml

    └── nginx-ingress.yml

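The images directory holds the container images needed to deploy the cluster.

The rpm directory holds the kubeadm, kubelet, and kubectl packages, plus kubernetes-cni and socat.

The yml directory holds the manifests for installing the cluster network add-on and the Dashboard.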

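Import the images on all nodes. The script loads every archive in the images directory; docker load reads gzip-compressed archives directly, so no separate extraction step is needed:

[root@k8smaster ~]# cat loading.sh

#!/bin/sh

for Imagefile in "/root/kubeadm 1.9.2/images/"*.tar.gz

do

docker load -i "$Imagefile"

done

[root@k8smaster images]# sh /root/loading.sh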

Check the loaded images:

[root@k8smaster images]# docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

quay.io/calico/node v2.6.7 7c694b9cac81 9 months ago 282 MB

gcr.io/google_containers/kube-proxy-amd64 v1.9.2 e6754bb0a529 10 months ago 109 MB

gcr.io/google_containers/kube-apiserver-amd64 v1.9.2 7109112be2c7 10 months ago 210 MB

gcr.io/google_containers/kube-controller-manager-amd64 v1.9.2 769d889083b6 10 months ago 138 MB

gcr.io/google_containers/kube-scheduler-amd64 v1.9.2 2bf081517538 10 months ago 62.7 MB

k8s.gcr.io/kubernetes-dashboard-amd64 v1.8.2 c87ea0497294 10 months ago 102 MB

quay.io/calico/cni v1.11.2 6f0a76fc7dd2 11 months ago 70.8 MB

gcr.io/google_containers/etcd-amd64 3.1.11 59d36f27cceb 11 months ago 194 MB

quay.io/coreos/flannel v0.9.1-amd64 2b736d06ca4c 12 months ago 51.3 MB

gcr.io/google_containers/k8s-dns-sidecar-amd64 1.14.7 db76ee297b85 12 months ago 42 MB

gcr.io/google_containers/k8s-dns-kube-dns-amd64 1.14.7 5d049a8c4eec 12 months ago 50.3 MB

gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64 1.14.7 5feec37454f4 12 months ago 41 MB

quay.io/coreos/etcd v3.1.10 47bb9dd99916 16 months ago 34.6 MB

gcr.io/google_containers/pause-amd64 3.0 99e59f495ffa 2 years ago 747 kB
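On three nodes it is easy to overlook an image that failed to load. The hypothetical helper below reads `docker image ls` output and prints every expected `repository:tag` that is absent; the image names in the usage line are taken from the listing above.

```shell
#!/bin/sh
# Sketch: read `docker image ls` output on stdin and report which of the
# "repository:tag" arguments are not present. Returns non-zero if any are missing.
missing_images() {
    # Skip the header row and rebuild "repository:tag" from the first two columns.
    listing=$(awk 'NR > 1 { print $1 ":" $2 }')
    status=0
    for image in "$@"; do
        if ! printf '%s\n' "$listing" | grep -qxF "$image"; then
            echo "missing: $image"
            status=1
        fi
    done
    return "$status"
}
```

Usage: `docker image ls | missing_images gcr.io/google_containers/kube-apiserver-amd64:v1.9.2 gcr.io/google_containers/etcd-amd64:3.1.11 quay.io/coreos/flannel:v0.9.1-amd64 gcr.io/google_containers/pause-amd64:3.0 && echo "all images present"`.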

Install kubeadm, kubelet, and kubectl:

[root@k8smaster ~]# cd kubeadm\ 1.9.2/rpm/

[root@k8smaster rpm]# yum install -y *.rpm
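Starting kubelet is listed as a preparation step but not shown. Before enabling it, it is worth checking that kubelet and Docker use the same cgroup driver, since a mismatch commonly keeps kubelet from running. The sketch below assumes the driver is set by a --cgroup-driver= flag in the kubeadm drop-in /etc/systemd/system/kubelet.service.d/10-kubeadm.conf (the drop-in that `systemctl status kubelet` reports later in this article); both helper names are hypothetical.

```shell
#!/bin/sh
# Sketch: helpers to compare Docker's cgroup driver with kubelet's.
# Assumption: kubelet gets its driver from a --cgroup-driver= flag in
# /etc/systemd/system/kubelet.service.d/10-kubeadm.conf.

# Print the driver named on the "Cgroup Driver:" line of `docker info` output read on stdin.
docker_cgroup_driver() {
    awk -F': *' '/^ *Cgroup Driver:/ { print $2 }'
}

# Print the value of the first --cgroup-driver= flag found in a file.
kubelet_cgroup_driver() {
    grep -o 'cgroup-driver=[a-z]*' "$1" | head -n 1 | cut -d= -f2
}
```

Compare `docker info | docker_cgroup_driver` with `kubelet_cgroup_driver /etc/systemd/system/kubelet.service.d/10-kubeadm.conf`; if they match, run `systemctl enable kubelet && systemctl start kubelet`. If they differ, change the flag in the drop-in to Docker's value and run `systemctl daemon-reload` first.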

Initialize the cluster

Network add-on options: flannel or calico

Using flannel:

Initialize the cluster:

[root@k8smaster ~]# kubeadm init --kubernetes-version=v1.9.2 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=192.168.20.101
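The --pod-network-cidr value has to match the Network value in the flannel manifest; the upstream kube-flannel.yml uses 10.244.0.0/16, which is why that CIDR is passed here. The hypothetical helper below reads the value from the bundled manifest so the two can be compared before flannel is deployed. It assumes the manifest embeds net-conf.json with a `"Network": "..."` line.

```shell
#!/bin/sh
# Sketch: print the "Network" CIDR from the net-conf.json section of a
# flannel manifest, so it can be compared with --pod-network-cidr.
flannel_network() {
    grep -o '"Network": *"[^"]*"' "$1" | head -n 1 | sed 's/.*"\([^"]*\)"$/\1/'
}
```

Usage: `[ "$(flannel_network '/root/kubeadm 1.9.2/yml/kube-flannel.yml')" = "10.244.0.0/16" ] && echo "CIDR matches"`.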

[root@k8smaster ~]# mkdir -p $HOME/.kube

[root@k8smaster ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@k8smaster ~]# chown $(id -u):$(id -g) $HOME/.kube/config

Join the worker nodes to the cluster (run the kubeadm join command printed by kubeadm init on each node):

[root@k8snode1 rpm]# kubeadm join --token b6ea38.36afd3e974849eeb 192.168.20.101:6443 --discovery-token-ca-cert-hash sha256:b79cc4dd68a7d10b8b4461fa6f8ded0eb33a260940208ec64c45889261ea93ba

[root@k8snode2 rpm]# kubeadm join --token b6ea38.36afd3e974849eeb 192.168.20.101:6443 --discovery-token-ca-cert-hash sha256:b79cc4dd68a7d10b8b4461fa6f8ded0eb33a260940208ec64c45889261ea93ba

Deploy the flannel network add-on:

[root@k8smaster yml]# pwd

/root/kubeadm 1.9.2/yml

[root@k8smaster yml]# kubectl apply -f kube-flannel.yml

Check the status of the cluster components:

[root@k8smaster yml]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health": "true"}

Check that the kubelet service is running:

[root@k8smaster yml]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: active (running) since Fri 2018-11-16 21:58:56 EST; 12min ago

Docs: http://kubernetes.io/docs/

Main PID: 15607 (kubelet)

CGroup: /system.slice/kubelet.service

└─15607 /usr/bin/kubelet --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --k...

List the cluster nodes:

[root@k8smaster yml]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster Ready master 12m v1.9.2

k8snode1 Ready <none> 9m v1.9.2

k8snode2 Ready <none> 9m v1.9.2

List the cluster namespaces:

[root@k8smaster yml]# kubectl get namespaces

NAME STATUS AGE

default Active 12m

kube-public Active 12m

kube-system Active 12m

Check the system Pods:

[root@k8smaster yml]# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

etcd-k8smaster 1/1 Running 0 12m

kube-apiserver-k8smaster 1/1 Running 0 11m

kube-controller-manager-k8smaster 1/1 Running 0 11m

kube-dns-6f4fd4bdf-dj2q9 3/3 Running 0 12m

kube-flannel-ds-499c8 1/1 Running 0 7m

kube-flannel-ds-lh8gn 1/1 Running 0 7m

kube-flannel-ds-sjf9g 1/1 Running 0 7m

kube-proxy-54x7b 1/1 Running 0 10m

kube-proxy-htmc9 1/1 Running 0 12m

kube-proxy-xgw52 1/1 Running 0 9m

kube-scheduler-k8smaster 1/1 Running 0 12m
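With this many Pods it is easy to miss one that is stuck. The hypothetical filter below reads `kubectl get pod` output and prints every Pod whose STATUS is not Running or whose READY count is incomplete (for example 2/3); empty output means everything in the listing is up.

```shell
#!/bin/sh
# Sketch: read `kubectl get pod` output on stdin and print NAME READY STATUS
# for each Pod that is not Running or not fully ready.
pods_not_ready() {
    awk 'NR > 1 {
        split($2, r, "/")   # READY column, e.g. "2/3" -> r[1]=2, r[2]=3
        if ($3 != "Running" || r[1] != r[2]) print $1, $2, $3
    }'
}
```

Usage: `kubectl get pod -n kube-system | pods_not_ready`.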

Print a join command with a new token (the bootstrap token from kubeadm init expires after 24 hours by default):

[root@k8smaster ~]# kubeadm token create --print-join-command

kubeadm join --token abeef5.a7f626195233d950 192.168.20.101:6443 --discovery-token-ca-cert-hash sha256:b79cc4dd68a7d10b8b4461fa6f8ded0eb33a260940208ec64c45889261ea93ba
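If only the token is at hand (for example from `kubeadm token list`), the --discovery-token-ca-cert-hash value can be recomputed on the master from the cluster CA certificate. The pipeline below is the one given in the kubeadm documentation, wrapped in a hypothetical `ca_cert_hash` function; it assumes the default CA path /etc/kubernetes/pki/ca.crt and an RSA CA key.

```shell
#!/bin/sh
# Sketch: print the SHA-256 hash of a CA certificate's public key, the value
# kubeadm expects after "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
    openssl x509 -pubkey -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}
```

Usage: `echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"` should print the same hash as the join command above.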

Deploy the Dashboard:

[root@k8smaster yml]# kubectl apply -f kubernetes-dashboard.yml

secret "kubernetes-dashboard-certs" created

serviceaccount "kubernetes-dashboard" created

role "kubernetes-dashboard-minimal" created

rolebinding "kubernetes-dashboard-minimal" created

deployment "kubernetes-dashboard" created

service "kubernetes-dashboard" created

[root@k8smaster yml]# kubectl apply -f kubernetes-dashboard-rbac-admin.yml

serviceaccount "kubernetes-dashboard-admin" created

clusterrolebinding "kubernetes-dashboard-admin" created

Find the secret that holds the Dashboard admin account's token:

[root@k8smaster yml]# kubectl get secret -n kube-system | grep kubernetes-dashboard-admin-token

kubernetes-dashboard-admin-token-g6h2x kubernetes.io/service-account-token 3 1m

Show the token value:

[root@k8smaster yml]# kubectl describe secret kubernetes-dashboard-admin-token-g6h2x -n kube-system

Data

====

token: eyJhbGciOiJSUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbi10b2tlbi1nNmgyeCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImUwYmZkMTk4LWVhMWItMTFlOC05ZjA3LTAwMGMyOWQ5ZjliNCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiJ9.Ez9pkUEqiSd49Sm49Oq7Qjzc5Fa4DoXI-pZfrwKlV2gSGZB8OTKJJiCGc70JBHjPH_oCraDFU2OcjRplC56oD-PQxDy9thON-5RhQkf42Mka5uxXOyYs31FuNP79iUy9ZWHTrJUff099l9xL7dOctJPiyXtyTscJuIcIrmtYY5bcuO1FKcyW2nKZMgzO3IKiuBjS1JrLyy4MmKNQmehGTfQW7_yayJB0_LHBObDl8nTE7d_LVs4N17TBbS0BLDzzdeFtT_i4wxgUGYw7hTpWjOH-hcoyqsn0tPKZK0zn3TLl4m2ovKq2zM9XuBzCTeh7goP6kvL8Sq-0JLueU_a8zA
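The secret name ends in a random suffix (g6h2x here), so the two commands above can be combined. The hypothetical helper below picks the secret name out of `kubectl get secret` output, assuming the name prefix kubernetes-dashboard-admin-token- created by kubernetes-dashboard-rbac-admin.yml.

```shell
#!/bin/sh
# Sketch: read `kubectl get secret` output on stdin and print the name of the
# first secret whose name starts with kubernetes-dashboard-admin-token-.
dashboard_admin_secret() {
    awk '$1 ~ /^kubernetes-dashboard-admin-token-/ { print $1; exit }'
}
```

Usage: `kubectl -n kube-system describe secret "$(kubectl -n kube-system get secret | dashboard_admin_secret)"`.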

Check the NodePort of the Dashboard service:

[root@k8smaster yml]# kubectl get svc -n kube-system kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.106.216.140 <none> 443:31640/TCP 4m

Open https://192.168.20.101:31640 in a browser and enter the token to log in.
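The NodePort is assigned randomly (31640 here), so it can help to build the URL from the service listing. The hypothetical helper below extracts the node port from the PORT(S) column of `kubectl get svc` output, assuming a single `port:nodePort/TCP` entry; `kubectl -n kube-system get svc kubernetes-dashboard -o jsonpath='{.spec.ports[0].nodePort}'` is an alternative that asks the API directly.

```shell
#!/bin/sh
# Sketch: read `kubectl get svc` output on stdin and print the node port from
# the first "port:nodePort/TCP" field, e.g. 443:31640/TCP -> 31640.
nodeport_from_svc() {
    awk 'NR > 1 {
        for (i = 1; i <= NF; i++)
            if ($i ~ "^[0-9]+:[0-9]+/TCP$") { split($i, p, "[:/]"); print p[2]; exit }
    }'
}
```

Usage: `echo "https://192.168.20.101:$(kubectl get svc -n kube-system kubernetes-dashboard | nodeport_from_svc)"`.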