Cloning the virtual machines

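My clones worked out of the box and could ping external hosts. If a cloned VM has network problems, consult the relevant documentation to fix its configuration.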

Fixing the network adapter after cloning a CentOS VM in VMware

Configuring passwordless SSH login

- Start the three machines, change their hostnames to master, slave1 and slave2 respectively, and reboot.

[root@master ~]# vim /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=master
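The same edit can be scripted so it is repeatable on each clone. This is a minimal sketch, assuming the CentOS 6-style /etc/sysconfig/network file shown above; the function name set_hostname_in_file is hypothetical. (On CentOS 7 the hostname is kept in /etc/hostname and is normally set with hostnamectl set-hostname.)

```bash
#!/usr/bin/env bash
# Hypothetical helper: set HOSTNAME=<name> in a CentOS 6-style network file.
# Usage: set_hostname_in_file NAME [FILE]   (FILE defaults to /etc/sysconfig/network)
set_hostname_in_file() {
  local name="$1" file="${2:-/etc/sysconfig/network}"
  if grep -q '^HOSTNAME=' "$file"; then
    # Replace the existing HOSTNAME line in place (GNU sed -i)
    sed -i "s/^HOSTNAME=.*/HOSTNAME=${name}/" "$file"
  else
    # No HOSTNAME line yet: append one
    printf 'HOSTNAME=%s\n' "$name" >> "$file"
  fi
}
```

Run it as root on each machine, e.g. set_hostname_in_file slave1, then reboot as described above.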

- Edit /etc/hosts on master

[root@master ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.121 master

192.168.1.115 slave1

192.168.1.116 slave2

192.168.1.127 slave3
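To rerun this step safely (for example after adding slave3), an entry can be appended only when its hostname is not already mapped. This is a minimal sketch; add_host_entry is a hypothetical helper, and the addresses are the ones listed above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped.
# Usage: add_host_entry IP NAME [FILE]   (FILE defaults to /etc/hosts)
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # awk exits 0 if any non-comment line lists NAME after its address field
  if awk -v n="$name" '
      $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Usage, as root on master: add_host_entry 192.168.1.121 master, then the same for slave1, slave2 and slave3. Running it twice leaves a single line per host.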

- Copy the hosts file to slave1 and slave2

[root@master ~]# su hadoop

[hadoop@master root]$

[hadoop@master root]$ cd

[hadoop@master ~]$ sudo scp /etc/hosts root@slave1:/etc

We trust you have received the usual lecture from the local System

Administrator. It usually boils down to these three things:

#1) Respect the privacy of others.

#2) Think before you type.

#3) With great power comes great responsibility.

[sudo] password for hadoop:

The authenticity of host 'slave1 (192.168.1.115)' can't be established.

RSA key fingerprint is 59:a1:54:8c:86:55:eb:a8:a8:85:76:ca:59:05:f4:6f.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'slave1,192.168.1.115' (RSA) to the list of known hosts.

root@slave1's password:

hosts 100% 242 0.2KB/s 00:00

[hadoop@master ~]$ sudo scp /etc/hosts root@slave2:/etc
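With more slaves, the copy is easier to do in a loop. This is a minimal sketch, assuming it runs as root on master (so sudo is not needed) and that root can still log in to each slave with a password; push_hosts, run, SLAVES and DRY_RUN are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy /etc/hosts to each slave.
# DRY_RUN=1 (the default) only prints the commands; set DRY_RUN=0 to execute them.
SLAVES="${SLAVES:-slave1 slave2}"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

push_hosts() {
  local host
  for host in $SLAVES; do   # unquoted on purpose: SLAVES is a space-separated list
    run scp /etc/hosts "root@${host}:/etc/hosts"
  done
}
```

Check the printed commands first, then run export DRY_RUN=0 and call push_hosts again to actually copy the file.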

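- On master, log in as the hadoop user (make sure all of the following steps are run as hadoop). Run ssh-keygen -t rsa to generate the key pair. There is no space in ssh-keygen: typed as ssh -keygen, the text is parsed as ssh options, which produces the "Bad escape character" error in the first attempt below.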

[hadoop@master ~]$ ssh -keygen -t rsa

Bad escape character 'ygen'.

[hadoop@master ~]$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/hadoop/.ssh/id_rsa.

Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.

The key fingerprint is:

ec:38:ba:0a:39:6d:97:60:b0:41:5a:e6:f3:b7:94:3e hadoop@master

The key's randomart image is:

+--[ RSA 2048]----+

| .o |

|o+ |

|+ o |

| + o o |

|. o . + S |

| + . = + |

|+ o o E . |

| + . . o |

| ..o. |

+-----------------+

- Copy the public key to slave1 and slave2

[hadoop@master ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub slave1

The authenticity of host 'slave1 (192.168.1.115)' can't be established.

RSA key fingerprint is 59:a1:54:8c:86:55:eb:a8:a8:85:76:ca:59:05:f4:6f.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'slave1,192.168.1.115' (RSA) to the list of known hosts.

hadoop@slave1's password:

Now try logging into the machine, with "ssh 'slave1'", and check in:

.ssh/authorized_keys

to make sure we haven't added extra keys that you weren't expecting.

[hadoop@master ~]$ ssh-copy-id -i ~/.ssh/id_rsa.pub slave2

- Log in again: slave1 and slave2 can now be reached without a password.

[hadoop@master ~]$ ssh slave1

[hadoop@slave1 ~]$
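The two steps above (key generation and ssh-copy-id) can be combined into one script run as hadoop on master. This is a minimal sketch; setup_passwordless_ssh, run, SLAVES, KEY and DRY_RUN are hypothetical names. The -N "" option deliberately creates a key without a passphrase, matching the empty passphrase entered above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create an RSA key for the current user (once) and
# install its public half on every slave with ssh-copy-id.
# DRY_RUN=1 (the default) only prints the commands.
SLAVES="${SLAVES:-slave1 slave2}"
KEY="${KEY:-$HOME/.ssh/id_rsa}"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

setup_passwordless_ssh() {
  local host
  # -N "" sets an empty passphrase and -f skips the file-name prompt
  if [ ! -f "$KEY" ]; then
    run ssh-keygen -t rsa -N "" -f "$KEY"
  fi
  for host in $SLAVES; do
    # Prompts once for the hadoop password on each host
    run ssh-copy-id -i "${KEY}.pub" "$host"
  done
}
```

An existing key is left untouched, so the script can be rerun after adding a new slave to SLAVES.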

Installing the JDK

- Use yum search jdk to list the JDK packages available online, and install any one of them

[root@master ~]# yum search jdk

[root@master ~]# yum install java-1.7.0-openjdk-devel.i686 -y

- Configure the Java environment variables

# Find the JDK path

[root@master ~]# whereis java

java: /usr/bin/java /etc/java /usr/lib/java /usr/share/java /usr/share/man/man1/java.1.gz

[root@master ~]# ll /usr/bin/java

lrwxrwxrwx. 1 root root 22 Sep 21 19:41 /usr/bin/java -> /etc/alternatives/java
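/usr/bin/java is only the first link in an alternatives chain, so the real installation directory can be found by resolving the whole chain. This matters here: the JAVA_HOME used below, /usr/lib/jvm/java-1.7.0-openjdk-devel.i686, is the yum package name, and the scm_prepare_database.sh output further down reports that its bin/java does not exist. This is a minimal sketch, assuming GNU readlink (coreutils) as on CentOS; java_home_from is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a JAVA_HOME candidate for a java launcher.
# Usage: java_home_from [PATH_TO_JAVA]   (defaults to /usr/bin/java)
java_home_from() {
  local bin="${1:-/usr/bin/java}" real home
  # Follow every symlink (/usr/bin/java -> /etc/alternatives/java -> ...)
  real=$(readlink -f "$bin") || return 1
  home="${real%/bin/java}"
  # Not a .../bin/java path: give up rather than guess
  [ "$home" != "$real" ] || return 1
  # java often lives in <jdk>/jre/bin; prefer <jdk> when it has its own bin/java
  if [ "${home##*/}" = "jre" ] && [ -x "${home%/jre}/bin/java" ]; then
    home="${home%/jre}"
  fi
  printf '%s\n' "$home"
}
```

Usage: export JAVA_HOME=$(java_home_from), then use that value in the configuration below.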

# Edit the configuration file

Option 1: append the following lines to the end of /etc/profile

[root@master ~]# vim /etc/profile

export JAVA_HOME=/usr/lib/jvm/java-1.7.0-openjdk-devel.i686

export MAVEN_HOME=/home/hadoop/local/opt/apache-maven-3.3.1

export JRE_HOME=${JAVA_HOME}/jre

export CLASSPATH=.:${JAVA_HOME}/lib/dt.jar:${JAVA_HOME}/lib/tools.jar

export PATH=${JAVA_HOME}/bin:$MAVEN_HOME/bin:$PATH

Option 2: it is better not to modify the system-wide environment file lightly; put the settings in /etc/profile.d/java.sh instead

[root@master ~]# vim /etc/profile.d/java.sh

Add the same lines as above and save the file.

# After saving, apply the settings immediately with source, then test

[root@master ~]# source /etc/profile.d/java.sh

[hadoop@master ~]$ java -version

java version "1.7.0_191"

OpenJDK Runtime Environment (rhel-2.6.15.4.el6_10-i386 u191-b01)

OpenJDK Client VM (build 24.191-b01, mixed mode, sharing)
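Option 2 can also be done non-interactively, writing /etc/profile.d/java.sh only after checking that the chosen JAVA_HOME really contains bin/java. This is a minimal sketch; write_java_profile is a hypothetical helper, and the MAVEN_HOME line from above is left out because Java itself does not need it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a profile.d snippet for a verified JAVA_HOME.
# Usage: write_java_profile JAVA_HOME [FILE]   (FILE defaults to /etc/profile.d/java.sh)
write_java_profile() {
  local java_home="$1" file="${2:-/etc/profile.d/java.sh}"
  # Refuse a JAVA_HOME without a usable launcher
  if [ ! -x "${java_home}/bin/java" ]; then
    echo "no executable ${java_home}/bin/java" >&2
    return 1
  fi
  # Unquoted heredoc: ${java_home} is expanded now; the escaped \${...} and \$PATH stay literal
  cat > "$file" <<EOF
export JAVA_HOME=${java_home}
export JRE_HOME=\${JAVA_HOME}/jre
export CLASSPATH=.:\${JAVA_HOME}/lib/dt.jar:\${JAVA_HOME}/lib/tools.jar
export PATH=\${JAVA_HOME}/bin:\$PATH
EOF
}
```

Pass the JDK directory found by resolving readlink -f /usr/bin/java; a wrong path such as a package name is rejected instead of being written to the profile.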

Installing and configuring MySQL (master node)

# Install the MySQL server

[root@master ~]# yum install mysql-server

# Enable start at boot

[root@master ~]# chkconfig mysqld on

# Start the MySQL service

[root@master ~]# service mysqld start

Initializing MySQL database: Installing MySQL system tables...

OK

# Set the initial root password

[root@master ~]# mysqladmin -u root password '******'

# Open the MySQL shell and create the following databases

[root@master ~]# mysql -uroot -p******

mysql> #hive

mysql> create database hive DEFAULT CHARSET utf8 COLLATE utf8_general_ci;

Query OK, 1 row affected (0.00 sec)

mysql> #activity monitor

mysql> create database amon DEFAULT CHARSET utf8 COLLATE utf8_general_ci;

Query OK, 1 row affected (0.00 sec)

# Grant root access to all databases (*.*) from any host ('%')

mysql> grant all privileges on *.* to 'root'@'%' identified by '123456' with grant option;

Query OK, 0 rows affected (0.00 sec)

mysql> flush privileges;

Query OK, 0 rows affected (0.00 sec)
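The database creation can be replayed from a script instead of typing each statement. This is a minimal sketch; cm_db_sql is a hypothetical helper, and the database names are the ones created above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print CREATE DATABASE statements for the given names,
# using the same character set and collation as above.
# Usage: cm_db_sql NAME...
cm_db_sql() {
  local db
  for db in "$@"; do
    # IF NOT EXISTS makes the script safe to run twice
    printf 'CREATE DATABASE IF NOT EXISTS %s DEFAULT CHARSET utf8 COLLATE utf8_general_ci;\n' "$db"
  done
}
```

Usage: cm_db_sql hive amon | mysql -uroot -p (mysql prompts for the password and reads the statements from standard input).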

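Configuring NTP on all nodes

All hosts in the cluster must keep their clocks synchronized; a large time difference causes problems. The configuration is as follows.

# Install the required package on all nodes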

[root@master ~]# yum install ntp

# Then enable it at boot

[root@master ~]# chkconfig ntpd on

# Check the setting: runlevels 2 to 5 showing on means it succeeded

[root@master ~]# chkconfig --list ntpd

ntpd 0:off 1:off 2:on 3:on 4:on 5:on 6:off

- Edit the configuration file

Open /etc/ntp.conf on master and keep only the essential lines:

driftfile /var/lib/ntp/drift

restrict 127.0.0.1

restrict -6 ::1

restrict default nomodify notrap

server 127.127.1.0

fudge 127.127.1.0 stratum 8

includefile /etc/ntp/crypto/pw

keys /etc/ntp/keys
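Keeping only these lines can be scripted, with a backup of the distribution's file. In this configuration, server 127.127.1.0 is ntpd's local-clock driver and the fudge line sets the stratum used for it, so master can still act as the time source for the cluster when no external server is reachable. This is a minimal sketch; write_master_ntp_conf is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: replace ntp.conf with the minimal master configuration above.
# Usage: write_master_ntp_conf [FILE]   (FILE defaults to /etc/ntp.conf)
write_master_ntp_conf() {
  local file="${1:-/etc/ntp.conf}"
  # Back up the original once; later runs do not overwrite the backup
  if [ -f "$file" ] && [ ! -e "${file}.orig" ]; then
    cp -p "$file" "${file}.orig"
  fi
  cat > "$file" <<'EOF'
driftfile /var/lib/ntp/drift
restrict 127.0.0.1
restrict -6 ::1
restrict default nomodify notrap
server 127.127.1.0
fudge 127.127.1.0 stratum 8
includefile /etc/ntp/crypto/pw
keys /etc/ntp/keys
EOF
}
```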

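- Configure the master node

Before starting ntpd, synchronize the clock once by hand with ntpdate, so that the local time is not so far from the time server that ntpd cannot synchronize properly. Here 65.55.56.206 is used as the time server: ntpdate -u 65.55.56.206

- With the configuration file finished, save and exit, then start the service: service ntpd start

- Check whether it worked: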

[root@master ~]# ntpstat

# Output like the following means synchronization succeeded; otherwise wait about 5 minutes

synchronised to NTP server (85.199.214.101) at stratum 2

time correct to within 438 ms

polling server every 64 s
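Rather than re-running ntpstat by hand during the roughly 5-minute wait, the check can be polled. This is a minimal sketch that relies only on ntpstat exiting with status 0 once the clock is synchronised; wait_for_sync and CHECK_CMD are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll a sync check until it succeeds or attempts run out.
# CHECK_CMD defaults to ntpstat, which exits 0 once the clock is synchronised.
# Usage: wait_for_sync [TRIES] [INTERVAL_SECONDS]   (defaults: 10 tries, 30 s apart)
wait_for_sync() {
  local tries="${1:-10}" interval="${2:-30}" i=1
  while [ "$i" -le "$tries" ]; do
    # CHECK_CMD is left unquoted so a command with arguments is word-split
    if ${CHECK_CMD:-ntpstat} >/dev/null 2>&1; then
      echo "synchronised after ${i} check(s)"
      return 0
    fi
    [ "$i" -lt "$tries" ] && sleep "$interval"
    i=$((i + 1))
  done
  echo "not synchronised after ${tries} check(s)" >&2
  return 1
}
```

With the defaults, wait_for_sync gives up after about five minutes, matching the waiting time mentioned above.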

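Installing Cloudera Manager Server and Agent

Extracting and installing on the master node

Cloudera Manager's directories live under /opt by default. Extract cloudera-manager-centos7-cm5.12.1_x86_64.tar.gz and move the extracted cm-5.12.1 and cloudera directories to /opt.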

[root@master opt]# cd cloudera-manager-centos7-cm5.12.1_x86_64

[root@master cloudera-manager-centos7-cm5.12.1_x86_64]# mv cloudera /opt/

[root@master cloudera-manager-centos7-cm5.12.1_x86_64]# mv cm-5.12.1 /opt/

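First, download the JDBC driver from the official MySQL site, http://dev.mysql.com/downloads/connector/j/. After extracting it, put mysql-connector-java-8.0.12.jar into /opt/cm-5.12.1/share/cmf/lib/.

Note: what I downloaded from the site was the .rpm package; after unpacking it several times you reach the target file, mysql-connector-java-8.0.12.jar.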
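Placing the driver can be scripted. Besides the directory named above, the scm_prepare_database.sh output further down shows that it puts /usr/share/java/mysql-connector-java.jar on its classpath, so this sketch also copies the jar to that fixed name. If the driver came as an .rpm, rpm2cpio <file>.rpm | cpio -idmv unpacks it into the current directory without installing it. install_jdbc_jar, run and DRY_RUN are hypothetical names; DRY_RUN=1 (the default) only prints the commands.

```bash
#!/usr/bin/env bash
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: copy the MySQL JDBC jar to where CM looks for it.
# Usage: install_jdbc_jar JAR [CM_DIR]   (CM_DIR defaults to /opt/cm-5.12.1)
install_jdbc_jar() {
  local jar="$1" cm_dir="${2:-/opt/cm-5.12.1}"
  [ -f "$jar" ] || { echo "missing $jar" >&2; return 1; }
  # Directory named in the text above
  run cp "$jar" "${cm_dir}/share/cmf/lib/"
  # Fixed name on scm_prepare_database.sh's classpath (see its "Executing:" line)
  run mkdir -p /usr/share/java
  run cp "$jar" /usr/share/java/mysql-connector-java.jar
}
```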

- Initialize the CM5 database on the master node

/opt/cm-5.12.1/share/cmf/schema/scm_prepare_database.sh mysql cm -hmaster -uroot -p123456 --scm-host master scm scm scm

[root@master schema]# ./scm_prepare_database.sh mysql cm -hmaster -uroot -p123456 --scm-host master scm scm scm --force

JAVA_HOME=/usr/lib/jvm/java-1.7.0-openjdk-devel.i686

Verifying that we can write to /opt/cm-5.12.1/etc/cloudera-scm-server

./scm_prepare_database.sh: line 379: /usr/lib/jvm/java-1.7.0-openjdk-devel.i686/bin/java: No such file or directory

--> Error 127, ignoring (because force flag is set)

Creating SCM configuration file in /opt/cm-5.12.1/etc/cloudera-scm-server

groups: cloudera-scm: No such user

Executing: /usr/lib/jvm/java-1.7.0-openjdk-devel.i686/bin/java -cp /usr/share/java/mysql-connector-java.jar:/usr/share/java/oracle-connector-java.jar:/opt/cm-5.12.1/share/cmf/schema/../lib/* com.cloudera.enterprise.dbutil.DbCommandExecutor /opt/cm-5.12.1/etc/cloudera-scm-server/db.properties com.cloudera.cmf.db.

./scm_prepare_database.sh: line 441: /usr/lib/jvm/java-1.7.0-openjdk-devel.i686/bin/java: No such file or directory

--> Error 127, ignoring (because force flag is set)

All done, your SCM database is configured correctly!
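The run above ignored two errors because of --force: Error 127 (bin/java not found under the configured JAVA_HOME) and "groups: cloudera-scm: No such user". The closing "All done" line therefore does not show that the database was actually set up. Below is a minimal pre-flight sketch to run before scm_prepare_database.sh (without --force); cm_preflight and the CM_USER and JDBC_JAR variables are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check for scm_prepare_database.sh.
# Returns 0 only if every check passes; prints each problem to stderr.
cm_preflight() {
  local status=0
  # The script runs ${JAVA_HOME}/bin/java ("Error 127" above means it was missing)
  if [ ! -x "${JAVA_HOME:-}/bin/java" ]; then
    echo "JAVA_HOME has no executable bin/java: '${JAVA_HOME:-}'" >&2
    status=1
  fi
  # The script looks up this user ("No such user" above)
  if ! id "${CM_USER:-cloudera-scm}" >/dev/null 2>&1; then
    echo "user ${CM_USER:-cloudera-scm} does not exist" >&2
    status=1
  fi
  # Fixed driver path on the script's classpath (see the "Executing:" line above)
  if [ ! -f "${JDBC_JAR:-/usr/share/java/mysql-connector-java.jar}" ]; then
    echo "JDBC driver not found: ${JDBC_JAR:-/usr/share/java/mysql-connector-java.jar}" >&2
    status=1
  fi
  return "$status"
}
```

Usage: cm_preflight && ./scm_prepare_database.sh mysql cm -hmaster -uroot -p123456 --scm-host master scm scm scm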

- Agent configuration

Set server_host in /opt/cm-5.12.1/etc/cloudera-scm-agent/config.ini to the hostname of the master node.

- Sync the Agent to the other nodes

scp -r /opt/cm-5.12.1 root@slave1:/opt/

scp -r /opt/cm-5.12.1 root@slave2:/opt/
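The server_host edit can be done with sed before copying the directory, so every node inherits it. This is a minimal sketch, assuming config.ini contains an uncommented server_host= line; set_server_host is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: point the agent at the Cloudera Manager server.
# Usage: set_server_host HOST [CONFIG_INI]
set_server_host() {
  local host="$1" ini="${2:-/opt/cm-5.12.1/etc/cloudera-scm-agent/config.ini}"
  # Fail loudly if there is no server_host= line to change
  grep -q '^server_host=' "$ini" || { echo "no server_host= line in $ini" >&2; return 1; }
  # Rewrite only that line (GNU sed -i); everything else is kept as-is
  sed -i "s/^server_host=.*/server_host=${host}/" "$ini"
}
```

Usage on master, before the scp commands: set_server_host master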

- Create the cloudera-scm user on all nodes

useradd --system --home=/opt/cm-5.12.1/run/cloudera-scm-server/ --no-create-home --shell=/bin/false --comment "Cloudera SCM User" cloudera-scm
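useradd fails if the user already exists, so a re-runnable version checks first. The scm_prepare_database.sh output above ("groups: cloudera-scm: No such user") suggests creating this user before initializing the database. This is a minimal sketch; ensure_cm_user, run and DRY_RUN are hypothetical names, DRY_RUN=1 (the default) only prints the command, and the useradd options are exactly those given above.

```bash
#!/usr/bin/env bash
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical helper: create the Cloudera system user only if it is missing.
# Usage: ensure_cm_user [USER]   (defaults to cloudera-scm)
ensure_cm_user() {
  local user="${1:-cloudera-scm}"
  if id "$user" >/dev/null 2>&1; then
    echo "$user already exists"
    return 0
  fi
  run useradd --system --home=/opt/cm-5.12.1/run/cloudera-scm-server/ \
    --no-create-home --shell=/bin/false --comment "Cloudera SCM User" "$user"
}
```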

Preparing the parcels for installing CDH

- Put the CDH5 parcel files into /opt/cloudera/parcel-repo/ on the master node (the parcel-repo directory must be created manually).

The files are:

CDH-5.12.1-1.cdh5.12.1.p0.3-el7.parcel.sha1

CDH-5.12.1-1.cdh5.12.1.p0.3-el7.parcel

manifest.json

Finally, rename CDH-5.12.1-1.cdh5.12.1.p0.3-el7.parcel.sha1 to CDH-5.12.1-1.cdh5.12.1.p0.3-el7.parcel.sha. Do not skip this step; otherwise the system will download the CDH-5.12.1-1.cdh5.12.1.p0.3-el7.parcel.sha1 file again.

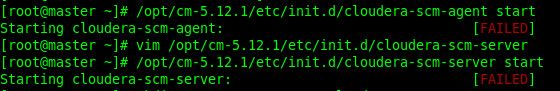
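The rename and a check that the checksum matches the downloaded parcel can be combined. This is a minimal sketch, assuming the .sha1 file holds the parcel's SHA-1 hex digest as its first field and that sha1sum (coreutils) is available; verify_parcel is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: rename PARCEL.sha1 to PARCEL.sha (if needed) and
# check that the stored SHA-1 matches the parcel file.
# Usage: verify_parcel /opt/cloudera/parcel-repo/<name>.parcel
verify_parcel() {
  local parcel="$1" expected actual
  if [ ! -f "${parcel}.sha" ] && [ -f "${parcel}.sha1" ]; then
    mv "${parcel}.sha1" "${parcel}.sha"
  fi
  # First whitespace-separated field of the .sha file is the expected digest
  expected=$(awk '{ print $1; exit }' "${parcel}.sha") || return 1
  actual=$(sha1sum "$parcel" | awk '{ print $1 }')
  if [ -n "$expected" ] && [ "$expected" = "$actual" ]; then
    echo "OK: $parcel"
  else
    echo "checksum mismatch: $parcel" >&2
    return 1
  fi
}
```

Usage: verify_parcel /opt/cloudera/parcel-repo/CDH-5.12.1-1.cdh5.12.1.p0.3-el7.parcel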

- Startup scripts

Start the server with /opt/cm-5.12.1/etc/init.d/cloudera-scm-server start.

Start the Agent with /opt/cm-5.12.1/etc/init.d/cloudera-scm-agent start.

These are ordinary service scripts: to stop a service, replace start with stop; to restart it, use restart.
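A small wrapper keeps the script paths in one place and rejects typos in the action. This is a minimal sketch; cm_ctl, run, CM_HOME and DRY_RUN are hypothetical names, and only the start, stop and restart actions mentioned above are accepted.

```bash
#!/usr/bin/env bash
CM_HOME="${CM_HOME:-/opt/cm-5.12.1}"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Hypothetical wrapper around the two init scripts described above.
# Usage: cm_ctl server|agent start|stop|restart
cm_ctl() {
  case "$1" in
    server|agent) ;;
    *) echo "component must be server or agent" >&2; return 2 ;;
  esac
  case "$2" in
    start|stop|restart) ;;
    *) echo "action must be start, stop or restart" >&2; return 2 ;;
  esac
  run "${CM_HOME}/etc/init.d/cloudera-scm-$1" "$2"
}
```

After export DRY_RUN=0, run cm_ctl server start on master and cm_ctl agent start on every node.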

As shown above, the server reported an error on startup; I am still looking into it.

To be continued...

Modifications

[root@master ~]# vim /opt/cm-5.12.1/etc/init.d/cloudera-scm-server

chown -R cloudera-scm:cloudera-scm /opt/cm-5.12.1/run/cloudera-scm-agent