The JDK Collections Family: Map

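Preface:

The previous chapters reviewed the Collection interface and its implementations. This time we review Map and its implementations. This article is based on JDK 1.8.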

1. HashMap

1.1 Overview

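- Unlike a List, a HashMap is a collection of key-value pairs. You retrieve a value by supplying its key instead of traversing the collection to find it.

- At most one key may be null; values are unrestricted and may also be null.

- Keys are usually instances of immutable classes such as String or Integer, so that a key's hashCode cannot change while the key is in the map.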

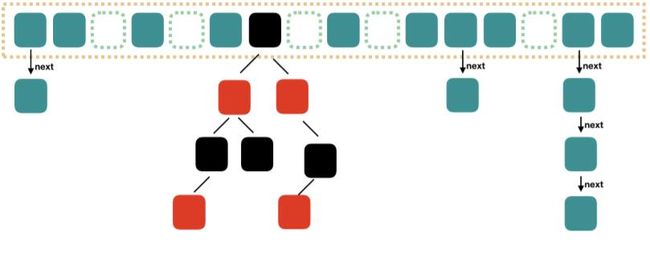

- 通过数组加链表加红黑树来实现,如下图所示
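To make the points above concrete, here is a minimal sketch of key-based lookup and of null handling. The class name `HashMapBasics` and the sample data are made up for illustration only.

```java
import java.util.HashMap;
import java.util.Map;

class HashMapBasics {
    // Builds a small map that includes a null key and a null value.
    static Map<String, Integer> sample() {
        Map<String, Integer> ages = new HashMap<>();
        ages.put("alice", 30);
        ages.put("bob", null); // null values are allowed
        ages.put(null, 1);     // a single null key is allowed
        ages.put(null, 2);     // same key again: the value is replaced
        return ages;
    }

    public static void main(String[] args) {
        Map<String, Integer> ages = sample();
        System.out.println(ages.get("alice")); // 30
        System.out.println(ages.get(null));    // 2
        System.out.println(ages.size());       // 3
        // get() returns null both for a missing key and for a null value,
        // so containsKey() is needed to tell the two cases apart.
        System.out.println(ages.containsKey("bob")); // true
        System.out.println(ages.containsKey("eve")); // false
    }
}
```

Note that `get` cannot distinguish "no mapping" from "mapped to null", which is why the sketch uses `containsKey` for that check.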

1.2 Source code analysis

- Member variables

/**

* The default initial capacity - MUST be a power of two.

*/

// The default capacity (array length) is 16; it must be a power of two

static final int DEFAULT_INITIAL_CAPACITY = 1 << 4; // aka 16

/**

* The maximum capacity, used if a higher value is implicitly specified

* by either of the constructors with arguments.

* MUST be a power of two <= 1<<30.

*/

// The maximum capacity is 2^30

static final int MAXIMUM_CAPACITY = 1 << 30;

/**

* The load factor used when none specified in constructor.

*/

// The default load factor, used when none is passed to the constructor

static final float DEFAULT_LOAD_FACTOR = 0.75f;

/**

* The bin count threshold for using a tree rather than list for a

* bin. Bins are converted to trees when adding an element to a

* bin with at least this many nodes. The value must be greater

* than 2 and should be at least 8 to mesh with assumptions in

* tree removal about conversion back to plain bins upon

* shrinkage.

*/

// A bin is converted to a red-black tree when an element is added to a bin that already holds at least 8 nodes

static final int TREEIFY_THRESHOLD = 8;

/**

* The bin count threshold for untreeifying a (split) bin during a

* resize operation. Should be less than TREEIFY_THRESHOLD, and at

* most 6 to mesh with shrinkage detection under removal.

*/

// During a resize, a tree bin that shrinks to 6 or fewer nodes is converted back to a linked list

static final int UNTREEIFY_THRESHOLD = 6;

/**

* The smallest table capacity for which bins may be treeified.

* (Otherwise the table is resized if too many nodes in a bin.)

* Should be at least 4 * TREEIFY_THRESHOLD to avoid conflicts

* between resizing and treeification thresholds.

*/

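// A bin is not treeified unconditionally: the table (bucket array) must have a length of at least MIN_TREEIFY_CAPACITY.

// Otherwise, a bin with too many nodes triggers a resize instead.

// To avoid conflicts between the resize and treeification thresholds, this value should be at least 4 * TREEIFY_THRESHOLD.

// In other words, while the array length is below 64, an overlong bin causes the array to grow and its entries to be redistributed rather than being converted to a tree.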

static final int MIN_TREEIFY_CAPACITY = 64;

/**

* The table, initialized on first use, and resized as

* necessary. When allocated, length is always a power of two.

* (We also tolerate length zero in some operations to allow

* bootstrapping mechanics that are currently not needed.)

*/

// The bucket array; allocated lazily on first use

transient Node<K,V>[] table;

/**

* Holds cached entrySet(). Note that AbstractMap fields are used

* for keySet() and values().

*/

transient Set<Map.Entry<K,V>> entrySet;

/**

* The number of key-value mappings contained in this map.

*/

// The number of key-value pairs in the map

transient int size;

/**

* The number of times this HashMap has been structurally modified

* Structural modifications are those that change the number of mappings in

* the HashMap or otherwise modify its internal structure (e.g.,

* rehash). This field is used to make iterators on Collection-views of

* the HashMap fail-fast. (See ConcurrentModificationException).

*/

// The number of structural modifications (used to make iterators fail-fast)

transient int modCount;

/**

* The next size value at which to resize (capacity * load factor).

*

* @serial

*/

// (The javadoc description is true upon serialization.

// Additionally, if the table array has not been allocated, this

// field holds the initial array capacity, or zero signifying

// DEFAULT_INITIAL_CAPACITY.)

// The size at which the next resize happens: capacity (array length) * load factor

int threshold;

/**

* The load factor for the hash table.

*

* @serial

*/

// The load factor

final float loadFactor;

- Constructors

/**

* Constructs an empty HashMap with the specified initial

* capacity and load factor.

*

* @param initialCapacity the initial capacity

* @param loadFactor the load factor

* @throws IllegalArgumentException if the initial capacity is negative

* or the load factor is nonpositive

*/

// Constructs a HashMap with the given initial capacity and load factor

public HashMap(int initialCapacity, float loadFactor) {

if (initialCapacity < 0)

throw new IllegalArgumentException("Illegal initial capacity: " +

initialCapacity);

if (initialCapacity > MAXIMUM_CAPACITY)

initialCapacity = MAXIMUM_CAPACITY;

if (loadFactor <= 0 || Float.isNaN(loadFactor))

throw new IllegalArgumentException("Illegal load factor: " +

loadFactor);

this.loadFactor = loadFactor;

this.threshold = tableSizeFor(initialCapacity); // tableSizeFor returns the smallest power of two that is >= initialCapacity

}

static final int tableSizeFor(int cap) {

int n = cap - 1; // subtract 1 first so that a cap that is already a power of two is not doubled

n |= n >>> 1;

n |= n >>> 2;

n |= n >>> 4;

n |= n >>> 8;

n |= n >>> 16;

return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;

}
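As a quick check of the rounding behavior, the sketch below copies the same bit-smearing steps into a standalone class and prints the result for a few requested capacities. The class name `TableSizeDemo` and the sample inputs are illustrative assumptions; only the method body mirrors the JDK 8 code above.

```java
class TableSizeDemo {
    static final int MAXIMUM_CAPACITY = 1 << 30;

    // Same steps as the JDK 8 method above: copy the highest set bit of
    // (cap - 1) into every lower position, then add 1.
    static int tableSizeFor(int cap) {
        int n = cap - 1;
        n |= n >>> 1;
        n |= n >>> 2;
        n |= n >>> 4;
        n |= n >>> 8;
        n |= n >>> 16;
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    public static void main(String[] args) {
        int[] requested = {0, 1, 10, 16, 17, 1000};
        for (int cap : requested) {
            // Prints: 0 -> 1, 1 -> 1, 10 -> 16, 16 -> 16, 17 -> 32, 1000 -> 1024
            System.out.println(cap + " -> " + tableSizeFor(cap));
        }
        // Resize threshold for the default capacity and load factor: 16 * 0.75 = 12
        System.out.println((int) (16 * 0.75f));
    }
}
```

The case `16 -> 16` is the reason for the initial `cap - 1`: without it, a capacity that is already a power of two would be rounded up to 32.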

/**

* Constructs an empty HashMap with the specified initial

* capacity and the default load factor (0.75).

*

* @param initialCapacity the initial capacity.

* @throws IllegalArgumentException if the initial capacity is negative.

*/

// Constructs a HashMap with the given initial capacity and the default load factor (0.75)

public HashMap(int initialCapacity) {

this(initialCapacity, DEFAULT_LOAD_FACTOR);

}

/**

* Constructs an empty HashMap with the default initial capacity

* (16) and the default load factor (0.75).

*/

// Constructs a HashMap with the default capacity (16) and the default load factor (0.75)

public HashMap() {

this.loadFactor = DEFAULT_LOAD_FACTOR; // all other fields defaulted

}

/**

* Constructs a new HashMap with the same mappings as the

* specified Map. The HashMap is created with

* default load factor (0.75) and an initial capacity sufficient to

* hold the mappings in the specified Map.

*

* @param m the map whose mappings are to be placed in this map

* @throws NullPointerException if the specified map is null

*/

public HashMap(Map<? extends K, ? extends V> m) {

this.loadFactor = DEFAULT_LOAD_FACTOR;

putMapEntries(m, false);

}

- Internal storage of key-value pairs: Node and TreeNode

/**

* Basic hash bin node, used for most entries. (See below for

* TreeNode subclass, and in LinkedHashMap for its Entry subclass.)

*/

static class Node<K,V> implements Map.Entry<K,V> {

final int hash;

final K key;

V value;

Node<K,V> next;

Node(int hash, K key, V value, Node<K,V> next) {

this.hash = hash;

this.key = key;

this.value = value;

this.next = next;

}

public final K getKey() { return key; }

public final V getValue() { return value; }

public final String toString() { return key + "=" + value; }

public final int hashCode() {

return Objects.hashCode(key) ^ Objects.hashCode(value);

}

public final V setValue(V newValue) {

V oldValue = value;

value = newValue;

return oldValue;

}

public final boolean equals(Object o) {

if (o == this)

return true;

if (o instanceof Map.Entry) {

Map.Entry<?,?> e = (Map.Entry<?,?>)o;

if (Objects.equals(key, e.getKey()) &&

Objects.equals(value, e.getValue()))

return true;

}

return false;

}

}

/**

* Entry for Tree bins. Extends LinkedHashMap.Entry (which in turn

* extends Node) so can be used as extension of either regular or

* linked node.

*/

// Extends Node indirectly (LinkedHashMap.Entry extends HashMap.Node)

static final class TreeNode<K,V> extends LinkedHashMap.Entry<K,V> {

TreeNode<K,V> parent; // red-black tree links

TreeNode<K,V> left;

TreeNode<K,V> right;

TreeNode<K,V> prev; // needed to unlink next upon deletion

boolean red;

TreeNode(int hash, K key, V val, Node<K,V> next) {

super(hash, key, val, next);

}

}

- Adding elements: the put method

// Delegates to putVal with the spread hash of the key

public V put(K key, V value) {

return putVal(hash(key), key, value, false, true);

}

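// Spreads the key's hashCode: XOR the high 16 bits (via an unsigned right shift) into the low 16 bits, so that the high bits also influence the bucket index and collisions are reduced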

static final int hash(Object key) {

int h;

return (key == null) ? 0 : (h = key.hashCode()) ^ (h >>> 16);

}
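The effect of the spreading step is easiest to see with hash codes that differ only in their high bits. The sketch below is a standalone illustration: `HashSpreadDemo`, `spread` and `indexFor` are names chosen here, not JDK identifiers, although `spread` repeats the expression from `hash()` above and `indexFor` repeats the `(n - 1) & hash` mask that `putVal` uses.

```java
class HashSpreadDemo {
    // Same expression as HashMap.hash(): fold the high 16 bits into the low 16.
    static int spread(Object key) {
        int h;
        return (key == null) ? 0 : (h = key.hashCode()) ^ (h >>> 16);
    }

    // Bucket index for a table whose length n is a power of two.
    static int indexFor(int hash, int n) {
        return (n - 1) & hash;
    }

    public static void main(String[] args) {
        int n = 16;
        // Integer.hashCode() returns the int value itself, so these two
        // hash codes differ only in bits above the low 16.
        Integer a = 0x10000;
        Integer b = 0x20000;

        // Without spreading, the mask sees only zeros: both keys collide.
        System.out.println(indexFor(a.hashCode(), n) + " " + indexFor(b.hashCode(), n)); // 0 0

        // With spreading, the high bits reach the index and the keys separate.
        System.out.println(indexFor(spread(a), n) + " " + indexFor(spread(b), n)); // 1 2

        // For a non-negative hash and a power-of-two n, the mask equals the remainder.
        System.out.println(indexFor(12345, n) == 12345 % n); // true
    }
}
```

The last line shows why the table length must be a power of two: only then does the cheap bit mask select the same bucket as a modulo would.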

final V putVal(int hash, K key, V value, boolean onlyIfAbsent,

boolean evict) {

Node<K,V>[] tab; Node<K,V> p; int n, i;

// The table is null or has length 0: allocate it via resize()

if ((tab = table) == null || (n = tab.length) == 0)

n = (tab = resize()).length;

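// (n - 1) & hash computes the index into the array; because n is a power of two, this bit mask is equivalent to a modulo but cheaper

// If the bucket is empty, put the new node directly into it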

if ((p = tab[i = (n - 1) & hash]) == null)

tab[i] = newNode(hash, key, value, null);

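else { // The bucket already holds data: compare keys, then replace or append

// e will reference the existing node for this key, if any

Node<K,V> e; K k;

// p is the first node in the bucket; if its hash equals the new hash and its key is the same reference or equals() the new key, remember it in e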

if (p.hash == hash &&

((k = p.key) == key || (key != null && key.equals(k))))

e = p;

else if (p instanceof TreeNode) // The bin is a tree: insert through the tree

e = ((TreeNode<K,V>)p).putTreeVal(this, tab, hash, key, value);

else { // The bin is a linked list

for (int binCount = 0; ; ++binCount) { // Walk the list

if ((e = p.next) == null) { // Reached the tail: append the new node

p.next = newNode(hash, key, value, null);

// The bin already held at least 8 nodes before this append: convert it to a red-black tree

if (binCount >= TREEIFY_THRESHOLD - 1) // -1 for 1st

treeifyBin(tab, hash);

break;

}

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k)))) // Found a node with the same hash and an equal key: stop

break;

// Move on to the next node

p = e;

}

}

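// A mapping for this key already exists: replace the value (unless onlyIfAbsent is set and the old value is non-null) and return the old value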

if (e != null) { // existing mapping for key

V oldValue = e.value;

if (!onlyIfAbsent || oldValue == null)

e.value = value;

// A no-op in HashMap; a hook that subclasses such as LinkedHashMap override to act after a node is accessed

afterNodeAccess(e);

return oldValue;

}

}

++modCount;

// The size now exceeds the threshold (capacity * load factor): resize

if (++size > threshold)

resize();

// Also a no-op in HashMap; a hook for subclasses to act after an insertion

afterNodeInsertion(evict);

return null;

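}

- Converting a bin to a tree

final void treeifyBin(Node<K,V>[] tab, int hash) {

int n, index; Node<K,V> e;

// If the array length is below 64, only resize (which redistributes the entries); no red-black tree is built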

if (tab == null || (n = tab.length) < MIN_TREEIFY_CAPACITY)

resize();

else if ((e = tab[index = (n - 1) & hash]) != null) {

TreeNode<K,V> hd = null, tl = null;

do { // Replace each Node with a TreeNode, forming a new doubly linked list

TreeNode<K,V> p = replacementTreeNode(e, null);

if (tl == null)

hd = p;

else {

p.prev = tl;

tl.next = p;

}

tl = p;

} while ((e = e.next) != null);

// Store the head of the TreeNode list in the bucket at index

if ((tab[index] = hd) != null)

// Actually build the red-black tree

hd.treeify(tab);

}

}
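Whether a given bin is stored as a list or as a tree is an internal detail that the public API does not expose, but the observable contract can be checked: even when every key collides, all entries stay retrievable. The sketch below assumes a hypothetical key class `BadKey` whose `hashCode` is deliberately constant; it implements `Comparable`, which is what lets a treeified bin order keys with equal hashes without falling back to the tie-break rule.

```java
import java.util.HashMap;
import java.util.Map;

class CollidingKeysDemo {
    // Hypothetical key type: every instance reports the same hash code,
    // so all entries end up in a single bucket.
    static final class BadKey implements Comparable<BadKey> {
        final int id;

        BadKey(int id) { this.id = id; }

        @Override public int hashCode() { return 42; }

        @Override public boolean equals(Object o) {
            return o instanceof BadKey && ((BadKey) o).id == id;
        }

        @Override public int compareTo(BadKey other) {
            return Integer.compare(id, other.id);
        }
    }

    // Inserts `count` distinct keys that all share one hash code.
    static Map<BadKey, Integer> fill(int count) {
        Map<BadKey, Integer> map = new HashMap<>();
        for (int i = 0; i < count; i++) {
            map.put(new BadKey(i), i);
        }
        return map;
    }

    public static void main(String[] args) {
        Map<BadKey, Integer> map = fill(1000);
        System.out.println(map.size());                // 1000
        System.out.println(map.get(new BadKey(777)));  // 777
        System.out.println(map.get(new BadKey(5000))); // null
    }
}
```

This is only a behavioral check, not a measurement: it does not prove that the bin was treeified, only that lookups remain correct under heavy collisions.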

final void treeify(Node<K,V>[] tab) {

TreeNode<K,V> root = null;

// Walk every TreeNode in the list; the current node is x

for (TreeNode<K,V> x = this, next; x != null; x = next) {

next = (TreeNode<K,V>)x.next;

x.left = x.right = null;

// If there is no root yet, x becomes the root, and the root is colored black (x.red = false)

if (root == null) {

x.parent = null;

x.red = false;

root = x;

}

else {

K k = x.key;

int h = x.hash;

Class<?> kc = null;

// Starting from the root, find the position at which to insert x

for (TreeNode<K,V> p = root;;) {

int dir, ph;

K pk = p.key;

// If x's hash is less than the hash of the node p being visited, go left: dir = -1

if ((ph = p.hash) > h)

dir = -1;

// If x's hash is greater than p's hash, go right: dir = 1

else if (ph < h)

dir = 1;

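// The hashes are equal: if the class of x's key does not implement Comparable, or compareTo() against p's key returns 0, fall back to the tie-break rule

// comparableClassFor returns the key's class only if that class directly implements Comparable of itself; otherwise it returns null, even if Comparable is implemented indirectly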

else if ((kc == null &&

(kc = comparableClassFor(k)) == null) ||

(dir = compareComparables(kc, k, pk)) == 0)

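// Tie-break rule for the insertion order

dir = tieBreakOrder(k, pk);

TreeNode<K,V> xp = p;

// Descend to the child on side dir; if that child is non-null, keep looping, otherwise insert x there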

if ((p = (dir <= 0) ? p.left : p.right) == null) {

x.parent = xp;

if (dir <= 0)

xp.left = x;

else

xp.right = x;

// Rebalance the tree after the insertion

root = balanceInsertion(root, x);

break;

}

}

}

}

moveRootToFront(tab, root);

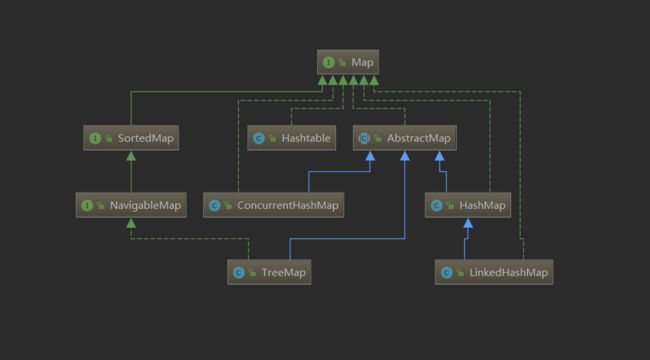

} 其他map相关集合的如下图所示,concurrentHashMap 更高性能的支持并发,可以单独讲一篇。

LinkedHashMap

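LinkedHashMap keeps track of the order in which entries were inserted: when you iterate over it with an Iterator, entries come back in insertion order by default (an access-order mode can be selected through a constructor argument). It offers all the features of HashMap. Maintaining the linked list makes it slightly slower than HashMap in general, although iteration takes time proportional to the number of entries rather than to the capacity.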
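A small sketch of the two iteration orders follows. The class and method names are illustrative; the three-argument constructor `LinkedHashMap(int, float, boolean)` is the standard JDK one, where passing `true` selects access order.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class LinkedHashMapOrderDemo {
    // Default mode: keys are iterated in the order they were first inserted.
    static List<String> insertionOrderKeys() {
        Map<String, Integer> m = new LinkedHashMap<>();
        m.put("c", 3);
        m.put("a", 1);
        m.put("b", 2);
        m.get("c"); // reads do not change insertion order
        return new ArrayList<>(m.keySet());
    }

    // Access-order mode: each access moves the entry to the end.
    static List<String> accessOrderKeys() {
        Map<String, Integer> m = new LinkedHashMap<>(16, 0.75f, true);
        m.put("c", 3);
        m.put("a", 1);
        m.put("b", 2);
        m.get("c"); // "c" becomes the most recently accessed entry
        return new ArrayList<>(m.keySet());
    }

    public static void main(String[] args) {
        System.out.println(insertionOrderKeys()); // [c, a, b]
        System.out.println(accessOrderKeys());    // [a, b, c]
    }
}
```

Access order is the mode typically used as the basis for a simple LRU cache.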

TreeMap

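TreeMap keeps its key-value pairs sorted by key, using the keys' natural ordering by default or a comparator supplied at construction. It is not thread-safe. With natural ordering, TreeMap does not allow null keys (null values are allowed).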
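The sketch below shows both orderings and the null-key behavior under natural ordering. The class name, method names and sample keys are made up for illustration.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.TreeMap;

class TreeMapDemo {
    // Natural ordering: String keys are sorted lexicographically.
    static List<String> naturalOrderKeys() {
        TreeMap<String, Integer> m = new TreeMap<>();
        m.put("pear", 1);
        m.put("apple", 2);
        m.put("fig", 3);
        return new ArrayList<>(m.keySet());
    }

    // Custom comparator: keys are sorted by length. Keys of equal length
    // would be treated as the same key by this comparator.
    static List<String> lengthOrderKeys() {
        TreeMap<String, Integer> m = new TreeMap<>(Comparator.comparingInt(String::length));
        m.put("pear", 1);
        m.put("apple", 2);
        m.put("fig", 3);
        return new ArrayList<>(m.keySet());
    }

    // With natural ordering, inserting a null key throws NullPointerException.
    static boolean nullKeyRejected() {
        try {
            new TreeMap<String, Integer>().put(null, 1);
            return false;
        } catch (NullPointerException e) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(naturalOrderKeys()); // [apple, fig, pear]
        System.out.println(lengthOrderKeys());  // [fig, pear, apple]
        System.out.println(nullKeyRejected());  // true
    }
}
```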

Hashtable

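Hashtable is a thread-safe counterpart of HashMap (it allows neither null keys nor null values), but it achieves thread safety by synchronizing every method on the whole table, so only one thread can access it at a time and its concurrency is poor. ConcurrentHashMap locks at a much finer granularity (segment locks in JDK 7; CAS plus synchronized on individual bins in JDK 1.8), so it scales better under concurrency and is the recommended choice.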
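As a minimal sketch of the recommended choice, the example below counts from several threads with `ConcurrentHashMap.merge`, which performs the read-modify-write atomically. The class name, the key `"hits"` and the thread counts are illustrative assumptions.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class ConcurrentCountDemo {
    // Increments one shared counter from several threads and returns the total.
    static int countConcurrently(int threads, int incrementsPerThread) {
        Map<String, Integer> counts = new ConcurrentHashMap<>();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    // merge() is atomic on ConcurrentHashMap: no update is lost.
                    counts.merge("hits", 1, Integer::sum);
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return counts.get("hits");
    }

    public static void main(String[] args) {
        System.out.println(countConcurrently(4, 10_000)); // 40000
    }
}
```

A separate `get` followed by `put` would not be safe here, even on a ConcurrentHashMap, because another thread could update the value between the two calls. The same loop on a plain HashMap can lose updates or corrupt the table.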
