Storage is consumed in three ways: block storage, file storage, and object storage.

Ceph supports all three. Each one needs its own server-side configuration and client-side usage.

1. Block storage

1.1 Server-side configuration

Create a pool:

ceph osd pool create test 32

Create a 1024 MB block device (RBD image) in the pool:

rbd create rbd_write --size 1024 --pool test

List the images in the pool:

rbd -p test ls
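The three server-side steps can be wrapped in a small shell function. This is a sketch and not part of the original procedure. `create_rbd_image` and the `run_cmd` helper are hypothetical names. Setting `DRY_RUN=1`, another assumption of this sketch, makes it print the commands instead of running them.

```shell
# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run_cmd() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# create_rbd_image POOL IMAGE SIZE_MB PG_NUM   (hypothetical name)
# Creates the pool, creates an RBD image in it, then lists the pool's images.
create_rbd_image() {
  local pool="$1" image="$2" size_mb="$3" pg_num="$4"
  run_cmd ceph osd pool create "$pool" "$pg_num" || return
  run_cmd rbd create "$image" --size "$size_mb" --pool "$pool" || return
  run_cmd rbd -p "$pool" ls
}
```

For example, `DRY_RUN=1 create_rbd_image test rbd_write 1024 32` prints the three commands above. Drop `DRY_RUN=1` to run them against the cluster.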

1.2 Client usage

Map the image on the client:

rbd map test/rbd_write

Likewise, unmap it with:

rbd unmap test/rbd_write

If mapping fails with the following error:

rbd: sysfs write failed
RBD image feature set mismatch. You can disable features unsupported by the kernel with "rbd feature disable".

then the running kernel does not support some of the image's features.

Solution: disable those extended features, then map the image again.

rbd feature disable test/rbd_write exclusive-lock object-map fast-diff deep-flatten

rbd map test/rbd_write
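The map-then-fallback sequence above could be automated roughly as follows. This is a hedged sketch. `map_rbd_image` and `run_cmd` are hypothetical names, and the feature list is exactly the one used above. It assumes that any `rbd map` failure is the feature mismatch, because it does not inspect the error message.

```shell
# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run_cmd() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# map_rbd_image POOL/IMAGE   (hypothetical name)
# Tries "rbd map" once. If it fails, disables the features that older
# kernels reject, then maps again and returns that second attempt's status.
map_rbd_image() {
  local spec="$1"
  if run_cmd rbd map "$spec"; then
    return 0
  fi
  echo "rbd map failed; disabling features unsupported by the kernel" >&2
  run_cmd rbd feature disable "$spec" exclusive-lock object-map fast-diff deep-flatten || return
  run_cmd rbd map "$spec"
}
```

Usage: `map_rbd_image test/rbd_write`.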

After mapping, format the raw device and mount it:

mkfs.xfs /dev/rbd0

mkdir -p /mnt/ceph_rbd

mount /dev/rbd0 /mnt/ceph_rbd

You can then go into /mnt/ceph_rbd and create files to test it.
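The sketch below makes the format step safe to repeat. `format_and_mount` is a hypothetical function, and it uses the same assumed `DRY_RUN` convention. It only runs mkfs.xfs when `blkid` reports no existing filesystem on the device. Running `mkfs.xfs` again on an image that already holds data would destroy that data, which is why the check is there.

```shell
# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run_cmd() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# format_and_mount DEVICE MOUNTPOINT   (hypothetical name)
# blkid exits non-zero when it finds no filesystem signature, so mkfs.xfs
# runs only on a blank device. blkid itself is read-only and runs even in
# dry-run mode.
format_and_mount() {
  local dev="$1" mnt="$2"
  if ! blkid "$dev" >/dev/null 2>&1; then
    run_cmd mkfs.xfs "$dev" || return
  fi
  run_cmd mkdir -p "$mnt" || return
  run_cmd mount "$dev" "$mnt"
}
```

Usage: `format_and_mount /dev/rbd0 /mnt/ceph_rbd`.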

2. File storage

2.1 Server-side configuration

Create the mount point:

mkdir /cephfs

Copy the configuration file

Copy ceph.conf from the admin node to the client node (10.1.30.43 is the admin node):

rsync -e "ssh -p22" -avpgolr USER@10.1.30.43:/etc/ceph/ceph.conf /etc/ceph/

(Replace USER with an account on the admin node that can read /etc/ceph.)

Copy the keyring

Copy ceph.client.admin.keyring from the admin node to the client node:

rsync -e "ssh -p22" -avpgolr USER@10.1.30.43:/etc/ceph/ceph.client.admin.keyring /etc/ceph/
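Both copies can be done in one call. In this sketch, `fetch_ceph_client_files` and `run_cmd` are hypothetical names. `USER@10.1.30.43` is a placeholder for the admin node login, and the rsync options are the ones used above.

```shell
# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run_cmd() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# fetch_ceph_client_files REMOTE   (hypothetical name)
# REMOTE is user@host of the admin node. Copies ceph.conf and the admin
# keyring into /etc/ceph on this machine.
fetch_ceph_client_files() {
  local remote="$1" f
  run_cmd mkdir -p /etc/ceph || return
  for f in ceph.conf ceph.client.admin.keyring; do
    run_cmd rsync -e "ssh -p22" -avpgolr "$remote:/etc/ceph/$f" /etc/ceph/ || return
  done
}
```

Usage: `fetch_ceph_client_files USER@10.1.30.43`.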

List the Ceph auth entries:

ceph auth list

installed auth entries:

mds.ceph-admin
    key: AQAZZxdbH6uAOBAABttpSmPt6BXNtTJwZDpSJg==
    caps: [mds] allow
    caps: [mon] allow profile mds
    caps: [osd] allow rwx
osd.0
    key: AQCuWBdbV3TlBBAA4xsAE4QsFQ6vAp+7pIFEHA==
    caps: [mon] allow profile osd
    caps: [osd] allow *
osd.1
    key: AQC6WBdbakBaMxAAsUllVWdttlLzEI5VNd/41w==
    caps: [mon] allow profile osd
    caps: [osd] allow *
osd.2
    key: AQDJWBdbz6zNNhAATwzL2FqPKNY1IvQDmzyOSg==
    caps: [mon] allow profile osd
    caps: [osd] allow *
client.admin
    key: AQCNWBdbf1QxAhAAkryP+OFy6wGnKR8lfYDkUA==
    caps: [mds] allow *
    caps: [mon] allow *
    caps: [osd] allow *
client.bootstrap-mds
    key: AQCNWBdbnjLILhAAT1hKtLEzkCrhDuTLjdCJig==
    caps: [mon] allow profile bootstrap-mds
client.bootstrap-mgr
    key: AQCOWBdbmxEANBAAiTMJeyEuSverXAyOrwodMQ==
    caps: [mon] allow profile bootstrap-mgr
client.bootstrap-osd
    key: AQCNWBdbiO1bERAARLZaYdY58KLMi4oyKmug4Q==
    caps: [mon] allow profile bootstrap-osd
client.bootstrap-rgw
    key: AQCNWBdboBLXIBAAVTsD2TPJhVSRY2E9G7eLzQ==
    caps: [mon] allow profile bootstrap-rgw

2.2 Client usage

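On the client, install ceph-fuse. (Here the admin node ceph-admin, 10.1.30.43, doubles as the test client. If you test on another machine, check its yum repository configuration first.)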

yum install -y ceph-fuse

Mount the Ceph cluster storage on the client's /cephfs directory:

ceph-fuse -m 10.1.30.43:6789 /cephfs

2018-06-06 14:28:54.149796 7f8d5c256760 -1 init, newargv = 0x4273580 newargc=11
ceph-fuse[16107]: starting ceph client
ceph-fuse[16107]: starting fuse

df -h

Filesystem                        Size  Used Avail Use% Mounted on
/dev/mapper/vg_centos602-lv_root   50G  3.5G   44G   8% /
tmpfs                             1.9G     0  1.9G   0% /dev/shm
/dev/sda1                         477M   41M  412M   9% /boot
/dev/mapper/vg_centos602-lv_home   45G   52M   43G   1% /home
/dev/vdb1                          20G  5.1G   15G  26% /data/osd1
ceph-fuse                          45G  100M   45G   1% /cephfs

The output shows that the Ceph storage is mounted. There are three OSD nodes with 15G each, 45G in total. (Run "lsblk" on a node to see the size of its Ceph data partition.)

Unmount the Ceph storage:

umount /cephfs
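This is a slightly more defensive version of the mount step. It assumes the util-linux `mountpoint` command is available. `mount_cephfs` and `run_cmd` are hypothetical names, and the monitor address 10.1.30.43:6789 comes from the example above.

```shell
# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run_cmd() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# mount_cephfs MON_ADDR MOUNTPOINT   (hypothetical name)
# Creates the mount point, skips the mount if something is already mounted
# there, otherwise mounts CephFS through ceph-fuse.
mount_cephfs() {
  local mon="$1" mnt="$2"
  run_cmd mkdir -p "$mnt" || return
  if mountpoint -q "$mnt" 2>/dev/null; then
    echo "$mnt is already mounted" >&2
    return 0
  fi
  run_cmd ceph-fuse -m "$mon" "$mnt"
}
```

Usage: `mount_cephfs 10.1.30.43:6789 /cephfs`. Unmounting is still `umount /cephfs`.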

3. Object storage

3.1 Server-side configuration

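Ceph object storage uses the Ceph Object Gateway daemon (radosgw), a FastCGI module that interacts with the Ceph storage cluster. RGW's FastCGI interface supports several web servers as front ends. Since v0.80 (Firefly), Ceph embeds Civetweb as its web server, so there is no need to install nginx or Apache separately.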

Edit the yum repository file, then install the gateway service:

vi /etc/yum.repos.d/CentOS-Base.repo

In the [centosplus] section, set:

enabled=1

ceph-deploy install --rgw ceph-admin

Create the gateway instance from the ceph-deploy working directory:

cd /home/cephuser/cluster

ceph-deploy rgw create testrgw

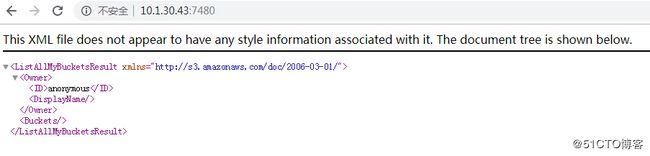

Once the gateway is running, you can try accessing it on port 7480.
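A plain HTTP request to port 7480 is enough to check the gateway from the shell instead of a browser. This sketch assumes curl is installed, and `rgw_is_up` is a hypothetical name. An anonymous GET on the gateway root usually returns a small S3-style XML bucket listing, so the sketch treats any successful HTTP response as "up".

```shell
# rgw_is_up HOST [PORT]   (hypothetical name; PORT defaults to 7480)
# Returns 0 if an HTTP GET on the gateway root succeeds within 5 seconds.
# -f makes curl fail on HTTP error codes; -sS hides progress but keeps errors.
rgw_is_up() {
  local host="$1" port="${2:-7480}"
  curl -fsS --max-time 5 -o /dev/null "http://$host:$port/"
}
```

Usage: `rgw_is_up testrgw && echo "gateway is answering"`.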

Create a user:

radosgw-admin user create --uid="testuser" --display-name="First User"

It returns the following:

{
  "user_id": "testuser",
  "display_name": "First User",
  "email": "",
  "suspended": 0,
  "max_buckets": 1000,
  "auid": 0,
  "subusers": [],
  "keys": [
    {
      "user": "testuser",
      "access_key": "EVAKP3SXQ5PX8EMEM9XK",
      "secret_key": "pr7WUkuEyTJS8obM0i75GgHTYlYSPluzViCJCCIX"
    }
  ],
  "swift_keys": [],
  "caps": [],
  "op_mask": "read, write, delete",
  "default_placement": "",
  "placement_tags": [],
  "bucket_quota": {
    "enabled": false,
    "max_size_kb": -1,
    "max_objects": -1
  },
  "user_quota": {
    "enabled": false,
    "max_size_kb": -1,
    "max_objects": -1
  },
  "temp_url_keys": []
}

The same information can be displayed again with:

radosgw-admin user info --uid=testuser
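To script the next step, you can pull the keys out of this JSON. The sketch below uses only grep and sed, so it needs no extra packages. `json_field` is a hypothetical helper that handles only flat `"name": "value"` string fields like the ones above. jq is the more robust choice if it is installed.

```shell
# json_field NAME < JSON   (hypothetical name)
# Prints the value of the first "NAME": "value" string pair in the input.
# Not a real JSON parser: it ignores nesting and escaped quotes.
json_field() {
  local name="$1"
  grep -o "\"$name\": *\"[^\"]*\"" | head -n 1 | sed 's/^"[^"]*": *"\(.*\)"$/\1/'
}
```

Usage: `radosgw-admin user info --uid=testuser | json_field access_key`.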

3.2 Client usage

Install s3cmd on the client and configure it:

yum install s3cmd

s3cmd --configure

Access Key: EVAKP3SXQ5PX8EMEM9XK
Secret Key: pr7WUkuEyTJS8obM0i75GgHTYlYSPluzViCJCCIX
Default Region [US]: (press Enter to keep the default)
S3 Endpoint [s3.amazonaws.com]: 10.1.30.43:7480
[%(bucket)s.s3.amazonaws.com]: %(bucket)s.10.1.30.43:7480
Use HTTPS protocol [Yes]: No
Test access with supplied credentials? [Y/n] Y
Retry configuration [Y/n]: n
Save settings? [y/N] y

Press Enter for every other option and leave it unchanged. When the setup is finished, run:

s3cmd ls

If it fails with the following error, the configuration file is wrong:

Invoked as: /usr/bin/s3cmd ls
Problem: error: [Errno 111] Connection refused

Fix:

vi ~/.s3cfg

Check these five parameters:

access_key = EVAKP3SXQ5PX8EMEM9XK
cloudfront_host = ceph-admin:7480
host_base = ceph-admin:7480
host_bucket = %(bucket)s.ceph-admin:7480
secret_key = pr7WUkuEyTJS8obM0i75GgHTYlYSPluzViCJCCIX
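A small function can write these five parameters instead of editing ~/.s3cfg by hand. This is a sketch with several assumptions. `write_s3cfg` is a hypothetical name, and it overwrites the target file with a minimal [default] section. It assumes s3cmd falls back to its built-in defaults for keys not listed. It also writes `use_https = False`, which matches the "No" answer given during --configure.

```shell
# write_s3cfg ACCESS_KEY SECRET_KEY ENDPOINT [FILE]   (hypothetical name)
# Overwrites FILE (default ~/.s3cfg) with a minimal s3cmd config pointing
# at the RGW endpoint, then makes it readable by its owner only because it
# contains the secret key.
write_s3cfg() {
  local ak="$1" sk="$2" ep="$3" file="${4:-$HOME/.s3cfg}"
  cat > "$file" <<EOF
[default]
access_key = $ak
secret_key = $sk
host_base = $ep
host_bucket = %(bucket)s.$ep
cloudfront_host = $ep
use_https = False
EOF
  chmod 600 "$file"
}
```

Usage: `write_s3cfg EVAKP3SXQ5PX8EMEM9XK pr7WUkuEyTJS8obM0i75GgHTYlYSPluzViCJCCIX ceph-admin:7480`.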

Then test s3cmd again:

s3cmd ls

Create a bucket named my_bucket. This is similar to creating a folder, but note that a bucket is an object storage concept, not a folder:

s3cmd mb s3://my_bucket


Upload an object under a given path with put:

s3cmd put /var/log/messages s3://my_bucket/log/

List the objects:

s3cmd ls s3://my_bucket/log/

Download an object with get:

s3cmd get s3://my_bucket/log/messages /tmp/

Delete the object:

s3cmd del s3://my_bucket/log/messages

Delete the bucket (it must be empty):

s3cmd rb s3://my_bucket
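The whole bucket lifecycle can be run as one smoke test. `s3_smoke_test` and `run_cmd` are hypothetical names. The sketch stops at the first failing s3cmd call, and `DRY_RUN=1` prints the commands instead of running them.

```shell
# Hypothetical helper: print the command when DRY_RUN=1, otherwise run it.
run_cmd() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# s3_smoke_test BUCKET FILE   (hypothetical name)
# Creates BUCKET, uploads FILE under log/, lists it, downloads it to /tmp/,
# deletes the object, and finally removes the now-empty bucket.
s3_smoke_test() {
  local bucket="$1" file="$2" name
  name="$(basename "$file")"
  run_cmd s3cmd mb "s3://$bucket" || return
  run_cmd s3cmd put "$file" "s3://$bucket/log/" || return
  run_cmd s3cmd ls "s3://$bucket/log/" || return
  run_cmd s3cmd get "s3://$bucket/log/$name" /tmp/ || return
  run_cmd s3cmd del "s3://$bucket/log/$name" || return
  run_cmd s3cmd rb "s3://$bucket"
}
```

Usage: `s3_smoke_test my_bucket /var/log/messages`. If /tmp/messages already exists, s3cmd may refuse to overwrite it during get.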