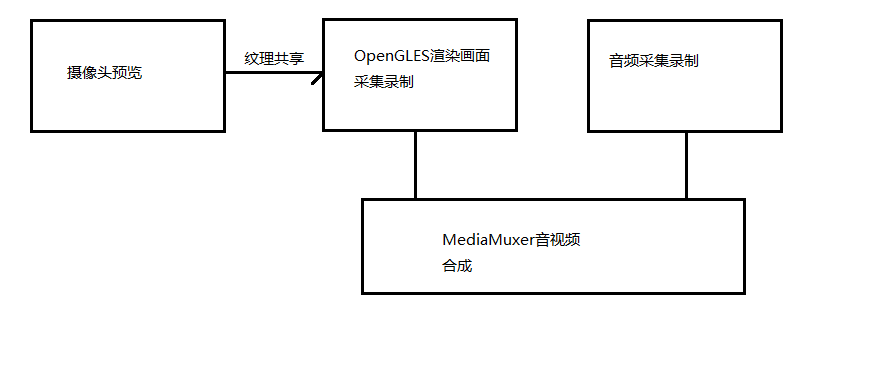

The process has three main parts: video recording, audio recording, and muxing audio and video into one file.

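Video recording: OpenGL ES renders the camera preview. MediaCodec provides an input Surface (createInputSurface()), and a new EGL environment is created that shares the preview's EGLContext and is bound to that Surface. Rendering the texture attached to the preview's FBO into this Surface records the video.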

Audio recording also uses MediaCodec: PCM data passed in from outside is encoded to AAC.

Muxing is done with MediaMuxer.

Video recording

Reference: Recording OpenGL ES-rendered frames with MediaCodec

Audio recording

Related reference: MediaCodec hardware encoding, pcm2aac

It breaks down into the following steps:

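- Initialization

```java
// Required imports (top of the file):
// import android.media.MediaCodec;
// import android.media.MediaCodecInfo;
// import android.media.MediaFormat;
// import java.io.IOException;

// Create and configure the AAC encoder.
// mimeType is normally MediaFormat.MIMETYPE_AUDIO_AAC ("audio/mp4a-latm").
private void initAudioEncoder(String mimeType, int sampleRate, int channel) {
    try {
        mAudioEncodec = MediaCodec.createEncoderByType(mimeType);
        MediaFormat audioFormat = MediaFormat.createAudioFormat(mimeType, sampleRate, channel);
        audioFormat.setInteger(MediaFormat.KEY_BIT_RATE, 96000);                 // 96 kbps
        audioFormat.setInteger(MediaFormat.KEY_AAC_PROFILE,
                MediaCodecInfo.CodecProfileLevel.AACObjectLC);                  // AAC-LC
        audioFormat.setInteger(MediaFormat.KEY_MAX_INPUT_SIZE, 4096);           // largest PCM chunk per input buffer
        mAudioEncodec.configure(audioFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
        mAudioBuffInfo = new MediaCodec.BufferInfo();
    } catch (IOException e) {
        e.printStackTrace();
        mAudioEncodec = null;
        mAudioBuffInfo = null;
    }
}
```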
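KEY_MAX_INPUT_SIZE is set to 4096 above. One plausible reading of that value (an assumption; the source does not explain it): an AAC-LC frame covers 1024 samples per channel, so one frame of 16-bit stereo PCM is exactly 1024 × 2 × 2 = 4096 bytes. The plain-Java sketch below uses a hypothetical `AacFrameMath` class with no Android dependencies and only does that arithmetic:

```java
// Hypothetical helper: PCM sizes and durations for one AAC-LC frame.
// Assumes interleaved PCM and the standard AAC-LC frame length of 1024 samples.
final class AacFrameMath {
    // AAC-LC encodes 1024 samples per channel in each access unit.
    static final int SAMPLES_PER_AAC_FRAME = 1024;

    // Bytes of interleaved PCM that make up exactly one AAC-LC frame.
    static int pcmBytesPerAacFrame(int channelCount, int bitsPerSample) {
        return SAMPLES_PER_AAC_FRAME * channelCount * (bitsPerSample / 8);
    }

    // Duration of one AAC-LC frame in microseconds (integer division truncates).
    static long aacFrameDurationUs(int sampleRate) {
        return SAMPLES_PER_AAC_FRAME * 1_000_000L / sampleRate;
    }
}
```

At 44.1 kHz one frame lasts about 23.2 ms. Mono 16-bit input would need only 2048 bytes per frame, while PCM chunks larger than the configured maximum would not fit into a single input buffer.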

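- Start recording

```java
// Start the encoder once, then keep draining its output on the encoder thread.
audioEncodec.start();

// Negative indices (INFO_TRY_AGAIN_LATER, INFO_OUTPUT_FORMAT_CHANGED) end this loop;
// format-changed handling is covered in the muxing section below.
int outputBufferIndex = audioEncodec.dequeueOutputBuffer(audioBufferinfo, 0);
while (outputBufferIndex >= 0) {
    // Codec-specific data already reaches the muxer through getOutputFormat()/addTrack(),
    // so buffers flagged BUFFER_FLAG_CODEC_CONFIG are not written as samples.
    boolean isConfig = (audioBufferinfo.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0;
    if (!isConfig && audioBufferinfo.size > 0) {
        // getOutputBuffer(int) (API 21+) replaces the deprecated getOutputBuffers()
        ByteBuffer outputBuffer = audioEncodec.getOutputBuffer(outputBufferIndex);
        outputBuffer.position(audioBufferinfo.offset);
        outputBuffer.limit(audioBufferinfo.offset + audioBufferinfo.size);

        // Rebase timestamps so the track starts at 0 (pts == 0 means "base not set yet")
        if (pts == 0) {
            pts = audioBufferinfo.presentationTimeUs;
        }
        audioBufferinfo.presentationTimeUs = audioBufferinfo.presentationTimeUs - pts;

        // Write the encoded AAC frame (the muxer must already be started)
        mediaMuxer.writeSampleData(audioTrackIndex, outputBuffer, audioBufferinfo);
    }
    audioEncodec.releaseOutputBuffer(outputBufferIndex, false);
    outputBufferIndex = audioEncodec.dequeueOutputBuffer(audioBufferinfo, 0);
}
```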
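The drain loop uses pts == 0 to mean "base timestamp not set yet". If the first timestamp seen is genuinely 0, pts stays 0 and the next buffer silently becomes the base, so two samples end up at time 0. A minimal sketch of the same rebasing with an explicit sentinel, written as a hypothetical plain-Java `PtsRebaser` class (one instance per track):

```java
// Hypothetical helper: shifts a track's timestamps so its first sample is at 0.
// Uses Long.MIN_VALUE as "not set yet", so a real first timestamp of 0 is handled.
final class PtsRebaser {
    private static final long UNSET = Long.MIN_VALUE;
    private long firstPtsUs = UNSET;

    // Returns ptsUs relative to the first timestamp this rebaser has seen.
    long rebase(long ptsUs) {
        if (firstPtsUs == UNSET) {
            firstPtsUs = ptsUs;   // the first sample defines the base
        }
        return ptsUs - firstPtsUs;
    }
}
```

In the loop above this would replace the pts field and the if (pts == 0) check with audioBufferinfo.presentationTimeUs = rebaser.rebase(audioBufferinfo.presentationTimeUs).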

- Feed data

No ADTS header is added to the encoded AAC here, because the output is written into an MP4 rather than a standalone .aac file.

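```java
// Timestamp (µs) derived from how many PCM bytes have been fed so far.
// Note: it returns the END time of the current chunk, and the truncation to
// whole microseconds accumulates over many chunks.
private long audioPts;

private long getAudioPts(int size, int sampleRate, int channel, int sampleBit) {
    // chunk duration = bytes / (sampleRate * channels * bytesPerSample)
    audioPts += (long) (1.0 * size / (sampleRate * channel * (sampleBit / 8)) * 1000000.0);
    return audioPts;
}

public void putPcmData(byte[] buffer, int size) {
    if (mAudioEncodecThread != null && !mAudioEncodecThread.isExit && buffer != null && size > 0) {
        // Timeout 0: if no input buffer is free right now, this chunk is dropped
        int inputBufferIndex = mAudioEncodec.dequeueInputBuffer(0);
        if (inputBufferIndex >= 0) {
            // getInputBuffer(int) (API 21+) replaces the deprecated getInputBuffers()
            ByteBuffer byteBuffer = mAudioEncodec.getInputBuffer(inputBufferIndex);
            byteBuffer.clear();
            // Copy only the valid bytes; size must not exceed KEY_MAX_INPUT_SIZE
            byteBuffer.put(buffer, 0, size);
            // Compute the timestamp
            long pts = getAudioPts(size, sampleRate, channel, sampleBit);
            Log.d("zzz", "AudioTime = " + pts / 1000000.0f);
            mAudioEncodec.queueInputBuffer(inputBufferIndex, 0, size, pts, 0);
        }
    }
}
```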
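getAudioPts() adds the truncated duration of every chunk, so the sub-microsecond remainder is lost each time. With 4096-byte chunks of 44.1 kHz 16-bit stereo PCM, each chunk lasts 23219.95 µs but adds 23219 µs, roughly 1 ms of drift per 1,000 chunks (about 23 s of audio). A sketch of an alternative, assuming the PCM format never changes during a recording: derive every timestamp from the total byte count. `PcmClock` is a hypothetical class, not part of the demo:

```java
// Hypothetical helper: timestamps computed from the total PCM bytes queued so far,
// so per-chunk truncation cannot accumulate into drift.
final class PcmClock {
    private final long bytesPerSecond;
    private long totalBytes = 0;

    PcmClock(int sampleRate, int channelCount, int bitsPerSample) {
        this.bytesPerSecond = (long) sampleRate * channelCount * (bitsPerSample / 8);
    }

    // Returns the presentation time (µs) of the first sample in this chunk,
    // then advances the clock by the chunk's size.
    long nextPtsUs(int chunkBytes) {
        long ptsUs = totalBytes * 1_000_000L / bytesPerSecond;
        totalBytes += chunkBytes;
        return ptsUs;
    }
}
```

In putPcmData() the getAudioPts(...) call would become clock.nextPtsUs(size); the first chunk then gets timestamp 0, and after 1,000 such chunks the next timestamp is 23219954 µs instead of 23219000 µs.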

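- Stop recording

```java
// Release the encoder. A more complete shutdown would first queue an input buffer
// flagged MediaCodec.BUFFER_FLAG_END_OF_STREAM and drain the remaining output,
// so that the last AAC frames still reach the muxer.
audioEncodec.stop();
audioEncodec.release();
audioEncodec = null;
```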
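The "Feed data" step skips the ADTS header because the AAC frames go into an MP4 container. For comparison, a raw .aac file needs a 7-byte ADTS header in front of every encoded frame. A sketch of that header, assuming AAC-LC, the MPEG-4 ID bit and no CRC; `AdtsHeader` is a hypothetical helper, not part of the Android SDK:

```java
// Hypothetical helper: builds the 7-byte ADTS header for one AAC-LC frame.
// Assumptions: MPEG-4 ID bit, protection_absent = 1 (no CRC), one raw data block.
final class AdtsHeader {
    // Sampling-frequency index table (index = position in the array).
    private static final int[] SAMPLE_RATES = {
        96000, 88200, 64000, 48000, 44100, 32000,
        24000, 22050, 16000, 12000, 11025, 8000, 7350
    };

    static byte[] build(int aacFrameLength, int sampleRate, int channelCount) {
        int freqIdx = -1;
        for (int i = 0; i < SAMPLE_RATES.length; i++) {
            if (SAMPLE_RATES[i] == sampleRate) {
                freqIdx = i;
                break;
            }
        }
        if (freqIdx < 0) {
            throw new IllegalArgumentException("unsupported sample rate " + sampleRate);
        }
        int profile = 2;                       // AAC-LC (written as profile - 1)
        int packetLen = aacFrameLength + 7;    // frame length includes the header
        byte[] h = new byte[7];
        h[0] = (byte) 0xFF;                    // syncword, high 8 bits
        h[1] = (byte) 0xF1;                    // syncword low bits, MPEG-4, layer 0, no CRC
        h[2] = (byte) (((profile - 1) << 6) | (freqIdx << 2) | (channelCount >> 2));
        h[3] = (byte) (((channelCount & 3) << 6) | (packetLen >> 11));
        h[4] = (byte) ((packetLen & 0x7FF) >> 3);
        h[5] = (byte) (((packetLen & 7) << 5) | 0x1F);   // + buffer fullness (0x7FF), high bits
        h[6] = (byte) 0xFC;                    // buffer fullness low bits, 1 raw data block
        return h;
    }
}
```

Written into an MP4 through MediaMuxer, these 7 bytes would be redundant, since the track format from getOutputFormat() already carries the codec configuration.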

Muxing audio and video

Once both the audio and the video streams are encoded, MediaMuxer combines them into one file.

Official sample (from the MediaMuxer documentation):

MediaMuxer facilitates muxing elementary streams. Currently MediaMuxer supports MP4, Webm and 3GP file as the output. It also supports muxing B-frames in MP4 since Android Nougat.

```java
MediaMuxer muxer = new MediaMuxer("temp.mp4", OutputFormat.MUXER_OUTPUT_MPEG_4);
// More often, the MediaFormat will be retrieved from MediaCodec.getOutputFormat()
// or MediaExtractor.getTrackFormat().
MediaFormat audioFormat = new MediaFormat(...);
MediaFormat videoFormat = new MediaFormat(...);
int audioTrackIndex = muxer.addTrack(audioFormat);
int videoTrackIndex = muxer.addTrack(videoFormat);
ByteBuffer inputBuffer = ByteBuffer.allocate(bufferSize);
boolean finished = false;
BufferInfo bufferInfo = new BufferInfo();

muxer.start();
while(!finished) {
  // getInputBuffer() will fill the inputBuffer with one frame of encoded
  // sample from either MediaCodec or MediaExtractor, set isAudioSample to
  // true when the sample is audio data, set up all the fields of bufferInfo,
  // and return true if there are no more samples.
  finished = getInputBuffer(inputBuffer, isAudioSample, bufferInfo);
  if (!finished) {
    int currentTrackIndex = isAudioSample ? audioTrackIndex : videoTrackIndex;
    muxer.writeSampleData(currentTrackIndex, inputBuffer, bufferInfo);
  }
};
muxer.stop();
muxer.release();
```

The main steps are:

- Initialization

```java
mMediaMuxer = new MediaMuxer(savePath, MediaMuxer.OutputFormat.MUXER_OUTPUT_MPEG_4);
```

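- Get the track index of the recorded video and audio

```java
// Video encoder thread: an encoder normally reports INFO_OUTPUT_FORMAT_CHANGED once,
// before its first encoded buffer; that format carries what addTrack() needs.
int videoOutputIndex = videoEncodec.dequeueOutputBuffer(videoBufferinfo, 0);
if (videoOutputIndex == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
    videoTrackIndex = mediaMuxer.addTrack(videoEncodec.getOutputFormat());
}

// Audio encoder thread: same pattern
int audioOutputIndex = audioEncodec.dequeueOutputBuffer(audioBufferinfo, 0);
if (audioOutputIndex == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
    audioTrackIndex = mediaMuxer.addTrack(audioEncodec.getOutputFormat());
}
// Every track must be added before mediaMuxer.start() is called.
```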
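addTrack() is only allowed before start() and writeSampleData() only after it, while the video and audio track indices arrive on different encoder threads. One way to coordinate this, sketched as a hypothetical plain-Java `MuxerGate` class (not an Android API): count the tracks that have been added and start the muxer exactly once, when the last one arrives.

```java
// Hypothetical coordinator: runs the start action exactly once,
// when the expected number of tracks has been added.
final class MuxerGate {
    private final int expectedTracks;
    private final Runnable startMuxer;   // e.g. () -> mediaMuxer.start()
    private int addedTracks = 0;
    private boolean started = false;

    MuxerGate(int expectedTracks, Runnable startMuxer) {
        this.expectedTracks = expectedTracks;
        this.startMuxer = startMuxer;
    }

    // Call right after a successful addTrack(); returns true if this call started the muxer.
    synchronized boolean onTrackAdded() {
        if (started) {
            throw new IllegalStateException("addTrack() is not allowed after start()");
        }
        addedTracks++;
        if (addedTracks == expectedTracks) {
            startMuxer.run();
            started = true;
            return true;
        }
        return false;
    }

    // Encoder threads write samples only while this returns true.
    synchronized boolean isStarted() {
        return started;
    }
}
```

On each encoder thread the addTrack() call and onTrackAdded() could both run inside synchronized (gate) { ... } so they happen atomically; encoded buffers that arrive before isStarted() returns true would have to be dropped or queued.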

- Start muxing

```java
// Start only after both addTrack() calls have returned
mediaMuxer.start();

// Write video data
mediaMuxer.writeSampleData(videoTrackIndex, outputBuffer, videoBufferinfo);

// Write audio data
mediaMuxer.writeSampleData(audioTrackIndex, outputBuffer, audioBufferinfo);
```

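- Finish muxing; stop() writes out the container header information

```java
// Call after both encoders have written their last samples
mediaMuxer.stop();
mediaMuxer.release();
mediaMuxer = null;
```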

For the complete code, see the demo:

https://github.com/ChinaZeng/SurfaceRecodeDemo