Resource downloads

Commonly used Hadoop distributions:

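| Distribution | Advantages | Disadvantages |
|---|---|---|
| Apache | Fully open source | JAR conflicts when integrating different versions or frameworks |
| CDH | Mature management client (Cloudera Manager, CM) with one-click install and upgrade | CM is not open source; differs slightly from the community release |
| Hortonworks | Stock Hadoop, fully open source, supports Tez | Enterprise-grade security is not open source |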

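CDH accounts for roughly 60%-70% of market usage, so this walkthrough uses the CDH release.

Hadoop-2.6.0-cdh5.11.1 download link

CDH official documentation

CentOS7 download link

JDK8 download link (Baidu Netdisk, extraction code dg3v)

Installing standalone Hadoop

When installing CentOS7, set the hostname to hadoop000 and create a hadoop user.

Directory layout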

[hadoop@hadoop000 ~]$ pwd

/home/hadoop

[hadoop@hadoop000 ~]$ ll

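total 0

drwxrwxr-x. 5 hadoop hadoop 67 Feb 10 04:07 app //install directory for Java, Hadoop, and other software

drwxrwxr-x. 2 hadoop hadoop 77 Feb 10 00:27 software //directory for installation packages

Installing the JDK

Unpack the JDK and configure the environment variables

Copy the local file to the Linux machine with scp

scp jdk-8u241-linux-x64.tar.gz [email protected]:~/software/

Unpack the JDK

tar -zvxf jdk-8u241-linux-x64.tar.gz -C ~/app/

Configure the environment variables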

vi ~/.bash_profile

export JAVA_HOME=/home/hadoop/app/jdk1.8.0_241

export PATH=$JAVA_HOME/bin:$PATH

source ~/.bash_profile

Verify

[hadoop@hadoop000 ~]$ java -version

java version "1.8.0_241"

Java(TM) SE Runtime Environment (build 1.8.0_241-b07)

Java HotSpot(TM) 64-Bit Server VM (build 25.241-b07, mixed mode)

Setting up passwordless SSH to localhost

ssh-keygen -t rsa //press Enter at every prompt

[hadoop@hadoop000 ~]$ cd ~/.ssh

[hadoop@hadoop000 .ssh]$ ll

total 16

-rw-------. 1 hadoop hadoop 1675 Feb 10 03:52 id_rsa //private key

-rw-r--r--. 1 hadoop hadoop 398 Feb 10 03:52 id_rsa.pub //public key

-rw-r--r--. 1 hadoop hadoop 376 Feb 18 01:28 known_hosts

cat id_rsa.pub >> authorized_keys

chmod 600 authorized_keys

Installing standalone HDFS

Unpack Hadoop

tar -zxvf ~/software/hadoop-2.6.0-cdh5.11.1.tar.gz -C ~/app/

Configure the Hadoop environment variables (add them below the JDK entries)

vi ~/.bash_profile

export HADOOP_HOME=/home/hadoop/app/hadoop-2.6.0-cdh5.11.1

export PATH=$HADOOP_HOME/bin:$PATH

source ~/.bash_profile

Hadoop directory layout

[hadoop@hadoop000 software]$ cd ~/app/hadoop-2.6.0-cdh5.11.1/

[hadoop@hadoop000 hadoop-2.6.0-cdh5.11.1]$ ll

total 116

drwxr-xr-x. 2 hadoop hadoop 137 Jun 1 2017 bin //Hadoop client commands

drwxr-xr-x. 2 hadoop hadoop 166 Jun 1 2017 bin-mapreduce1

drwxr-xr-x. 3 hadoop hadoop 4096 Jun 1 2017 cloudera

drwxr-xr-x. 6 hadoop hadoop 109 Jun 1 2017 etc //Hadoop configuration files

drwxr-xr-x. 5 hadoop hadoop 43 Jun 1 2017 examples

drwxr-xr-x. 3 hadoop hadoop 28 Jun 1 2017 examples-mapreduce1

drwxr-xr-x. 2 hadoop hadoop 106 Jun 1 2017 include

drwxr-xr-x. 3 hadoop hadoop 20 Jun 1 2017 lib

drwxr-xr-x. 3 hadoop hadoop 261 Jun 1 2017 libexec

-rw-r--r--. 1 hadoop hadoop 85063 Jun 1 2017 LICENSE.txt

-rw-r--r--. 1 hadoop hadoop 14978 Jun 1 2017 NOTICE.txt

-rw-r--r--. 1 hadoop hadoop 1366 Jun 1 2017 README.txt

drwxr-xr-x. 3 hadoop hadoop 4096 Jun 1 2017 sbin //Hadoop start and stop scripts

drwxr-xr-x. 4 hadoop hadoop 31 Jun 1 2017 share //examples

drwxr-xr-x. 18 hadoop hadoop 4096 Jun 1 2017 src

etc/hadoop/hadoop-env.sh (can be skipped if JAVA_HOME is already configured)

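export JAVA_HOME=/home/hadoop/app/jdk1.8.0_241

etc/hadoop/core-site.xml (inside the <configuration> element)

<property>
  <name>fs.defaultFS</name>
  <value>hdfs://hadoop000:8020</value>
</property>

Create the HDFS storage directory

mkdir /home/hadoop/app/tmp

etc/hadoop/hdfs-site.xml (inside the <configuration> element)

For standalone Hadoop, an HDFS replication factor (dfs.replication) of 1 is sufficient.

<property>
  <name>dfs.replication</name>
  <value>1</value>
</property>
<property>
  <name>hadoop.tmp.dir</name>
  <value>/home/hadoop/app/tmp</value>
</property>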
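A typo in a property name fails silently, so it can help to read the values back after editing. The following is a minimal sketch rather than a Hadoop tool: `get_site_prop` is a hypothetical helper, and it assumes each `<name>` and `<value>` tag sits on its own line, as in the snippets above.

```shell
# Print the <value> that follows <name>KEY</name> in a Hadoop *-site.xml file.
# get_site_prop is a hypothetical helper; it assumes one tag per line.
get_site_prop() {
  local file="$1" key="$2"
  awk -v key="$key" '
    # Literal match on the name tag, so dots in the key are not treated as regex
    index($0, "<name>" key "</name>") { found = 1; next }
    # First <value> line after the matching name: strip the tags and print
    found && /<value>/ {
      sub(/.*<value>/, "")
      sub(/<\/value>.*/, "")
      print
      exit
    }
  ' "$file"
}
```

For example, `get_site_prop "$HADOOP_HOME/etc/hadoop/hdfs-site.xml" dfs.replication` should print the replication factor. Once Hadoop is on the PATH, `hdfs getconf -confKey dfs.replication` reports the value Hadoop itself resolves.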

etc/hadoop/slaves

hadoop000

Starting HDFS

The file system must be formatted before the first start. Do not run the format command again afterwards.
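Formatting again discards the existing NameNode metadata, so a scripted setup may want a guard around the command. The sketch below assumes the default layout in which the NameNode keeps its metadata under `${hadoop.tmp.dir}/dfs/name`; `needs_format` and `format_once` are hypothetical helper names, not Hadoop commands.

```shell
# Succeed (exit 0) if no formatted NameNode storage exists under TMP_DIR yet.
# Assumes the default layout ${hadoop.tmp.dir}/dfs/name/current/VERSION.
needs_format() {
  local tmp_dir="$1"
  [ ! -f "${tmp_dir}/dfs/name/current/VERSION" ]
}

# Run the format step only when no existing metadata is found.
format_once() {
  local tmp_dir="$1"
  if needs_format "$tmp_dir"; then
    hdfs namenode -format
  else
    echo "NameNode already formatted under ${tmp_dir}; skipping" >&2
  fi
}
```

With the configuration used here the call would be `format_once /home/hadoop/app/tmp`. On a fresh install, the plain command that follows is all that is needed.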

hdfs namenode -format

Starting and stopping the HDFS cluster

$HADOOP_HOME/sbin/start-dfs.sh

$HADOOP_HOME/sbin/stop-dfs.sh

Verify:

[hadoop@hadoop000 bin]$ jps

3345 DataNode

3494 SecondaryNameNode

3597 Jps

3230 NameNode

Uploading a file to HDFS

[hadoop@hadoop000 software]$ hadoop fs -ls /

20/02/18 02:46:52 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[hadoop@hadoop000 software]$ hadoop fs -put jdk-8u241-linux-x64.tar.gz /

20/02/18 02:47:07 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[hadoop@hadoop000 software]$

[hadoop@hadoop000 software]$

[hadoop@hadoop000 software]$ hadoop fs -ls /

20/02/18 02:47:16 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 1 items

-rw-r--r-- 1 hadoop supergroup 194545143 2020-02-18 02:47 /jdk-8u241-linux-x64.tar.gz

Installing YARN

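etc/hadoop/mapred-site.xml (inside the <configuration> element)

<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>

etc/hadoop/yarn-site.xml (inside the <configuration> element)

<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>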

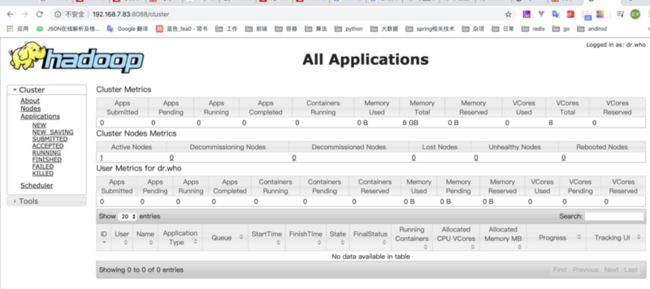

Starting and stopping the YARN cluster

$HADOOP_HOME/sbin/start-yarn.sh

$HADOOP_HOME/sbin/stop-yarn.sh

Verify

[hadoop@hadoop000 hadoop-2.6.0-cdh5.11.1]$ jps

21042 ResourceManager

21493 Jps

4070 NameNode

4342 SecondaryNameNode

4190 DataNode

21198 NodeManager

Other

Complete ~/.bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/.local/bin:$HOME/bin

export JAVA_HOME=/home/hadoop/app/jdk1.8.0_241

export PATH=$JAVA_HOME/bin:$PATH

export HADOOP_HOME=/home/hadoop/app/hadoop-2.6.0-cdh5.11.1

export PATH=$HADOOP_HOME/bin:$PATH

export PATH

Open port 50070 on this machine in a browser (the HDFS web UI)

If the page cannot be reached, disable the firewall.
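Switching firewalld off is the simplest fix on a disposable VM. A narrower option, sketched here on the assumption that firewalld is the active firewall, is to open only the web UI port. `open_port` and the `FIREWALL_CMD` override are hypothetical names introduced for illustration; setting `FIREWALL_CMD=echo` previews the commands without running them.

```shell
# Open a single TCP port permanently in firewalld, then reload the rules.
# FIREWALL_CMD is a hypothetical override; it defaults to "sudo firewall-cmd".
open_port() {
  local port="$1"
  ${FIREWALL_CMD:-sudo firewall-cmd} --permanent --add-port="${port}/tcp"
  ${FIREWALL_CMD:-sudo firewall-cmd} --reload
}
```

For the HDFS web UI the call would be `open_port 50070`. The commands that follow disable firewalld entirely instead.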

sudo firewall-cmd --state //check the firewall state

sudo systemctl stop firewalld.service //stop the firewall

sudo systemctl disable firewalld.service //do not start the firewall at boot

Common HDFS commands

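hadoop fs -ls / //list the root directory

hadoop fs -put //upload a local file

hadoop fs -copyFromLocal //like -put, but the source must be a local file

hadoop fs -moveFromLocal //upload, then delete the local copy

hadoop fs -cat //print a file's contents

hadoop fs -text //print a file's contents as text, decoding compressed files

hadoop fs -get //download a file to the local file system

hadoop fs -mkdir //create a directory

hadoop fs -mv //move or rename

hadoop fs -getmerge //merge the files in an HDFS directory into one local file

hadoop fs -rm //delete a file

hadoop fs -rmdir //delete an empty directory

hadoop fs -rm -r //delete recursively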
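Several of these commands can be chained into a quick round trip that exercises a running cluster. This is a sketch only: `hdfs_roundtrip` and the `HADOOP_CMD` override are hypothetical names, and the target paths are placeholders. Setting `HADOOP_CMD=echo` prints the commands instead of running them.

```shell
# Upload a local file to HDFS, list it, download it again, then clean up.
# HADOOP_CMD is a hypothetical override; it defaults to "hadoop".
hdfs_roundtrip() {
  local local_file="$1" hdfs_dir="$2"
  local name
  name=$(basename "$local_file")
  ${HADOOP_CMD:-hadoop} fs -mkdir -p "$hdfs_dir"                        # create the target directory
  ${HADOOP_CMD:-hadoop} fs -put "$local_file" "$hdfs_dir/"              # upload
  ${HADOOP_CMD:-hadoop} fs -ls "$hdfs_dir"                              # confirm the file is there
  ${HADOOP_CMD:-hadoop} fs -get "$hdfs_dir/$name" "/tmp/${name}.copy"   # download a copy
  ${HADOOP_CMD:-hadoop} fs -rm -r "$hdfs_dir"                           # remove the test directory
}
```

A dry run such as `HADOOP_CMD=echo hdfs_roundtrip ~/software/jdk-8u241-linux-x64.tar.gz /demo` shows the five commands before anything touches the cluster.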