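- Python's reload

风语者666

python java linux

First, the from...import... problem: `import parse_tumor_report.parse_tumor_report` is wrong, while `from parse_tumor_report import parse_tumor_report` is the correct usage. Next, the reload problem: parse_report is a module file I wrote myself, and this file (module) contains a class named parse_tumor_report. Written this way, it works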

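- C++14--Memory management (new/delete)

大胆飞猪

c++

Contents: 1. Memory management in C++ (1.1 new/delete with built-in types; 1.2 new and delete with custom types) 2. The operator new and operator delete functions 3. How new and delete work (3.1 built-in types; 3.2 custom types) 4. The placement new expression (placement-new) 5. Differences between malloc/free and new/delete. 1. Memory management in C++. Preface: C's memory-management functions can still be used in C++, but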

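- 【Backend】【django】Django DRF `@action` explained: custom ViewSet methods

患得患失949

Django knowledge and interview questions column (front end and back end) django sqlite python

Django DRF @action explained: custom ViewSet methods. In Django REST Framework (DRF), the @action decorator adds custom API endpoints to a ViewSet. Compared with default methods such as update and create, @action lets you define clearer, more semantic API paths, making the API easier to read and more consistent with RESTful design principles. 1. What @action does: @action is mainly used to define custom API endpoints, avoiding misuse of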

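- Spring Boot: setting the execution order of filters (Filter) or interceptors (Interceptor) with the @Order annotation and the setOrder() method

pan_junbiao

Spring SpringBoot My original posts springboot java backend

Java Web filters, interceptors, and listeners, an article series: (1) Using filters (Filter): "Using Servlet filters (Filter): the Filter interface and the @WebFilter annotation", "Using a filter (Filter) in Spring MVC to fix garbled Chinese text", "Using filters (Filter) in Spring Boot: the Filter interface, FilterRegistrationBean configuration, and the @WebFilter annotation", "SpringBo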

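- Flutter button widget ElevatedButton explained

帅次

Flutter flutter android ios macos androidstudio webapp taro

Contents: 1. Introduction 2. Basic usage of ElevatedButton 3. Main properties 4. Customizing the button style (4.1 Changing background and text color 4.2 Changing the button shape and border 4.3 Changing the button size 4.4 Shadow control 4.5 Ripple effect) 5. Conclusion; Related recommendations. 1. Introduction: in Flutter, ElevatedButton is a commonly used button widget with a background color and a shadow, suited to actions you want to emphasize. ElevatedButton extends ButtonStyleButton; compared with Tex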

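- C data structures: variable-length arrays (flexible arrays)

Iawfy22

data structures, C language, flexible arrays

Preface: these are notes I (a hard-working student about to start my second year) took while previewing data structures over the summer break, shared for reference. My knowledge is limited, so please point out any mistakes. This article shows how to implement a variable-length array by hand, along with some of its operations (delete, search, add, sort, and so on). About variable-length arrays: a variable-length array, also called a flexible array, differs from an ordinary array in that it is dynamic: it automatically grows its capacity as the number of elements increases, which is what makes it variable-length (flexible). Prerequisites: to implement automatic growth, this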
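The article implements this in C. Purely as a sketch of the growth idea (Java has no flexible array members), here it is in Java; the class name `IntDynamicArray`, the initial capacity of 4, and the doubling growth policy are assumptions for illustration, not the author's code.

```java
import java.util.Arrays;

// Hypothetical growable int array illustrating automatic capacity expansion.
class IntDynamicArray {
    private int[] data = new int[4]; // assumed initial capacity
    private int size = 0;

    // Appends a value, doubling the backing array when it is full.
    void add(int value) {
        if (size == data.length) {
            data = Arrays.copyOf(data, data.length * 2); // allocate a bigger array and copy
        }
        data[size++] = value;
    }

    // Returns the index of the first occurrence of value, or -1 if absent.
    int indexOf(int value) {
        for (int i = 0; i < size; i++) {
            if (data[i] == value) {
                return i;
            }
        }
        return -1;
    }

    // Removes the element at index, shifting later elements one slot left.
    void removeAt(int index) {
        if (index < 0 || index >= size) {
            throw new IndexOutOfBoundsException("index " + index);
        }
        System.arraycopy(data, index + 1, data, index, size - index - 1);
        size--;
    }

    // Sorts only the occupied part of the backing array.
    void sort() {
        Arrays.sort(data, 0, size);
    }

    int get(int index) {
        if (index < 0 || index >= size) {
            throw new IndexOutOfBoundsException("index " + index);
        }
        return data[index];
    }

    int size() { return size; }

    int capacity() { return data.length; }
}
```

Doubling keeps the average cost of `add` constant, because each element is copied only a bounded number of times as the array grows.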

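- Using a local SQLite database in HTML5

小祁爱编程

sqlite html5 bigdata

Using a local SQLite database in HTML5. Overview of local databases: HTML5 greatly expands what the client can store locally, adding many features that move data which previously had to live on the server over to the client. This greatly improves web application performance and reduces server load, taking the Web back to an era of "heavy client, light server". HTML5 has two built-in local databases: SQLite and indexedDB. Using the SQLite database: to operate on a local database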

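- Developing a language-support extension for VS Code

amux9527

notes vscode typescript editor

Install the scaffolding: npm install -g yo generator-code. Generate the extension template: yo code. Configure language support: here I define a custom language for files ending in .da by modifying the properties under contributes in package.json at the project root: {"contributes":{"languages":[{"id":"da","aliases":["DA"],"extensions":[".da"],"icon":{"dark":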

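- Code for adding a video to an HTML page

冬瓜生鲜

JavaWeb

// Not original (I forgot to save the original author's link at the time and no longer know who wrote it, so there is no repost link; sorry). Just change the file name. To make it autoplay, change this: controls autoplay muted;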

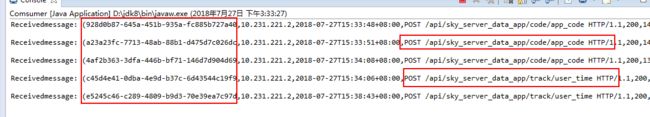

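- Manual deployment? No, no, no: dynamic upload with hot deployment is the way to go!!

架构文摘JGWZ

interfaces learning backend spring

A requirement I ran into recently while developing a system: the system defines an interface, users can write their own implementation of it, package the implementation as a jar, and upload it to the system. The system then hot-deploys the jar and switches to that implementation. Defining a simple interface: a simple calculator is used as the example here. The interface definition is straightforward, so here is the code: public interface Calculator { int calculate(int a, int b); int add(int a, int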
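The excerpt stops before the upload and switching logic. As a minimal sketch under stated assumptions (a simplified one-method `Calculator`, and a hypothetical `CalculatorSwitch` holder), the two moving parts could look like this: loading a class from an uploaded jar with `URLClassLoader`, and atomically switching the implementation in use. Spring-specific wiring such as re-registering beans is not covered.

```java
import java.net.URL;
import java.net.URLClassLoader;
import java.nio.file.Path;
import java.util.concurrent.atomic.AtomicReference;

// Simplified, hypothetical version of the article's Calculator interface (one method only).
interface Calculator {
    int calculate(int a, int b);
}

// Hypothetical holder that lets the running system switch implementations without a restart.
class CalculatorSwitch {
    private final AtomicReference<Calculator> current;

    CalculatorSwitch(Calculator initial) {
        current = new AtomicReference<>(initial);
    }

    // Every call uses whichever implementation is current at that moment.
    int calculate(int a, int b) {
        return current.get().calculate(a, b);
    }

    // Atomically replaces the implementation; calls already running finish on the old one.
    void switchTo(Calculator next) {
        current.set(next);
    }

    // Loads an implementation class from an uploaded jar. The class must implement Calculator
    // and have a public no-arg constructor. The loader is left open on purpose: the loaded
    // class needs it for as long as it is in use.
    static Calculator loadFromJar(Path jar, String className) throws Exception {
        URLClassLoader loader = new URLClassLoader(
                new URL[] {jar.toUri().toURL()}, Calculator.class.getClassLoader());
        Class<?> cls = loader.loadClass(className);
        return (Calculator) cls.getDeclaredConstructor().newInstance();
    }
}
```

Under the same assumptions, hot-switching to an uploaded jar would be `sw.switchTo(CalculatorSwitch.loadFromJar(Path.of("uploads/my-calc.jar"), "com.example.MyCalculator"))`, where the jar path and class name are hypothetical. Using the application's class loader as the parent makes the jar's class implement the same `Calculator` type the system already knows.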

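- Debugging mixed Python and C++ code in VS Code

destiny44123

vscode python c++

Contents: Workflow reference; Some differences. Workflow reference: Example debugging mixed Python C++ in VS Code. Some differences: the project here is assumed to call the corresponding C++ shared library (.so) from Python. First, create a .vscode folder and add a launch.json configuration file to it, for example: {"version":"0.2.0","configurations":[{"name":"(gdb) Attach","type":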

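- Android - ViewPager from basics to advanced

whd_Alive

Android basics Android ViewPager

Preface: the faintest ink beats the best memory, so what I learn should be written down. This article aims to deepen understanding of every aspect of ViewPager: a basic introduction to ViewPager; PagerAdapter + FragmentPagerAdapter & FragmentStatePagerAdapter; linking with Fragment + TabLayout; Banner carousels; custom page-transition animations; first-launch onboarding screens. Enough talk, let's get to it. Basic introduction: ViewPager is Andro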

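- PHP: merchant transfers to a user's WeChat Balance

用黑色铅笔画场盛世烟火..

php programming language

First, enable this feature in your WeChat Pay merchant account (approval may not be easy). Obtain the merchant ID, the certificate and its serial number, and the appid associated with the merchant (for an app, apply for an appid on the Open Platform; for a mini program, use the mini program's appid). Note: the appid must be associated. In the merchant account, enable API permissions and add the server's IP. /** Generate authentication info * @param $url * @param $pars * @param $http_method * @param $cert_path * @param $key_pat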

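- Git learning summary (9): How to build your own Git server

一杯甜酒

Git

Now we will start learning how to build a Git server, how to write a custom Git hook that fires on a specific event (for example, to send a notification), and how to publish your code to a website.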

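- Setting up an immersive status bar in Flutter

啦啦啦种太阳wqz

flutter immersive status bar

Setting up an immersive status bar in Flutter. What is an immersive status bar? The status bar is the area at the top of an Android phone that shows device status information. Starting with Android 4.4, Android added a translucent status bar: its background color can be customized so that the title bar blends with the status bar for a more immersive feel. By default the status bar is black and semi-transparent; with an immersive status bar it can match the title bar's color, as shown in the image above. How to implement an immersive status bar: in the Flutter project directory, find the Android main entry page Main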

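- Git: advanced Git features: hooks and custom scripts_2024-07-17_20-40-39.Tex

chenjj4003

game development git elasticsearch big data search engine java servlet full-text search

Git: advanced Git features: hooks and custom scripts. Introduction to Git hooks. Basic concepts: Git hooks are a mechanism Git provides for running scripts automatically, letting you run custom scripts when specific Git events occur (such as commit, merge, or push). Hook scripts can perform tasks such as data validation, environment preparation, and automated builds, extending Git's functionality and improving development efficiency and code quality. Directory structure: Git hook scripts live in the repository's .git/hooks directory. This directory contains several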

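- Developing a smart CATIA body-renaming tool with PySide6 and PyCatia

Python×CATIA工业智造

python programming language CATIA customization

1. Tool overview: this tool is built on the CATIA V5/V6 customization interfaces, combining the PySide6 GUI framework with the PyCatia automation library, and implements three core modules: batch prefix addition for bodies, dynamic suffix appending, and smart text replacement. It significantly improves engineers' efficiency in managing body names in large part designs and removes the error-prone, time-consuming work of renaming by hand. 2. Technical architecture: 1. Layered architecture design: class Stats(QMainWindow): def __init__(self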

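- HarmonyOS development: building a custom Toast

Preface: the code examples are based on API 13. The system toast already covers most scenarios, is very simple to use, and exposes many configurable properties. Basic usage looks like this: promptAction.showToast({message:"toast message"}). However, a few things are not possible: setting the corner radius, and combining the toast with an icon. To match the UI design more closely, a custom Toast is unavoidable. A simple implementation effe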

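- Vue2 + OpenLayers: dynamically drawing two longitude/latitude points and calculating the distance (Gitee source provided)

黄团团

Vue OpenLayers gitee javascript excel java html front end

Contents: 1. Screenshots 2. Installing the OpenLayers library 3. Implementation (3.1 Initialize variables 3.2 Start/stop drawing 3.3 Calculate the distance between two points 3.4 Add text labels 3.5 Add points 3.6 Add a line 3.7 Initialize the map click event 3.8 Load the map 3.9 Complete code) 4. Gitee source. 1. Screenshots 2. Installing the OpenLayers library: npm install ol 3. Implementation: the page code is as follows: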

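- Vue2 + OpenLayers: dragging point features (Gitee source provided)

黄团团

Vue OpenLayers gitee front end html javascript programming language

Contents: 1. Screenshots 2. Installing the OpenLayers library 3. Implementation (3.1 Initialize variables 3.2 Create a point 3.3 Add the point to the map 3.4 Implement point dragging 3.5 Complete code) 4. Gitee source. 1. Screenshots: you can drag the point anywhere you like. 2. Installing the OpenLayers library: npm install ol 3. Implementation. 3.1 Initialize variables. Key code: data() { return { map: null, vectorLayer: null, } }, 3.2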

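- Creating a floating window with Qt

水瓶丫头站住

Qt Qt

In Qt, a floating window (for example a frameless, draggable floating panel or tooltip) can be created as follows. Here are solutions for several common scenarios. Method 1: a frameless window plus mouse events for dragging, suited to custom floating tool windows (like Photoshop's toolbars). #include #include class FloatingWindow : public QWidget { public: FloatingWindow(QWidget *parent = nullptr) : QW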

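- Flutter Container and Text widgets explained

mylgcs

flutter flutter android

Flutter article contents. The Container widget is a commonly used visual container that can wrap other widgets and set its own width and height, margins, background color, and so on. The Text widget displays text and can set font size, color, font style, and so on. Note: if this duplicates someone else's work, please contact the author to have it removed. Contents: Flutter article contents; Preface; 1. Container (1. The Container widget explained 2. Implementing a custom button with Container); 2. text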

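- Common local Git shortcuts

笔沫拾光

git

Common local Git shortcuts. 1. Basic operations (operation: command): initialize a Git repository: git init; check Git status: git status; add all files to the staging area: git add .; add a specific file: git add; commit changes: git commit -m "commit message"; change the last commit message: git commit --amend -m "new commit message"; show commit history: git log --oneline --graph; show changes in modified files: git diff. 2. Branch management (operation: command): show the current branch: git br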

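- Common Qt widgets: the vertical layout QVBoxLayout

laimaxgg

qt programming language c++ qt6.3 qt5 front end

Vertical layout QVBoxLayout. QVBoxLayout is a layout that arranges widgets vertically. 1. QVBoxLayout properties (property: description): layoutLeftMargin: left margin; layoutRightMargin: right margin; layoutTopMargin: top margin; layoutBottomMargin: bottom margin; layoutSpacing: spacing between adjacent elements. 2. QVBoxLayout methods (method: description): addWidget: adds a widget to the layout manager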

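- Showing a QMenu drop-down menu from a QToolButton in Qt

水瓶丫头站住

Qt Qt

In Qt, a QToolButton can show a drop-down menu with the following steps. Basic steps: Create the QToolButton: instantiate a QToolButton object. Create the QMenu: instantiate a QMenu to serve as the drop-down menu. Add menu items: add actions (QAction) with QMenu::addAction. Attach the menu to the button: bind the menu to the button with QToolButton::setMenu. Set the popup mode: call setPopupMode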

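- C# HashTable, HashSet, Dictionary

有诗亦有远方

C# Hash

Hashing. Contents: 1. HashTable (1. What a hash table is 2. Keys and values in a hash table: (1) adding data (2) both keys and values are of type object (3) a key is required, and keys must be unique (4) data is read back in no particular order 3. Basic operations) 2. HashSet (1. Characteristics 2. Common HashSet extension methods 3. HashSet and LINQ) 3. Dictionary 4. Differences between HashTable and Dictionary. 1. HashTable: a hash table (HashTab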

- Integrating RabbitMQ with Spring Boot

z小天才b

RabbitMQ ruby programming language backend

1. Add the dependencies: org.springframework.boot:spring-boot-starter-web, org.springframework.boot:spring-boot-starter-amqp, org.projectlombok:lombok (optional: true). 2. Configuration file (application.yml): spring: rabbitmq: host: localhost port: 5672 username: g

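- MyBatis internals in depth: how dynamic proxies and annotations implement ORM mapping

rider189

java programming language mybatis

1. Introduction: MyBatis is an excellent ORM framework whose core design idea is to decouple interface methods from SQL operations through dynamic proxies and annotations. Developers only need to define a Mapper interface and add annotations to perform database operations; behind this lies an elegant dynamic proxy mechanism and source-code design. This article analyzes, at the source-code level, how MyBatis achieves this. 2. The dynamic proxy mechanism: from interface to implementation class. Key point: MyBatis uses JDK dynamic proxies to generate proxy objects for Mapper interfaces, intercepting all method calls and routing them to SQ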
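To make the proxy idea concrete, here is a minimal sketch of the mechanism using only the JDK's `java.lang.reflect.Proxy`. The `@SqlSelect` annotation, `UserMapper`, and `MapperProxyDemo` are hypothetical stand-ins, not MyBatis's `@Select` or its own proxy classes, and instead of executing SQL the handler simply returns the bound statement and arguments.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Proxy;
import java.util.Arrays;

// Hypothetical stand-in for a SQL annotation; this is NOT MyBatis's @Select.
@Retention(RetentionPolicy.RUNTIME) // must be visible at runtime for the handler to read it
@Target(ElementType.METHOD)
@interface SqlSelect {
    String value();
}

// Hypothetical mapper interface: nobody writes an implementation class for it.
interface UserMapper {
    @SqlSelect("SELECT * FROM user WHERE id = ?")
    String findById(int id);
}

class MapperProxyDemo {
    // Creates a JDK dynamic proxy that routes every mapper call to one handler.
    @SuppressWarnings("unchecked")
    static <T> T getMapper(Class<T> mapperInterface) {
        return (T) Proxy.newProxyInstance(
                mapperInterface.getClassLoader(),
                new Class<?>[] {mapperInterface},
                (proxy, method, args) -> {
                    SqlSelect select = method.getAnnotation(SqlSelect.class);
                    if (select == null) {
                        throw new UnsupportedOperationException("no SQL bound to " + method.getName());
                    }
                    // A real ORM would bind args, run the SQL over JDBC and map rows to objects;
                    // this sketch only echoes the statement and its arguments.
                    return select.value() + " " + Arrays.toString(args);
                });
    }
}
```

With this sketch, `MapperProxyDemo.getMapper(UserMapper.class).findById(7)` returns `SELECT * FROM user WHERE id = ? [7]`, even though no class implements `UserMapper`; the handler alone decides what each call does.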

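- Shell script to create, format, and convert partitions

why—空空

ops

1. Shell script code: #!/bin/bash # Add a function that checks whether the user is operating on sda; if so, exit the script immediately function bd_sda(){ if [[ "$cname" == "sda" ]] then echo "Cannot operate on disk sda" exit 1 fi } # Function that creates a partition function create_pra(){ local size=$1 # get the first argument: partition size local xnum=$2 # get the second argument: partition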

- The complete guide to using CListCtrl

panjean

VC/MFC reposted articles list header sorting wizard callback listview

Create an image list and associate it with the CListCtrl: m_image_list.Create(IDB_CALLER2,16,10,RGB(192,192,192)); m_image_list.SetBkColor(GetSysColor(COLOR_WINDOW)); m_caller_list.SetImageList(&m_image_list,LVSIL_SMALL); Add 4 columns to the report view: char*szColu

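- Making the download page of the Qizheng rules engine open a dialog for saving to a local directory

何必如此

jsp hyperlink file download window

When generating the download page, you need to select "entry submission page". After generation, the <a> hyperlink on the default download page is: <a href="<%=root_stimage%>stimage/image.jsp?filename=<%=strfile234%>&attachname=<%=java.net.URLEncoder.encode(file234filesourc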

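- 【Spark 98】Source-code analysis of resource scheduling in Standalone Cluster Mode

bit1129

cluster

Before analyzing the source code, let's get a basic understanding of resource scheduling in Standalone Cluster Mode:

First, running an Application requires one Driver process and a set of Executor processes. In Standalone Cluster Mode, both the Driver and the Executors are created under the Master's supervision, by the Master sending messages to Workers (both the Driver and Executor processes need memory and CPU allocated, which requires the Maste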

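- Installing and deploying Spark standalone on Linux

daizj

linux installation spark1.4 deployment

Below is how to install Spark on Linux in Standalone Mode

1) First, install the JDK

Download the JDK: jdk-7u79-linux-x64.tar.gz (any version 1.7 or later works), then extract it: tar -zxvf jdk-7u79-linux-x64.tar.gz

Then configure ~/.bashrc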

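- Java bytecode analysis, part 1

周凡杨

java bytecode javap

1: How Java bytecode is organized

ClassFile {

0xCAFEBABE, minor version, major version, constant pool count, constant pool array, access flags, this class, superclass, interface count, interfaces array, field count, fields array, method count, methods array, attribute count, attributes array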

}
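The first four items of that layout can be read directly with `DataInputStream`, because class files are big-endian and those fields are one u4 followed by three u2 values. A minimal sketch (the class name `ClassFileHeader` is hypothetical); for a real class, `javap -v` prints the same minor and major versions.

```java
import java.io.ByteArrayInputStream;
import java.io.DataInputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Reads the first fields of a class file: magic (u4), minor_version (u2),
// major_version (u2) and constant_pool_count (u2). Class files are big-endian,
// which matches DataInputStream.
class ClassFileHeader {
    final long magic;
    final int minorVersion;
    final int majorVersion;
    final int constantPoolCount; // number of constant pool entries + 1

    private ClassFileHeader(long magic, int minorVersion, int majorVersion, int constantPoolCount) {
        this.magic = magic;
        this.minorVersion = minorVersion;
        this.majorVersion = majorVersion;
        this.constantPoolCount = constantPoolCount;
    }

    static ClassFileHeader parse(byte[] classBytes) {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(classBytes))) {
            long magic = in.readInt() & 0xFFFFFFFFL; // read as an unsigned 32-bit value
            if (magic != 0xCAFEBABEL) {
                throw new IllegalArgumentException("not a class file: magic is not 0xCAFEBABE");
            }
            int minor = in.readUnsignedShort();
            int major = in.readUnsignedShort(); // e.g. 51 = Java 7, 52 = Java 8
            int cpCount = in.readUnsignedShort();
            return new ClassFileHeader(magic, minor, major, cpCount);
        } catch (IOException e) {
            throw new UncheckedIOException("class file header is truncated", e);
        }
    }
}
```

The magic number is masked to a `long` because `0xCAFEBABE` does not fit in a positive `int`; comparing it as a signed `int` would also work, but reads less clearly.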


- Assorted small Java utility snippets

g21121

java

1. Converting an array to a List

import java.util.Arrays;

Arrays.asList(Object[] obj);

2. Checking whether a String has content

import org.springframework.util.StringUtils;

if (StringUtils.hasText(str))

3. Checking whether a List has elements

import org.spring
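A plain-JDK sketch of the same three checks, assuming Java 11+ (for `String.isBlank`) and no Spring on the classpath. `hasText` here mirrors what Spring's `StringUtils.hasText` checks (non-null and at least one non-whitespace character); the List check is an assumption, since the original item is cut off, and the class name `SmallUtils` is hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class SmallUtils {
    // 1. Array to List. Arrays.asList returns a fixed-size view backed by the array,
    //    so copy it into an ArrayList if you need add/remove.
    static List<String> toList(String[] array) {
        return new ArrayList<>(Arrays.asList(array));
    }

    // 2. True if str is non-null and contains at least one non-whitespace character.
    static boolean hasText(String str) {
        return str != null && !str.isBlank();
    }

    // 3. True if the list is non-null and has at least one element.
    static boolean hasElements(List<?> list) {
        return list != null && !list.isEmpty();
    }
}
```

Calling `add` on the list returned directly by `Arrays.asList` throws `UnsupportedOperationException`, which is why the copy in `toList` is worth its small cost.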

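- A few tips for speeding up report design in FineReport

老A不折腾

finereport

1. When designing a report layout against large volumes of data on a remote server, it is best to first use an "empty SQL statement" that returns the columns in order. This speeds up design considerably; otherwise the template re-reads the data from the remote server every time you design, which is very slow!

2. Use a text editor (such as Notepad++) to edit SQL statements, which makes syntax checking much easier. When a query has many parameters and checking its syntax gets complicated, combine the log generated by FineReport with a third-party database client (such as PL/SQL) to run the query, and you can quickly pinpoint syntax errors.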

- Starting and stopping MySQL on Linux

墙头上一根草

How to start/stop/restart MySQL. 1. Starting: 1) with service: service mysqld start 2) with the mysqld script: /etc/init.d/mysqld start 3) with safe_mysqld: safe_mysqld & 2. Stopping: 1) with service: service mysqld stop 2) with the mysqld script: /etc/inin

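- A brief look at transaction management in Spring

aijuans

spring transaction management

A brief look at transaction management in Spring

By Tony Jiang@2012-1-20 Declarative transaction management in Spring

Take an XML configuration as an example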


<?xml version="1.0" encoding="UTF-8"?>

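- The invisible character 65279 (the UTF-8 BOM) in PHP

alxw4616

The invisible character 65279 (the UTF-8 BOM) in PHP

Today I ran into a problem: PHP outputs JSON, and the front end fails to parse it with a parsererror.

Debugging:

1. I compared the strings carefully and they were spelled correctly, so I suspected a non-printing character.

2. I converted the string to Unicode code points one character at a time and found a non-printing character, 65279, at the start of the string.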
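65279 is 0xFEFF, the byte-order mark (BOM), which UTF-8 encodes as the bytes EF BB BF at the very start of a file. The article's fix is not shown in this excerpt. As a language-neutral illustration in Java (whose UTF-8 decoder keeps the BOM as an ordinary character), detecting and stripping it before handing text to a JSON parser could look like this; `BomUtils` is a hypothetical name.

```java
// Hypothetical helpers for detecting and removing a leading byte-order mark.
class BomUtils {
    static final char BOM = '\uFEFF'; // code point 65279; UTF-8 bytes EF BB BF

    // Removes one leading BOM character, if present.
    static String stripBom(String s) {
        return (s != null && !s.isEmpty() && s.charAt(0) == BOM) ? s.substring(1) : s;
    }

    // True if raw bytes start with the UTF-8 encoding of the BOM.
    static boolean startsWithUtf8Bom(byte[] b) {
        return b.length >= 3
                && (b[0] & 0xFF) == 0xEF
                && (b[1] & 0xFF) == 0xBB
                && (b[2] & 0xFF) == 0xBF;
    }
}
```

Because the BOM is invisible when printed, comparing strings by eye (step 1 above) cannot reveal it; checking the first code point or the first three bytes does.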

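- Do you need to pass an object to call it? (Beginners should pay close attention to this)

百合不是茶

tips for passing and calling objects

A simple review of classes and objects. While working on projects I sometimes don't know how to call an object created from a class. With only a few classes it's easy to see, but once a project has a dozen or more classes it often becomes hard to follow

So that things stay clear later on, let me review how classes and objects are created, and how objects are called and passed (I wrote an earlier post on this)

Basic concepts of classes and objects:

In Java, everything is a class. A class has fields (attributes), methods, nested classes, and nested interf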
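A minimal sketch of passing one object to another so the second can call its methods; `Counter`, `Worker`, and `PassingDemo` are hypothetical. In Java what gets passed is a copy of the reference, so both workers below operate on the same `Counter` object.

```java
// Hypothetical class whose instance will be shared.
class Counter {
    private int count;

    void increment() { count++; }

    int get() { return count; }
}

// Receives a Counter created elsewhere instead of creating its own.
class Worker {
    private final Counter counter; // reference to an object passed in by the caller

    Worker(Counter counter) {
        this.counter = counter;
    }

    void doWork() {
        counter.increment(); // calls a method on the shared object
    }
}

class PassingDemo {
    public static void main(String[] args) {
        Counter shared = new Counter();
        Worker a = new Worker(shared);
        Worker b = new Worker(shared);
        a.doWork();
        b.doWork();
        System.out.println(shared.get()); // prints 2: both workers updated the same Counter
    }
}
```

If each `Worker` instead did `new Counter()` internally, the two counts would be separate and the caller would have no way to read them, which is usually the root of "how do I call that object from here?" confusion.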

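- JDK 1.5 AtomicLong example

bijian1013

java thread java multithreading AtomicLong

JDK 1.5 AtomicLong example

Class AtomicLong

A long value that may be updated atomically. See the java.util.concurrent.atomic package specification for a description of the properties of atomic variables. An AtomicLong is used in applications such as atomically incremented sequence numbers, and cannot be used as a replacement for a Long. However, this class does extend Number to allow uniform access by tools and utilities that deal with numerically-based classes.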
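A short sketch of the sequence-number use case mentioned above: several threads call `incrementAndGet()` on one shared `AtomicLong`, and no update is lost even without a `synchronized` block. The class name and thread counts are hypothetical, and lambdas (Java 8+) are used for brevity even though `AtomicLong` itself dates from JDK 1.5.

```java
import java.util.concurrent.atomic.AtomicLong;

class AtomicSequenceDemo {
    // Starts `threads` threads that each increment one shared sequence, then returns its value.
    static long countWithThreads(int threads, int incrementsPerThread) {
        AtomicLong sequence = new AtomicLong(0);
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    sequence.incrementAndGet(); // atomic read-modify-write, no lock needed
                }
            });
            workers[t].start();
        }
        try {
            for (Thread w : workers) {
                w.join(); // wait until every worker has finished
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for workers", e);
        }
        return sequence.get();
    }

    public static void main(String[] args) {
        System.out.println(countWithThreads(4, 10_000)); // prints 40000 on every run
    }
}
```

Replacing the `AtomicLong` with a plain `long` field and `value++` would usually print less than 40000, because `++` is a separate read, add, and write that threads can interleave.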

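- A custom RPC implementation in Java

bijian1013

java rpc

I came across a pure-Java RPC implementation online; it's quite good.

RPC stands for Remote Procedure Call. With RPC, you can use a program on a remote server just as you would use a local program. Below is a simple RPC call example, which shows how RPC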
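The article's own example is cut off here. As a minimal sketch under stated assumptions (hypothetical names `TinyRpc` and `HelloService`, plain sockets, Java serialization, one connection per call), the client side can be a JDK dynamic proxy that ships the method name, parameter types, and arguments to a server, which looks the method up by reflection and sends back the result.

```java
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.UncheckedIOException;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.net.ServerSocket;
import java.net.Socket;

class TinyRpc {
    // Hypothetical service contract shared by client and server.
    interface HelloService {
        String hello(String name);
    }

    static class HelloServiceImpl implements HelloService {
        public String hello(String name) {
            return "Hello, " + name;
        }
    }

    // Server side: each connection carries one call (method name, parameter types, arguments).
    static <T> ServerSocket export(Class<T> iface, T impl) {
        final ServerSocket server;
        try {
            server = new ServerSocket(0); // 0 = let the OS pick a free port
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        Thread acceptor = new Thread(() -> {
            while (!server.isClosed()) {
                try (Socket socket = server.accept();
                     ObjectInputStream in = new ObjectInputStream(socket.getInputStream());
                     ObjectOutputStream out = new ObjectOutputStream(socket.getOutputStream())) {
                    String methodName = in.readUTF();
                    Class<?>[] paramTypes = (Class<?>[]) in.readObject();
                    Object[] args = (Object[]) in.readObject();
                    Method method = iface.getMethod(methodName, paramTypes); // interface methods only
                    out.writeObject(method.invoke(impl, args)); // reply with the return value
                } catch (Exception e) {
                    // Closed server or failed call: this sketch simply drops the connection.
                }
            }
        });
        acceptor.setDaemon(true);
        acceptor.start();
        return server;
    }

    // Client side: a dynamic proxy turns each local call into one request over a socket.
    @SuppressWarnings("unchecked")
    static <T> T refer(Class<T> iface, String host, int port) {
        return (T) Proxy.newProxyInstance(iface.getClassLoader(), new Class<?>[] {iface},
                (proxy, method, args) -> {
                    try (Socket socket = new Socket(host, port);
                         ObjectOutputStream out = new ObjectOutputStream(socket.getOutputStream())) {
                        out.writeUTF(method.getName());
                        out.writeObject(method.getParameterTypes());
                        out.writeObject(args);
                        out.flush(); // send the stream header and the request together
                        ObjectInputStream in = new ObjectInputStream(socket.getInputStream());
                        return in.readObject();
                    }
                });
    }
}
```

Usage under these assumptions: `ServerSocket s = TinyRpc.export(TinyRpc.HelloService.class, new TinyRpc.HelloServiceImpl());` then `TinyRpc.refer(TinyRpc.HelloService.class, "127.0.0.1", s.getLocalPort()).hello("RPC")` returns `"Hello, RPC"`. A production RPC layer would add timeouts, connection reuse, error propagation back to the caller, and a safer wire format than Java serialization; those are deliberately left out.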

- 【RPC framework Hessian, part 1】Hessian RPC Hello World

bit1129

Hello world

What is Hessian

The Hessian binary web service protocol makes web services usable without requiring a large framework, and without learning yet another alphabet soup of protocols. Because it is a binary p

- 【Spark 95】Working with Spark SQL from the Spark Shell

bit1129

shell

In the Spark Shell, you can run Hive operations directly by creating a HiveContext

1. Working with tables that already exist in Hive

[hadoop@hadoop bin]$ ./spark-shell

Spark assembly has been built with Hive, including Datanucleus jars on classpath

Welcom

- F5: adding the client IP to a header

ronin47

when HTTP_RESPONSE {if {[HTTP::is_redirect]}{ HTTP::header replace Location [string map {:port/ /} [HTTP::header value Location]]HTTP::header replace Lo

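- java-61: In an array, a number minus any number to its right (note: to its right) gives a pair difference. Find the maximum of all pair differences. For example, in the array {2, 4, 1, 16, 7, 5,

bylijinnan

java

The idea comes from:

http://zhedahht.blog.163.com/blog/static/2541117420116135376632/

Here is a Java version I wrote: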

public class GreatestLeftRightDiff {

/**

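* Q61. In an array, a number minus any number to its right (note: to its right) gives a pair difference.

* Find the maximum of all pair differences. For example, in the array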
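The linked post's solution is not reproduced in this excerpt. One common linear-time approach, not necessarily the author's: scan left to right, keep the largest number seen so far, and at each position compare "largest on the left minus current number" with the best difference found. A sketch (class name hypothetical):

```java
// Single pass: the best pair ending at position i uses the largest number to its left.
class MaxPairDiff {
    static long maxDiff(int[] a) {
        if (a == null || a.length < 2) {
            throw new IllegalArgumentException("need at least two numbers");
        }
        long maxLeft = a[0];        // largest value among a[0..i-1]
        long best = Long.MIN_VALUE; // best difference found so far
        for (int i = 1; i < a.length; i++) {
            best = Math.max(best, maxLeft - a[i]); // left number minus right number
            maxLeft = Math.max(maxLeft, a[i]);
        }
        return best;
    }
}
```

This runs in O(n) time with O(1) extra space, compared with O(n²) for checking every pair. It returns a `long` so that extreme `int` inputs cannot overflow the subtraction, and it can return a negative value when the array is strictly increasing.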

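- MongoDB indexes

开窍的石头

mongoDB indexes

In this section we'll look at how to create indexes in Mongo

Get the index information for the current query

db.user.find({_id: 12}).explain();

cursor: BasicCursor means no index was used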


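- [Hardware and systems] Getting through the summer peak

comsci

systems

Judging by the temperatures over the past few days, this summer's heat may last quite a long time

So, starting now, prepare to get through the hot summer...

Each room should have a standing electric fan and an air conditioner (the air conditioner's power rating is closely tied to the room's floor area)

Places to sit and lie down should have cooling pads, and beds should have cool mats

The computer case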

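- A company website built on ThinkPHP

cuiyadll

industry systems

The back end is built on ThinkPHP and the front end on jQuery and Bootstrap. Co.MZ enterprise system

A lightweight enterprise website management system

Runtime environment: PHP 5.3+, MySQL 5.0

System preview

System download: http://www.tecmz.com

Preview URL: http://co.tecmz.com

Adapts to all kinds of devices

Responsive website design makes a site friendlier to users, and for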

- Transaction and redelivery in JMS (JMS transactions and the failed-message redelivery mechanism)

darrenzhu

jms transactions acknowledgement MQ acknowledge

JMS Message Delivery Reliability and Acknowledgement Patterns

http://wso2.com/library/articles/2013/01/jms-message-delivery-reliability-acknowledgement-patterns/

Transaction and redelivery in

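- The complete guide to adding a hard disk in CentOS

dcj3sjt126com

linux centos hardware

How Linux names hard disks:

sda: the first SCSI disk

hda: the first IDE disk

scd0: the first SCSI CD-ROM drive (a USB optical drive also shows up this way)

Generally, the "fdisk -l" comm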

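- Yii2 RESTful web service routing

dcj3sjt126com

PHP yii2

Routing

With the resource and controller classes ready, you can access the resources using URLs like http://localhost/index.php?r=user/create, similar to what you can do with normal Web applications.

In practice, you usually want to enable pretty URLs and take advantage of HTTP verbs. For example, a request POST /users would mean accessing the user/create action. This can be done easily by configuring the urlManager application component as follows: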

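- MongoDB queries (4): cursors and pagination [8]

eksliang

mongodb MongoDB cursors MongoDB deep pagination

When reposting, please credit the source: http://eksliang.iteye.com/blog/2177567

1. Cursors

The database returns the results of find through a cursor. Client-side cursor implementations usually give you effective control over the final results. Defining a cursor in the shell is simple: assign the query result to a variable (a variable declared with var is local), and a cursor is created, as shown below: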

> var

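- The four Activity launch modes and onNewIntent()

gundumw100

android

Android Activity launch modes explained

In Android, every screen is an Activity, and switching screens actually means instantiating different Activities. An Activity's launch mode determines how it is launched and run.

There are four Activity launch modes in Android:

Setting an Activity's launch mode: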

<acti

- A CSS3 birthday cake an engineer made for his girlfriend

ini

html Web html5 css css3

Live preview: http://keleyi.com/keleyi/phtml/html5/29.htm

The code is as follows:

<!DOCTYPE html>

<html>

<head>

<meta charset="UTF-8">

<title>A CSS3 birthday cake an engineer made for his girlfriend - 柯乐义<

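- Learning Servlet by reading the source (1): GenericServlet source analysis

jzinfo

tomcat Web servlet web applications network protocols

The core of the Servlet API is the javax.servlet.Servlet interface; every Servlet class (abstract or your own) must implement it. The Servlet interface defines five methods, three of which the Servlet container calls at specific stages of a servlet's lifecycle.

First, the source of the javax.servlet.Servlet interface: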

package

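- Advanced Java: converting between VO (DTO) and PO (DAO)

snoopy7713

java VO Hibernate po

PO stands for Persistence Object; VO stands for Value Object.

The main difference between VO and PO: a VO is an independent Java object, while a PO is an object that Hibernate has taken into its entity container (Entity Map). A PO represents the Hibernate entity corresponding to a record in the database, and changes to it are written to the database when the transaction commits.

In practice, this VO is used as a Data Transfer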
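A minimal hand-written conversion sketch, assuming a `User` entity; `UserPO`, `UserVO`, and `UserConverter` are hypothetical and no Hibernate classes are used. Copying field by field keeps the VO detached, so later changes to it do not reach the persistence context unless they are copied back explicitly.

```java
// Hypothetical persistent object; with Hibernate this would be a mapped entity.
class UserPO {
    private final Long id;
    private String name;
    private final String passwordHash; // stored in the database, never sent to the view layer

    UserPO(Long id, String name, String passwordHash) {
        this.id = id;
        this.name = name;
        this.passwordHash = passwordHash;
    }

    Long getId() { return id; }
    String getName() { return name; }
    void setName(String name) { this.name = name; }
    String getPasswordHash() { return passwordHash; }
}

// Hypothetical value/transfer object: a plain Java object with no persistence context.
class UserVO {
    private final Long id;
    private final String name;

    UserVO(Long id, String name) {
        this.id = id;
        this.name = name;
    }

    Long getId() { return id; }
    String getName() { return name; }
}

class UserConverter {
    // PO -> VO: copy only the fields the caller is allowed to see.
    static UserVO toVO(UserPO po) {
        return po == null ? null : new UserVO(po.getId(), po.getName());
    }

    // VO -> PO: apply editable fields onto a PO loaded in the current transaction.
    static void copyInto(UserVO vo, UserPO po) {
        po.setName(vo.getName());
    }
}
```

Writing the mapping by hand makes it explicit which fields cross the boundary; here `passwordHash` never leaves the PO, and `id` cannot be changed through a VO.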

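- MongoDB group by date: an aggregation query that groups by date to count each day's data (message volume)

qiaolevip

Improve a little every day, learning never ends, mongodb, 纵观千象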

/* 1 */

{

"_id" : ObjectId("557ac1e2153c43c320393d9d"),

"msgType" : "text",

"sendTime" : ISODate("2015-06-12T11:26:26.000Z")

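- Java day 18: commonly used classes (1)

Luob.

Math Date System Runtime Random

The System class

import java.util.Properties;

/**

* System:

* out: standard output, the console by default

* in: standard input, the keyboard by default

*

* Describes some information about the system

* Get system properties: Properties getProperties();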

*

*

*

*/

public class Sy
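The class in the excerpt is cut off. A small sketch of the `System` features its comment lists (standard output and reading system properties), plus setting a custom property; the class name `SystemInfoDemo` and the key `demo.mykey` are hypothetical.

```java
import java.util.Properties;

class SystemInfoDemo {
    // Copies a few standard system properties into a new Properties object.
    static Properties snapshot() {
        Properties all = System.getProperties(); // live view of the JVM's system properties
        Properties picked = new Properties();
        for (String key : new String[] {"java.version", "os.name", "user.dir"}) {
            String value = all.getProperty(key);
            if (value != null) {
                picked.setProperty(key, value);
            }
        }
        return picked;
    }

    public static void main(String[] args) {
        // System.out is standard output, the console by default.
        System.out.println("java.version = " + System.getProperty("java.version"));
        // A custom property is visible to all code running in the same JVM.
        System.setProperty("demo.mykey", "hello");
        System.out.println(System.getProperty("demo.mykey")); // prints hello
        System.out.println(snapshot());
    }
}
```

`System.getProperties()` returns the live object, so the copy in `snapshot()` keeps callers from accidentally modifying JVM-wide settings.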

- maven

wuai

maven

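1. Install Maven: extract the archive, add M2_HOME, and add Maven to the PATH environment variable

2. Create a maven_home folder and a project mvn_ch01 inside it. Under mvn_ch01 create src and pom.xml; under src create main and test; under main create a java folder

3. Write a class: under the java folder, create one folder per level of the class's package, and put the class in the innermost folder

4. Go into mvn_ch01

4.1 mvn compile: after it runs, in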