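- [2025] Embrace the Future, Forge Ahead

摔跤猫子

other, year-end review, embrace the future, forge ahead, deep thinking

What kind of year was 2024? In the scroll of history, 2024 was a momentous year: the wave of artificial intelligence arrived and countless Chinese-developed large models emerged. ChatGPT was released in November 2022. Like a boulder dropped into a still lake, it stirred up wave after wave: it reached 1 million users in just five days, drew the attention of the whole world, and set off an AI technology race. Facing the blue ocean of large models, players of every kind tried to board this train of the times and rushed to stake out positions. Company, model name, release date: 澜舟科技, 孟子GPTV120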

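- No need to wait for 2038: MySQL's TIMESTAMP can already crash our system

大老二在不在

programmer, Java, interview, java, backend, programmer life

MySQL has three common date and time types: DATE, DATETIME, and TIMESTAMP. DATE represents a date without a time, in the format YYYY-MM-DD. DATETIME and TIMESTAMP represent both a date and a time, commonly formatted as YYYY-MM-DD HH:MM:SS, optionally with up to six fractional digits for microseconds. Unlike DATETIME, TIMESTAMP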

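- MySQL's timestamp type: the timestamp type and time zones in MySQL databases

weixin_39758696

The MySQL timestamp type covers the range between '1970-01-01 00:00:01' and '2038-01-19 03:14:07'. A value outside this range is recorded as '0000-00-00 00:00:00' (when strict SQL mode is off). An important characteristic of this type is that the stored time is closely tied to the time zone. The range above is expressed in UTC (Coordinated Universal Time), the standard time at 0 degrees longitude. In China, everyday time follows the time zone of the capital, Beijing,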
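The upper bound quoted above is the largest value of a signed 32-bit count of seconds since the Unix epoch. The following is a minimal Python sketch (not MySQL code; the constant and variable names are illustrative assumptions) that checks both bounds and shows how the same instant reads in Beijing time (UTC+8):

```python
from datetime import datetime, timedelta, timezone

# Largest signed 32-bit second count since 1970-01-01 00:00:00 UTC.
MAX_INT32_SECONDS = 2**31 - 1

# Convert the two boundary second counts to UTC datetimes.
upper_utc = datetime.fromtimestamp(MAX_INT32_SECONDS, tz=timezone.utc)
lower_utc = datetime.fromtimestamp(1, tz=timezone.utc)

# Illustrative fixed offset for Beijing time (UTC+8, no daylight saving).
BEIJING = timezone(timedelta(hours=8))
upper_beijing = upper_utc.astimezone(BEIJING)

fmt = "%Y-%m-%d %H:%M:%S"
print("lower bound (UTC):      ", lower_utc.strftime(fmt))
print("upper bound (UTC):      ", upper_utc.strftime(fmt))
print("upper bound (UTC+8):    ", upper_beijing.strftime(fmt))
```

The same stored instant is displayed eight hours later on a UTC+8 clock, which is why the visible limits of the range shift with the time zone in use.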

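- MySQL timestamp insert NULL error: making a MySQL TIMESTAMP column nullable

白咸明

mysql, timestamp, insert null error

Today I ran into a problem: when creating a new table in MySQL, a TIMESTAMP column defaults to NOT NULL. I searched online and found many people claiming it simply cannot be NULL (what kind of attitude is that?). In fact, making it nullable is simple: just add NULL after the column definition, e.g. CREATE TABLE `TestTable` (Column1 INT NOT NULL COMMENT 'Column1', Column2 TIMESTAMP NULL COMMENT 'Column2', PRIMAR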

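- [Open source, free] Kettle job scheduling, automated operations, data mining, Informatica: the batch job tool taskctl

加菲盐008

Kettle, ETL job scheduling tool, taskctl, operations, database, linux, big data, data mining

Follow the WeChat official account "taskctl" and reply with the keyword "领取" to obtain a license. Product overview: taskctl is an ETL job cluster scheduling tool developed over 10 years by 成都塔斯克信息技术公司. The product is novel in concept, complete in architecture, full-featured, simple to use, and smooth to operate. It has a complete scheduling core and flexible extensions, together with a complete application system. It has been adopted by more than 1,000 leading customers in finance, government, manufacturing, retail, healthcare, the internet, and other sectors. (Image from the internet.) In 2020 the pandemic swept the globe and hit the whole market economy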

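- New Star Program Day 11 [Data Structures and Algorithms]: Sorting Algorithms 2

京与旧铺

java learning, sorting algorithms, java, algorithms

Blog home: 京与旧铺's blog. Follows, likes, bookmarks, and comments are welcome. This article is original work by 京与旧铺, first published on CSDN. Series: java learning. Reference course: 尚硅谷. First published: May 13, 2022. What you do in March and April will bear fruit in August and September, so let's keep going together. If you think the article is decent, please like, bookmark, and follow. Finally, the author is a newcomer with a lot still to improve; corrections from experts are welcome, and let's learn together. Recommended: a mock interview and practice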

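- Overview of BP neural networks, with prediction implementations in Python and MATLAB

追蜻蜓追累了

neural networks, regression, algorithms, deep learning, machine learning, heuristic algorithms, lstm, gru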

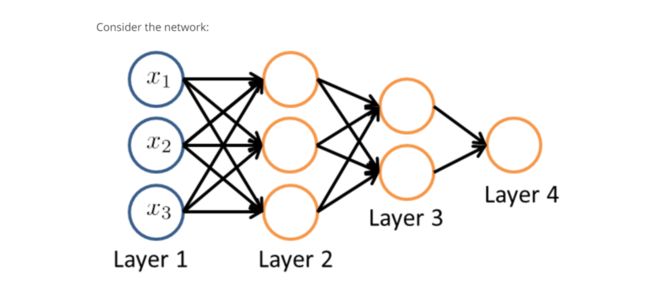

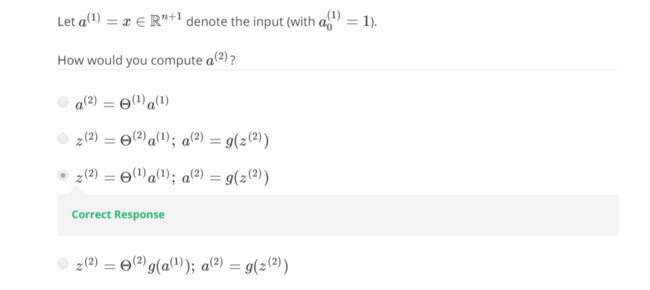

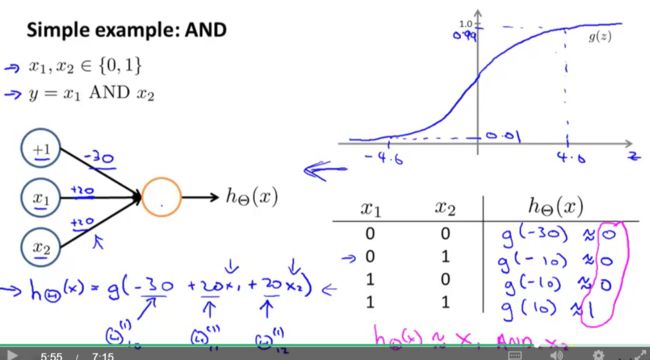

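1. Background. 1.1 Origins of artificial neural networks: Artificial neural networks (ANNs) are inspired by biological neural networks and model how neurons in the brain connect and process information. Although McCulloch and Pitts proposed a mathematical model as early as 1943, artificial neural networks only became widely studied in the 1980s. 1.2 The rise of BP neural networks: The backpropagation (BP) algorithm is a product of the 1980s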

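- React Hooks are built on JS closures, but closures also cause plenty of trouble

头脑旋风

javascript, react.js, frontend, react native

Before the article begins, I hope you will support the WeChat mini program I developed independently, "头脑旋风" (or scan my avatar in WeChat to open it). Thanks for the support. Contents: 1. Closures in JS 2. Closures in React Hooks 3. Stale closures 4. Fixing stale closures 5. Stale closures in Hooks; Summary. 1. Closures in JS: Below is a factory function createIncrement(i) that returns an increment function. Afterwards, each call to increment increases the internal counter by i. fun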

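- How to implement a magnifier effect on Android

咖啡老师

android

1. Preface: I have not posted native Android content in a long time. For the past few years I worked on cross-platform development; after a recent job change I am back on native, a familiar but somewhat rusty environment, which stirs up a lot of feelings. Recently I have been preparing for map-interaction requirements. The features are very complex, especially the interaction, but however complex an interaction is, it can be solved by breaking it down piece by piece and conquering each part, as with the magnifier feature covered next. 2. Feature design: The scenario is that, when operating the map, fine-grained (pixel-level) operations near the edges need to be magnified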

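- [DAY 2] PHP Data Structures and Algorithms: Sorting: Bubble Sort

我是妖怪_

daily learning, bubble sort, algorithms, php

Approach: loop and compare one by one. Starting from the first element, compare it with the next number and swap the two if it is greater; each pass moves the largest remaining element to the end. (Compare adjacent elements pairwise in turn, so that the largest (or smallest) elements end up at the top, the next-to-top, and so on.) $arr=array(3,2,5,6,1,8,4,9); function bubble_sort($arr){ $len=count($arr); // check whether the array is empty if($len$arr[$i+1]){$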
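The approach described above can be sketched as follows. This is a minimal illustration in Python rather than the article's PHP, and it is not the author's truncated code: the function name mirrors the article's `bubble_sort`, while the early-exit flag `swapped` is an added assumption that is not part of the description.

```python
def bubble_sort(values):
    """Return a sorted copy of values using adjacent compare-and-swap passes."""
    items = list(values)  # work on a copy so the input is left unchanged
    # After each pass the largest remaining element sits at index `end`.
    for end in range(len(items) - 1, 0, -1):
        swapped = False  # assumption: stop early once a pass makes no swap
        for i in range(end):
            if items[i] > items[i + 1]:
                items[i], items[i + 1] = items[i + 1], items[i]
                swapped = True
        if not swapped:
            break
    return items


arr = [3, 2, 5, 6, 1, 8, 4, 9]  # same sample data as the article
sorted_arr = bubble_sort(arr)
print(sorted_arr)
```

An empty or single-element list never enters the loop, so no separate emptiness check is needed in this version.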

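- Python crawler for book listings on 转转商超

Python数据分析与机器学习

crawler, python, web crawler, crawler

1 Basic theory. 1.1 Concepts: A web crawler, also called a web spider, web ant, or web robot, automatically collects information from the web according to rules we define; these rules are called the crawling algorithm. It is an automated program for fetching data from the internet. A crawler simulates browser behavior to visit pages and extract information, which may be structured data (such as tables) or unstructured text. A crawl typically involves sending HTTP requests, parsing HTML documents, and extracting the required data. 1.2 Technology: 1. Request libraries: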

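- Summary of common hadoop commands

m0_67402026

java, java, backend

1. List the files in a directory: hadoop fs -ls [directory], e.g. hadoop fs -ls -h /lance 2. Store a local folder on hadoop: hadoop fs -put [local directory] [hadoop directory], e.g. hadoop fs -put lance / 3. Create a new directory under a given hadoop directory: hadoop fs -mkdir [directory], e.g. hadoop fs -mkdir /lance 4. Create a new file under a given hadoop directory, using touch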

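- Numeric types in Python

不爱敲代码的小李0812

Python Level 2 exam guide, python, programming languages, backend

Contents: 1. Overview 2. Integer type 3. Floating-point numbers 4. Complex type. 1. Overview: 1) Python provides three numeric types: integer, floating-point, and complex, corresponding to integers, real numbers, and complex numbers in mathematics. 2) 1010 is an integer, 10.10 is a floating-point number, and 10+10j is a complex number. 2. Integer type: 1) It matches the mathematical concept of integers and has no range limit. 2) Integers can be written in four bases: decimal, binary, octal, and hexadecimal. Decimal is the default; the other bases require a leading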
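A small sketch of the points above, using the article's own three literals. The base prefixes `0b`, `0o`, and `0x` are standard Python syntax but fall outside the truncated teaser, and the variable names are illustrative assumptions.

```python
# The three literals named in the overview, one per numeric type.
int_value = 1010
float_value = 10.10
complex_value = 10 + 10j

type_names = [type(v).__name__ for v in (int_value, float_value, complex_value)]
print(type_names)

# The same integer written in decimal, binary, octal, and hexadecimal.
same_value = [10, 0b1010, 0o12, 0xA]
print(same_value)

# Integers have no fixed range: this value does not fit in 64 bits.
big = 2**100
print(big)
```

All four entries of `same_value` print as 10, because the prefix only affects how the literal is written, not the value stored.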

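- Introduction to the Class-related API

uncleqiao

java basics, java

Contents: Version conventions. API overview: 1. Methods for obtaining class information 2. Methods for obtaining class members (fields, methods, constructors) 3. Methods for operating on class members 4. Type-checking and type-conversion methods 5. Array-related methods 6. Annotation-related methods 7. Helper methods for class loading and reflection. API tests: isA checks; isSynthetic (detecting dynamic classes); getting generic types; getting the directly inherited generic superclass; local classes in methods; local classes in constructors; inner classes; checking class names; detecting anonymous classes; detecting local classes; detecting member classes; getting member classes; cast; asSubcl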

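- Java 8 Stream API explained

·云扬·

Java, #JavaSE, java, programming languages, learning, 1024 Programmer's Day, notes

Java 8 introduced a brand-new API, the Stream API, which has nothing to do with InputStream and OutputStream in the traditional java.io package. The Stream API was introduced mainly to make programmers more productive when working with collections (Collection). Much of this gain comes from the lambda expressions introduced at the same time, which greatly improve programming efficiency and readability. 1. What is a Stream? A Stream can be seen as a high-level iterator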

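- Hubei Mobile 魔百盒 ZN90_Hi3798MV300/MV310: slimmed-down card-flash firmware package with the 当贝 launcher

fatiaozhang9527

set-top box flashing firmware, 魔百盒 flashing, 魔百盒 firmware, China Mobile 魔百盒, set-top box ROM, box ROM

Features: 1. For flashing TV boxes of the matching model; 2. Re-enables app-store installation and USB-drive APK installation, which the stock firmware blocks; 3. Modified DNS, works with all three carriers; 4. Many useless preinstalled apps removed, for faster operation and much more free storage; 5. App installation restrictions removed; 6. Supports auto-start on boot, boot password lock, child app lock, app hiding, automatic switch to HDMI on boot, and other extras. 魔百和 ZN90 OEM set-top box flashing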

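- M302H-ZN-Hi3798MV300/MV300H: card-flash firmware package with the clean 当贝 launcher

fatiaozhang9527

set-top box flashing firmware, 魔百盒 flashing, 魔百盒 firmware, China Mobile 魔百盒, set-top box ROM, box ROM

Tutorial included. Features: 1. For flashing TV boxes of the matching model; 2. Re-enables app-store installation and USB-drive APK installation, which the stock firmware blocks; 3. Modified DNS, works with all three carriers; 4. Many useless preinstalled apps removed, for faster operation and much more free storage; 5. App installation restrictions removed; 6. Supports auto-start on boot, boot password lock, child app lock, app hiding, automatic switch to HDMI on boot, and other extras. 魔百和 M302H-Z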

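- JMeter API stress testing

test猿

stress testing, jmeter

1. Process and steps of API stress testing. API stress testing typically involves the following stages: 1. Define test goals and metrics. Before starting, first clarify the goals and metrics of the test. These may include the API's response time, throughput, and error rate under different levels of concurrent requests, and should be set according to business requirements, system design, and performance expectations. 2. Prepare the test environment and tools. Stress testing requires a suitable environment and tools. The test environment should resemble production as closely as possible so that the results are more accurate. Common stress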

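- GarageBand: recording and editing audio tracks tutorial_2024-07-17_16-51-15.Tex

chenjj4003

game development 2, audio and video, automation, operations, games, unity

GarageBand: recording and editing audio tracks tutorial. GarageBand basics: launching GarageBand and creating a new project. Open GarageBand: on a Mac, click the GarageBand icon in the Dock, or find and launch GarageBand from the Applications folder in Finder. On an iOS device, find the GarageBand app on the home screen and tap to open it. Create a new project: choose "File" > "New" or click the "+" button in the top-left corner of the screen. In the window that appears, choose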

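- Kubernetes (k8s) architecture design

boonya

#k8s, kubernetes, containers, cloud native

Contents: Nodes: management; node self-registration; manual node administration; node status; addresses; conditions; capacity and allocatable; info; node controller; node capacity; node topology; graceful node shutdown; what's next. Communication between control plane and nodes: node to control plane; control plane to node; API server to kubelet; API server to nodes, pods, and services; SSH tunnels; Konnectivity service. Controllers: controller pattern; control via API server; direct control; desired versus current state; design; ways of running controllers; what's next. Cloud controller manager: concepts; design; cloud controller manager functions; node contro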

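- Explore the wonderful world of Jiron!

jiron开源

platform development, flink, big data, data warehouse, hive

Dear explorer, welcome to Jiron's digital territory! We are honored to meet you here. In return for your attention and support, here are Jiron's GitHub and Gitee repositories, which you are warmly invited to explore and contribute to: GitHub: https://github.com/642933588/jiron-cloud Gitee: https://gitee.com/642933588/jiron-cloud Please do not hesitate to give it a star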

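- DolphinScheduler × Jiron: building an efficient, intelligent data scheduling ecosystem

jiron开源

platform development, flink, big data, hadoop, hive, sqoop, spring cloud, sentinel

Jiron on GitHub: https://github.com/642933588/jiron-cloud Gitee: https://gitee.com/642933588/jiron-cloud DolphinScheduler is an open-source distributed task scheduling platform designed for workflow scheduling and data governance in big data scenarios. Integrating DolphinSchedule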

- Deploying a RabbitMQ cluster on k8s (using rabbitmq-cluster-operator)

仇誉

rabbitmq, rabbitmq, kubernetes

1. Download and install cluster-operator: kubectl apply -f rabbitmq-cluster-operator.yml (Baidu Netdisk link, extraction code: qy99) 2. Deploy the RabbitMQ instance: kubectl apply -f rabbitmq.yaml Change the storage class to your own (e.g. managed-nfs-storage) #rabbitmq.yaml --- apiVersion: rabbitmq.com/v1beta

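- [Linux Adventures] My first encounter with Linux

2401_89210258

linux, state pattern, operations

Linux file path types; Introduction to common Linux commands; Linux in everyday life; Summary. Introduction to frontend and backend: frontend and backend are the two main parts of a modern web application. 1. Frontend: The frontend (also called the client side) covers everything involved in displaying content to the user. Frontend development uses technologies such as HTML, CSS, and JavaScript to build and maintain the web application's user interface. 2. Backend: The backend (also called the server side) is the non-user-interface part of a web application. Backend development involves using different programming langu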

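- 2024 Vue interview questions roundup

2401_89210258

vue.js, frontend, javascript

Flowchart as follows: Vue core knowledge: syntax. 1. What is the difference between v-if and v-show? Similarity: both determine whether a DOM node is displayed. Differences: a. Implementation: v-if removes the element node from the DOM tree or recreates it, depending on whether the bound value is truthy. v-show only changes the element's CSS, namely the value of the display property, and the element always stays in the DOM tree. b. Compilation: switching v-if involves a partial compile/teardown process, during which internal event listeners are properly destroyed and recreated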

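- [Deep Learning] PyTorch: importing and exporting model parameters

T0uken

deep learning, pytorch, artificial intelligence

PyTorch is a widely used deep learning framework, and managing its model parameters well matters for training, inference, and deployment. This article covers PyTorch model parameter operations in full, including how to export, import, and download model parameters. What are model parameters? Model parameters are the variables in a deep learning model that are optimized through training, such as the weights and biases of a neural network. They are stored in PyTorch's torch.nn.Module objects and can be accessed as follows: import torch im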

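- RabbitMQ: message reliability and delayed messages

mikey棒棒棒

java, middleware, programming languages, message reliability, dead-letter exchange, lazy queue, rabbitmq

Contents: Message loss. 1. Publisher reliability: 1.1 Publisher retry mechanism 1.2 Publisher confirm mechanism 1.3 Implementing publisher confirms: (1) Enable publisher confirms (2) Define ReturnCallback (3) Define ConfirmCallback. 2. MQ persistence: 2.1 Data persistence 2.2 LazyQueue: 2.2.1 Configuring lazy mode in the console 2.2.2 Configuring lazy mode in code 2.2.3 Switching an existing queue to lazy mode. 3. Consumer reliability: 3.1 Consumer acknowledgement mechanism 3.2 Failure retry mechanism 3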

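- Shell flow control

般木h

linux, operations, servers

Flow control statements change the order in which a program runs. 1. if statement. Format: if list; then list; [ elif list; then list; ] ... [ else list; ] fi 1.1 Single branch: if condition; then command; fi Example: #!/bin/bash N=10; if [ $N -gt 5 ]; then echo yes; fi # bash test.sh prints yes 1.2 Two branches: if condition; then command; else command; fi Example 1: #!/bin/bash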

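- [Getting started with Unity game development from scratch: C# part 46] C# supplementary topics: named and optional parameters

向宇it

unity, c#, game engine, editor, programming languages

Since everyone's background differs and not everyone needs to do both 2D and 3D development, I have split [Getting started with Unity game development from scratch] into a C# part, a Unity general part, a Unity 3D part, and a Unity 2D part. [C# part]: covers basic C# syntax, including variables, data types, operators, flow control, and object orientation; suitable for beginners with no programming background. [Unity general part]: covers general Unity fundamentals, including the Unity interface, Unity scripts, unit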

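- MATLAB programs written to order: coding, image processing, BP neural networks, machine learning, deep learning, python

matlabgoodboy

deep learning, matlab, image processing

1. Install the required libraries. First make sure the necessary Python libraries are installed. If not, run: pip install numpy matplotlib tensorflow opencv-python 2. Image preprocessing. We will use OpenCV to load and preprocess the image data. Assume you have an image dataset in which each class's images are stored in a separate folder. import os import cv2 import numpy a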

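- Converting a Dell laptop from Windows 8 to Windows 7

sophia天雪

win7, Dell, system conversion, win8

Detailed guide to converting a Dell system from Windows 8 to Windows 7

Step 1: create a virtual optical drive on a USB drive:

1) Download and install UltraISO: a registration code can be found by searching online.

2) Launch UltraISO and click "File" -> "Open" to open the prepared ISO image fi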

- Notes on using BeanUtils.copyProperties

bylijinnan

java

BeanUtils.copyProperties VS PropertyUtils.copyProperties

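The biggest difference between the two is:

BeanUtils.copyProperties performs type conversion, whereas PropertyUtils.copyProperties does not.

Because it performs type conversion, BeanUtils.copyProperties is slower than PropertyUtils.copyProp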

- Garbled Chinese text in MyEclipse

0624chenhong

MyEclipse

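1. Set the default encoding for common new file types, that is, the encoding in which files are saved.

Without any MyEclipse configuration, the default file encoding generally matches that of the Simplified Chinese operating system (e.g. Windows 2000, Windows XP), namely GBK.

On a Simplified Chinese system, ANSI encoding means GBK; on a Japanese system, ANSI encoding means JIS.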

Window-->Preferences-->General -

- Sending email

不懂事的小屁孩

send email

import org.apache.commons.mail.EmailAttachment;

import org.apache.commons.mail.EmailException;

import org.apache.commons.mail.HtmlEmail;

import org.apache.commons.mail.MultiPartEmail;

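- Animation collection

换个号韩国红果果

html, css

Animation means changing from one style to another. keyframes should always define both the 0% and 100% states.

1. transition: creating a zoom effect when the mouse hovers over an image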

css

.wrap{

width: 340px;height: 340px;

position: absolute;

top: 30%;

left: 20%;

overflow: hidden;

bor

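- The most common attack on the web turns out to be SQL injection

蓝儿唯美

sql injection

NTT research shows that although SQL injection (SQLi) attacks are thoroughly documented and well known, web applications remain the hardest-hit targets of SQLi attacks.

The 2015 Global Threat Intelligence Report, published by the information security and risk management company NTT Com Security, shows that SQLi is currently the most popular way for hackers to attack web applications. The report analyzed 6 billion attacks that occurred in the previous year and found SQLi to be the most common web application attack. Of web application attacks worldwide, SQLi attacks account for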

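- Java notes 2

a-john

java

Class encapsulation:

1. In Java, an object is an encapsulated unit. Encapsulation combines an object's attributes and services into a single independent unit and hides the object's internal details (especially private data) as far as possible.

2. Purpose: to prevent code outside the object from freely accessing its internal data (such as attributes), so that software errors stay localized and mistakes are fewer and easier to track down.

3. Put simply, "the process of hiding attributes, methods, or implementation details" is called encapsulation.

4. Characteristics of encapsulation:

4.1 Setting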

- [Andengine]Error:can't creat bitmap form path “gfx/xxx.xxx”

aijuans

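learning, Android, errors encountered

I first hit this error a long time ago and paid it no attention, treating it as a bug I did not understand, because every texture and texture region was fine and yet the error kept appearing.

Yesterday I asked our artist for an image. It was supposed to be a PNG with a transparent background, but she gave me a JPG. When I explained, she said it could not be changed because there was no PNG save option.

I took a look and asked her for the PSD file; luckily I know a little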

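- A Traditional-to-Simplified Chinese conversion program I wrote

asialee

java, conversion, Traditional Chinese, filter, Simplified Chinese

Today I was investigating a task: converting Traditional Chinese to Simplified Chinese with a Java filter. I wrote a demo and am sharing it here; criticism and corrections are welcome.

The approach is to override the request's parameter-retrieval methods and do the conversion there.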

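- Android intents and intent listeners

百合不是茶

android, explicit intent, implicit intent, intent listener

An Intent passes data between activities. Intents are delivered either explicitly or implicitly.

Explicit intent: an Intent whose component name is specified explicitly by calling Intent.setComponent(), Intent.setClassName(), or Intent.setClass(). An explicit intent states exactly which component the Intent should be delivered to.

Implicit intent: does not name the target; based on the set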

- The new @Value annotation in Spring 3

bijian1013

java, spring, @Value

In Spring 3.0, @Value can be used to inject key-value pairs from files such as xxx.properties. For example:

1. First add the following to applicationContext.xml:

<beans xmlns="http://www.springframework.

- Enabling CXF logging in JBoss

sunjing

log, jboss, CXF

1. Add system-properties to the standalone.xml configuration file:

<system-properties> <property name="org.apache.cxf.logging.enabled" value=&

- [Hadoop 3] Deploying a Hadoop cluster on CentOS 7 x86_64: compiling the Hadoop source code

bit1129

centos

Software required for the build

FindBugs 3.0.0

Maven3.2.3

Ant

JDK1.7.0_67

protobuf-2.5.0

Hadoop 2.5.2 source package

FindBugs 3.0.0

http://sourceforge.jp/projects/sfnet_findbug

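- Using and extending the Struts2 validation framework

白糖_

framework, xml, bean, struts, regular expressions

Struts2 can validate form data submitted from the frontend, usually in one of two ways:

1. Validate in the Action class through validatexx methods. This approach is simple and is not covered further here.

2. Write an xx-validation.xml file to perform form validation. When the user submits a form, Struts runs the XML validation first; if validation fails, the request is not allowed to reach the target action.

This article describes how Struts2 validates through XML files and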

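- Notes: reflections

braveCS

reflections

Looking back over reflections I wrote earlier, I sometimes find that I was naive, and it also helps me rediscover my original intentions.

2015-1-11 1. Being able to study things I am interested in outside of work is already a blessing;

2. I need to change myself: I cannot stay in the same area forever. I have to break out of my safe zone and comfort zone in order to improve and develop in a better direction;

3. Reflect more and think more; learn to use tools rather than become a slave to them;

4. Set aside one fixed block of time each day to read the latest news and stream-style blo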

- The Beauty of Programming (编程之美): longest increasing subsequence in an array

bylijinnan

The Beauty of Programming (编程之美)

import java.util.Arrays;

import java.util.Random;

public class LongestAccendingSubSequence {

/**

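* The Beauty of Programming: longest increasing subsequence in an array

* The book's solution is easy to understand

* Another approach, not mentioned in the book: sort the array (ascending) to get a new array,

* then compute, for the sorted array and the original arr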

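- Reading notes 5

chengxuyuancsdn

duplicate submission, struts2 token validation

1. Duplicate submission

2. Struts2 token validation

3. Notes on returning XML with response

1. Duplicate submission

(1) Scenarios

(1-1) Clicking the submit button twice.

(1-2) Using the browser's back button to repeat an earlier action, which resubmits the form.

(1-3) Refreshing the page

(1-4) Resubmitting the form from the browser history.

(1-5) Duplicate HTTP requests from the browser.

(2) Solutions

(2-1) Disable the submit button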

(2-2)

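- [Space-time and exploration] The possibility of a globally coordinated second Philadelphia Experiment

comsci

Around World War II, a physics experiment was carried out aboard a naval vessel with Einstein taking part: the Philadelphia Experiment

To this day it leaves us all with many mysteries.....

You can look up the details of the Philadelphia Experiment online, so I will not describe them here

What I mean here is that now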

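- easy connect and ORA-12154: TNS: could not resolve the connect identifier specified

daizj

oracle, ORA-12154

Connecting with easy connect fails with the error "TNS: could not resolve the connect identifier specified", as follows: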

C:\Users\Administrator>sqlplus username/

[email protected]:1521/orcl

SQL*Plus: Release 10.2.0.1.0 – Production on 星期一 5月 21 18:16:20 2012

Copyright (c) 198

- Simple sorting: merge sort

dieslrae

merge sort

public void mergeSort(int[] array){

int temp = array.length/2;

if(temp == 0){

return;

}

int[] a = new int[temp];

int

- \0 and spaces in C strings

dcj3sjt126com

c

\0 is the string terminator. For example:

abcd (space)cdefg;

When stored in an array, the space counts as one character and takes up one byte; we

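- Fixing slow Composer speeds in China

dcj3sjt126com

Composer

Usage:

There are two ways to enable this mirror service:

1. Add the configuration below to Composer's configuration file config.json (system-wide global configuration). See "Example 1".

2. Add the configuration below to your project's composer.json file (per-project configuration). See "Example 2".

To avoid running two lookups every time a package is installed, be sure to add the setting that disables packagist, as follows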

- An efficient, scalable result cache

shuizhaosi888

efficient, scalable result cache

/**

* The computation to run; returns a result of type V

*/

public interface Computable<A, V> {

public V comput(final A arg);

}

/**

* Caches computed results

*/

public class Memoizer<A, V> implements Computable<A,

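- A three-point positioning algorithm

haoningabc

c, algorithms

Three-point positioning:

given the x,y coordinates of three vertices a, b, c

and the distances la, lb, lc from each of the three points to point z,

find the coordinates of point z

The idea is to draw circles around a, b, and c; the region where the three circles intersect is the answer

However, since the three distances may be inaccurate, there may be no solution,

so we intersect three rings instead: the ring width starts at 0 and grows by 1 whenever no point is found

Run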

gcc -lm test.c

The code for test.c is as follows

#include "stdi

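- epoll usage explained

jimmee

c, linux, server-side programming, epoll

epoll - I/O event notification facility. In Linux network programming, select was used for event triggering for a long time. Newer Linux kernels provide a replacement mechanism, epoll. Compared with select, epoll's biggest advantage is that its efficiency does not degrade as the number of monitored fds grows. This is because the kernel's select implementation polls, and the more fds it polls, the longer it takes. Moreover, in linu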

- Basic usage of Enum mapping in Hibernate

linzx0212

enum, Hibernate

Enum

/**

* Gender enum

*/

public enum Gender {

MALE(0), FEMALE(1), OTHER(2);

private Gender(int i) {

this.i = i;

}

private int i;

public int getI

- Chapter 10: Advanced events (part 2)

onestopweb

events

index.html

<!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd">

<html xmlns="http://www.w3.org/

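- The Art of War (孙子兵法)

roadrunners

The Art of War (孙子兵法)

Chapter 1: Laying Plans

Sun Tzu said:

War is a matter of vital importance to the state: the ground of life and death, the road to survival or ruin. It must be examined carefully.

Therefore assess it through five factors and compare through calculations to determine the true situation: first, the Way; second, Heaven; third, Earth; fourth, the Commander; fifth, Method. The Way is what brings the people into accord with their ruler, so that they will die with him and live with him without fear of danger. Heaven means yin and yang, cold and heat, and the constraints of the seasons. Earth means distance, difficulty of terrain, breadth, and the chances of life and death. The Commander stands for wisdom, trustworthiness, benevolence, courage, and strictness. Method means organization, the chain of command, and logistics. Every general has heard of these five; those who understand them win, and those who do not lose. Therefore compare through calculations to determine the true situation, asking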

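- MySQL bidirectional replication

tomcat_oracle

mysql

This article covers:

Master configuration

Slave configuration

Setting up master-slave replication

Setting up bidirectional replication

Background

Follow these simple steps:

For reference:

Configure the master on machine A (192.168.1.30)

Configure the slave on machine B (192.168.1.29)

We can use the following steps to achieve this

Step 1: set up the master on machine A

On the master, open the configuration file,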

- zoj 3822 Domination(dp)

阿尔萨斯

Mina

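Problem link: zoj 3822 Domination

Problem: given an N×M board, each turn a piece is placed on an arbitrarily chosen cell, until every row and every column contains at least one piece. Find the expected number of pieces placed.

Approach: the probability chapter of 大白书 has a similar problem, but it had been a while, so I still had to think about it a little. dp[i][j][k] is the probability that i rows and j columns each contain at least one piece after using k moves (k ≤ i×j). Since placing a piece on any of rows i+1 to n is equivalent to placing it on row i+1, and likewise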