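Image classification is a hot topic in artificial intelligence, and similar requirements come up often in production environments as well. So how can we quickly build an API for image classification or image content recognition?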

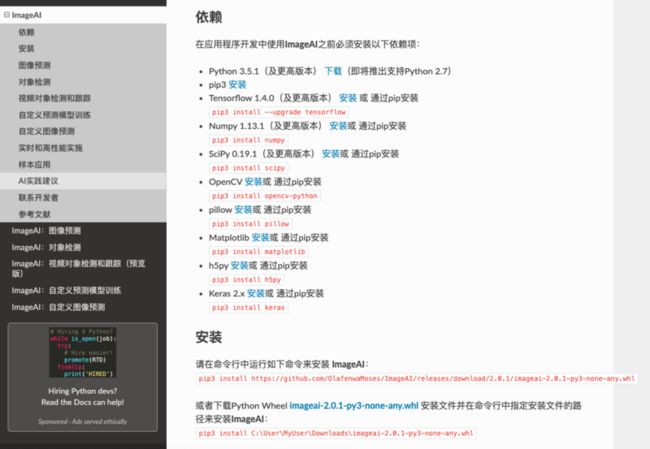

First, a library recommendation for image-related work: ImageAI.

The official sample code gives us a simple demo:

    from imageai.Prediction import ImagePrediction
    import os

    execution_path = os.getcwd()

    # Load a ResNet model from a weights file in the current directory
    prediction = ImagePrediction()
    prediction.setModelTypeAsResNet()
    prediction.setModelPath(os.path.join(execution_path, "resnet50_weights_tf_dim_ordering_tf_kernels.h5"))
    prediction.loadModel()

    # Ask for the five most likely labels for 1.jpg
    predictions, probabilities = prediction.predictImage(os.path.join(execution_path, "1.jpg"), result_count=5)
    for eachPrediction, eachProbability in zip(predictions, probabilities):
        # Commas instead of "+" so this works whether the probability is a str or a float
        print(eachPrediction, ":", eachProbability)

Based on this demo, we can consider deploying this module to a cloud function:

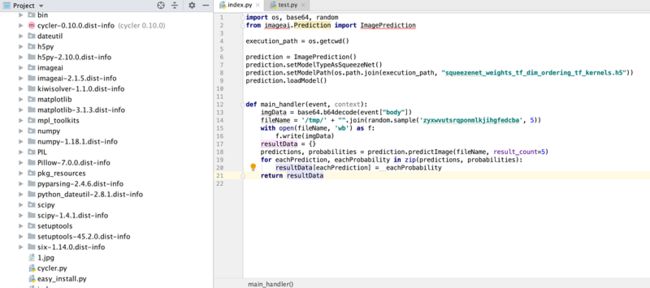

First, create a Python project locally:

    mkdir imageDemo

Then create the file index.py (for example with vim index.py):

    from imageai.Prediction import ImagePrediction
    import os, base64, random

    execution_path = os.getcwd()

    # Load the model once at import time, outside the handler
    prediction = ImagePrediction()
    prediction.setModelTypeAsSqueezeNet()
    prediction.setModelPath(os.path.join(execution_path, "squeezenet_weights_tf_dim_ordering_tf_kernels.h5"))
    prediction.loadModel()

    def main_handler(event, context):
        # The request body carries the image as a base64 string
        imgData = base64.b64decode(event["body"])
        # Write it to a randomly named file under /tmp
        fileName = '/tmp/' + "".join(random.sample('zyxwvutsrqponmlkjihgfedcba', 5))
        with open(fileName, 'wb') as f:
            f.write(imgData)
        # Map each of the top five labels to its probability
        resultData = {}
        predictions, probabilities = prediction.predictImage(fileName, result_count=5)
        for eachPrediction, eachProbability in zip(predictions, probabilities):
            resultData[eachPrediction] = eachProbability
        return resultData
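The handler above names its temporary file with five random letters, which can in principle repeat, and it never deletes the file afterwards. As a minimal sketch of one alternative, assuming only the Python standard library, the decode-and-save step could be written with tempfile. The helper name save_upload is hypothetical and is not part of ImageAI or the cloud function runtime.

```python
import base64
import os
import tempfile


def save_upload(body, directory=None):
    """Decode a base64-encoded request body and write it to a unique temp file.

    Hypothetical helper for illustration. Returns the path that was written.
    """
    image_bytes = base64.b64decode(body)
    # mkstemp creates a file whose name does not already exist, in the
    # system temp directory (typically /tmp on Linux) unless `directory` is given
    fd, path = tempfile.mkstemp(suffix=".img", dir=directory)
    with os.fdopen(fd, "wb") as f:
        f.write(image_bytes)
    return path


# Round trip with stand-in bytes (not a real image)
payload = base64.b64encode(b"fake image bytes").decode()
path = save_upload(payload)
with open(path, "rb") as f:
    assert f.read() == b"fake image bytes"
os.remove(path)  # clean up so repeated invocations do not fill the temp directory
```

Inside the handler, the returned path would take the place of fileName, with os.remove called once the prediction has finished.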

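Once the file is created, we need to download the model we depend on. The options are:

- SqueezeNet (file size: 4.82 MB; shortest prediction time, moderate accuracy)
- ResNet50 by Microsoft Research (file size: 98 MB; fast prediction time, high accuracy)
- InceptionV3 by Google Brain team (file size: 91.6 MB; slow prediction time, higher accuracy)
- DenseNet121 by Facebook AI Research (file size: 31.6 MB; slower prediction time, highest accuracy)

We will test with the first one, SqueezeNet. Copy the model file URL from the official documentation and download it directly with wget:

    wget https://github.com/OlafenwaMoses/ImageAI/releases/download/1.0/squeezenet_weights_tf_dim_ordering_tf_kernels.h5

Next we need to install the dependencies, and there appear to be quite a lot of them: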

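Some of these dependencies also have to be compiled, which means packaging must be done on CentOS with Python 2.7/3.6. That makes the process quite complicated, and it is especially painful for Mac and Windows users.

So at this point, just use the packaging site I set up earlier:

Download the package, unzip it, and put the contents into your project: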

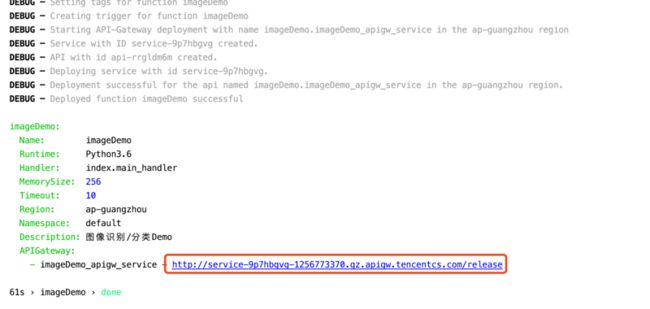
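Before deploying, it can help to confirm that the files from the previous steps really sit in the project root, since a missing model file would otherwise only show up when the function loads. The following is a rough sketch using only the standard library; missing_files is a hypothetical helper, and the required list contains just the two file names that appear in this article.

```python
import os
import tempfile


def missing_files(project_dir, required):
    """Return the entries of `required` that do not exist under `project_dir`."""
    return [name for name in required
            if not os.path.exists(os.path.join(project_dir, name))]


# File names taken from the steps above; the unpacked dependency folders are
# not listed because their names depend on the package that was downloaded.
REQUIRED = ["index.py", "squeezenet_weights_tf_dim_ordering_tf_kernels.h5"]

# Demonstration on a throwaway directory that only contains index.py
with tempfile.TemporaryDirectory() as demo_dir:
    open(os.path.join(demo_dir, "index.py"), "w").close()
    print(missing_files(demo_dir, REQUIRED))
    # prints ['squeezenet_weights_tf_dim_ordering_tf_kernels.h5']
```

Run against the real project directory, an empty list would mean both files are in place.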

One last step: create serverless.yaml:

    imageDemo:
      component: "@serverless/tencent-scf"
      inputs:
        name: imageDemo
        codeUri: ./
        handler: index.main_handler
        runtime: Python3.6
        region: ap-guangzhou
        description: Image recognition/classification demo
        memorySize: 256
        timeout: 10
        events:
          - apigw:
              name: imageDemo_apigw_service
              parameters:
                protocols:
                  - http
                serviceName: serverless
                description: Image recognition/classification demo API
                environment: release
                endpoints:
                  - path: /image
                    method: ANY

Once that is done, run sls --debug to deploy. During deployment you will be asked to scan a QR code to log in; after logging in, just wait. When deployment finishes, copy the generated URL:

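Test it from Python. The url is the one we just copied, with /image appended:

    import urllib.request
    import base64

    # Read the image and base64-encode it for the request body
    with open("1.jpg", 'rb') as f:
        base64_data = base64.b64encode(f.read())
        s = base64_data.decode()

    url = 'http://service-9p7hbgvg-1256773370.gz.apigw.tencentcs.com/release/image'

    # Supplying data makes urllib send the request as a POST
    print(urllib.request.urlopen(urllib.request.Request(
        url=url,
        data=s.encode("utf-8")
    )).read().decode("utf-8"))

Find an image on the web to test with; for example, I picked this one: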

Running the script gives:

    {"cheetah": 83.12643766403198, "Irish_terrier": 2.315458096563816, "lion": 1.8476998433470726, "teddy": 1.6655176877975464, "baboon": 1.5562783926725388}

Now modify the code slightly to run a simple timing test:

    import urllib.request
    import base64, time

    # Send the same image ten times and print the elapsed time of each round
    for i in range(0, 10):
        start_time = time.time()
        with open("1.jpg", 'rb') as f:
            base64_data = base64.b64encode(f.read())
            s = base64_data.decode()
        url = 'http://service-hh53d8yz-1256773370.bj.apigw.tencentcs.com/release/test'
        print(urllib.request.urlopen(urllib.request.Request(
            url=url,
            data=s.encode("utf-8")
        )).read().decode("utf-8"))
        print("cost: ", time.time() - start_time)

Output:

    {"cheetah": 83.12643766403198, "Irish_terrier": 2.315458096563816, "lion": 1.8476998433470726, "teddy": 1.6655176877975464, "baboon": 1.5562783926725388}
    cost: 2.1161561012268066
    {"cheetah": 83.12643766403198, "Irish_terrier": 2.315458096563816, "lion": 1.8476998433470726, "teddy": 1.6655176877975464, "baboon": 1.5562783926725388}
    cost: 1.1259253025054932
    {"cheetah": 83.12643766403198, "Irish_terrier": 2.315458096563816, "lion": 1.8476998433470726, "teddy": 1.6655176877975464, "baboon": 1.5562783926725388}
    cost: 1.3322770595550537
    {"cheetah": 83.12643766403198, "Irish_terrier": 2.315458096563816, "lion": 1.8476998433470726, "teddy": 1.6655176877975464, "baboon": 1.5562783926725388}
    cost: 1.3562259674072266
    {"cheetah": 83.12643766403198, "Irish_terrier": 2.315458096563816, "lion": 1.8476998433470726, "teddy": 1.6655176877975464, "baboon": 1.5562783926725388}
    cost: 1.0180821418762207
    {"cheetah": 83.12643766403198, "Irish_terrier": 2.315458096563816, "lion": 1.8476998433470726, "teddy": 1.6655176877975464, "baboon": 1.5562783926725388}
    cost: 1.4290671348571777
    {"cheetah": 83.12643766403198, "Irish_terrier": 2.315458096563816, "lion": 1.8476998433470726, "teddy": 1.6655176877975464, "baboon": 1.5562783926725388}
    cost: 1.5917718410491943
    {"cheetah": 83.12643766403198, "Irish_terrier": 2.315458096563816, "lion": 1.8476998433470726, "teddy": 1.6655176877975464, "baboon": 1.5562783926725388}
    cost: 1.1727900505065918
    {"cheetah": 83.12643766403198, "Irish_terrier": 2.315458096563816, "lion": 1.8476998433470726, "teddy": 1.6655176877975464, "baboon": 1.5562783926725388}
    cost: 2.962592840194702
    {"cheetah": 83.12643766403198, "Irish_terrier": 2.315458096563816, "lion": 1.8476998433470726, "teddy": 1.6655176877975464, "baboon": 1.5562783926725388}
    cost: 1.2248001098632812

Across these ten requests, the round trip takes between about 1.0 and 3.0 seconds, with eight of the ten falling between 1.0 and 1.6 seconds. Overall, that performance is within a range I can accept.
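To put numbers on that impression, the ten cost values can be summarized with the standard library. This is only a small sketch: the timings are copied from the output above and rounded to three decimals, and summarize is a hypothetical helper name.

```python
import statistics

# Per-request timings in seconds, copied from the output above and rounded
timings = [2.116, 1.126, 1.332, 1.356, 1.018, 1.429, 1.592, 1.173, 2.963, 1.225]


def summarize(samples):
    """Return the min, median, mean, and max of a list of timings."""
    return {
        "min": min(samples),
        "median": statistics.median(samples),
        "mean": statistics.mean(samples),
        "max": max(samples),
    }


for name, value in summarize(timings).items():
    print(f"{name}: {value:.2f} s")
```

For this run the median comes out at about 1.34 s and the mean at about 1.53 s. The mean is pulled up by the two slower requests (about 2.1 s and 3.0 s); ten samples are not enough to say what caused them.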

With that, our Python image recognition/classification tool, built on a Serverless architecture, is complete.