What is CrawlSpider?

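Like Spider, CrawlSpider is a spider class in Scrapy. It is a subclass of Spider and adds functionality on top of it. Its distinctive feature is rule-based link extraction, built on the link extractor (LinkExtractor), whose extract_links method pulls the matching links out of a response.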

```python
from scrapy.linkextractors import LinkExtractor
```
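To make the idea of a link extractor concrete without Scrapy, here is a toy stand-in built on Python's standard `html.parser`: it collects `href` values from `<a>` tags, resolves them against the page URL and keeps the ones an `allow` regex matches. This is only a rough model under simplified assumptions, not Scrapy's implementation: the real `LinkExtractor` also handles `deny`, `restrict_xpaths`, `restrict_css`, domain filters and more, and returns `Link` objects rather than strings. The helper `toy_extract_links` and the sample HTML are hypothetical.

```python
import re
from html.parser import HTMLParser
from urllib.parse import urljoin


class _AnchorCollector(HTMLParser):
    """Collects the href attribute of every <a> tag, in document order."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def toy_extract_links(html, base_url, allow):
    """Hypothetical helper: absolute URLs of <a> links whose URL matches `allow`."""
    parser = _AnchorCollector()
    parser.feed(html)
    pattern = re.compile(allow)
    links = []
    for href in parser.hrefs:
        url = urljoin(base_url, href)  # make relative links absolute
        if pattern.search(url) and url not in links:  # keep matches, drop duplicates
            links.append(url)
    return links


page = ('<div class="page_num"><a href="/list-1-2.html">2</a>'
        '<a href="/list-1-3.html">3</a></div><a href="/about.html">about</a>')
print(toy_extract_links(page, "http://www.example.com/", r"/list-1-\d+\.html"))
# ['http://www.example.com/list-1-2.html', 'http://www.example.com/list-1-3.html']
```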

Define rules and extract the links that match them. There are three ways:

Regex extraction

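```python
# Inside a spider callback, where `response` is available
lk = LinkExtractor(allow=r'http://www\.xiaohuar\.com/list-1-\d+\.html')
ret = lk.extract_links(response)

# Collect the URLs of all extracted links
lt = []
for link in ret:
    lt.append(link.url)
print(lt)
```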
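The `allow` argument is a regular expression that is searched for in each candidate link's absolute URL (it does not have to match the whole URL). The sketch below reproduces only that filtering step with `re.search` on made-up URLs, to show why the dots in the pattern are escaped: an unescaped `.` matches any character.

```python
import re

# Candidate absolute URLs (made up for illustration)
urls = [
    "http://www.xiaohuar.com/list-1-2.html",
    "http://www.xiaohuar.com/list-1-13.html",
    "http://www.xiaohuar.com/list-2-1.html",
    "http://www.xiaohuar.com/list-1-2xhtml",
]

escaped = re.compile(r"http://www\.xiaohuar\.com/list-1-\d+\.html")
unescaped = re.compile(r"http://www.xiaohuar.com/list-1-\d+.html")

# Only the two real list-1 pages match the escaped pattern
print([u for u in urls if escaped.search(u)])
# The unescaped '.' before 'html' also accepts the bogus 'list-1-2xhtml'
print([u for u in urls if unescaped.search(u)])
```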

XPath extraction

```python
lk = LinkExtractor(restrict_xpaths='//div[@class="page_num"]/a')
# or
lk = LinkExtractor(restrict_xpaths='//div[@class="page_num"]')
```

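Both forms work. restrict_xpaths marks the regions of the page to scan, and links are taken from everything inside those regions. With `/a` at the end, the regions are the `a` elements that are direct children of the div; without it, the region is the whole div, so links nested at any depth inside it are included as well.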
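The difference between ending the XPath at the div and ending it at `/a` can be seen with the standard library's `xml.etree.ElementTree`, which supports a small XPath subset. This is only an illustration of the XPath semantics under that assumption: Scrapy itself uses lxml-based selectors, and the markup below is made up (one page link is deliberately wrapped in a `span`).

```python
import xml.etree.ElementTree as ET

# Well-formed toy markup: the link to page 3 is nested inside a <span>
html = """
<html><body>
  <div class="page_num">
    <a href="/page/2/">2</a>
    <span><a href="/page/3/">3</a></span>
  </div>
</body></html>
""".strip()
root = ET.fromstring(html)

# Like '//div[@class="page_num"]/a': only <a> elements that are direct children of the div
direct = [a.get("href") for a in root.findall(".//div[@class='page_num']/a")]

# Like '//div[@class="page_num"]': treat the div as a region and scan every <a> inside it
region = root.find(".//div[@class='page_num']")
inside = [a.get("href") for a in region.iter("a")]

print(direct)  # ['/page/2/']
print(inside)  # ['/page/2/', '/page/3/']
```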

CSS extraction

```python
lk = LinkExtractor(restrict_css='.page_num')
# or
lk = LinkExtractor(restrict_css='.page_num > a')
```
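The CSS and XPath versions above are not strictly equivalent. The CSS class selector `.page_num` matches any element whose class list contains `page_num`, while the XPath test `@class="page_num"` compares the whole attribute string. The sketch below shows the difference with `xml.etree.ElementTree` on made-up markup; the token check is written by hand to mimic CSS class matching, since ElementTree has no CSS support.

```python
import xml.etree.ElementTree as ET

html = """<body>
  <div class="page_num"><a href="/p/2/">2</a></div>
  <div class="page_num wide"><a href="/p/3/">3</a></div>
</body>"""
root = ET.fromstring(html)

# XPath-style exact comparison, as in //div[@class="page_num"]
exact = [d.find("a").get("href") for d in root.findall(".//div[@class='page_num']")]

# CSS-style class test, as in .page_num: split the class attribute into tokens
token = [
    d.find("a").get("href")
    for d in root.iter("div")
    if "page_num" in (d.get("class") or "").split()
]

print(exact)  # ['/p/2/']
print(token)  # ['/p/2/', '/p/3/']
```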

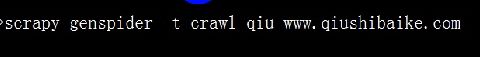

Creating the project and the spider file

```shell
scrapy startproject qiubaipro
cd qiubaipro
# add -t crawl to generate the spider from the CrawlSpider template
scrapy genspider -t crawl qiuqiu www.qiushibaike.com
```

qiuqiu.py

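```python
# -*- coding: utf-8 -*-
import scrapy
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import CrawlSpider, Rule

from qiubaipro.items import QiubaiproItem


class QiuqiuSpider(CrawlSpider):
    name = 'qiuqiu'
    allowed_domains = ['www.qiushibaike.com']
    start_urls = ['http://www.qiushibaike.com/']

    # When the spider starts, start_requests sends a request to every URL in
    # start_urls, and the responses are handled by parse. The inherited
    # start_requests is roughly:
    #
    #     def start_requests(self):
    #         for url in self.start_urls:
    #             yield scrapy.Request(url=url, callback=self.parse)
    #
    # In a CrawlSpider, parse is implemented by CrawlSpider itself: its job is
    # to extract links from the response according to the rules.
    #
    # Flow: request the start URLs -> parse extracts links by the rules
    # (e.g. the pagination links 2, 3, 4, 5 and 13) -> the links go to the
    # scheduler -> each response is handled by parse_item -> if follow is
    # False, that is the end; if follow is True, the rules are applied to
    # that response again to extract more links.

    # Rules: a tuple of Rule objects (there can be several). Arguments:
    #   1. the link extractor
    #   2. callback: handles the responses for this batch of URLs; note that
    #      here it is given as the method name, as a string. In a CrawlSpider,
    #      parse has its own job (link extraction), so do not override it or
    #      use it as a callback, or the CrawlSpider stops working.
    #   3. follow: whether to keep extracting links, with the same rules, from
    #      the responses of the extracted links (True) or not (False). Detail
    #      pages usually need no following; pagination pages usually do, so
    #      that every page gets crawled.
    # The extractor yields a batch of URLs, the scheduler requests all of them,
    # the callback handles every response, and follow decides whether the
    # rules are applied to those responses again.
    page_link = LinkExtractor(allow=r'/8hr/page/\d+/')
    rules = (
        Rule(page_link, callback='parse_item', follow=False),
    )

    def parse_item(self, response):
        # Select all the post divs first
        div_list = response.xpath('//div[@id="content-left"]/div')
        # Iterate over the divs and pull the fields out of each one
        for odiv in div_list:
            item = QiubaiproItem()
            data = {}
            # Avatar
            data['image_url'] = odiv.xpath('.//div//img/@src')[0].extract()
            # Author name
            data['name'] = odiv.css('.author h2::text').extract()[0].strip('\n')
            # Age (missing on some posts)
            try:
                data['age'] = odiv.xpath('.//div[starts-with(@class,"articleGender")]/text()')[0].extract()
            except IndexError:
                data['age'] = '没有年龄'  # "no age"
            # Post text, without the trailing "查看全文" ("read more") text
            lt = odiv.css('.content > span::text').extract()
            data['content'] = ''.join(lt).rstrip('查看全文').replace('\n', '')
            # Number of "funny" votes and number of comments
            numbers = odiv.xpath('.//i[@class="number"]/text()').extract()
            data['haha_count'] = numbers[0]
            data['ping_count'] = numbers[1]
            # Copy the values into the item (assumes the item's fields have exactly these names)
            for field in item.fields:
                item[field] = data[field]
            # Hand the item to the engine
            yield item
```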
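The rule and follow behavior described in the comments above can be modeled in a few lines of plain Python. This is a simplified sketch, not Scrapy's actual implementation: the site map `SITE` and the `crawl` function are hypothetical, a shared `seen` set stands in for the scheduler's duplicate filter, and requests are processed one at a time, breadth-first, whereas Scrapy issues them concurrently.

```python
import re
from collections import deque

# Hypothetical site map: page URL -> links that appear on that page
SITE = {
    "/": ["/8hr/page/2/", "/8hr/page/3/", "/article/1"],
    "/8hr/page/2/": ["/8hr/page/3/", "/8hr/page/4/", "/article/2"],
    "/8hr/page/3/": ["/8hr/page/4/", "/article/3"],
    "/8hr/page/4/": ["/article/4"],
}


def crawl(start, rules):
    """Toy model of CrawlSpider; each rule is (regex, callback_name or None, follow)."""
    seen = {start}  # stands in for the scheduler's duplicate filter
    handled = []  # (callback_name, url) in the order responses are handled
    queue = deque([(start, None, True)])  # links are always extracted from start URLs
    while queue:
        url, callback, follow = queue.popleft()
        if callback:
            handled.append((callback, url))  # the rule's callback gets this response
        if not follow:
            continue  # follow=False: no more link extraction from this response
        for pattern, rule_callback, rule_follow in rules:
            for link in SITE.get(url, []):
                if re.search(pattern, link) and link not in seen:
                    seen.add(link)
                    queue.append((link, rule_callback, rule_follow))
    return handled


# qiuqiu.py: one rule, callback='parse_item', follow=False
print(crawl("/", [(r"/8hr/page/\d+/", "parse_item", False)]))
# [('parse_item', '/8hr/page/2/'), ('parse_item', '/8hr/page/3/')]

# Same rule with follow=True: page 4 is not linked from the start page, yet it is reached
print(crawl("/", [(r"/8hr/page/\d+/", "parse_item", True)]))

# tupian.py style: follow pagination without a callback, parse only the detail pages
print(crawl("/", [(r"/8hr/page/\d+/", None, True), (r"/article/\d+", "parse_item", False)]))
```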

tupian.py

```python
# -*- coding: utf-8 -*-
import scrapy
from scrapy.linkextractors import LinkExtractor
from scrapy.spiders import CrawlSpider, Rule

from imagepro.items import ImageproItem


class TupianSpider(CrawlSpider):
    name = 'tupian'
    allowed_domains = ['699pic.com']
    start_urls = ['http://699pic.com/people.html']

    # Rules
    # Detail-page links
    detail_link = LinkExtractor(restrict_xpaths='//div[@class="list"]/a')
    # Pagination links
    page_link = LinkExtractor(allow=r'/photo-0-6-\d+-0-0-0\.html')

    rules = (
        # No callback: these responses are only used to extract more links
        # (never pass callback='parse' in a CrawlSpider).
        # follow=True keeps collecting the page URLs matching this rule across the whole site.
        Rule(page_link, follow=True),
        Rule(detail_link, callback='parse_item', follow=False),
    )

    def parse_item(self, response):
        item = ImageproItem()
        # Title
        item['name'] = response.xpath('//div[@class="photo-view"]/h1/text()').extract()[0].replace(' ', '')
        # Publish time (strip the " 发布" label)
        item['publish_time'] = response.css('.publicityt::text').extract()[0].strip(' 发布')
        # View count
        item['look'] = response.xpath('//span[@class="look"]/read/text()').extract()[0]
        # Favorites count
        item['collect'] = response.css('.collect::text')[0].extract().replace(',', '').strip('收藏').strip()
        # Download count
        item['download'] = ''.join(response.css('span.download::text').extract()).replace('\t', '').replace('\n', '').strip('下载').strip().replace(',', '')
        # Image URL
        item['image_src'] = response.xpath('//img[@id="photo"]/@src')[0].extract()
        yield item
```
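The cleanup calls such as `.strip(' 发布')`, `.strip('收藏')`, `.strip('下载')` and `.rstrip('查看全文')` rely on a detail of Python strings worth checking: `strip`/`rstrip` treat their argument as a set of characters, not as a word. The sketch below uses made-up strings to show when that is harmless and when it eats real text; `str.removesuffix` (Python 3.9+) removes only an exact suffix.

```python
# str.strip/rstrip treat their argument as a SET of characters, not as a word
content = "读完了全文查看全文"  # made-up post text that genuinely ends in "全文" before the link text
print(content.rstrip("查看全文"))        # 读完了   (the genuine "全文" is eaten too)
print(content.removesuffix("查看全文"))  # 读完了全文 (only the exact suffix is removed)

# For a label like "2019-05-20 发布" the character set happens to be harmless,
# because none of the characters to keep are in {' ', '发', '布'}
print("2019-05-20 发布".strip(" 发布"))  # 2019-05-20
```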

Implementing it with a plain Spider

```python
# -*- coding: utf-8 -*-
import os

import scrapy

from imagepro.items import ImageproItem


class ImageSpider(scrapy.Spider):
    name = 'image'
    allowed_domains = ['699pic.com']
    start_urls = ['http://699pic.com/people.html']
    page = 1

    # We want the images from all 400 pages; the image info is saved to a file
    def parse(self, response):
        # What to parse? The detail-page links of all images on this page
        href_list = response.xpath('//div[@class="list"]/a/@href').extract()
        # Request each detail page in turn (urljoin also handles relative hrefs)
        for href in href_list:
            yield scrapy.Request(url=response.urljoin(href), callback=self.parse_detail)

        # Then request the next list page, up to page 400
        next_page_url = 'http://699pic.com/photo-0-6-{}-0-0-0.html'
        ImageSpider.page += 1
        if ImageSpider.page <= 400:
            print('-------------------------' + str(ImageSpider.page) + '-----------------------')
            yield scrapy.Request(url=next_page_url.format(ImageSpider.page), callback=self.parse)

    def parse_detail(self, response):
        item = ImageproItem()
        # Title
        item['name'] = response.xpath('//div[@class="photo-view"]/h1/text()').extract()[0].replace(' ', '')
        # Publish time
        item['publish_time'] = response.css('.publicityt::text').extract()[0].strip(' 发布')
        item['look'] = response.xpath('//span[@class="look"]/read/text()').extract()[0]
        item['collect'] = response.css('.collect::text')[0].extract().replace(',', '').strip('收藏').strip()
        item['download'] = ''.join(response.css('span.download::text').extract()).replace('\t', '').replace('\n', '').strip('下载').strip().replace(',', '')
        item['image_src'] = response.xpath('//img[@id="photo"]/@src')[0].extract()
        yield item

        # File name: title + the extension taken from the image URL
        newname = item['name'] + '.' + item['image_src'].split('.')[-1]
        print(item['image_src'])
        yield scrapy.Request(url=response.urljoin(item['image_src']), callback=self.down, meta={'name': newname})

    # Saving the image from a callback like this has one advantage: the request
    # automatically carries a Referer header (added by Scrapy's RefererMiddleware)
    def down(self, response):
        os.makedirs('img', exist_ok=True)  # make sure the output folder exists
        with open(os.path.join('img', response.meta['name']), 'wb') as fp:
            fp.write(response.body)
```
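The file name in `parse_detail` comes from `item['image_src'].split('.')[-1]`, which takes whatever follows the last dot in the whole URL. That goes wrong if an image URL carries a query string or has no extension (nothing in the original says the site's URLs look like that; the URLs below are made up). A more defensive sketch, using only the path part of the URL, might look like this; `image_filename` and its `.jpg` fallback are hypothetical.

```python
import os
from urllib.parse import urlparse


def image_filename(name, image_src, default_ext=".jpg"):
    """Hypothetical helper: build '<name><ext>' from the path part of an image URL."""
    path = urlparse(image_src).path  # drops the ?query and #fragment
    ext = os.path.splitext(path)[1]  # e.g. '.jpg'; '' when the path has no extension
    return name + (ext or default_ext)  # default_ext is an assumed fallback


src = "http://img.example.com/a/b.jpg?x=1.5"
print(src.split(".")[-1])                                 # 5 (what the split-based version uses)
print(image_filename("风景", src))                         # 风景.jpg
print(image_filename("风景", "//img.example.com/photo"))   # 风景.jpg (fallback)
```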