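We previously covered how to integrate Kerberos with CDH. This post installs Sentry on top of that existing environment.

Before starting, the following prerequisites are assumed:

- With Kerberos integrated into CDH, no service shows a red (error) status in the CM console

- You have root access

Installing Sentry on CDH

- I won't go into much detail here; the installation is almost entirely a matter of clicking "Next"

- Create the Sentry database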

create database sentry default character set utf8;

CREATE USER 'sentry'@'%' IDENTIFIED BY 'password';

GRANT ALL PRIVILEGES ON sentry.* TO 'sentry'@'%';

FLUSH PRIVILEGES;

- In the Cloudera Manager console, click "Add Service"

- Add the Sentry service

The remaining steps are all "Next" clicks and are omitted here.

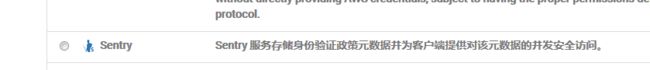

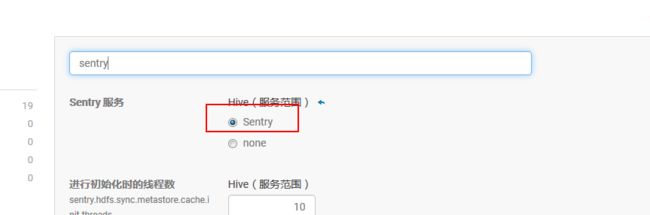

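Sentry configuration

- Hive configuration

  - Enable Sentry

  - Disable Hive user impersonation

- Impala configuration

  - Enable Sentry

- Hue configuration

- HDFS configuration

- After completing the configuration above, return to the Cloudera Manager home page, deploy the client configuration, and restart the affected services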
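After the restart, it can be worth confirming that the settings this section relies on actually reached the configuration files. The following is only a sketch under assumptions: `get_prop` is a hypothetical helper (not a CDH tool) that reads one property from a Hadoop-style `*-site.xml`, and the file paths in the usage comment are guesses that depend on where CM wrote the role's configuration on your host. `hive.server2.enable.doAs` is the property behind HiveServer2 impersonation, and `dfs.namenode.acls.enabled` is the HDFS ACL switch that Sentry's HDFS synchronization depends on.

```shell
#!/usr/bin/env bash
# get_prop FILE NAME
# Hypothetical helper: print the <value> of property NAME from a Hadoop-style
# XML config file. Assumes each <name>...</name> and <value>...</value> pair
# sits on a single line, which is how these files are normally generated.
get_prop() {
  local file="$1" name="$2"
  awk -v want="$name" '
    /<name>/  { n = $0; sub(/.*<name>/, "", n);  sub(/<\/name>.*/, "", n) }
    /<value>/ { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
                if (n == want) { print v; exit } }
  ' "$file"
}

# Usage (paths are assumptions; server-side properties may only appear in the
# role's own process directory rather than in the client configuration):
#   get_prop /etc/hive/conf/hive-site.xml   hive.server2.enable.doAs
#   get_prop /etc/hadoop/conf/hdfs-site.xml dfs.namenode.acls.enabled
```

With impersonation disabled and ACLs enabled, the first call should print `false` and the second `true`; an empty result means the property is not present in that particular file.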

Testing Sentry

- Create the hive user

# Obtain a ticket for the hive superuser

[root@bi-master 1089-hive-HIVESERVER2]# kinit -kt hive.keytab hive/[email protected]

[root@bi-master 1089-hive-HIVESERVER2]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: hive/[email protected]

Valid starting Expires Service principal

06/07/18 17:40:39 06/08/18 17:40:39 krbtgt/[email protected]

renew until 06/12/18 17:40:39

- Connect to HiveServer2 with beeline (beeline is recommended over the hive client)

[root@bi-master 1089-hive-HIVESERVER2]# beeline

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0

Beeline version 1.1.0-cdh5.11.0 by Apache Hive

beeline> !connect jdbc:hive2://localhost:10000/;principal=hive/[email protected]

scan complete in 5ms

Connecting to jdbc:hive2://localhost:10000/;principal=hive/[email protected]

Connected to: Apache Hive (version 1.1.0-cdh5.11.0)

Driver: Hive JDBC (version 1.1.0-cdh5.11.0)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://localhost:10000/> show roles;

INFO : Compiling command(queryId=hive_20180607174444_fd3b6374-23a5-45b4-a267-c1a55d50e67f): show roles

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:role, type:string, comment:from deserializer)], properties:null)

INFO : Completed compiling command(queryId=hive_20180607174444_fd3b6374-23a5-45b4-a267-c1a55d50e67f); Time taken: 0.082 seconds

INFO : Executing command(queryId=hive_20180607174444_fd3b6374-23a5-45b4-a267-c1a55d50e67f): show roles

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20180607174444_fd3b6374-23a5-45b4-a267-c1a55d50e67f); Time taken: 0.052 seconds

INFO : OK

+---------------+--+

| role |

+---------------+--+

| analyst_role |

| bi_dev |

| wms_dev |

| admin_role |

+---------------+--+

5 rows selected (0.276 seconds)

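Note that admin_role already exists in the output above. If it does not exist yet, create the super role first, as follows (also from the beeline client).

# Create the super role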

create role admin_role;

...

INFO : OK

No rows affected (0.37 seconds)

0: jdbc:hive2://localhost:10000/>

# Grant all privileges to the super role; the Hive server alias here is server1

0: jdbc:hive2://localhost:10000> grant all on server server1 to role admin_role;

...

INFO : OK

No rows affected (0.221 seconds)

0: jdbc:hive2://localhost:10000>

# Grant the admin role to the hive group

0: jdbc:hive2://localhost:10000> grant role admin_role to group hive;

...

INFO : OK

No rows affected (0.162 seconds)

0: jdbc:hive2://localhost:10000>

- The steps above created an admin role and granted it to the hive group:

- With the hive superuser's ticket, all databases and tables are visible

0: jdbc:hive2://localhost:10000/> show databases;

INFO : Compiling command(queryId=hive_20180607180000_d9f7fa67-4022-4fd3-a6fe-0894106b3a83): show databases

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:database_name, type:string, comment:from deserializer)], properties:null)

INFO : Completed compiling command(queryId=hive_20180607180000_d9f7fa67-4022-4fd3-a6fe-0894106b3a83); Time taken: 0.16 seconds

INFO : Executing command(queryId=hive_20180607180000_d9f7fa67-4022-4fd3-a6fe-0894106b3a83): show databases

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20180607180000_d9f7fa67-4022-4fd3-a6fe-0894106b3a83); Time taken: 0.497 seconds

INFO : OK

+----------------+--+

| database_name |

+----------------+--+

| bi |

| default |

| gms |

| gtp |

| gtp_data |

| gtp_dc |

| gtp_test |

| gtp_txt |

| kudu_raw |

| kudu_test |

| kudu_vip |

+----------------+--+

11 rows selected (0.88 seconds)

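- Now test against a business scenario: create a user named deng_yb who may access only the database named bi

- Create the Linux user deng_yb (be sure to create it on every node)

(First create the bi database as the hive user and load data into it; the script is in the notes at the end.)

# Create the deng_yb group

groupadd deng_yb

# Create the deng_yb user in that group, with home directory /usr/deng_yb

[root@bi-master 1089-hive-HIVESERVER2]# useradd -d /usr/deng_yb -m -g deng_yb deng_yb

# Set the password

passwd deng_yb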

[root@bi-master 1089-hive-HIVESERVER2]# id deng_yb

uid=504(deng_yb) gid=506(deng_yb) groups=506(deng_yb)
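Because the user and group must exist on every node, repeating the commands by hand is easy to get wrong. A minimal sketch of doing it in a loop is shown here, under stated assumptions: the node list in `NODES` is hypothetical (only `bi-master` and `bi-slave1` appear in this post), passwordless root ssh between nodes is assumed, and `user_setup_cmd` and `create_user_everywhere` are illustrative helper names.

```shell
#!/usr/bin/env bash
# Hypothetical node list; replace it with every host in your cluster.
NODES="${NODES:-bi-master bi-slave1}"

# Print a command line that creates the group and the user only if missing,
# so it is safe to run again on a node that already has them.
user_setup_cmd() {
  local user="$1" home="$2"
  printf 'getent group %s >/dev/null || groupadd %s; id -u %s >/dev/null 2>&1 || useradd -d %s -m -g %s %s' \
    "$user" "$user" "$user" "$home" "$user" "$user"
}

# Run that command on every node over ssh (assumes passwordless root ssh).
create_user_everywhere() {
  local user="$1" home="$2" node
  for node in $NODES; do
    ssh "root@${node}" "$(user_setup_cmd "$user" "$home")"
  done
}

# Usage:
#   create_user_everywhere deng_yb /usr/deng_yb
```

The password is deliberately left out of the loop; set it with `passwd` only on hosts where the user needs an interactive login.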

- In beeline, as the hive user, create the role deng and grant it ALL privileges on the bi database

# Create the deng role

0: jdbc:hive2://localhost:10000> create role deng;

....

INFO : OK

No rows affected (0.094 seconds)

# Grant the deng role full (create, read, update, delete) privileges on the bi database

0: jdbc:hive2://localhost:10000> grant all on database bi to role deng;

- Grant the deng role to the group deng_yb

0: jdbc:hive2://localhost:10000> grant role deng to group deng_yb;

...

INFO : OK

No rows affected (0.187 seconds)
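The same role setup can be kept in a file and replayed with beeline instead of being typed at the prompt, which helps when more tenants are added later. This is a sketch, not the author's procedure: `tenant_grant_sql` is a hypothetical helper, and `JDBC_URL` stands in for the full HiveServer2 URL (including the `principal=` part) used in the `!connect` lines above. The final `SHOW GRANT ROLE` statement simply lists what the role ended up with.

```shell
#!/usr/bin/env bash
# Emit the Sentry statements that give GROUP full access to one database
# through ROLE, followed by a statement that lists the role's privileges.
tenant_grant_sql() {
  local db="$1" role="$2" group="$3"
  cat <<EOF
CREATE ROLE ${role};
GRANT ALL ON DATABASE ${db} TO ROLE ${role};
GRANT ROLE ${role} TO GROUP ${group};
SHOW GRANT ROLE ${role};
EOF
}

# Usage (requires a valid ticket for a Sentry admin such as hive;
# JDBC_URL is a placeholder for your own connection string):
#   tenant_grant_sql bi deng deng_yb > /tmp/grant_deng.sql
#   beeline -u "$JDBC_URL" -f /tmp/grant_deng.sql
```

Note that `CREATE ROLE` fails if the role already exists, so the generated file is meant for first-time setup rather than repeated runs.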

- Create the principal for deng_yb with kadmin

[root@bi-master log]# kadmin.local

Authenticating as principal hive/[email protected] with password.

kadmin.local: addprinc [email protected]

WARNING: no policy specified for [email protected]; defaulting to no policy

Enter password for principal "[email protected]":

Re-enter password for principal "[email protected]":

Principal "[email protected]" created.

# Exit

exit
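For scripted setups, the principal can also be created without the interactive prompts by passing a query to `kadmin.local -q`. The sketch below is an alternative to the password-based flow above, not a replacement for it: it creates the principal with a random key and exports a keytab instead. `REALM` is a placeholder because the real realm is not shown in this post, and `addprinc_query` and `xst_query` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Placeholder realm; set REALM to your cluster's Kerberos realm.
REALM="${REALM:-EXAMPLE.COM}"

# Build the kadmin query that creates a principal with a random key.
addprinc_query() {
  printf 'addprinc -randkey %s@%s' "$1" "$REALM"
}

# Build the kadmin query that exports the principal's keys to a keytab.
# Be aware that xst generates fresh random keys as it exports, so any
# password previously set for the principal stops working.
xst_query() {
  printf 'xst -k %s %s@%s' "$2" "$1" "$REALM"
}

# Usage on the KDC host as root (the keytab path is an example):
#   kadmin.local -q "$(addprinc_query deng_yb)"
#   kadmin.local -q "$(xst_query deng_yb /root/deng_yb.keytab)"
#   kinit -kt /root/deng_yb.keytab "deng_yb@${REALM}"
```

A keytab suits batch jobs; for a person who logs in interactively, the password-based `addprinc` shown above is the simpler choice.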

- Verify with beeline

- Log in to Kerberos as deng_yb

kadmin.local: exit

[root@bi-master log]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: hive/[email protected]

Valid starting Expires Service principal

06/07/18 17:40:39 06/08/18 17:40:39 krbtgt/[email protected]

renew until 06/12/18 17:40:39

[root@bi-master log]# kdestroy

[root@bi-master log]# kinit deng_yb

Password for [email protected]:

[root@bi-master log]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: [email protected]

Valid starting Expires Service principal

06/07/18 18:30:10 06/08/18 18:30:10 krbtgt/[email protected]

renew until 06/14/18 18:30:10

- Start beeline and work with the bi database

[root@bi-master log]# beeline

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: Using incremental CMS is deprecated and will likely be removed in a future release

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=512M; support was removed in 8.0

Beeline version 1.1.0-cdh5.11.0 by Apache Hive

beeline> !connect jdbc:hive2://localhost:10000/;principal=hive/[email protected]

Connecting to jdbc:hive2://localhost:10000/;principal=hive/[email protected]

Connected to: Apache Hive (version 1.1.0-cdh5.11.0)

Driver: Hive JDBC (version 1.1.0-cdh5.11.0)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://localhost:10000/> show databases;

INFO : Compiling command(queryId=hive_20180607183232_cf56064f-802f-4598-ba82-5c28aea52640): show databases

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:database_name, type:string, comment:from deserializer)], properties:null)

INFO : Completed compiling command(queryId=hive_20180607183232_cf56064f-802f-4598-ba82-5c28aea52640); Time taken: 0.141 seconds

INFO : Executing command(queryId=hive_20180607183232_cf56064f-802f-4598-ba82-5c28aea52640): show databases

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20180607183232_cf56064f-802f-4598-ba82-5c28aea52640); Time taken: 0.587 seconds

INFO : OK

+----------------+--+

| database_name |

+----------------+--+

| bi |

| default |

+----------------+--+

3 rows selected (0.931 seconds)

0: jdbc:hive2://localhost:10000/> use bi;

INFO : Compiling command(queryId=hive_20180607183434_ac7db16e-5605-46b2-a6b6-443b3a859acb): use bi

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:null, properties:null)

INFO : Completed compiling command(queryId=hive_20180607183434_ac7db16e-5605-46b2-a6b6-443b3a859acb); Time taken: 0.199 seconds

INFO : Executing command(queryId=hive_20180607183434_ac7db16e-5605-46b2-a6b6-443b3a859acb): use bi

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20180607183434_ac7db16e-5605-46b2-a6b6-443b3a859acb); Time taken: 0.011 seconds

INFO : OK

No rows affected (0.232 seconds)

0: jdbc:hive2://localhost:10000/> show tables;

INFO : Compiling command(queryId=hive_20180607183434_8e7b51a8-2ff5-42c6-948c-7907457912c6): show tables

INFO : Semantic Analysis Completed

INFO : Returning Hive schema: Schema(fieldSchemas:[FieldSchema(name:tab_name, type:string, comment:from deserializer)], properties:null)

INFO : Completed compiling command(queryId=hive_20180607183434_8e7b51a8-2ff5-42c6-948c-7907457912c6); Time taken: 0.127 seconds

INFO : Executing command(queryId=hive_20180607183434_8e7b51a8-2ff5-42c6-948c-7907457912c6): show tables

INFO : Starting task [Stage-0:DDL] in serial mode

INFO : Completed executing command(queryId=hive_20180607183434_8e7b51a8-2ff5-42c6-948c-7907457912c6); Time taken: 0.22 seconds

INFO : OK

+---------------+--+

| tab_name |

+---------------+--+

| wx_user_info |

+---------------+--+

1 row selected (0.373 seconds)

0: jdbc:hive2://localhost:10000/> select count(1) from wx_user_info;

INFO : Total MapReduce CPU Time Spent: 8 seconds 390 msec

INFO : Completed executing command(queryId=hive_20180607183434_8be98e50-49fb-4227-a8f0-acfcc500d961); Time taken: 35.198 seconds

INFO : OK

+-------+--+

| _c0 |

+-------+--+

| 5324 |

+-------+--+

1 row selected (35.613 seconds)

- Output like the above shows that Hive is configured correctly

- HDFS verification

[root@bi-master log]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: [email protected]

Valid starting Expires Service principal

06/07/18 18:30:10 06/08/18 18:30:10 krbtgt/[email protected]

renew until 06/14/18 18:30:10

[root@bi-master log]# hadoop fs -ls /user/hive/warehouse

ls: Permission denied: user=deng_yb, access=READ_EXECUTE, inode="/user/hive/warehouse":hive:hive:drwxrwx--x

[root@bi-master log]# hadoop fs -ls /user/hive/warehouse/bi.db

Found 1 items

drwxrwx--x+ - hive hive 0 2018-06-06 17:30 /user/hive/warehouse/bi.db/wx_user_info

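- As shown above, the user can access only the files under the bi.db directory and not the other directories, which shows that Sentry has synchronized the privileges to HDFS ACLs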
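The `+` at the end of `drwxrwx--x+` in the listing marks a path that carries extended ACL entries, and `hdfs dfs -getfacl` prints them. As a small sketch for checking one group's entry, the hypothetical helper `group_acl` below filters that output; what the entry actually contains on your cluster depends on the privileges granted, so no particular value is assumed here.

```shell
#!/usr/bin/env bash
# Read `hdfs dfs -getfacl` output on stdin and print the permission bits of
# the named-group entry for GROUP; print nothing if there is no such entry.
group_acl() {
  local group="$1"
  awk -F: -v g="$group" '$1 == "group" && $2 == g { print $3; exit }'
}

# Usage with a valid Kerberos ticket:
#   hdfs dfs -getfacl /user/hive/warehouse/bi.db | group_acl deng_yb
```

An empty result for a group that holds a Sentry role on the database would suggest that synchronization has not picked up the grant yet.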

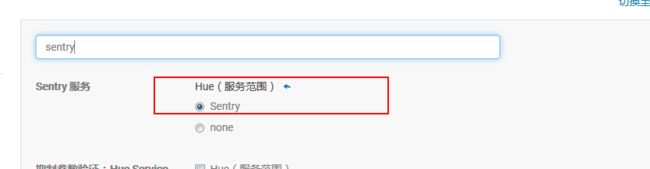

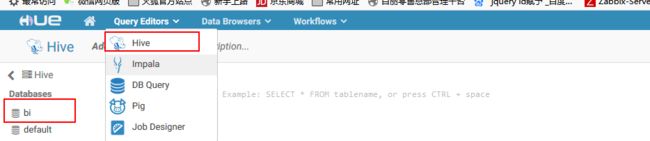

hue测试

-

hue用管理员账号登上,并创建一个用户

-

用deng_yb用户登陆hue

-

查询下

-

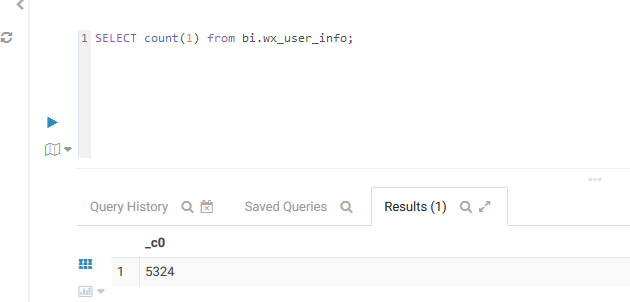

hue查询impala同理

- 出现以上类似结果,证明sentry在hue上授权成功

-

impala测试 (在impala Daemon实例上做的测试)

- Log in to Kerberos as deng_yb and start impala-shell

[root@bi-slave1 ~]# kinit deng_yb

Password for [email protected]:

[root@bi-slave1 ~]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: [email protected]

Valid starting Expires Service principal

06/07/18 18:57:16 06/08/18 18:57:16 krbtgt/[email protected]

renew until 06/14/18 18:57:16

[root@bi-slave1 ~]# impala-shell

Starting Impala Shell without Kerberos authentication

Error connecting: TTransportException, TSocket read 0 bytes

Kerberos ticket found in the credentials cache, retrying the connection with a secure transport.

Connected to bi-slave1:21000

Server version: impalad version 2.8.0-cdh5.11.0 RELEASE (build e09660de6b503a15f07e84b99b63e8e745854c34)

***********************************************************************************

Welcome to the Impala shell.

(Impala Shell v2.8.0-cdh5.11.0 (e09660d) built on Wed Apr 5 19:51:24 PDT 2017)

To see more tips, run the TIP command.

***********************************************************************************

[bi-slave1:21000] > show databases;

Query: show databases

+----------+-----------------------+

| name | comment |

+----------+-----------------------+

| bi | |

| default | Default Hive database |

+----------+-----------------------+

Fetched 3 row(s) in 0.03s

[bi-slave1:21000] > use bi;

Query: use bi

[bi-slave1:21000] > show tables;

Query: show tables

+--------------+

| name |

+--------------+

| wx_user_info |

+--------------+

Fetched 1 row(s) in 0.01s

[bi-slave1:21000] > select * from wx_user_info limit 10;

Query: select * from wx_user_info limit 10

Query submitted at: 2018-06-07 18:59:11 (Coordinator: http://bi-slave1:25000)

Query progress can be monitored at: http://bi-slave1:25000/query_plan?query_id=4d42efe823b336c6:cf88ec7400000000

+----------+-----------------------+-----------------+----------+-----------+-----------------------+-------------+-----------------------+-------------+-----------+-----------+---------+-----------+-----------+

| pkid | user_id | user_name | password | user_type | create_time | create_user | update_time | update_user | user_code | admin_uid | card_no | card_name | card_type |

+----------+-----------------------+-----------------+----------+-----------+-----------------------+-------------+-----------------------+-------------+-----------+-----------+---------+-----------+-----------+

| 10005776 | [email protected] | 朱莎-湖北鄂北 | NULL | 1 | 2016-12-27 09:30:21.0 | system | 2016-12-27 14:01:02.0 | system | Ec | NULL | NULL | NULL | NULL |

| 10005925 | [email protected] | 马春燕-湖北鄂北 | NULL | 1 | 2017-01-17 17:52:51.0 | system | 2017-05-11 17:50:10.0 | 10004270 | 4U | NULL | NULL | NULL | NULL |

| 10006282 | [email protected] | 李俊会-天津 | NULL | 1 | 2017-02-23 13:16:36.0 | system | 2017-02-23 13:16:36.0 | system | Pl | NULL | NULL | NULL | NULL |

| 10006350 | zhangchunming946780 | 张春明-北京 | NULL | 1 | 2017-02-27 10:12:02.0 | system | 2017-02-27 10:12:02.0 | system | fF | NULL | NULL | NULL | NULL |

| 10007687 | bucai_feng | 李瑞丰-郑州 | NULL | 1 | 2017-07-03 17:02:46.0 | system | 2017-07-03 17:02:46.0 | system | 0g | NULL | NULL | NULL | NULL |

| 10006504 | w13259423226 | 王萍丽-西北 | NULL | 1 | 2017-03-07 10:27:06.0 | system | 2017-03-07 10:27:06.0 | system | Ic | NULL | NULL | NULL | NULL |

| 10006467 | liujp1111986 | 柳江萍-贵州 | NULL | 1 | 2017-03-06 14:17:22.0 | system | 2017-03-06 15:03:32.0 | 10004273 | 2i | NULL | NULL | NULL | NULL |

| 10006539 | hxc15849739248 | 黄晓春 | NULL | 1 | 2017-03-08 09:52:28.0 | system | 2017-03-24 10:09:51.0 | 10004267 | 2k | NULL | NULL | NULL | NULL |

| 10007254 | hwj406494199 | 胡雯瑾-西北 | NULL | 1 | 2017-05-03 13:49:45.0 | system | 2017-05-03 13:49:45.0 | system | qd | NULL | NULL | NULL | NULL |

| 10007157 | fanfan5751 | 刘凡 | NULL | 1 | 2017-04-18 14:36:33.0 | system | 2017-04-18 14:36:33.0 | system | hU | NULL | NULL | NULL | NULL |

+----------+-----------------------+-----------------+----------+-----------+-----------------------+-------------+-----------------------+-------------+-----------+-----------+---------+-----------+-----------+

Fetched 10 row(s) in 0.07s

Apart from default, only the bi database is visible above, which shows that Sentry is also configured correctly for Impala.
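The visibility check can be repeated non-interactively, for example after later grant changes. The sketch below assumes `impala-shell` is run with deng_yb's ticket, using `-k` for Kerberos, `-B` for delimited output and `-q` for the query; `unexpected_dbs` is a hypothetical helper that prints any database outside an allowed list.

```shell
#!/usr/bin/env bash
# Read database names on stdin (one per line, optionally followed by a
# tab-separated comment) and print those not named in the arguments.
unexpected_dbs() {
  local db allowed ok tab
  tab="$(printf '\t')"
  while IFS="$tab" read -r db _; do
    [ -z "$db" ] && continue
    ok=0
    for allowed in "$@"; do
      [ "$db" = "$allowed" ] && ok=1
    done
    [ "$ok" -eq 1 ] || printf '%s\n' "$db"
  done
}

# Usage (host and port as in the session above):
#   impala-shell -k -i bi-slave1:21000 -B -q 'show databases' 2>/dev/null \
#     | unexpected_dbs bi default
```

No output means the user sees nothing beyond the allowed databases; any name printed is a database the user should not be able to see.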

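That concludes integrating Kerberos and Sentry with CDH.

A follow-up post covers the problems you may run into with each service and tool once multi-tenancy is configured, and how to resolve them:

https://www.jianshu.com/p/ef9eee385988

Notes

sqoop script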

sqoop import -D oracle.sessionTimeZone=CST \
--connect jdbc:oracle:thin:@172.17.209.184:1521/devbiedwshoes \
--username u_md_rs \
--password belle@16_rs \
--table U_DB_PT.WX_USER_INFO1 \
--hive-database=bi \
--hive-import \
--hive-table WX_USER_INFO \
--null-string '\\N' \
--null-non-string '\\N' \
--hive-drop-import-delims \
--target-dir /user/wms_dev/import/ \
--delete-target-dir \
-m 1;