This article uses the following environment:

Operating system: CentOS 7.2, 64-bit

Flume version: flume-1.7.0

JDK version: jdk1.8.0_131

netcat source

source-nc.conf

# Configure the components of agent a1

a1.sources=r1

# (several may be listed, separated by spaces; the names are arbitrary)

a1.channels=c1

# (several may be listed, separated by spaces; the names are arbitrary)

a1.sinks=s1

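# Describe/configure source r1 of a1

# (the netcat type listens on the given port and treats each line of text received as an event)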

a1.sources.r1.type=netcat

# (0.0.0.0 binds to all network interfaces on this host)

a1.sources.r1.bind=0.0.0.0

# Port number

a1.sources.r1.port=8888

# Describe channel c1 of a1

a1.channels.c1.type=memory

a1.channels.c1.capacity=1000

a1.channels.c1.transactionCapacity=100

# Describe sink s1 of a1

# (the sink type is logger, which writes each event to the log)

a1.sinks.s1.type=logger

# Bind the source and the sink to the channel

# (a sink can be attached to only one channel)

a1.sinks.s1.channel=c1

# (a source can feed several channels)

a1.sources.r1.channels=c1
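The binding rules noted in the comments (a source may list several channels, a sink exactly one) can be checked mechanically before starting an agent. The following is a minimal Python sketch and not part of Flume: the function names `parse_properties` and `check_bindings` are hypothetical, and the two-channel sample is made up for illustration.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and # comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def check_bindings(props, agent="a1"):
    """Return a list of problems found in the source/sink channel bindings."""
    problems = []
    channels = set(props.get(f"{agent}.channels", "").split())
    # A source uses the plural key "channels" and may list several of them.
    for source in props.get(f"{agent}.sources", "").split():
        bound = props.get(f"{agent}.sources.{source}.channels", "").split()
        if not bound:
            problems.append(f"source {source} has no channels")
        for channel in bound:
            if channel not in channels:
                problems.append(f"source {source} uses undeclared channel {channel}")
    # A sink uses the singular key "channel" and must name exactly one.
    for sink in props.get(f"{agent}.sinks", "").split():
        bound = props.get(f"{agent}.sinks.{sink}.channel", "").split()
        if len(bound) != 1:
            problems.append(f"sink {sink} must have exactly one channel")
        elif bound[0] not in channels:
            problems.append(f"sink {sink} uses undeclared channel {bound[0]}")
    return problems


# Hypothetical fan-out layout: one source feeding two channels, one sink per channel.
SAMPLE = """
a1.sources=r1
a1.channels=c1 c2
a1.sinks=s1 s2
a1.sources.r1.type=netcat
a1.sources.r1.channels=c1 c2
a1.sinks.s1.channel=c1
a1.sinks.s2.channel=c2
"""
```

Calling `check_bindings(parse_properties(SAMPLE))` returns an empty list for this layout. Setting `a1.sinks.s1.channel=c1 c2` instead would be reported as a problem.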

Start Flume

[root@hadoop01 conf]# ../bin/flume-ng agent -n a1 -c ./ -f ./source-nc.conf -Dflume.root.logger=INFO,console

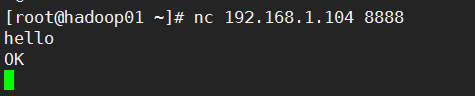

Start nc and connect to port 8888

Flume receives the message, and the logger sink prints it to the console
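If nc is not installed, the same test can be run from a short script. This is a minimal Python sketch using only the standard library; the function name `send_line` is hypothetical. It assumes the agent above is listening on port 8888 and that the netcat source answers each line with a one-line acknowledgement (its default behaviour, as far as I know, is to reply `OK`).

```python
import socket


def send_line(host, port, text, timeout=5.0):
    """Send one newline-terminated line and return the one-line reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        # The netcat source splits its input on newlines, one event per line.
        sock.sendall(text.encode("utf-8") + b"\n")
        chunks = []
        while True:
            data = sock.recv(1024)
            if not data:
                break  # the peer closed the connection
            chunks.append(data)
            if b"\n" in data:
                break  # a full reply line has arrived
    return b"".join(chunks).decode("utf-8").strip()
```

For example, `send_line("127.0.0.1", 8888, "hello flume")` should make the agent log an event whose body is `hello flume`.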

Avro source

source-avro.conf

a1.sources=r1

a1.channels=c1

a1.sinks=s1

a1.sources.r1.type=avro

a1.sources.r1.bind=0.0.0.0

a1.sources.r1.port=8888

a1.sources.r1.channels=c1

a1.channels.c1.type=memory

a1.channels.c1.capacity=1000

a1.channels.c1.transactionCapacity=100

a1.sinks.s1.type=logger

a1.sinks.s1.channel=c1

Start Flume

[root@hadoop01 conf]# ../bin/flume-ng agent -n a1 -c ./ -f ./source-avro.conf -Dflume.root.logger=INFO,console

Send a file to the source with Flume's built-in avro-client

[root@hadoop01 bin]# cat 1.txt

huawei mate10

[root@hadoop01 bin]# ./flume-ng avro-client -H 192.168.1.104 -p 8888 -F ./1.txt -c ../conf/

Exec source

source-exec.conf

a1.sources=r1

a1.channels=c1

a1.sinks=s1

a1.sources.r1.type=exec

a1.sources.r1.command=ping 192.168.1.104

a1.sources.r1.channels=c1

a1.channels.c1.type=memory

a1.channels.c1.capacity=1000

a1.channels.c1.transactionCapacity=100

a1.sinks.s1.type=logger

a1.sinks.s1.channel=c1

Start Flume

[root@hadoop01 conf]# ../bin/flume-ng agent -n a1 -c ./ -f ./source-exec.conf -Dflume.root.logger=INFO,console

SpoolDir source: watching a directory

source-spooldir.conf

a1.sources=r1

a1.channels=c1

a1.sinks=s1

a1.sources.r1.type=spooldir

a1.sources.r1.spoolDir=/root/spool

a1.sources.r1.fileSuffix=.mydone

a1.sources.r1.channels=c1

a1.channels.c1.type=memory

a1.channels.c1.capacity=1000

a1.channels.c1.transactionCapacity=100

a1.sinks.s1.type=logger

a1.sinks.s1.channel=c1

Start Flume

[root@hadoop01 conf]# ../bin/flume-ng agent -n a1 -c ./ -f ./source-spooldir.conf -Dflume.root.logger=INFO,console
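The spooling directory source expects files to be complete and unchanged once they appear in the watched directory, and file names should not be reused. A minimal Python sketch of one way to honour that: write the file elsewhere first, then rename it into the spool directory. The function name `drop_file` and the staging directory are hypothetical, and the staging directory is assumed to be on the same filesystem as `/root/spool` so that the rename is a single step.

```python
import os


def drop_file(staging_dir, spool_dir, name, text):
    """Write text to a staging file, then rename it into spool_dir."""
    target = os.path.join(spool_dir, name)
    # Refuse to reuse a file name that is already present in the spool directory.
    if os.path.exists(target):
        raise FileExistsError(target)
    staged = os.path.join(staging_dir, name)
    with open(staged, "w", encoding="utf-8") as handle:
        handle.write(text)
    # The rename makes the finished file appear in the spool directory at once.
    os.rename(staged, target)
    return target
```

With the configuration above, a file dropped this way should be renamed with the `.mydone` suffix once Flume has finished reading it.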

HTTP source (JSON)

source-http.conf

a1.sources=r1

a1.channels=c1

a1.sinks=s1

a1.sources.r1.type=http

a1.sources.r1.port=8888

a1.sources.r1.channels=c1

a1.channels.c1.type=memory

a1.channels.c1.capacity=1000

a1.channels.c1.transactionCapacity=100

a1.sinks.s1.type=logger

a1.sinks.s1.channel=c1

Start Flume

../bin/flume-ng agent -n a1 -c ./ -f ./source-http.conf -Dflume.root.logger=INFO,console

[root@hadoop01 ~]# curl -X POST -d '[{"headers":{"a":"a1","b":"b1"},"body":"hello http-flume"}]' http://0.0.0.0:8888

[root@hadoop01 ~]#
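The curl request above can also be sent from a script. The sketch below is a minimal Python version using only the standard library; `build_payload` and `post_events` are hypothetical names. It assumes the source uses its default JSON handler, which, as in the curl example, takes a JSON array of events, each with a `headers` object and a string `body`.

```python
import json
import urllib.request


def build_payload(bodies, headers=None):
    """Return the JSON array text: one event per body, all sharing the headers."""
    events = [{"headers": dict(headers or {}), "body": body} for body in bodies]
    return json.dumps(events)


def post_events(url, payload, timeout=5.0):
    """POST the payload to the HTTP source and return the HTTP status code."""
    request = urllib.request.Request(
        url,
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

For example, `post_events("http://127.0.0.1:8888", build_payload(["hello http-flume"], {"a": "a1", "b": "b1"}))` sends the same event as the curl command.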