A Test for the Presence of Jumps in Financial Markets using Neural Networks in R

Wall Street Bull

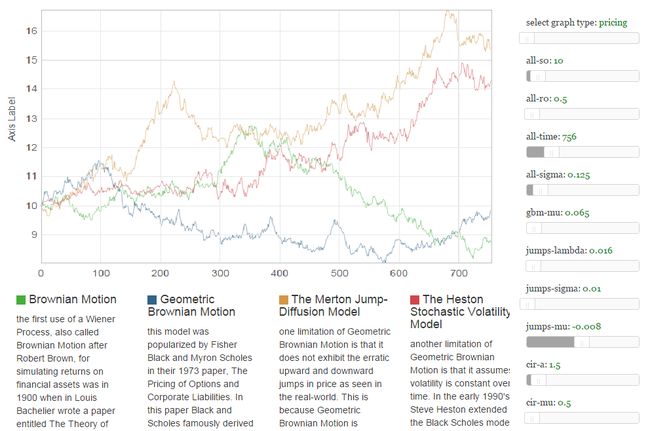

Modelling of financial markets is usually undertaken using stochastic processes. Stochastic processes are collections of random variables indexed, for our purposes, by time. Examples of stochastic processes used in finance include GBM, OU, the Heston model and jump diffusion processes. For a more mathematically detailed explanation of stochastic processes, diffusion and jump diffusion models, read this article. To get an intuitive feeling for how these different stochastic processes behave, visit the interactive web application that I worked on in conjunction with Turing Finance and Southern Ark.

As was witnessed during the recent financial crisis, stock markets exhibit jumps. That is, they exhibit large falls in value. Over the past couple of decades, there has been increasing interest in the modelling of these jumps. The classic model for jumps in stochastic processes is the Merton jump diffusion model. This model says that the returns from an asset are driven by “normal” price vibrations (representing the continuous diffusion component) and “abnormal” price vibrations (representing the discontinuous jump component). The SDE of the Merton jump diffusion model is given as:
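One standard way of writing the Merton SDE is shown here. The symbols are an assumption on my part and may differ from the notation used elsewhere in this post:

$$
\frac{dS_t}{S_{t^-}} = \mu \, dt + \sigma \, dW_t + (Y_t - 1) \, dN_t
$$

Here $S_t$ is the asset price, $\mu$ is the drift, $\sigma$ is the volatility of the diffusion component, $W_t$ is a standard Brownian motion, $N_t$ is a Poisson process with intensity $\lambda$ that counts the jumps, and $Y_t$ is the multiplicative jump size, with $\ln Y_t \sim N(\mu_J, \sigma_J^2)$. Setting $\lambda = 0$ removes the last term and recovers GBM. Merton's original formulation also subtracts $\lambda \, \mathbb{E}[Y_t - 1]$ from the drift so that $\mu$ remains the total expected return; that compensation is absorbed into $\mu$ above.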

In practice, before we can proceed with fitting a jump diffusion model to data, we first have to establish if the data that we are fitting the model to has jumps. This requires us to statistically test for the presence of jumps in return data.

Various tests have been developed for detecting jumps in return data. One example is the bi-power variation test of Barndorff-Nielsen and Shephard (2006). This jump test compares an estimate of variance that is not robust to the presence of jumps, called realized variance, with an estimate of variance that is robust to the presence of jumps, called bi-power variation. This test was improved by Aït-Sahalia and Jacod (2009). In their test, they compare the bi-power variations for returns sampled at different frequencies. Lee and Mykland (2008) also used insights from the test of Barndorff-Nielsen and Shephard (2006) by testing for the presence of jumps at each observed value of the process, while taking into account the volatility of the process at the time the observation was made. The test of Lee and Mykland (2008) has the added advantage that it not only indicates whether or not jumps have occurred, but also gives information about when the jumps occurred and how large they were.
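To make the comparison between realized variance and bi-power variation concrete, here is a minimal sketch in plain Python (used purely for illustration; it is not the code behind this post). It uses the textbook definitions, realized variance as the sum of squared returns and bi-power variation as $\frac{\pi}{2}\sum |r_i||r_{i-1}|$, and the function names and the `relative_jump` summary are my own assumptions. It is not the full Barndorff-Nielsen and Shephard test statistic, which also needs an estimate of the sampling error.

```python
import math
import random


def realized_variance(returns):
    """Sum of squared returns; a single large jump inflates this estimate."""
    return sum(r * r for r in returns)


def bipower_variation(returns):
    """(pi / 2) * sum of |r_i| * |r_{i-1}|; far less sensitive to rare jumps."""
    products = (abs(a) * abs(b) for a, b in zip(returns[1:], returns[:-1]))
    return (math.pi / 2.0) * sum(products)


def relative_jump(returns):
    """Share of realized variance that bi-power variation does not explain."""
    rv = realized_variance(returns)
    return (rv - bipower_variation(returns)) / rv


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical daily returns with 1% volatility and no jumps.
    clean = [rng.gauss(0.0, 0.01) for _ in range(1000)]
    # Same series with one artificial 20% jump added.
    jumpy = list(clean)
    jumpy[500] += 0.20
    print("relative jump, no jumps :", round(relative_jump(clean), 3))
    print("relative jump, one jump :", round(relative_jump(jumpy), 3))
```

Without jumps the two estimators should be close, so the relative jump measure sits near zero; a jump enters realized variance squared but enters bi-power variation only through products with ordinary neighbouring returns, which is why the gap widens.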

In this blog post, I propose a test for the presence of jumps using neural networks. The test is first assessed by simulation against the Lee and Mykland (2008) test, and we then look at how it fares on stocks listed on the JSE.

The Neural Network Test

An example of biological neural networks

Neural networks are a family of learning models within machine learning, inspired by biological neural networks. For a detailed analysis of neural networks and the algorithm used to train them, please refer to this article by Turing Finance.

As mentioned above, the test I am proposing uses neural networks to test for jumps. This test establishes whether or not the whole series of returns has jumps. That is, the test has a binary outcome. This means that we can treat testing for the presence of jumps as a classification problem: we want to classify a set of returns as belonging to one of two categories, having jumps or not having jumps.

Given that neural networks can perform well in classification problems, such as credit rating, it seems natural to see how they perform when trained to distinguish between a set of returns that has jumps and one that does not.

Architecture of Neural Network

As the test uses neural networks, we need to carefully think about the architecture of the neural network. That is, we need to think of: what the inputs to the network are, what number of hidden layers (and associated number of neurons) we should have, and what the output layer should look like.

I have chosen the following inputs to the neural network: the first and second centered moments, skewness, kurtosis, and the fifth, sixth, seventh and eighth centered moments. All of the moments used are sample moments. These particular variables were chosen because the tests of Barndorff-Nielsen and Shephard (2006), Aït-Sahalia and Jacod (2009) and Lee and Mykland (2008) use versions of these moments as their test statistics, so we believe that they should have strong predictive power. However, it should be noted that the moments are not necessarily independent, and this could affect the performance of the neural network. Thus the inputs to the neural network still need further work. Let

be a series of

It is important to note that this particular architecture was chosen just to illustrate how one would think about testing for jumps using neural networks. It is by no means necessarily the “best” architecture. This is definitely an area for future work, and we hope to cover it in later posts.
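The eight moment-based inputs described above can be sketched in plain Python as follows (for illustration only; this is not the code used in this post). Two choices here are my assumptions: the first input is taken to be the sample mean, since the first centered moment of any sample is identically zero, and the fifth to eighth moments are left unstandardized. The function names are hypothetical.

```python
import math


def central_moment(xs, k):
    """k-th sample moment about the sample mean."""
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** k for x in xs) / len(xs)


def moment_features(returns):
    """Eight inputs: mean, variance, skewness, kurtosis, 5th-8th centered moments.

    Assumption: 'first moment' is read as the sample mean, because the first
    centered moment is always zero and would carry no information.
    """
    mean = sum(returns) / len(returns)
    variance = central_moment(returns, 2)
    sd = math.sqrt(variance)
    skewness = central_moment(returns, 3) / sd ** 3
    kurtosis = central_moment(returns, 4) / sd ** 4
    # Assumption: higher moments are used raw, not divided by powers of sd.
    higher = [central_moment(returns, k) for k in range(5, 9)]
    return [mean, variance, skewness, kurtosis] + higher


if __name__ == "__main__":
    example = [0.01, -0.02, 0.015, -0.005, 0.08, -0.01]  # made-up returns
    for name, value in zip(
        ["mean", "var", "skew", "kurt", "m5", "m6", "m7", "m8"],
        moment_features(example),
    ):
        print(f"{name:>4}: {value:.3e}")
```

For daily returns the raw higher moments are extremely small numbers, so in practice one would probably rescale the inputs before passing them to a network; whether that was done here is not something this sketch tries to settle.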

Having decided on the architecture of the neural network, we still needed to train it. The neural network was trained on 3000 observations from a process that has jumps (generated using the Merton jump model) and a process that does not have jumps (generated using GBM). The neural network was trained using the neuralnet package in R.
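As a rough illustration of how labelled training data of this kind could be generated, here is a sketch in plain Python (not the R code used for this post). Every parameter value, the function names, and the even split between the two classes are hypothetical. For simplicity the drift is not compensated for the expected jump size.

```python
import math
import random


def poisson(rng, lam):
    """Poisson draw via Knuth's method; adequate for the small rates used here."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def gbm_returns(rng, n, mu=0.05, sigma=0.2, dt=1 / 252):
    """Daily log returns under GBM (no jumps)."""
    drift = (mu - 0.5 * sigma ** 2) * dt
    vol = sigma * math.sqrt(dt)
    return [drift + vol * rng.gauss(0.0, 1.0) for _ in range(n)]


def merton_returns(rng, n, lam=10.0, jump_mu=-0.05, jump_sigma=0.1, dt=1 / 252):
    """GBM log returns plus a Poisson number of normal log-jumps per day."""
    out = []
    for r in gbm_returns(rng, n, dt=dt):
        n_jumps = poisson(rng, lam * dt)
        out.append(r + sum(rng.gauss(jump_mu, jump_sigma) for _ in range(n_jumps)))
    return out


def labelled_sample(rng, n_series, n_obs):
    """Alternate GBM (label 0) and Merton (label 1) return series."""
    data = []
    for i in range(n_series):
        if i % 2 == 0:
            data.append((gbm_returns(rng, n_obs), 0))
        else:
            data.append((merton_returns(rng, n_obs), 1))
    return data


if __name__ == "__main__":
    sample = labelled_sample(random.Random(1), n_series=6, n_obs=250)
    for series, label in sample:
        print(label, round(max(abs(r) for r in series), 4))
```

Each series would then be reduced to its moment inputs and passed, together with its label, to the network for training.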

Simulation study

Simulations were undertaken to assess how the neural network test performs against the Lee and Mykland (2008) test. The underlying model assumed is the basic Merton model discussed above. Using simulations, we estimated, for each test, the probability of ACTUAL detection (the test detecting jumps in a series that has jumps) and the probability of FALSE detection (the test incorrectly detecting jumps in a series of returns that does not have jumps). The simulation was conducted at a daily frequency, using different combinations of the parameters. A more rigorous comparison would have to compare the two tests at different frequencies, and for large and small jumps.
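The two probabilities described above can be estimated by Monte Carlo along the following lines. This is a sketch in plain Python under stated assumptions, not the code behind the results in this post: the detector is a deliberately simple stand-in that flags a series when its sample kurtosis exceeds a hypothetical threshold (it is neither the neural network test nor the Lee and Mykland test), the jump count per day uses a Bernoulli approximation to the Poisson process, drift is set to zero, and all parameter values are made up.

```python
import math
import random


def simulate(rng, n, with_jumps, sigma=0.2, lam=10.0, jump_sigma=0.1, dt=1 / 252):
    """Daily log returns: diffusion only, or diffusion plus occasional jumps."""
    out = []
    for _ in range(n):
        r = sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        # Bernoulli approximation: at most one jump per day, since lam * dt is small.
        if with_jumps and rng.random() < lam * dt:
            r += rng.gauss(0.0, jump_sigma)
        out.append(r)
    return out


def kurtosis_detector(returns, threshold=4.0):
    """Stand-in test: report jumps when sample kurtosis exceeds the threshold."""
    n = len(returns)
    mean = sum(returns) / n
    m2 = sum((x - mean) ** 2 for x in returns) / n
    m4 = sum((x - mean) ** 4 for x in returns) / n
    return m4 / m2 ** 2 > threshold


def detection_rates(detector, n_trials=200, n_obs=500, seed=0):
    """Return (probability of actual detection, probability of false detection)."""
    rng = random.Random(seed)
    actual = sum(detector(simulate(rng, n_obs, True)) for _ in range(n_trials))
    false = sum(detector(simulate(rng, n_obs, False)) for _ in range(n_trials))
    return actual / n_trials, false / n_trials


if __name__ == "__main__":
    actual, false = detection_rates(kurtosis_detector)
    print("P(actual detection):", actual)
    print("P(false detection): ", false)
```

Any test that maps a return series to a yes/no answer can be passed in place of `kurtosis_detector`, which is what makes a like-for-like comparison between two tests possible.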

We have summarized the results of the simulations conducted in the table below:

Based on the simulation results in the table above, the neural network test appears to perform better than the Lee and Mykland (2008) test. This is because the probability of actual detection for the neural network test is higher than for the Lee and Mykland (2008) test, and its probability of false detection is lower.

Given that we have seen how the test performs on simulated data, we are now in a position to apply the test on data from the Johannesburg Stock Exchange.

Applying the Test to JSE Data

The Johannesburg Stock Exchange (JSE) building in Sandton. It has operated as a market place for the trading of financial products for nearly 125 years.

After seeing how the neural network test for jumps performs in simulations, we applied the test to 217 stocks which are listed on the Johannesburg Stock Exchange (JSE). The various stocks used in this post, categorized by industry, are shown in the table below.