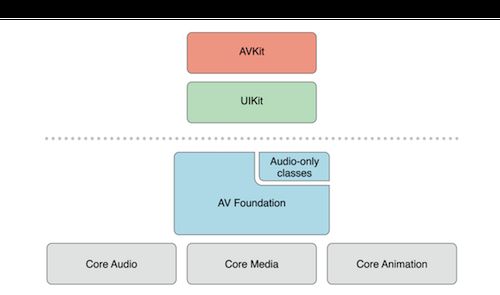

Overview

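AVFoundation is a framework for working with and creating time-based audiovisual media. It was built with current hardware and applications in mind, and its design relies heavily on multithreading: it takes full advantage of multi-core hardware, makes extensive use of blocks and GCD, and moves expensive processing onto background threads. It applies hardware acceleration automatically, so applications can run with the best possible performance on most devices. The framework was also designed for 64-bit processors and can take full advantage of them.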

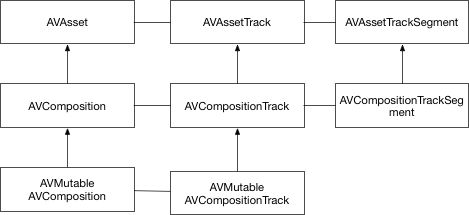

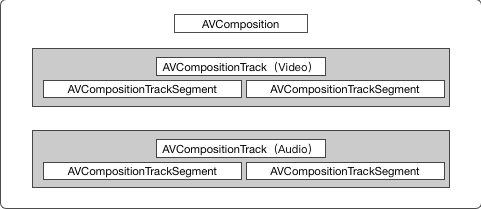

AVComposition

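AVFoundation's support for combining assets is built around AVComposition. A composition combines several other media assets into a custom arrangement. The tracks of an AVComposition are AVCompositionTrack objects (a subclass of AVAssetTrack), and each composition track is in turn made up of one or more media segments, represented by AVCompositionTrackSegment.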
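To make the composition, track, and segment hierarchy concrete, here is a minimal sketch that walks an existing composition and logs each track's segments. The function name `QMLogCompositionSegments` is hypothetical; it only reads the public `tracks`, `segments`, `timeMapping`, and `sourceURL` properties.

```objc
#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: prints every track of a composition and the
// segments that make up each track.
static void QMLogCompositionSegments(AVComposition *composition)
{
    for (AVCompositionTrack *track in composition.tracks) {
        NSLog(@"track %d (%@): %lu segment(s)",
              track.trackID, track.mediaType, (unsigned long)track.segments.count);
        for (AVCompositionTrackSegment *segment in track.segments) {
            // target: where the segment sits on the composition timeline.
            // source: which part of the source media it plays.
            CMTimeRange target = segment.timeMapping.target;
            CMTimeRange source = segment.timeMapping.source;
            NSLog(@"  %@ target %.2fs-%.2fs, source starts at %.2fs",
                  segment.isEmpty ? @"<empty>" : segment.sourceURL.lastPathComponent,
                  CMTimeGetSeconds(target.start),
                  CMTimeGetSeconds(CMTimeRangeGetEnd(target)),
                  CMTimeGetSeconds(source.start));
        }
    }
}
```

Calling it on `self.composition` after the inserts described later would show one segment per `insertTimeRange:` call, which is a quick way to check an edit.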

Creating a Composition

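AVComposition and AVCompositionTrack are immutable and only provide read-only access to their media, so to build a custom composition you use their mutable subclasses, AVMutableComposition and AVMutableCompositionTrack. When adding a track you specify its media type, such as AVMediaTypeVideo or AVMediaTypeAudio, together with a preferred track ID. You could pass an explicit ID of your own, but you normally pass kCMPersistentTrackID_Invalid, which leaves choosing a suitable track ID to the framework.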

```objc
- (void)buildComposition
{
    self.composition = [AVMutableComposition composition];
    self.videoTrack = [self.composition addMutableTrackWithMediaType:AVMediaTypeVideo preferredTrackID:kCMPersistentTrackID_Invalid];
    self.audioTrack = [self.composition addMutableTrackWithMediaType:AVMediaTypeAudio preferredTrackID:kCMPersistentTrackID_Invalid];
}
```
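Because kCMPersistentTrackID_Invalid only means "pick one for me", the tracks that come back do carry real IDs. A minimal sketch to confirm this; the function `QMTrackIDsAreAssigned` is hypothetical and exists only for illustration.

```objc
#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical check: with kCMPersistentTrackID_Invalid the composition
// assigns each new track a valid, unique track ID by itself.
static BOOL QMTrackIDsAreAssigned(void)
{
    AVMutableComposition *composition = [AVMutableComposition composition];
    AVMutableCompositionTrack *video =
        [composition addMutableTrackWithMediaType:AVMediaTypeVideo
                                 preferredTrackID:kCMPersistentTrackID_Invalid];
    AVMutableCompositionTrack *audio =
        [composition addMutableTrackWithMediaType:AVMediaTypeAudio
                                 preferredTrackID:kCMPersistentTrackID_Invalid];
    NSLog(@"video track ID %d, audio track ID %d", video.trackID, audio.trackID);
    return video.trackID != kCMPersistentTrackID_Invalid &&
           audio.trackID != kCMPersistentTrackID_Invalid &&
           video.trackID != audio.trackID;
}
```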

Loading Assets

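To load assets efficiently, AVAsset defers loading its properties until they are needed. Property access itself is always synchronous, though: if the requested property has not been loaded yet, the calling thread blocks until it is. To avoid this, AVAsset adopts the AVAsynchronousKeyValueLoading protocol, which lets you load property values asynchronously and then query their loading status.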

```objc
- (void)loadAssets
{
    AVAsset *video = [AVAsset assetWithURL:[[NSBundle mainBundle] URLForResource:@"1" withExtension:@"mp4"]];
    AVAsset *audio = [AVAsset assetWithURL:[[NSBundle mainBundle] URLForResource:@"许嵩-素颜" withExtension:@"mp3"]];

    [video loadValuesAsynchronouslyForKeys:@[@"tracks", @"duration", @"commonMetadata"] completionHandler:^{
        // Runs once the keys have finished loading (or failed);
        // it may be called on a background queue.
    }];

    [audio loadValuesAsynchronouslyForKeys:@[@"tracks", @"duration", @"commonMetadata"] completionHandler:^{
        // Same as above, for the audio asset.
    }];
}
```
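The completion handler only signals that loading has ended, not that it succeeded, so a handler normally checks each key with `statusOfValueForKey:error:` before touching the property. A minimal sketch under that assumption; `QMLoadAsset` and its `queue`/`completion` parameters are hypothetical names chosen for this example.

```objc
#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: loads `keys` asynchronously, then reports on `queue`
// whether every key actually reached AVKeyValueStatusLoaded.
static void QMLoadAsset(AVAsset *asset, NSArray<NSString *> *keys,
                        dispatch_queue_t queue,
                        void (^completion)(BOOL loaded, NSError *error))
{
    [asset loadValuesAsynchronouslyForKeys:keys completionHandler:^{
        NSError *error = nil;
        BOOL loaded = YES;
        for (NSString *key in keys) {
            // Failed or cancelled keys must not be read synchronously.
            if ([asset statusOfValueForKey:key error:&error] != AVKeyValueStatusLoaded) {
                loaded = NO;
                break;
            }
        }
        dispatch_async(queue, ^{
            completion(loaded, error);
        });
    }];
}
```

In the view controller, the `completion` block would be the place to call `addVideo:` or `addAudio:` on `_videoQueue`, and only when `loaded` is `YES`.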

Editing the Composition

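Once the video and audio tracks exist, you can insert media from the source assets' tracks into each of them. Insertion points and other editing times are expressed as CMTime values, and a span of time is expressed as a CMTimeRange.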
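A CMTime is a rational number of seconds (`value / timescale`, with an `int64_t` value and an `int32_t` timescale), and a CMTimeRange is a start plus a duration. The sketch below shows the basic arithmetic, plus a hypothetical `QMClampRange` helper that trims a requested range to the asset's duration so an insert never asks for media past the end of the source.

```objc
#import <Foundation/Foundation.h>
#import <CoreMedia/CoreMedia.h>

// Hypothetical helper: intersects the requested range with [0, duration).
static CMTimeRange QMClampRange(CMTimeRange requested, CMTime assetDuration)
{
    CMTimeRange available = CMTimeRangeMake(kCMTimeZero, assetDuration);
    return CMTimeRangeGetIntersection(requested, available);
}

static void QMTimeDemo(void)
{
    CMTime fiveSeconds = CMTimeMake(5, 1);           // 5/1 s
    CMTime halfSecond  = CMTimeMake(300, 600);       // 300/600 s = 0.5 s
    CMTime sum = CMTimeAdd(fiveSeconds, halfSecond); // 5.5 s

    // A range starting at 5 s and lasting 5 s ends at 10 s.
    CMTimeRange range = CMTimeRangeMake(CMTimeMake(5, 1), CMTimeMake(5, 1));
    CMTime end = CMTimeRangeGetEnd(range);

    NSLog(@"sum %.2fs, range ends at %.2fs",
          CMTimeGetSeconds(sum), CMTimeGetSeconds(end));
}
```

For example, asking for the first 10 s of a 7 s clip through `QMClampRange` yields a 7 s range instead of an insert that reaches past the end of the source.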

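```objc
- (void)addVideo:(AVAsset *)videoAsset toTimeRange:(CMTimeRange)range atTime:(CMTime)time
{
    // Use the first video track of the source asset.
    AVAssetTrack *sourceTrack = [[videoAsset tracksWithMediaType:AVMediaTypeVideo] firstObject];
    if (!sourceTrack) {
        NSLog(@"%@", @"no video track in asset");
        return;
    }
    NSError *error = nil;
    if (![self.videoTrack insertTimeRange:range ofTrack:sourceTrack atTime:time error:&error]) {
        NSLog(@"add video failed: %@", error);
        return;
    }
    NSLog(@"%@", @"add video finish");
}

- (void)addAudio:(AVAsset *)audioAsset toTimeRange:(CMTimeRange)range atTime:(CMTime)time
{
    // Use the first audio track of the source asset.
    AVAssetTrack *sourceTrack = [[audioAsset tracksWithMediaType:AVMediaTypeAudio] firstObject];
    if (!sourceTrack) {
        NSLog(@"%@", @"no audio track in asset");
        return;
    }
    NSError *error = nil;
    if (![self.audioTrack insertTimeRange:range ofTrack:sourceTrack atTime:time error:&error]) {
        NSLog(@"add audio failed: %@", error);
        return;
    }
    NSLog(@"%@", @"add audio finish");
}
```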

Mixing Audio

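When a composition is exported, every audio track plays at full (normal) volume by default. With a single audio track this is rarely a problem, but with several tracks they all compete with each other and some of them may become hard to hear. In that case you use an audio mix to control each track's volume over time. The `audioMix` method below starts the audio track at half volume and ramps it from 0.1 to 0.9 between 3 s and 6 s.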

```objc
- (AVAudioMix *)audioMix
{
    AVMutableAudioMixInputParameters *params = [AVMutableAudioMixInputParameters audioMixInputParametersWithTrack:self.audioTrack];
    // Half volume from the start of the track...
    [params setVolume:0.5f atTime:kCMTimeZero];
    // ...then ramp from 0.1 to 0.9 between 3 s and 6 s.
    [params setVolumeRampFromStartVolume:0.1f toEndVolume:0.9f timeRange:CMTimeRangeFromTimeToTime(CMTimeMake(3, 1), CMTimeMake(6, 1))];

    AVMutableAudioMix *audioMix = [AVMutableAudioMix audioMix];
    audioMix.inputParameters = @[params];
    return [audioMix copy]; // hand out an immutable AVAudioMix
}
```
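The method above adjusts a single track. When several audio tracks compete, each one gets its own AVMutableAudioMixInputParameters in the same mix. A minimal sketch, assuming one constant volume per track is enough; `QMAudioMixWithVolumes` is a hypothetical name.

```objc
#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: one input-parameters object per track, each with its
// own constant volume, so the tracks do not all play at full volume.
static AVAudioMix *QMAudioMixWithVolumes(NSArray<AVAssetTrack *> *tracks,
                                         NSArray<NSNumber *> *volumes)
{
    NSCParameterAssert(tracks.count == volumes.count);
    NSMutableArray<AVAudioMixInputParameters *> *allParams = [NSMutableArray array];
    for (NSUInteger i = 0; i < tracks.count; i++) {
        AVMutableAudioMixInputParameters *params =
            [AVMutableAudioMixInputParameters audioMixInputParametersWithTrack:tracks[i]];
        [params setVolume:volumes[i].floatValue atTime:kCMTimeZero];
        [allParams addObject:params];
    }
    AVMutableAudioMix *mix = [AVMutableAudioMix audioMix];
    mix.inputParameters = allParams;
    return [mix copy];
}
```

A typical use would be keeping the original soundtrack at 1.0 and background music at around 0.3; ramps can be added per track exactly as in `audioMix`.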

Saving the Media

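AVComposition and its related classes do not adopt the NSCoding protocol, so you cannot simply archive a composition's state to disk. To save an edited composition, you normally render it to a media file with AVAssetExportSession.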

```objc
- (void)export
{
    AVAssetExportSession *session = [AVAssetExportSession exportSessionWithAsset:[self.composition copy] presetName:AVAssetExportPresetHighestQuality];
    session.outputURL = [NSURL fileURLWithPath:kOutputFile];
    session.outputFileType = AVFileTypeMPEG4;
    session.audioMix = [self audioMix];
    NSLog(@"%@", kOutputFile);

    [session exportAsynchronouslyWithCompletionHandler:^{
        // Check the status, not only the error: a cancelled export is not a success.
        if (session.status == AVAssetExportSessionStatusCompleted) {
            NSLog(@"%@", @"finish export");
        } else {
            NSLog(@"error : %@", [session.error localizedDescription]);
        }
    }];
}
```
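Not every preset and file type is available for every asset, and an export does not overwrite an existing file at `outputURL`. A minimal sketch that checks both before exporting; `QMMakeMP4ExportSession` is a hypothetical helper, and returning `nil` on an unsupported configuration is a design choice of this example.

```objc
#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: returns a session configured for MPEG-4 output,
// or nil when the preset or file type is not supported for this asset.
static AVAssetExportSession *QMMakeMP4ExportSession(AVAsset *asset, NSURL *outputURL)
{
    NSString *preset = AVAssetExportPresetHighestQuality;
    if (![[AVAssetExportSession exportPresetsCompatibleWithAsset:asset] containsObject:preset]) {
        return nil;
    }
    AVAssetExportSession *session =
        [AVAssetExportSession exportSessionWithAsset:asset presetName:preset];
    if (![session.supportedFileTypes containsObject:AVFileTypeMPEG4]) {
        return nil;
    }
    // An existing file at outputURL would make the export fail.
    [[NSFileManager defaultManager] removeItemAtURL:outputURL error:NULL];
    session.outputURL = outputURL;
    session.outputFileType = AVFileTypeMPEG4;
    return session;
}
```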

Complete Example

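This example places two five-second audio segments, both taken from the start of the same MP3, under a ten-second video clip.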

```objc
//
//  ViewController.m
//  AVFoundation
//
//  Created by mac on 17/6/20.
//  Copyright © 2017年 Qinmin. All rights reserved.
//

#import "ViewController.h"
#import <AVFoundation/AVFoundation.h>
#import <AVKit/AVKit.h>
#import <CoreMedia/CoreMedia.h>

#define kDocumentPath(path) [[NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES) firstObject] stringByAppendingPathComponent:path]
#define kOutputFile kDocumentPath(@"out.mp4")

@interface ViewController ()

// Serial queue on which all composition edits and the export run.
@property (nonatomic, strong) dispatch_queue_t videoQueue;
@property (nonatomic, strong) AVMutableComposition *composition;
@property (nonatomic, strong) AVMutableCompositionTrack *videoTrack;
@property (nonatomic, strong) AVMutableCompositionTrack *audioTrack;

@end

@implementation ViewController

- (void)viewDidLoad
{
    [super viewDidLoad];
    _videoQueue = dispatch_queue_create("com.qm.video", DISPATCH_QUEUE_SERIAL);
    dispatch_async(_videoQueue, ^{
        [self buildComposition];
    });
}

// Tag 1: load and insert the media, tag 2: export, tag 3: play the result.
- (IBAction)buttonTapped:(UIButton *)sender
{
    if (sender.tag == 1) {
        dispatch_async(_videoQueue, ^{
            [[NSFileManager defaultManager] removeItemAtPath:kOutputFile error:nil];
            [self loadAssets];
        });
    } else if (sender.tag == 2) {
        dispatch_async(_videoQueue, ^{
            [self export];
        });
    } else if (sender.tag == 3) {
        [self play];
    }
}

- (void)loadAssets
{
    AVAsset *video = [AVAsset assetWithURL:[[NSBundle mainBundle] URLForResource:@"1" withExtension:@"mp4"]];
    AVAsset *audio = [AVAsset assetWithURL:[[NSBundle mainBundle] URLForResource:@"许嵩-素颜" withExtension:@"mp3"]];

    [video loadValuesAsynchronouslyForKeys:@[@"tracks", @"duration", @"commonMetadata"] completionHandler:^{
        dispatch_async(self.videoQueue, ^{
            // First 10 s of the video at the start of the composition.
            [self addVideo:video toTimeRange:CMTimeRangeMake(kCMTimeZero, CMTimeMake(10, 1)) atTime:kCMTimeZero];
        });
    }];

    [audio loadValuesAsynchronouslyForKeys:@[@"tracks", @"duration", @"commonMetadata"] completionHandler:^{
        dispatch_async(self.videoQueue, ^{
            // First 5 s of the MP3, inserted twice: at 0 s and at 5 s.
            [self addAudio:audio toTimeRange:CMTimeRangeMake(kCMTimeZero, CMTimeMake(5, 1)) atTime:kCMTimeZero];
            [self addAudio:audio toTimeRange:CMTimeRangeMake(kCMTimeZero, CMTimeMake(5, 1)) atTime:CMTimeMake(5, 1)];
        });
    }];
}

- (void)buildComposition
{
    self.composition = [AVMutableComposition composition];
    self.videoTrack = [self.composition addMutableTrackWithMediaType:AVMediaTypeVideo preferredTrackID:kCMPersistentTrackID_Invalid];
    self.audioTrack = [self.composition addMutableTrackWithMediaType:AVMediaTypeAudio preferredTrackID:kCMPersistentTrackID_Invalid];
}

- (void)addVideo:(AVAsset *)videoAsset toTimeRange:(CMTimeRange)range atTime:(CMTime)time
{
    AVAssetTrack *sourceTrack = [[videoAsset tracksWithMediaType:AVMediaTypeVideo] firstObject];
    if (!sourceTrack) {
        NSLog(@"%@", @"no video track in asset");
        return;
    }
    NSError *error = nil;
    if (![self.videoTrack insertTimeRange:range ofTrack:sourceTrack atTime:time error:&error]) {
        NSLog(@"add video failed: %@", error);
        return;
    }
    NSLog(@"%@", @"add video finish");
}

- (void)addAudio:(AVAsset *)audioAsset toTimeRange:(CMTimeRange)range atTime:(CMTime)time
{
    AVAssetTrack *sourceTrack = [[audioAsset tracksWithMediaType:AVMediaTypeAudio] firstObject];
    if (!sourceTrack) {
        NSLog(@"%@", @"no audio track in asset");
        return;
    }
    NSError *error = nil;
    if (![self.audioTrack insertTimeRange:range ofTrack:sourceTrack atTime:time error:&error]) {
        NSLog(@"add audio failed: %@", error);
        return;
    }
    NSLog(@"%@", @"add audio finish");
}

- (AVAudioMix *)audioMix
{
    AVMutableAudioMixInputParameters *params = [AVMutableAudioMixInputParameters audioMixInputParametersWithTrack:self.audioTrack];
    [params setVolume:0.5f atTime:kCMTimeZero];
    [params setVolumeRampFromStartVolume:0.1f toEndVolume:0.9f timeRange:CMTimeRangeFromTimeToTime(CMTimeMake(3, 1), CMTimeMake(6, 1))];

    AVMutableAudioMix *audioMix = [AVMutableAudioMix audioMix];
    audioMix.inputParameters = @[params];
    return [audioMix copy];
}

- (void)export
{
    AVAssetExportSession *session = [AVAssetExportSession exportSessionWithAsset:[self.composition copy] presetName:AVAssetExportPresetHighestQuality];
    session.outputURL = [NSURL fileURLWithPath:kOutputFile];
    session.outputFileType = AVFileTypeMPEG4;
    session.audioMix = [self audioMix];
    NSLog(@"%@", kOutputFile);

    [session exportAsynchronouslyWithCompletionHandler:^{
        if (session.status == AVAssetExportSessionStatusCompleted) {
            NSLog(@"%@", @"finish export");
        } else {
            NSLog(@"error : %@", [session.error localizedDescription]);
        }
    }];
}

- (void)play
{
    NSURL *videoURL = [NSURL fileURLWithPath:kOutputFile];
    AVPlayerViewController *avPlayer = [[AVPlayerViewController alloc] init];
    avPlayer.player = [[AVPlayer alloc] initWithURL:videoURL];
    avPlayer.videoGravity = AVLayerVideoGravityResizeAspect;
    [self presentViewController:avPlayer animated:YES completion:nil];
}

@end
```
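In this example the video and audio loads finish independently, so tapping "export" too early can export a composition whose inserts have not run yet. One way to serialize this, sketched under the assumption that a dispatch group is acceptable here, is to wait for every asset before continuing; `QMLoadAssetsThen` is a hypothetical helper and does not check per-key status.

```objc
#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: loads "tracks" and "duration" on every asset and runs
// `completion` on `queue` only after all of the loads have finished.
static void QMLoadAssetsThen(NSArray<AVAsset *> *assets, dispatch_queue_t queue,
                             dispatch_block_t completion)
{
    dispatch_group_t group = dispatch_group_create();
    NSArray<NSString *> *keys = @[@"tracks", @"duration"];
    for (AVAsset *asset in assets) {
        dispatch_group_enter(group);
        [asset loadValuesAsynchronouslyForKeys:keys completionHandler:^{
            dispatch_group_leave(group); // balances the enter above
        }];
    }
    // Fires immediately if `assets` is empty.
    dispatch_group_notify(group, queue, completion);
}
```

Inside `loadAssets`, the `completion` block passed with `_videoQueue` could perform the `addVideo:`/`addAudio:` calls and then `[self export]`, so the export always sees the finished composition.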

References

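AVFoundation开发秘籍:实践掌握iOS & OSX应用的视听处理技术 (Chinese edition of Bob McCune, *Learning AV Foundation: A Hands-on Guide to Mastering the AV Foundation Framework*)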

Source code: AVFoundation development, https://github.com/QinminiOS/AVFoundation