Part of the series: Android Inter-Process Communication (IPC)

1 Source locations

frameworks/native/libs/binder/

- Binder.cpp

- BpBinder.cpp

- IPCThreadState.cpp

- ProcessState.cpp

- IServiceManager.cpp

- IInterface.cpp

- Parcel.cpp

frameworks/native/include/binder/

- IInterface.h (includes BnInterface and BpInterface)

/frameworks/av/media/mediaserver/

- main_mediaserver.cpp

/frameworks/av/media/libmediaplayerservice/

- MediaPlayerService.cpp

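2 Overview

- Registering a service always involves some concrete service. Most write-ups use the media services as the example, so this article does the same.

- The entry point of the media server is main() in main_mediaserver.cpp:

frameworks/av/media/mediaserver/main_mediaserver.cpp, line 44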

int main(int argc __unused, char** argv)

{

// *** part of the code omitted ***

InitializeIcuOrDie();

// Obtain the ProcessState singleton

sp<ProcessState> proc(ProcessState::self());

// Obtain the BpServiceManager

sp<IServiceManager> sm = defaultServiceManager();

AudioFlinger::instantiate();

// Register the media player service

MediaPlayerService::instantiate();

ResourceManagerService::instantiate();

CameraService::instantiate();

AudioPolicyService::instantiate();

SoundTriggerHwService::instantiate();

RadioService::instantiate();

registerExtensions();

// Start the Binder thread pool

ProcessState::self()->startThreadPool();

// Add the current thread to the thread pool

IPCThreadState::self()->joinThreadPool();

}

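So main() does four things:

- First, it obtains the ProcessState instance.

- Second, it calls defaultServiceManager() to obtain an IServiceManager instance.

- Third, it initializes the important services.

- Finally, it calls startThreadPool() and joinThreadPool().

Note on obtaining the ServiceManager: as the previous article explained, defaultServiceManager() returns a BpServiceManager object, which is used to communicate with servicemanager.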

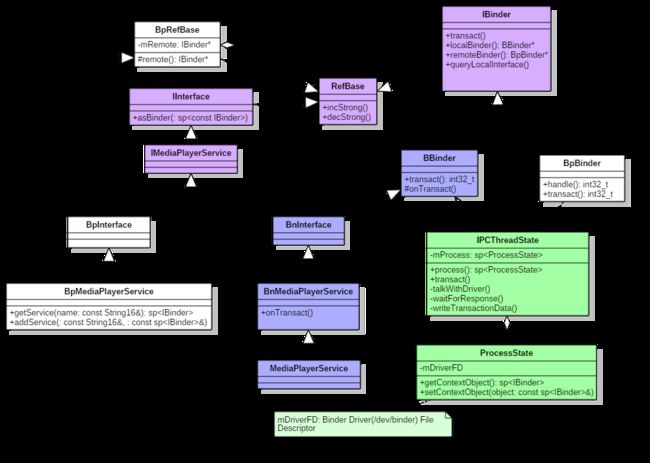

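3 Class diagram

This article is about services in the native layer, so it uses the native media services to walk through service registration. Start with the class relationships of media:

Legend

- Blue: registering MediaPlayerService

- Green: the parts of the Binder architecture that talk to the Binder driver

- Purple: the common interfaces and base classes used for registering and looking up services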

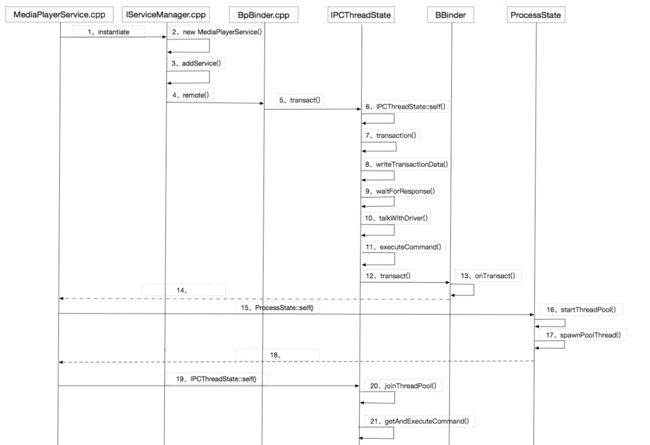

4 Sequence diagram

The following diagram shows how the media service registers itself with servicemanager during startup.

5 Walkthrough

5.1 The instantiate() function

// MediaPlayerService.cpp, line 269

void MediaPlayerService::instantiate() {

defaultServiceManager()->addService(String16("media.player"), new MediaPlayerService());

}

- 1. A new service object, MediaPlayerService (a BnMediaPlayerService), is created and handed to ServiceManager by calling addService() on the BpServiceManager. Other processes can then query ServiceManager for this service with the string "media.player".

- 2. Registering MediaPlayerService: defaultServiceManager() returns a BpServiceManager, and along the way the ProcessState and BpBinder objects are created. The call is therefore equivalent to BpServiceManager->addService().

5.2 The BpServiceManager::addService() function

/frameworks/native/libs/binder/IServiceManager.cpp, line 155

virtual status_t addService(const String16& name, const sp<IBinder>& service,

bool allowIsolated)

{

// data is the request parcel sent to BnServiceManager

Parcel data, reply;

// Write the interface token first, i.e. the header "android.os.IServiceManager"

data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());

// Then write the name of the new service; name is "media.player"

data.writeString16(name);

// The MediaPlayerService object

data.writeStrongBinder(service);

// allowIsolated = false

data.writeInt32(allowIsolated ? 1 : 0);

// remote() returns the BpBinder held by BpServiceManager

status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);

return err == NO_ERROR ? reply.readExceptionCode() : err;

}

Registration: MediaPlayerService is registered with ServiceManager under the name "media.player", so any other process can look the service up by that name.

The two calls worth a closer look are writeStrongBinder() and the final transact().

5.2.1 The writeStrongBinder() function

/frameworks/native/libs/binder/Parcel.cpp, line 872

status_t Parcel::writeStrongBinder(const sp<IBinder>& val)

{

return flatten_binder(ProcessState::self(), val, this);

}

It calls flatten_binder(), so follow that next.

5.2.1.1 The flatten_binder() function

/frameworks/native/libs/binder/Parcel.cpp, line 205

status_t flatten_binder(const sp<ProcessState>& /*proc*/,

const sp<IBinder>& binder, Parcel* out)

{

flat_binder_object obj;

obj.flags = 0x7f | FLAT_BINDER_FLAG_ACCEPTS_FDS;

// binder is not NULL

if (binder != NULL) {

IBinder *local = binder->localBinder();

if (!local) {

BpBinder *proxy = binder->remoteBinder();

const int32_t handle = proxy ? proxy->handle() : 0;

obj.type = BINDER_TYPE_HANDLE;

obj.binder = 0;

obj.handle = handle;

obj.cookie = 0;

} else {

// This branch is taken: MediaPlayerService is a local BBinder

obj.type = BINDER_TYPE_BINDER;

obj.binder = reinterpret_cast<uintptr_t>(local->getWeakRefs());

obj.cookie = reinterpret_cast<uintptr_t>(local);

}

} else {

...

}

return finish_flatten_binder(binder, obj, out);

}

In other words, the Binder object is flattened into a flat_binder_object:

- For a Binder entity, cookie records the pointer to the entity.

- For a Binder proxy, handle records the proxy's handle.
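
The two cases can be illustrated with a minimal, self-contained sketch. It does not use the real libbinder types: FlatObject, Kind, FakeBinder and flatten() are hypothetical stand-ins for flat_binder_object, the BINDER_TYPE_* constants, IBinder and flatten_binder(), and the NULL-binder branch that the excerpt elides is left out here as well.

```cpp
#include <cstdint>

// Hypothetical stand-ins for the real libbinder/kernel types.
enum class Kind { Binder, Handle };

struct FlatObject {
    Kind kind;
    std::uintptr_t binder;  // weak-ref pointer for a local entity, else 0
    std::uint32_t handle;   // driver handle for a proxy, else 0
    std::uintptr_t cookie;  // pointer to the local entity, else 0
};

struct FakeBinder {
    bool isLocal;          // true: plays the role of a BBinder; false: a BpBinder
    std::uint32_t handle;  // only meaningful for a proxy
};

// Mirrors the two non-null branches of flatten_binder(): a local entity is
// described by pointers, a proxy by its handle.
FlatObject flatten(const FakeBinder& b) {
    if (b.isLocal) {
        const auto p = reinterpret_cast<std::uintptr_t>(&b);
        return FlatObject{Kind::Binder, p, 0, p};
    }
    return FlatObject{Kind::Handle, 0, b.handle, 0};
}
```

In this sketch the same address is used for both binder and cookie; the real code stores the weak-reference object in binder and the BBinder pointer in cookie.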

The localBinder() implementations are:

// frameworks/native/libs/binder/Binder.cpp, line 191

BBinder* BBinder::localBinder()

{

return this;

}

// frameworks/native/libs/binder/Binder.cpp, line 47

BBinder* IBinder::localBinder()

{

return NULL;

}

flatten_binder() ends by calling finish_flatten_binder():

5.2.1.2 The finish_flatten_binder() function

// frameworks/native/libs/binder/Parcel.cpp, line 199

inline static status_t finish_flatten_binder(

const sp<IBinder>& /*binder*/, const flat_binder_object& flat, Parcel* out)

{

return out->writeObject(flat, false);

}

5.2.2 The transact() function

// frameworks/native/libs/binder/BpBinder.cpp, line 159

status_t BpBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

if (mAlive) {

// code=ADD_SERVICE_TRANSACTION

status_t status = IPCThreadState::self()->transact(

mHandle, code, data, reply, flags);

if (status == DEAD_OBJECT) mAlive = 0;

return status;

}

return DEAD_OBJECT;

}

The Binder proxy's transact() delegates the real work to IPCThreadState::transact(). First, look at what IPCThreadState::self() does.

5.2.2.1 The IPCThreadState::self() function

// frameworks/native/libs/binder/IPCThreadState.cpp, line 280

IPCThreadState* IPCThreadState::self()

{

if (gHaveTLS) {

restart:

const pthread_key_t k = gTLS;

IPCThreadState* st = (IPCThreadState*)pthread_getspecific(k);

if (st) return st;

// No IPCThreadState for this thread yet: create one

return new IPCThreadState;

}

if (gShutdown) return NULL;

pthread_mutex_lock(&gTLSMutex);

// gHaveTLS is false on the first call

if (!gHaveTLS) {

// Create the thread-local storage key

if (pthread_key_create(&gTLS, threadDestructor) != 0) {

pthread_mutex_unlock(&gTLSMutex);

return NULL;

}

gHaveTLS = true;

}

pthread_mutex_unlock(&gTLSMutex);

goto restart;

}

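TLS stands for thread-local storage. Every thread has its own private TLS area that is not shared with other threads; its contents are read and written with pthread_getspecific() and pthread_setspecific(). IPCThreadState::self() fetches the IPCThreadState object stored in the calling thread's TLS, creating it on first use.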
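
The per-thread lookup can be tried in isolation with a small sketch. ThreadState and threadStateSelf() are hypothetical names standing in for IPCThreadState and IPCThreadState::self(). Note one deliberate difference: the key is created with pthread_once() here, whereas the code above guards it with a mutex and the gHaveTLS flag.

```cpp
#include <pthread.h>

// Hypothetical per-thread state, standing in for IPCThreadState.
struct ThreadState {
    int transactions = 0;
};

static pthread_key_t gKey;
static pthread_once_t gKeyOnce = PTHREAD_ONCE_INIT;

// Runs when a thread that owns a ThreadState exits.
static void destroyState(void* p) { delete static_cast<ThreadState*>(p); }

// Creates the key exactly once for the whole process.
static void createKey() { pthread_key_create(&gKey, destroyState); }

// Returns the calling thread's ThreadState, creating it on first use.
ThreadState* threadStateSelf() {
    pthread_once(&gKeyOnce, createKey);
    auto* st = static_cast<ThreadState*>(pthread_getspecific(gKey));
    if (st == nullptr) {
        st = new ThreadState;
        pthread_setspecific(gKey, st);
    }
    return st;
}
```

Calling threadStateSelf() twice on the same thread returns the same pointer, while a different thread gets its own object.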

5.2.2.2 The IPCThreadState constructor

// frameworks/native/libs/binder/IPCThreadState.cpp, line 686

IPCThreadState::IPCThreadState()

: mProcess(ProcessState::self()),

mMyThreadId(gettid()),

mStrictModePolicy(0),

mLastTransactionBinderFlags(0)

{

pthread_setspecific(gTLS, this);

clearCaller();

mIn.setDataCapacity(256);

mOut.setDataCapacity(256);

}

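Every thread has one IPCThreadState, and every IPCThreadState has one mIn and one mOut. The member mProcess holds the ProcessState object, of which there is exactly one per process.

- mIn: receives data coming from the Binder device; initial capacity 256 bytes

- mOut: holds data to be sent to the Binder device; initial capacity 256 bytes

5.2.2.3 The IPCThreadState::transact() function

// frameworks/native/libs/binder/IPCThreadState.cpp, line 548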

status_t IPCThreadState::transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags)

{

// Check the data for errors

status_t err = data.errorCheck();

flags |= TF_ACCEPT_FDS;

// *** part of the code omitted ***

if (err == NO_ERROR) {

// Queue the transaction data

err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL);

}

// *** part of the code omitted ***

if ((flags & TF_ONE_WAY) == 0) {

if (reply) {

// Wait for the reply

err = waitForResponse(reply);

} else {

Parcel fakeReply;

err = waitForResponse(&fakeReply);

}

} else {

// One-way call: waitForResponse(NULL, NULL)

err = waitForResponse(NULL, NULL);

}

return err;

}

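IPCThreadState::transact() handles the transaction in three steps:

- errorCheck(): checks the data for errors

- writeTransactionData(): queues the data for transmission

- waitForResponse(): waits for the response

The two to look at closely are writeTransactionData() and waitForResponse().

5.2.2.3.1 The writeTransactionData() function

// frameworks/native/libs/binder/IPCThreadState.cpp, line 904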

status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags, int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)

{

binder_transaction_data tr;

tr.target.ptr = 0; /* Don't pass uninitialized stack data to a remote process */

// handle=0

tr.target.handle = handle;

//code=ADD_SERVICE_TRANSACTION

tr.code = code;

// binderFlags = TF_ACCEPT_FDS (set by flags |= TF_ACCEPT_FDS in transact())

tr.flags = binderFlags;

tr.cookie = 0;

tr.sender_pid = 0;

tr.sender_euid = 0;

// data is the Parcel that describes the media service

const status_t err = data.errorCheck();

if (err == NO_ERROR) {

tr.data_size = data.ipcDataSize();

tr.data.ptr.buffer = data.ipcData();

tr.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t);

tr.data.ptr.offsets = data.ipcObjects();

} else if (statusBuffer) {

tr.flags |= TF_STATUS_CODE;

*statusBuffer = err;

tr.data_size = sizeof(status_t);

tr.data.ptr.buffer = reinterpret_cast<uintptr_t>(statusBuffer);

tr.offsets_size = 0;

tr.data.ptr.offsets = 0;

} else {

return (mLastError = err);

}

// cmd=BC_TRANSACTION

mOut.writeInt32(cmd);

// Write the binder_transaction_data

mOut.write(&tr, sizeof(tr));

return NO_ERROR;

}

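- handle identifies the target. When registering a service the target is the service manager; handle = 0 corresponds to binder_context_mgr_node, the Binder entity that belongs to the service manager.

- binder_transaction_data is the data structure used to talk to the Binder driver. The function ends by writing the Binder command BC_TRANSACTION followed by the binder_transaction_data into mOut.

- Within transact(), once the binder_transaction_data has been written, waitForResponse() runs next.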
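
The layout that ends up in mOut, one command word followed by the raw structure, can be sketched with a plain byte vector. TxData, queueTransaction() and peekCommand() are hypothetical and much smaller than the real binder_transaction_data and Parcel; the sketch only shows the "command, then payload" framing.

```cpp
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical, simplified stand-in for binder_transaction_data.
struct TxData {
    std::uint32_t handle;    // target; 0 means the service manager
    std::uint32_t code;      // e.g. the "add service" transaction code
    std::uint32_t flags;
    std::uint32_t dataSize;  // size of the payload the real struct points to
};

// Appends one command word followed by the raw struct bytes, which is the
// framing writeTransactionData() leaves in mOut.
void queueTransaction(std::vector<std::uint8_t>& out, std::uint32_t cmd, const TxData& tr) {
    const auto* c = reinterpret_cast<const std::uint8_t*>(&cmd);
    out.insert(out.end(), c, c + sizeof(cmd));
    const auto* t = reinterpret_cast<const std::uint8_t*>(&tr);
    out.insert(out.end(), t, t + sizeof(tr));
}

// Reads the command word back from the front of a non-empty buffer.
std::uint32_t peekCommand(const std::vector<std::uint8_t>& out) {
    std::uint32_t cmd = 0;
    std::memcpy(&cmd, out.data(), sizeof(cmd));
    return cmd;
}
```

Nothing is sent at this point; as in the real code, the bytes only sit in the output buffer until the next exchange with the driver.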

5.2.2.3.2 The waitForResponse() function

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult) {

uint32_t cmd;

int32_t err;

while (1) {

if ((err = talkWithDriver()) < NO_ERROR) break;

err = mIn.errorCheck();

if (err < NO_ERROR) break;

if (mIn.dataAvail() == 0) continue;

cmd = (uint32_t) mIn.readInt32();

IF_LOG_COMMANDS() {

alog << "Processing waitForResponse Command: "

<< getReturnString(cmd) << endl;

}

switch (cmd) {

case BR_TRANSACTION_COMPLETE:

if (!reply && !acquireResult) goto finish;

break;

case BR_DEAD_REPLY:

err = DEAD_OBJECT;

goto finish;

case BR_FAILED_REPLY:

err = FAILED_TRANSACTION;

goto finish;

case BR_ACQUIRE_RESULT: {

ALOG_ASSERT(acquireResult != NULL, "Unexpected brACQUIRE_RESULT");

const int32_t result = mIn.readInt32();

if (!acquireResult) continue;

*acquireResult = result ? NO_ERROR : INVALID_OPERATION;

}

goto finish;

case BR_REPLY: {

binder_transaction_data tr;

err = mIn.read( & tr, sizeof(tr));

ALOG_ASSERT(err == NO_ERROR, "Not enough command data for brREPLY");

if (err != NO_ERROR) goto finish;

if (reply) {

if ((tr.flags & TF_STATUS_CODE) == 0) {

reply->ipcSetDataReference(

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size / sizeof(binder_size_t),

freeBuffer, this);

} else {

err = *reinterpret_cast<const status_t*>(tr.data.ptr.buffer);

freeBuffer(NULL,

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size / sizeof(binder_size_t), this);

}

} else {

freeBuffer(NULL,

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size / sizeof(binder_size_t), this);

continue;

}

}

goto finish;

default:

err = executeCommand(cmd);

if (err != NO_ERROR) goto finish;

break;

}

}

finish:

if (err != NO_ERROR) {

if (acquireResult) *acquireResult = err;

if (reply) reply -> setError(err);

mLastError = err;

}

return err;

}

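In waitForResponse(), the caller first receives BR_TRANSACTION_COMPLETE. The target process meanwhile receives the transaction as BR_TRANSACTION, handles it, and sends the result back, at which point the caller processes BR_REPLY.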
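
The order of return codes can be modelled with a small loop. Ret, Status and waitFor() are hypothetical simplifications: the queue replaces the data read from the driver, and only the four return codes discussed here are handled.

```cpp
#include <deque>

// Hypothetical return codes, standing in for the driver's BR_* values.
enum class Ret { TransactionComplete, Reply, DeadReply, FailedReply };

enum class Status { Ok, DeadObject, FailedTransaction, NoReply };

// Consumes return codes roughly the way waitForResponse() does:
// TransactionComplete ends the wait only when no reply is expected.
Status waitFor(std::deque<Ret>& incoming, bool expectReply) {
    while (!incoming.empty()) {
        const Ret r = incoming.front();
        incoming.pop_front();
        switch (r) {
            case Ret::TransactionComplete:
                if (!expectReply) return Status::Ok;
                break;  // keep waiting for the reply
            case Ret::Reply:
                return Status::Ok;
            case Ret::DeadReply:
                return Status::DeadObject;
            case Ret::FailedReply:
                return Status::FailedTransaction;
        }
    }
    return Status::NoReply;  // the real loop would block in talkWithDriver() instead
}
```

For addService() the call is synchronous, so the wait continues past the completion notice until the reply arrives; a one-way call would return at the completion notice.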

5.2.2.3.3 The talkWithDriver() function

status_t IPCThreadState::talkWithDriver(bool doReceive) {

if (mProcess -> mDriverFD <= 0) {

return -EBADF;

}

binder_write_read bwr;

// Is the read buffer empty?

const bool needRead = mIn.dataPosition() >= mIn.dataSize();

// We don't want to write anything if we are still reading

// from data left in the input buffer and the caller

// has requested to read the next data.

const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

bwr.write_size = outAvail;

bwr.write_buffer = (uintptr_t) mOut.data();

// This is what we'll read.

if (doReceive && needRead) {

// Describe the receive buffer; any data that arrives is written straight into mIn.

bwr.read_size = mIn.dataCapacity();

bwr.read_buffer = (uintptr_t) mIn.data();

} else {

bwr.read_size = 0;

bwr.read_buffer = 0;

}

IF_LOG_COMMANDS() {

TextOutput::Bundle _b(alog);

if (outAvail != 0) {

alog << "Sending commands to driver: " << indent;

const void*cmds = (const void*)bwr.write_buffer;

const void*end = ((const uint8_t *)cmds)+bwr.write_size;

alog << HexDump(cmds, bwr.write_size) << endl;

while (cmds < end) cmds = printCommand(alog, cmds);

alog << dedent;

}

alog << "Size of receive buffer: " << bwr.read_size

<< ", needRead: " << needRead << ", doReceive: " << doReceive << endl;

}

// Return immediately if there is nothing to do.

// If both the read and write buffers are empty, return immediately

if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

do {

IF_LOG_COMMANDS() {

alog << "About to read/write, write size = " << mOut.dataSize() << endl;

}

#if defined(HAVE_ANDROID_OS)

// Talk to the Binder driver through read/write ioctl calls; the loop retries on EINTR

if (ioctl(mProcess -> mDriverFD, BINDER_WRITE_READ, & bwr) >=0)

err = NO_ERROR;

else

err = -errno;

#else

err = INVALID_OPERATION;

#endif

if (mProcess -> mDriverFD <= 0) {

err = -EBADF;

}

IF_LOG_COMMANDS() {

alog << "Finished read/write, write size = " << mOut.dataSize() << endl;

}

} while (err == -EINTR);

IF_LOG_COMMANDS() {

alog << "Our err: " << (void*)(intptr_t) err << ", write consumed: "

<< bwr.write_consumed << " (of " << mOut.dataSize()

<< "), read consumed: " << bwr.read_consumed << endl;

}

if (err >= NO_ERROR) {

if (bwr.write_consumed > 0) {

if (bwr.write_consumed < mOut.dataSize())

mOut.remove(0, bwr.write_consumed);

else

mOut.setDataSize(0);

}

if (bwr.read_consumed > 0) {

mIn.setDataSize(bwr.read_consumed);

mIn.setDataPosition(0);

}

IF_LOG_COMMANDS() {

TextOutput::Bundle _b(alog);

alog << "Remaining data size: " << mOut.dataSize() << endl;

alog << "Received commands from driver: " << indent;

const void*cmds = mIn.data();

const void*end = mIn.data() + mIn.dataSize();

alog << HexDump(cmds, mIn.dataSize()) << endl;

while (cmds < end) cmds = printReturnCommand(alog, cmds);

alog << dedent;

}

return NO_ERROR;

}

return err;

}

binder_write_read is the structure used to exchange data with the Binder device. The ioctl() call on mDriverFD is where data is actually read from and written to the Binder driver.
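
After the ioctl() returns, talkWithDriver() uses write_consumed to decide how much of mOut is still pending. A minimal sketch of that bookkeeping, with a byte vector in place of the Parcel and a hypothetical helper name dropConsumed():

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Drops the bytes the driver reports as consumed from the front of the
// pending output, mirroring the write_consumed handling in talkWithDriver().
void dropConsumed(std::vector<std::uint8_t>& out, std::size_t consumed) {
    if (consumed == 0) return;  // nothing was written
    if (consumed < out.size()) {
        // Partial write: keep the unsent tail for the next ioctl.
        out.erase(out.begin(), out.begin() + static_cast<std::ptrdiff_t>(consumed));
    } else {
        out.clear();  // everything was consumed
    }
}
```

The read side is simpler: read_consumed becomes the new data size of mIn and the read position is reset to 0.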

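After the system call, ioctl() enters the Binder driver.

The overall flow is shown in the figure below.

6 The Binder driver

Inside the Binder driver the call chain is:

ioctl -> binder_ioctl -> binder_ioctl_write_read

6.1 The binder_ioctl_write_read() function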

static int binder_ioctl_write_read(struct file *filp,

unsigned int cmd, unsigned long arg,

struct binder_thread *thread)

{

struct binder_proc *proc = filp->private_data;

void __user *ubuf = (void __user *)arg;

struct binder_write_read bwr;

// Copy the bwr structure from user space into kernel space

copy_from_user(&bwr, ubuf, sizeof(bwr));

// *** part of the code omitted ***

if (bwr.write_size > 0) {

// Deliver the data to the target process

ret = binder_thread_write(proc, thread,

bwr.write_buffer,

bwr.write_size,

&bwr.write_consumed);

// *** part of the code omitted ***

}

if (bwr.read_size > 0) {

// Read the data queued for the caller

ret = binder_thread_read(proc, thread, bwr.read_buffer,

bwr.read_size,

&bwr.read_consumed,

filp->f_flags & O_NONBLOCK);

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

// *** part of the code omitted ***

}

// Copy the bwr structure from kernel space back to user space

copy_to_user(ubuf, &bwr, sizeof(bwr));

// *** part of the code omitted ***

}

6.2 The binder_thread_write() function

static int binder_thread_write(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed)

{

uint32_t cmd;

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

while (ptr < end && thread->return_error == BR_OK) {

// Copy the command from user space; here it is BC_TRANSACTION

if (get_user(cmd, (uint32_t __user *)ptr)) return -EFAULT;

ptr += sizeof(uint32_t);

switch (cmd) {

case BC_TRANSACTION:

case BC_REPLY: {

struct binder_transaction_data tr;

// Copy the binder_transaction_data from user space

if (copy_from_user(&tr, ptr, sizeof(tr))) return -EFAULT;

ptr += sizeof(tr);

binder_transaction(proc, thread, &tr, cmd == BC_REPLY);

break;

}

// *** part of the code omitted ***

}

*consumed = ptr - buffer;

}

return 0;

}

6.3 The binder_transaction() function

static void binder_transaction(struct binder_proc *proc,

struct binder_thread *thread,

struct binder_transaction_data *tr, int reply){

if (reply) {

// *** part of the code omitted ***

} else {

if (tr->target.handle) {

// *** part of the code omitted ***

} else {

// handle = 0: the target is the servicemanager node

target_node = binder_context_mgr_node;

}

// target_proc is the servicemanager process

target_proc = target_node->proc;

}

if (target_thread) {

// *** part of the code omitted ***

} else {

// Use the todo queue of the servicemanager process

target_list = &target_proc->todo;

target_wait = &target_proc->wait;

}

t = kzalloc(sizeof(*t), GFP_KERNEL);

tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);

// For a non-oneway call, record the current thread in the transaction's from field

if (!reply && !(tr->flags & TF_ONE_WAY))

t->from = thread;

else

t->from = NULL;

t->sender_euid = task_euid(proc->tsk);

t->to_proc = target_proc; // the target of this call is the servicemanager process

t->to_thread = target_thread;

t->code = tr->code; // for this call, code = ADD_SERVICE_TRANSACTION

t->flags = tr->flags; // for this call, TF_ONE_WAY is not set

t->priority = task_nice(current);

// Allocate the buffer from the servicemanager process

t->buffer = binder_alloc_buf(target_proc, tr->data_size,

tr->offsets_size, !reply && (t->flags & TF_ONE_WAY));

t->buffer->allow_user_free = 0;

t->buffer->transaction = t;

t->buffer->target_node = target_node;

if (target_node)

// Increment the node's reference count

binder_inc_node(target_node, 1, 0, NULL);

offp = (binder_size_t *)(t->buffer->data + ALIGN(tr->data_size, sizeof(void *)));

// Copy ptr.buffer and ptr.offsets of the user-space binder_transaction_data into the kernel

copy_from_user(t->buffer->data,

(const void __user *)(uintptr_t)tr->data.ptr.buffer, tr->data_size);

copy_from_user(offp,

(const void __user *)(uintptr_t)tr->data.ptr.offsets, tr->offsets_size);

off_end = (void *)offp + tr->offsets_size;

for (; offp < off_end; offp++) {

struct flat_binder_object *fp;

fp = (struct flat_binder_object *)(t->buffer->data + *offp);

off_min = *offp + sizeof(struct flat_binder_object);

switch (fp->type) {

case BINDER_TYPE_BINDER:

case BINDER_TYPE_WEAK_BINDER: {

struct binder_ref *ref;

struct binder_node *node = binder_get_node(proc, fp->binder);

if (node == NULL) {

// Create the binder_node in the process that hosts the service

node = binder_new_node(proc, fp->binder, fp->cookie);

// *** part of the code omitted ***

}

// Get or create the binder_ref in the servicemanager process

ref = binder_get_ref_for_node(target_proc, node);

...

// Rewrite the type to the corresponding HANDLE type

if (fp->type == BINDER_TYPE_BINDER)

fp->type = BINDER_TYPE_HANDLE;

else

fp->type = BINDER_TYPE_WEAK_HANDLE;

fp->binder = 0;

fp->handle = ref->desc; // set the handle value

fp->cookie = 0;

binder_inc_ref(ref, fp->type == BINDER_TYPE_HANDLE,

&thread->todo);

} break;

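// *** other object types omitted ***

} // end of switch

} // end of for

if (reply) {

// *** part of the code omitted ***

} else if (!(t->flags & TF_ONE_WAY)) {

// BC_TRANSACTION and not oneway: record the transaction stack information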

t->need_reply = 1;

t->from_parent = thread->transaction_stack;

thread->transaction_stack = t;

} else {

// *** part of the code omitted ***

}

// Add BINDER_WORK_TRANSACTION to the target queue; for this call that is target_proc->todo

t->work.type = BINDER_WORK_TRANSACTION;

list_add_tail(&t->work.entry, target_list);

// Add BINDER_WORK_TRANSACTION_COMPLETE to the current thread's todo queue

tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;

list_add_tail(&tcomplete->entry, &thread->todo);

// Wake up the wait queue; for this call that is target_proc->wait

if (target_wait)

wake_up_interruptible(target_wait);

return;

}

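- When a service is registered, the object passed is a BBinder, so in writeStrongBinder() above localBinder() is non-null and flat_binder_object.type is BINDER_TYPE_BINDER.

- Registration creates a binder_node in the process that hosts the service and a binder_ref in the servicemanager process. For any given binder_node, each process creates at most one binder_ref.

- A BINDER_WORK_TRANSACTION work item is added to servicemanager's binder_proc->todo, and control then passes to the ServiceManager process.

Three functions used inside binder_transaction() deserve a closer look: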

- binder_get_node()

- binder_new_node()

- binder_get_ref_for_node()

6.3.1 The binder_get_node() function

// /kernel/drivers/android/binder.c, line 904

static struct binder_node *binder_get_node(struct binder_proc *proc,

binder_uintptr_t ptr)

{

struct rb_node *n = proc->nodes.rb_node;

struct binder_node *node;

while (n) {

node = rb_entry(n, struct binder_node, rb_node);

if (ptr < node->ptr)

n = n->rb_left;

else if (ptr > node->ptr)

n = n->rb_right;

else

return node;

}

return NULL;

}

This looks up, in the given binder_proc, the binder_node whose ptr matches the Binder pointer ptr.

6.3.2 The binder_new_node() function

// kernel/drivers/android/binder.c, line 923

static struct binder_node *binder_new_node(struct binder_proc *proc,

binder_uintptr_t ptr,

binder_uintptr_t cookie)

{

struct rb_node **p = &proc->nodes.rb_node;

struct rb_node *parent = NULL;

struct binder_node *node;

// The tree is empty the first time through

while (*p) {

parent = *p;

node = rb_entry(parent, struct binder_node, rb_node);

if (ptr < node->ptr)

p = &(*p)->rb_left;

else if (ptr > node->ptr)

p = &(*p)->rb_right;

else

return NULL;

}

// Allocate memory for the new binder_node

node = kzalloc(sizeof(*node), GFP_KERNEL);

if (node == NULL)

return NULL;

binder_stats_created(BINDER_STAT_NODE);

// Insert the new node into the proc's red-black tree

rb_link_node(&node->rb_node, parent, p);

rb_insert_color(&node->rb_node, &proc->nodes);

node->debug_id = ++binder_last_id;

node->proc = proc;

node->ptr = ptr;

node->cookie = cookie;

// Set the type of the binder_work

node->work.type = BINDER_WORK_NODE;

INIT_LIST_HEAD(&node->work.entry);

INIT_LIST_HEAD(&node->async_todo);

binder_debug(BINDER_DEBUG_INTERNAL_REFS,

"%d:%d node %d u%016llx c%016llx created\n",

proc->pid, current->pid, node->debug_id,

(u64)node->ptr, (u64)node->cookie);

return node;

}

6.3.3 The binder_get_ref_for_node() function

// kernel/drivers/android/binder.c, line 1066

static struct binder_ref *binder_get_ref_for_node(struct binder_proc *proc,

struct binder_node *node)

{

struct rb_node *n;

struct rb_node **p = &proc->refs_by_node.rb_node;

struct rb_node *parent = NULL;

struct binder_ref *ref, *new_ref;

// Search the refs_by_node red-black tree; if a binder_ref already exists, return it.

while (*p) {

parent = *p;

ref = rb_entry(parent, struct binder_ref, rb_node_node);

if (node < ref->node)

p = &(*p)->rb_left;

else if (node > ref->node)

p = &(*p)->rb_right;

else

return ref;

}

// Create the binder_ref

new_ref = kzalloc_preempt_disabled(sizeof(*ref));

new_ref->debug_id = ++binder_last_id;

// Record the owning process

new_ref->proc = proc;

// Record the binder node

new_ref->node = node;

rb_link_node(&new_ref->rb_node_node, parent, p);

rb_insert_color(&new_ref->rb_node_node, &proc->refs_by_node);

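// Compute the handle value of the reference; this is the value returned to the target_proc process

new_ref->desc = (node == binder_context_mgr_node) ? 0 : 1;

// Walk refs_by_desc from the leftmost (smallest) handle upward, incrementing desc, until the tree is exhausted or a larger handle is found.

for (n = rb_first(&proc->refs_by_desc); n != NULL; n = rb_next(n)) {

// n points at the rb_node_desc member; rb_entry recovers the enclosing binder_ref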

ref = rb_entry(n, struct binder_ref, rb_node_desc);

if (ref->desc > new_ref->desc)

break;

new_ref->desc = ref->desc + 1;

}

// Insert new_ref into the proc->refs_by_desc red-black tree

p = &proc->refs_by_desc.rb_node;

while (*p) {

parent = *p;

ref = rb_entry(parent, struct binder_ref, rb_node_desc);

if (new_ref->desc < ref->desc)

p = &(*p)->rb_left;

else if (new_ref->desc > ref->desc)

p = &(*p)->rb_right;

else

BUG();

}

rb_link_node(&new_ref->rb_node_desc, parent, p);

rb_insert_color(&new_ref->rb_node_desc, &proc->refs_by_desc);

if (node) {

hlist_add_head(&new_ref->node_entry, &node->refs);

}

return new_ref;

}

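How handle values are assigned:

- The handle values of the binder_refs recorded in each process's binder_proc start at 1 and increase; the loop above picks the lowest value not already in use.

- In every process, the binder_ref with handle = 0 refers to the service manager.

- binder_refs to the same service's binder_node can have different handle values in different processes.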
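
The descriptor search can be reproduced outside the kernel with an ordered set. This is only a sketch: nextDesc() is a hypothetical helper, and std::set stands in for the refs_by_desc red-black tree, which is likewise iterated in ascending order.

```cpp
#include <cstdint>
#include <set>

// Picks the descriptor for a new reference the way the loop in
// binder_get_ref_for_node() does: start at 0 for the context manager or 1
// otherwise, then walk the existing descriptors in ascending order.
std::uint32_t nextDesc(const std::set<std::uint32_t>& used, bool isContextManager) {
    std::uint32_t desc = isContextManager ? 0 : 1;
    for (std::uint32_t d : used) {
        if (d > desc) break;  // found a gap below d
        desc = d + 1;         // d is taken, try the next value
    }
    return desc;
}
```

With descriptors 0, 1 and 3 in use, the next ordinary reference gets 2, which shows that freed values are reused rather than the counter only growing.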

7 The ServiceManager side

The startup of ServiceManager is not covered in detail here. Once started it loops in binder_loop(), and when a message arrives it calls binder_parse().

7.1 The binder_parse() function

// frameworks/native/cmds/servicemanager/binder.c, line 204

int binder_parse(struct binder_state *bs, struct binder_io *bio,

uintptr_t ptr, size_t size, binder_handler func)

{

int r = 1;

uintptr_t end = ptr + (uintptr_t) size;

while (ptr < end) {

uint32_t cmd = *(uint32_t *) ptr;

ptr += sizeof(uint32_t);

switch(cmd) {

case BR_TRANSACTION: {

struct binder_transaction_data *txn = (struct binder_transaction_data *) ptr;

// *** part of the source omitted ***

binder_dump_txn(txn);

if (func) {

unsigned rdata[256/4];

struct binder_io msg;

struct binder_io reply;

int res;

bio_init(&reply, rdata, sizeof(rdata), 4);

// Build the binder_io from txn

bio_init_from_txn(&msg, txn);

// Handle the Binder transaction

res = func(bs, txn, &msg, &reply);

// Send the reply

binder_send_reply(bs, &reply, txn->data.ptr.buffer, res);

}

ptr += sizeof(*txn);

break;

}

// *** other cases omitted ***

} // end of switch

} // end of while

return r;

}

7.2 The svcmgr_handler() function

// frameworks/native/cmds/servicemanager/service_manager.c, line 244

int svcmgr_handler(struct binder_state *bs,

struct binder_transaction_data *txn,

struct binder_io *msg,

struct binder_io *reply)

{

struct svcinfo *si;

uint16_t *s;

size_t len;

uint32_t handle;

uint32_t strict_policy;

int allow_isolated;

// *** part of the source omitted ***

strict_policy = bio_get_uint32(msg);

s = bio_get_string16(msg, &len);

// *** part of the source omitted ***

switch(txn->code) {

case SVC_MGR_ADD_SERVICE:

s = bio_get_string16(msg, &len);

...

handle = bio_get_ref(msg); // get the handle

allow_isolated = bio_get_uint32(msg) ? 1 : 0;

// Register the service

if (do_add_service(bs, s, len, handle, txn->sender_euid,

allow_isolated, txn->sender_pid))

return -1;

break;

// *** other cases omitted ***

}

bio_put_uint32(reply, 0);

return 0;

}

7.3 The do_add_service() function

// frameworks/native/cmds/servicemanager/service_manager.c, line 194

int do_add_service(struct binder_state *bs,

const uint16_t *s, size_t len,

uint32_t handle, uid_t uid, int allow_isolated,

pid_t spid)

{

struct svcinfo *si;

if (!handle || (len == 0) || (len > 127))

return -1;

// Permission check

if (!svc_can_register(s, len, spid)) {

return -1;

}

// Look up the service

si = find_svc(s, len);

if (si) {

if (si->handle) {

// The service is already registered: release the old one

svcinfo_death(bs, si);

}

si->handle = handle;

} else {

si = malloc(sizeof(*si) + (len + 1) * sizeof(uint16_t));

// Allocation failed: out of memory

if (!si) {

return -1;

}

si->handle = handle;

si->len = len;

// Copy the service name

memcpy(si->name, s, (len + 1) * sizeof(uint16_t));

si->name[len] = '\0';

si->death.func = (void*) svcinfo_death;

si->death.ptr = si;

si->allow_isolated = allow_isolated;

// svclist holds every registered service

si->next = svclist;

svclist = si;

}

// Send BC_ACQUIRE for this handle to the Binder driver through ioctl

binder_acquire(bs, handle);

// Send BC_REQUEST_DEATH_NOTIFICATION to the Binder driver through ioctl; it is used for cleanup, such as freeing memory, when the service dies.

binder_link_to_death(bs, handle, &si->death);

return 0;

}

svcinfo records the service name and its handle.
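
The bookkeeping in do_add_service() amounts to a name-to-handle table. A rough sketch follows, assuming a hypothetical Registry class backed by std::map instead of the singly linked svclist, and std::string instead of UTF-16 names; permission checks and death notifications are left out.

```cpp
#include <cstdint>
#include <map>
#include <string>

// Hypothetical in-memory registry standing in for svclist.
class Registry {
public:
    // Returns false for input that do_add_service() would also reject:
    // a zero handle, an empty name, or a name longer than 127 characters.
    bool add(const std::string& name, std::uint32_t handle) {
        if (handle == 0 || name.empty() || name.size() > 127) return false;
        services_[name] = handle;  // re-registering replaces the old handle
        return true;
    }

    // Returns the handle for name, or 0 when the name is unknown.
    std::uint32_t find(const std::string& name) const {
        const auto it = services_.find(name);
        return it == services_.end() ? 0 : it->second;
    }

private:
    std::map<std::string, std::uint32_t> services_;
};
```

Returning 0 for an unknown name works here because 0 is never a valid handle for a registered service; it is reserved for the service manager itself.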

7.4 The binder_send_reply() function

// frameworks/native/cmds/servicemanager/binder.c, line 170

void binder_send_reply(struct binder_state *bs,

struct binder_io *reply,

binder_uintptr_t buffer_to_free,

int status)

{

struct {

uint32_t cmd_free;

binder_uintptr_t buffer;

uint32_t cmd_reply;

struct binder_transaction_data txn;

} __attribute__((packed)) data;

// The free-buffer command

data.cmd_free = BC_FREE_BUFFER;

data.buffer = buffer_to_free;

// The reply command

data.cmd_reply = BC_REPLY;

data.txn.target.ptr = 0;

data.txn.cookie = 0;

data.txn.code = 0;

if (status) {

// *** part of the source omitted ***

} else {

data.txn.flags = 0;

data.txn.data_size = reply->data - reply->data0;

data.txn.offsets_size = ((char*) reply->offs) - ((char*) reply->offs0);

data.txn.data.ptr.buffer = (uintptr_t)reply->data0;

data.txn.data.ptr.offsets = (uintptr_t)reply->offs0;

}

// Send to the Binder driver

binder_write(bs, &data, sizeof(data));

}

- binder_write() enters the Binder driver and delivers the BC_FREE_BUFFER and BC_REPLY commands to it, which sends the reply to the client.

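8 Summary

The core of service registration (addService) is this: a binder_node is created in the process that hosts the service, and a binder_ref is created in the servicemanager process. The desc of a binder_ref is unique within a process:

- The handle values of the binder_refs recorded in each process's binder_proc start at 1 and increase.

- In every process, the binder_ref with handle = 0 refers to the service manager.

- binder_refs to the same service's binder_node can have different handle values in different processes.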

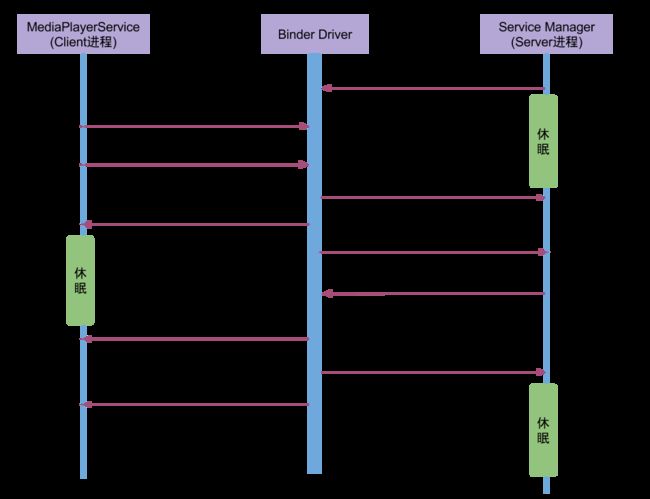

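Registering the media service involves MediaPlayerService (as the client process) and Service Manager (as the server process). The communication flow is as follows:

Step by step:

- 1. The MediaPlayerService process calls ioctl() to send IPC data to the Binder driver. This can be viewed as one binder_transaction (call it BT1) executed by the current thread's binder_thread (call it thread1), so BT1->from_parent = NULL, BT1->from = thread1, and thread1->transaction_stack = BT1. The IPC data contains:

- Binder protocol command: BC_TRANSACTION

- Handle: 0

- RPC code: ADD_SERVICE

- RPC data: "media.player"

- 2. The Binder driver receives the request, generates a BR_TRANSACTION command, and selects the thread that will handle it, namely ServiceManager's binder thread (call it thread2), so BT1->to_parent = NULL and BT1->to_thread = thread2. The whole binder_transaction (call it BT2) is then inserted into the target thread's todo queue.

- 3. Service Manager's thread thread2 receives BT2 and calls the registration function to add "media.player" to the service directory. When registration is done it produces the IPC reply (BC_REPLY), with BT2->from_parent = BT1, BT2->from = thread2, and thread2->transaction_stack = BT2.

- 4. The Binder driver receives the reply and generates a BR_REPLY command, with BT2->to_parent = BT1, BT2->to_thread = thread1, and thread1->transaction_stack = BT2. When MediaPlayerService receives this command it knows registration has completed and the service can be used normally.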

Reference

Android跨进程通信IPC之9——Binder之Framework层C++篇2 (Android IPC, part 9: Binder at the Framework layer, C++, part 2)