原文网址

A few years ago Google made a proposal for a new HTTP compression method, called SDCH (SanDwiCH). The idea behind the method is to create a dictionary of long strings that appear throughout many pages of the same domain (or popular search results). The compression is then simply searching for the appearance of the long strings in a dictionary and replacing them with references to the aforementioned dictionary. Afterwards the output is further compressed with DEFLATE

With the right dictionary for the right page the savings can be spectacular, even 70% smaller than gzip alone. In theory, a whole file can be replaced by a single token.

The drawbacks of the method are twofold: first - the dictionary that is created is fairly large and must be distributed as a separate file, in fact the dictionary is often larger than the individual pages it compresses; second - the dictionary is usually absolutely useless for another set of pages.

For large domains that are visited repeatedly the advantage is huge: at a cost of single dictionary download, all the following page views can be compressed with much higher efficiency. Currently we aware of Google and LinkedIn compressing content with SDCH.

SDCH for millions of domains

Here at CloudFlare our task is to support millions of domains, which have little in common, and creating a single SDCH dictionary is very difficult. Nevertheless better compression is important, because it produces smaller payloads, which result in content being delivered faster to our clients. That is why we set out to find that little something that is common to all the pages and to see if we could compress them further.

Besides SDCH (which is only supported by the Chrome browser), the common compression methods over HTTP are gzip and DEFLATE. Some do not know it but the compression they perform is identical. The two formats differ in the content headers they use, with gzip having slightly larger headers, and the error detection function - gzip uses CRC32, whereas DEFLATE uses Adler32.

Usually the servers opt to compress with gzip, however its cousin DEFLATE supports a neat feature called "Preset Dictionary". This dictionary is not like the dictionary used by SDCH, in fact it is not a real dictionary. To understand how this "dictionary" can be used to our advantage, it is important to understand how the DEFLATE algorithm works.

讲述DEFLATE算法的工作原理 - 分两步:1. LZ77算法 2.哈夫曼编码

The DEFLATE algorithm consists of two stages, first it performs the LZ77 algorithm, where it simply goes over the input, and replaces occurrences of previously encountered strings with (short) "directions" where the same string can be found in the previous input. The directions are a tuple of (length, distance), where distance tells how far back in the input the string was and length tells how many bytes were matched. The minimal length deflate will match is 3 (4 in the highly optimized implementation CloudFlare uses), the maximal length is 258, and the farthest distance back is 32KB.

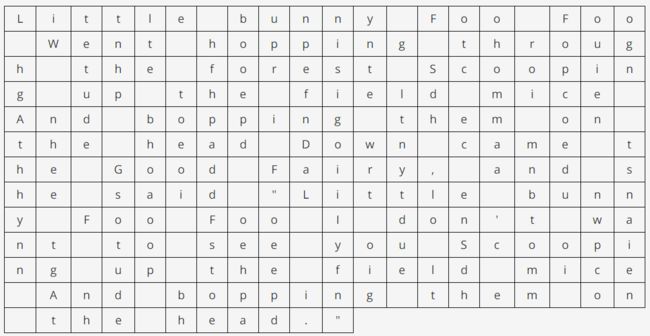

This is an illustration of the LZ77 algorithm:

Input:

Output(length tokens are blue, distance tokens are red):

DEFLATE managed to reduce the original text from 251 characters, to just 152 tokens! Those tokens are later compressed further by Huffman encoding, which is the second stage. How long and devotedly the algorithm searches for a string before it stops is defined by the compression level. For example with compression level 4 the algorithm will be happy to find a match of 16 bytes, whereas with level 9 it will attempt to look for the maximal 258 byte match. If a match was not found the algorithm outputs the input as is, uncompressed. Clearly at the beginning of the input, there can be no references to previous strings, and it is always uncompressed. Similarly the first occurrence of any string in the input will never be compressed. For example almost all HTML files start with the string "

Now, even strings at the very beginning of the input are compressed and strings that only appear once in the file are compressed as well. With the help of the dictionary we are down to 115 tokens. That means roughly 25% better compression rate.

An experiment

We wanted to see if we could make a dictionary that would benefit ALL the HTML pages we serve, and not just a specific domain. To that end we scanned over 20,000 publicly available random HTML pages that passed through our servers on a random sunny day, we took the first 16KB of each page, and used them to prepare two dictionaries, one of 16KB and the other of 32KB. Using a larger dictionary is useless, because then it would be larger than the LZ77 window used by deflate.

To build a dictionary, I made a little go program that takes a set of files and performs "pseudo" LZ77 over them, finding strings that DEFLATE would not compress in the first 16KB of each input file. It then performs a frequency count of the individual strings, and scores them according to their length and frequency. In the end the highest scoring strings are saved into the dictionary file.

构建预置字典的方法:使用一组文件,并对它们执行"伪"LZ77,查找在每个输入文件的第一个16KB中不能被DEFLATE压缩的字符串。然后再执行对每个字符串的频率计数,并根据它们的长度和频率对它们进行评分。最后,选取得分最高的字符串保存为字典文件。

Our benchmark consists of another set of pages obtained in similar manner. The number of benchmarked files was about 19,000 with total size of 563MB.

We can see from the results that the compression we gain for level 4 is almost 5% better than without the dictionary, which is even greater than the compression gained by using level 9 compression, while being substantially faster. For level 9, the gain is greater than 5% without a significant performance hit.

The results highly depend on the dataset used for the dictionary and on the compressed pages. For example when making a dicitonary aimed at a specific web site, the compression rate for that site increased by up to 30%.

For very small pages, such as error pages, with size less than 1KB, a DEFLATE dictionary was able to gain compression rates of up to 50% smaller than DEFLATE alone.

Of course different dictionaries may be used for different file types. In fact we think that it would make sense to create a standard set of dictionaries that could be used accross the web.

The utility to make a dictionary for deflate can be found at

https://github.com/vkrasnov/dictator

The optimized version of zlib used by CloudFlare can be found at

https://github.com/cloudflare/zlib